Quickstart: Text to speech with the Azure OpenAI Service

In this quickstart, you use the Azure OpenAI Service for text to speech with OpenAI voices.

The available voices are: alloy, echo, fable, onyx, nova, and shimmer. For more information, see Azure OpenAI Service reference documentation for text to speech.
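If your application accepts a voice name from user input, a small type guard can reject unsupported values before a request is sent. The following is a minimal sketch that assumes only the six voices listed above. `SUPPORTED_VOICES`, `Voice`, and `isVoice` are hypothetical helpers for illustration, not part of the OpenAI SDK.

```typescript
// The six voice names listed in this quickstart (assumption: no others are accepted).
// SUPPORTED_VOICES, Voice, and isVoice are hypothetical helpers, not SDK exports.
const SUPPORTED_VOICES = ["alloy", "echo", "fable", "onyx", "nova", "shimmer"] as const;

// Union type: "alloy" | "echo" | "fable" | "onyx" | "nova" | "shimmer"
type Voice = (typeof SUPPORTED_VOICES)[number];

// Type guard that narrows an arbitrary string (for example, user input) to Voice.
function isVoice(value: string): value is Voice {
  return (SUPPORTED_VOICES as readonly string[]).includes(value);
}

// Example: validate a voice before putting it in a request body.
const requested = "nova";
if (isVoice(requested)) {
  console.log(`Using voice: ${requested}`);
}
```

Keeping the list as a `const` tuple means the union type and the runtime check come from the same source, so they can't drift apart.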

REST API

Prerequisites

- An Azure subscription - Create one for free.

- An Azure OpenAI resource created in the North Central US or Sweden Central region with the `tts-1` or `tts-1-hd` model deployed. For more information, see Create a resource and deploy a model with Azure OpenAI.

Set up

Retrieve key and endpoint

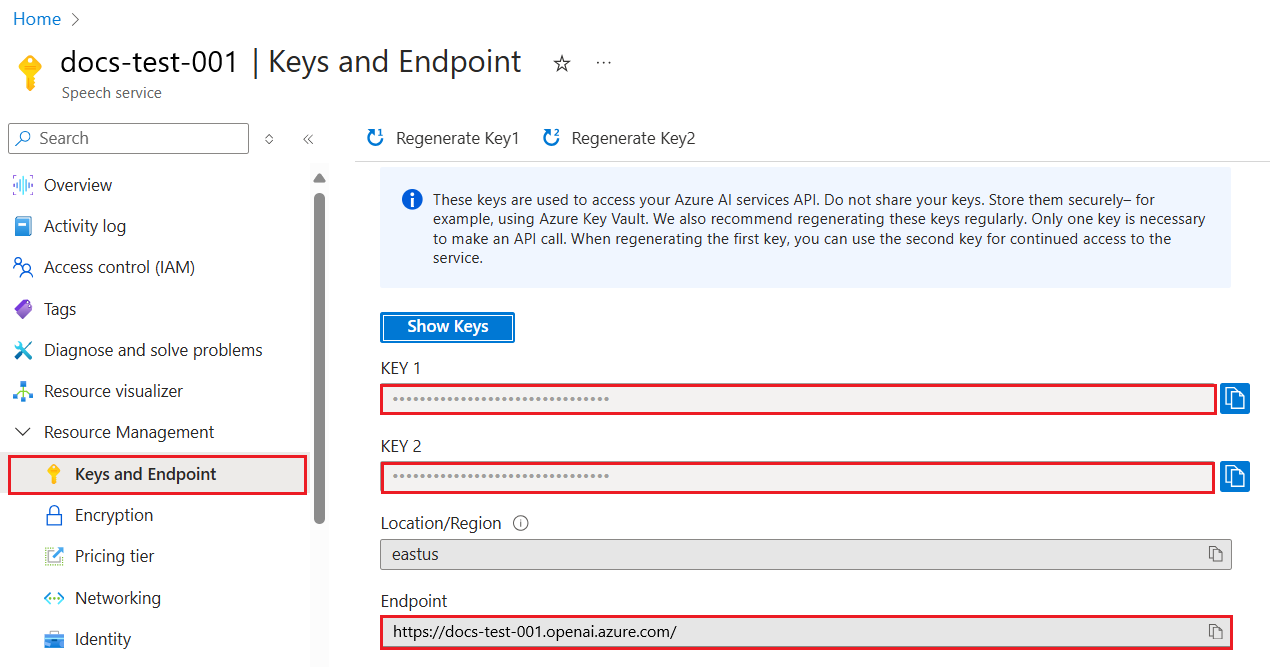

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| `AZURE_OPENAI_ENDPOINT` | The service endpoint can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the endpoint via the Deployments page in the Azure AI Foundry portal. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| `AZURE_OPENAI_API_KEY` | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The endpoint and keys can be found in the Resource Management section. Copy your endpoint and an access key, because you need both to authenticate your API calls. You can use either KEY1 or KEY2. Having two keys lets you rotate and regenerate keys securely without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

Important

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If you use an API key, store it securely in Azure Key Vault. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

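Run the following commands in a Windows command prompt. Because `setx` doesn't change the environment of the console it runs in, open a new console window before you use the variables.

```cmd
setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"
setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"
```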
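If you call the service from your own code, it helps to fail fast when either variable is missing instead of sending a request that contains `undefined`. The following is a minimal sketch under that assumption. `readKeyAuthSettings` is a hypothetical helper; it takes the environment as a parameter so you can pass `process.env` in Node.js or a plain object in tests.

```typescript
// Settings needed for key-based authentication against Azure OpenAI.
interface KeyAuthSettings {
  endpoint: string;
  apiKey: string;
}

// Hypothetical helper: reads the two variables set in the previous step and
// throws a descriptive error if either one is missing or empty.
function readKeyAuthSettings(env: Record<string, string | undefined>): KeyAuthSettings {
  const endpoint = env["AZURE_OPENAI_ENDPOINT"];
  const apiKey = env["AZURE_OPENAI_API_KEY"];
  if (!endpoint) throw new Error("AZURE_OPENAI_ENDPOINT is not set");
  if (!apiKey) throw new Error("AZURE_OPENAI_API_KEY is not set");
  return { endpoint, apiKey };
}
```

In Node.js you would call it as `readKeyAuthSettings(process.env)`. The error messages name the variable but never echo the key itself, so they are safe to log.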

Create a REST request and response

In a bash shell, run the following command. Replace `YourDeploymentName` with the deployment name you chose when you deployed the text to speech model. The deployment name isn't necessarily the same as the model name: entering the model name results in an error unless you chose a deployment name that's identical to the underlying model name. The command reads `AZURE_OPENAI_ENDPOINT` and `AZURE_OPENAI_API_KEY` from the shell's environment.

```bash
curl "$AZURE_OPENAI_ENDPOINT/openai/deployments/YourDeploymentName/audio/speech?api-version=2024-02-15-preview" \
  -H "api-key: $AZURE_OPENAI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "tts-1-hd",
    "input": "I am excited to try text to speech.",
    "voice": "alloy"
  }' \
  --output speech.mp3
```

With an example endpoint, the first line of the command looks like this:

```bash
curl "https://aoai-docs.openai.azure.com/openai/deployments/YourDeploymentName/audio/speech?api-version=2024-02-15-preview" \
```
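The same request can be assembled in code. The following is a minimal sketch, assuming you send it yourself with `fetch`; `buildSpeechRequest` is a hypothetical helper whose URL shape, headers, and body fields mirror the curl command above. It also strips a trailing slash from the endpoint, because the example endpoint `https://docs-test-001.openai.azure.com/` would otherwise produce `//openai` in the path.

```typescript
// Arguments for fetch(): the request URL plus method, headers, and JSON body.
interface SpeechRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Hypothetical helper that mirrors the curl command in this quickstart.
function buildSpeechRequest(
  endpoint: string,       // for example https://docs-test-001.openai.azure.com/
  deploymentName: string, // your deployment name, not necessarily the model name
  apiKey: string,
  input: string,
  voice: string,
  apiVersion = "2024-02-15-preview",
): SpeechRequest {
  // Drop trailing slashes so the path doesn't start with "//openai".
  const base = endpoint.replace(/\/+$/, "");
  const url =
    `${base}/openai/deployments/${encodeURIComponent(deploymentName)}/audio/speech` +
    `?api-version=${encodeURIComponent(apiVersion)}`;
  return {
    url,
    init: {
      method: "POST",
      headers: { "api-key": apiKey, "Content-Type": "application/json" },
      // JSON.stringify handles quoting, so apostrophes in the input are safe here.
      body: JSON.stringify({ model: "tts-1-hd", input, voice }),
    },
  };
}
```

In Node.js 18 or later, which provides a global `fetch`, you could send it with `const res = await fetch(req.url, req.init)` and write `Buffer.from(await res.arrayBuffer())` to `speech.mp3`, which matches what `--output` does in curl.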

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

JavaScript

Prerequisites

- An Azure subscription - Create one for free

- LTS versions of Node.js

- Azure CLI, used for passwordless authentication in a local development environment. Create the necessary context by signing in with the Azure CLI.

- An Azure OpenAI resource created in a supported region (see Region availability). For more information, see Create a resource and deploy a model with Azure OpenAI.

Microsoft Entra ID prerequisites

For the recommended keyless authentication with Microsoft Entra ID, you need to:

- Install the Azure CLI used for keyless authentication with Microsoft Entra ID.

- Assign the `Cognitive Services User` role to your user account. You can assign roles in the Azure portal under Access control (IAM) > Add role assignment.

Set up

Create a new folder `synthesis-quickstart` to contain the application, change into it, and then open Visual Studio Code in that folder:

```shell
mkdir synthesis-quickstart && cd synthesis-quickstart
```

Create the `package.json` file:

```shell
npm init -y
```

Install the OpenAI client library for JavaScript:

```shell
npm install openai
```

For the recommended passwordless authentication, also install the Azure Identity library:

```shell
npm install @azure/identity
```

Retrieve resource information

You need to retrieve the following information to authenticate your application with your Azure OpenAI resource:

| Variable name | Value |
|---|---|
| `AZURE_OPENAI_ENDPOINT` | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. |
| `AZURE_OPENAI_DEPLOYMENT_NAME` | This value corresponds to the custom name you chose for your deployment when you deployed a model. It can be found under Resource Management > Model Deployments in the Azure portal. |
| `OPENAI_API_VERSION` | Learn more about API Versions. You can change the version in code or use an environment variable. |

Learn more about keyless authentication and setting environment variables.

Caution

To use the recommended keyless authentication with the SDK, make sure that the AZURE_OPENAI_API_KEY environment variable isn't set.
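The sample resolves its configuration from these variables, falling back to `tts` for the deployment name and `2024-08-01-preview` for the API version. The following is a minimal sketch of that resolution logic with the caution above turned into an explicit check. `resolveKeylessSettings` is a hypothetical helper; unlike the sample, which falls back to a `"Your endpoint"` placeholder, it throws when the endpoint is missing so the problem surfaces before any request is made.

```typescript
// Values the text to speech sample needs for keyless authentication.
interface KeylessSettings {
  endpoint: string;
  deploymentName: string;
  apiVersion: string;
}

// Hypothetical helper mirroring the sample's `process.env.X || default` lookups.
function resolveKeylessSettings(env: Record<string, string | undefined>): KeylessSettings {
  // The AzureOpenAI client reads AZURE_OPENAI_API_KEY by default, and the
  // caution above asks you to leave it unset when using Microsoft Entra ID.
  if (env["AZURE_OPENAI_API_KEY"]) {
    throw new Error("Unset AZURE_OPENAI_API_KEY to use keyless authentication");
  }
  const endpoint = env["AZURE_OPENAI_ENDPOINT"];
  if (!endpoint) throw new Error("AZURE_OPENAI_ENDPOINT is not set");
  return {
    endpoint,
    deploymentName: env["AZURE_OPENAI_DEPLOYMENT_NAME"] || "tts",
    apiVersion: env["OPENAI_API_VERSION"] || "2024-08-01-preview",
  };
}
```

Using `||` rather than `??` matches the sample: an empty string also falls back to the default.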

Create a speech file

Create the `index.js` file with the following code:

```javascript
// Load the Node.js shims before anything else from the openai package.
require("openai/shims/node");
const { writeFile } = require("fs/promises");
const { AzureOpenAI } = require("openai");
const { DefaultAzureCredential, getBearerTokenProvider } = require("@azure/identity");

// You will need to set these environment variables or edit the following values
const endpoint = process.env.AZURE_OPENAI_ENDPOINT || "Your endpoint";
const speechFilePath = "<path to save the speech file>";

// Required Azure OpenAI deployment name and API version
const deploymentName = process.env.AZURE_OPENAI_DEPLOYMENT_NAME || "tts";
const apiVersion = process.env.OPENAI_API_VERSION || "2024-08-01-preview";

// Keyless authentication with Microsoft Entra ID
const credential = new DefaultAzureCredential();
const scope = "https://cognitiveservices.azure.com/.default";
const azureADTokenProvider = getBearerTokenProvider(credential, scope);

function getClient() {
  return new AzureOpenAI({
    endpoint,
    azureADTokenProvider,
    apiVersion,
    deployment: deploymentName,
  });
}

// Request speech and return the response body as a readable stream.
async function generateAudioStream(client, params) {
  const response = await client.audio.speech.create(params);
  if (response.ok) return response.body;
  throw new Error(`Failed to generate audio stream: ${response.statusText}`);
}

// index.js is a CommonJS module (package.json has no "type": "module"),
// so main is a plain function rather than an ES module export.
async function main() {
  console.log("== Text to Speech Sample ==");

  const client = getClient();
  const streamToRead = await generateAudioStream(client, {
    model: deploymentName,
    voice: "alloy",
    input: "the quick brown chicken jumped over the lazy dogs",
  });

  console.log(`Streaming response to ${speechFilePath}`);
  await writeFile(speechFilePath, streamToRead);
  console.log("Finished streaming");
}

main().catch((err) => {
  console.error("The sample encountered an error:", err);
});
```

Sign in to Azure with the following command:

```shell
az login
```

Run the JavaScript file:

```shell
node index.js
```

TypeScript

Prerequisites

- An Azure subscription - Create one for free

- LTS versions of Node.js

- TypeScript

- Azure CLI, used for passwordless authentication in a local development environment. Create the necessary context by signing in with the Azure CLI.

- An Azure OpenAI resource created in a supported region (see Region availability). For more information, see Create a resource and deploy a model with Azure OpenAI.

Microsoft Entra ID prerequisites

For the recommended keyless authentication with Microsoft Entra ID, you need to:

- Install the Azure CLI used for keyless authentication with Microsoft Entra ID.

- Assign the `Cognitive Services User` role to your user account. You can assign roles in the Azure portal under Access control (IAM) > Add role assignment.

Set up

Create a new folder `synthesis-quickstart` to contain the application, change into it, and then open Visual Studio Code in that folder:

```shell
mkdir synthesis-quickstart && cd synthesis-quickstart
```

Create the `package.json` file:

```shell
npm init -y
```

Update `package.json` to use ECMAScript modules:

```shell
npm pkg set type=module
```

Install the OpenAI client library for JavaScript:

```shell
npm install openai
```

For the recommended passwordless authentication, also install the Azure Identity library:

```shell
npm install @azure/identity
```

Retrieve resource information

You need to retrieve the following information to authenticate your application with your Azure OpenAI resource:

| Variable name | Value |
|---|---|
| `AZURE_OPENAI_ENDPOINT` | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. |
| `AZURE_OPENAI_DEPLOYMENT_NAME` | This value corresponds to the custom name you chose for your deployment when you deployed a model. It can be found under Resource Management > Model Deployments in the Azure portal. |
| `OPENAI_API_VERSION` | Learn more about API Versions. You can change the version in code or use an environment variable. |

Learn more about keyless authentication and setting environment variables.

Caution

To use the recommended keyless authentication with the SDK, make sure that the AZURE_OPENAI_API_KEY environment variable isn't set.

Create a speech file

Create the `index.ts` file with the following code:

```typescript
// Import the Node.js shims before anything else from the openai package.
import "openai/shims/node";
import { writeFile } from "fs/promises";
import { AzureOpenAI } from "openai";
import { DefaultAzureCredential, getBearerTokenProvider } from "@azure/identity";
import type { SpeechCreateParams } from "openai/resources/audio/speech";

// You will need to set these environment variables or edit the following values
const endpoint = process.env.AZURE_OPENAI_ENDPOINT || "Your endpoint";
const speechFilePath = "<path to save the speech file>";

// Required Azure OpenAI deployment name and API version
const deploymentName = process.env.AZURE_OPENAI_DEPLOYMENT_NAME || "tts";
const apiVersion = process.env.OPENAI_API_VERSION || "2024-08-01-preview";

// Keyless authentication with Microsoft Entra ID
const credential = new DefaultAzureCredential();
const scope = "https://cognitiveservices.azure.com/.default";
const azureADTokenProvider = getBearerTokenProvider(credential, scope);

function getClient(): AzureOpenAI {
  return new AzureOpenAI({
    endpoint,
    azureADTokenProvider,
    apiVersion,
    deployment: deploymentName,
  });
}

// Request speech and return the response body as a Node.js readable stream.
async function generateAudioStream(
  client: AzureOpenAI,
  params: SpeechCreateParams
): Promise<NodeJS.ReadableStream> {
  const response = await client.audio.speech.create(params);
  if (response.ok) return response.body;
  throw new Error(`Failed to generate audio stream: ${response.statusText}`);
}

export async function main() {
  console.log("== Text to Speech Sample ==");

  const client = getClient();
  const streamToRead = await generateAudioStream(client, {
    model: deploymentName,
    voice: "alloy",
    input: "the quick brown chicken jumped over the lazy dogs",
  });

  console.log(`Streaming response to ${speechFilePath}`);
  await writeFile(speechFilePath, streamToRead);
  console.log("Finished streaming");
}

main().catch((err) => {
  console.error("The sample encountered an error:", err);
});
```

The `openai/shims/node` import is needed when you run the code in Node.js. It ensures that the response body returned by `client.audio.speech.create` is typed as `NodeJS.ReadableStream`.

Create the `tsconfig.json` file to transpile the TypeScript code, and copy the following configuration for ECMAScript:

```json
{
  "compilerOptions": {
    "module": "NodeNext",
    "target": "ES2022", // Supports top-level await
    "moduleResolution": "NodeNext",
    "skipLibCheck": true, // Avoid type errors from node_modules
    "strict": true // Enable strict type-checking options
  },
  "include": ["*.ts"]
}
```

Transpile from TypeScript to JavaScript:

```shell
tsc
```

Sign in to Azure with the following command:

```shell
az login
```

Run the code with the following command:

```shell
node index.js
```

.NET

Prerequisites

- An Azure subscription. You can create one for free.

- An Azure OpenAI resource with a `tts-1` or `tts-1-hd` model deployed in a supported region. For more information, see Create a resource and deploy a model with Azure OpenAI.

- The .NET 8.0 SDK

Create the .NET app

Create a .NET app by using the `dotnet new` command:

```shell
dotnet new console -n TextToSpeech
```

Change into the directory of the new app:

```shell
cd TextToSpeech
```

Install the `Azure.AI.OpenAI` client library:

```shell
dotnet add package Azure.AI.OpenAI
```

Authenticate and connect to Azure OpenAI

To make requests to your Azure OpenAI service, you need the service endpoint as well as authentication credentials via one of the following options:

- Microsoft Entra ID is the recommended approach for authenticating to Azure services and is more secure than key-based alternatives.

- Access keys allow you to provide a secret key to connect to your resource.
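Whichever option you choose, the credential travels as an HTTP header on each request. The following is a minimal sketch, assuming you call the REST API directly rather than through the client library; the hypothetical `authHeaders` helper maps each option to its header: `api-key` for an access key, and `Authorization: Bearer` for a Microsoft Entra ID access token. Acquiring the token (for example with `DefaultAzureCredential` for the `https://cognitiveservices.azure.com/.default` scope) is left out.

```typescript
// The two authentication options, modeled as a discriminated union.
type AzureOpenAIAuth =
  | { kind: "key"; apiKey: string }
  | { kind: "entra"; accessToken: string };

// Hypothetical helper: returns the header that carries the chosen credential.
function authHeaders(auth: AzureOpenAIAuth): Record<string, string> {
  switch (auth.kind) {
    case "key":
      // Access key from the Keys & Endpoint section (KEY1 or KEY2).
      return { "api-key": auth.apiKey };
    case "entra":
      // Token already acquired for the Cognitive Services scope.
      return { Authorization: `Bearer ${auth.accessToken}` };
  }
}
```

Because the union is exhaustive, TypeScript reports an error if a third option is added to `AzureOpenAIAuth` without a matching `case`.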

Important

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If you use an API key, store it securely in Azure Key Vault. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

Get the Azure OpenAI endpoint

The service endpoint can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the endpoint via the Deployments page in the Azure AI Foundry portal. An example endpoint is: https://docs-test-001.openai.azure.com/.

Authenticate using Microsoft Entra ID

If you choose to use Microsoft Entra ID authentication, you'll need to complete the following:

Add the `Azure.Identity` package:

```shell
dotnet add package Azure.Identity
```

Assign the `Cognitive Services User` role to your user account. You can do this in the Azure portal on your Azure OpenAI resource under Access control (IAM) > Add role assignment.

Sign in to Azure with Visual Studio, or with the Azure CLI by running `az login`.

Authenticate using keys

The access key value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Update the app code

Replace the contents of `Program.cs` with the following code. The code reads your endpoint (and, for key auth, your key) from the `AZURE_OPENAI_ENDPOINT` and `AZURE_OPENAI_API_KEY` environment variables; update the deployment name and file path placeholders with your own values.

```csharp
using Azure;
using Azure.AI.OpenAI;
using Azure.Identity; // Required for passwordless auth
using OpenAI.Audio;

var endpoint = new Uri(
    Environment.GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT")
        ?? throw new ArgumentNullException("AZURE_OPENAI_ENDPOINT"));

var credentials = new DefaultAzureCredential();

// Use this line for key auth instead
// var credentials = new AzureKeyCredential(
//     Environment.GetEnvironmentVariable("AZURE_OPENAI_API_KEY")
//         ?? throw new ArgumentNullException("AZURE_OPENAI_API_KEY"));

var deploymentName = "tts"; // Default deployment name, update with your own if necessary
var speechFilePath = "YOUR_AUDIO_FILE_PATH";

var openAIClient = new AzureOpenAIClient(endpoint, credentials);
var audioClient = openAIClient.GetAudioClient(deploymentName);

// GenerateSpeechAsync takes the text and the voice to use.
var result = await audioClient.GenerateSpeechAsync(
    "the quick brown chicken jumped over the lazy dogs",
    GeneratedSpeechVoice.Alloy);

// C# string interpolation uses $"...{name}...", not "${name}".
Console.WriteLine($"Streaming response to {speechFilePath}");
await File.WriteAllBytesAsync(speechFilePath, result.Value.ToArray());
Console.WriteLine("Finished streaming");
```

Run the application with the `dotnet run` command or the run button at the top of Visual Studio:

```shell
dotnet run
```

The app generates an audio file at the location you specified for the `speechFilePath` variable. Play the file on your device to hear the generated audio.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- Learn more about how to work with text to speech with Azure OpenAI Service in the Azure OpenAI Service reference documentation.

- For more examples, check out the Azure OpenAI Samples GitHub repository.