Quickstart: Create an object detection project with the Custom Vision client library

Get started with the Custom Vision client library for .NET. Follow these steps to install the package and try out the example code for building an object detection model. You'll create a project, add tags, train the project on sample images, and use the project's prediction endpoint URL to programmatically test it. Use this example as a template for building your own image recognition app.

Note

If you want to build and train an object detection model without writing code, see the browser-based guidance instead.

Reference documentation | Library source code (training) (prediction) | Package (NuGet) (training) (prediction) | Samples

Prerequisites

- Azure subscription - Create one for free

- The Visual Studio IDE or current version of .NET Core.

- Once you have your Azure subscription, create a Custom Vision resource in the Azure portal to create a training and prediction resource.

- You can use the free pricing tier (F0) to try the service, and upgrade later to a paid tier for production.

Create environment variables

In this example, you'll write your credentials to environment variables on the local machine running the application.

Go to the Azure portal. If the Custom Vision resources you created in the Prerequisites section deployed successfully, select the Go to Resource button under Next steps. You can find your keys and endpoints in the resources' Keys and Endpoint pages, under Resource Management. You'll need to get the keys for both your training resource and prediction resource, along with the API endpoints.

You can find the prediction resource ID on the prediction resource's Properties tab in the Azure portal, listed as Resource ID.

Tip

You can also use https://www.customvision.ai to get these values. After you sign in, select the Settings icon at the top right. On the Settings page, you can view all the keys, resource IDs, and endpoints.

To set the environment variables, open a console window and follow the instructions for your operating system and development environment.

- To set the VISION_TRAINING_KEY environment variable, replace <your-training-key> with one of the keys for your training resource.

- To set the VISION_TRAINING_ENDPOINT environment variable, replace <your-training-endpoint> with the endpoint for your training resource.

- To set the VISION_PREDICTION_KEY environment variable, replace <your-prediction-key> with one of the keys for your prediction resource.

- To set the VISION_PREDICTION_ENDPOINT environment variable, replace <your-prediction-endpoint> with the endpoint for your prediction resource.

- To set the VISION_PREDICTION_RESOURCE_ID environment variable, replace <your-resource-id> with the resource ID for your prediction resource.

Important

We recommend Microsoft Entra ID authentication with managed identities for Azure resources to avoid storing credentials with your applications that run in the cloud.

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If using API keys, store them securely in Azure Key Vault, rotate the keys regularly, and restrict access to Azure Key Vault using role based access control and network access restrictions. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

setx VISION_TRAINING_KEY <your-training-key>

setx VISION_TRAINING_ENDPOINT <your-training-endpoint>

setx VISION_PREDICTION_KEY <your-prediction-key>

setx VISION_PREDICTION_ENDPOINT <your-prediction-endpoint>

setx VISION_PREDICTION_RESOURCE_ID <your-resource-id>

After you add the environment variables, you might need to restart any running programs that read the environment variables, including the console window.

Setting up

Create a new C# application

Using Visual Studio, create a new .NET Core application.

Install the client library

Once you've created a new project, install the client library by right-clicking on the project solution in the Solution Explorer and selecting Manage NuGet Packages. In the package manager that opens select Browse, check Include prerelease, and search for Microsoft.Azure.CognitiveServices.Vision.CustomVision.Training and Microsoft.Azure.CognitiveServices.Vision.CustomVision.Prediction. Select the latest version and then Install.

Tip

Want to view the whole quickstart code file at once? You can find it on GitHub, which contains the code examples in this quickstart.

From the project directory, open the program.cs file and add the following using directives:

using Microsoft.Azure.CognitiveServices.Vision.CustomVision.Prediction;

using Microsoft.Azure.CognitiveServices.Vision.CustomVision.Training;

using Microsoft.Azure.CognitiveServices.Vision.CustomVision.Training.Models;

using System;

using System.Collections.Generic;

using System.IO;

using System.Linq;

using System.Threading;

In the application's Program class, declare static fields that retrieve your resources' keys and endpoints from environment variables. You'll also declare some basic objects to be used later.

private static string trainingEndpoint = Environment.GetEnvironmentVariable("VISION_TRAINING_ENDPOINT");

private static string trainingKey = Environment.GetEnvironmentVariable("VISION_TRAINING_KEY");

private static string predictionEndpoint = Environment.GetEnvironmentVariable("VISION_PREDICTION_ENDPOINT");

private static string predictionKey = Environment.GetEnvironmentVariable("VISION_PREDICTION_KEY");

private static Iteration iteration;

private static string publishedModelName = "CustomODModel";

In the application's Main method, add calls for the methods used in this quickstart. You will implement these later.

CustomVisionTrainingClient trainingApi = AuthenticateTraining(trainingEndpoint, trainingKey);

CustomVisionPredictionClient predictionApi = AuthenticatePrediction(predictionEndpoint, predictionKey);

Project project = CreateProject(trainingApi);

AddTags(trainingApi, project);

UploadImages(trainingApi, project);

TrainProject(trainingApi, project);

PublishIteration(trainingApi, project);

TestIteration(predictionApi, project);

Authenticate the client

In two new methods, instantiate the training and prediction clients using your endpoints and keys.

private static CustomVisionTrainingClient AuthenticateTraining(string endpoint, string trainingKey)

{

// Create the Api, passing in the training key

CustomVisionTrainingClient trainingApi = new CustomVisionTrainingClient(new Microsoft.Azure.CognitiveServices.Vision.CustomVision.Training.ApiKeyServiceClientCredentials(trainingKey))

{

Endpoint = endpoint

};

return trainingApi;

}

private static CustomVisionPredictionClient AuthenticatePrediction(string endpoint, string predictionKey)

{

// Create a prediction endpoint, passing in the obtained prediction key

CustomVisionPredictionClient predictionApi = new CustomVisionPredictionClient(new Microsoft.Azure.CognitiveServices.Vision.CustomVision.Prediction.ApiKeyServiceClientCredentials(predictionKey))

{

Endpoint = endpoint

};

return predictionApi;

}

Create a new Custom Vision project

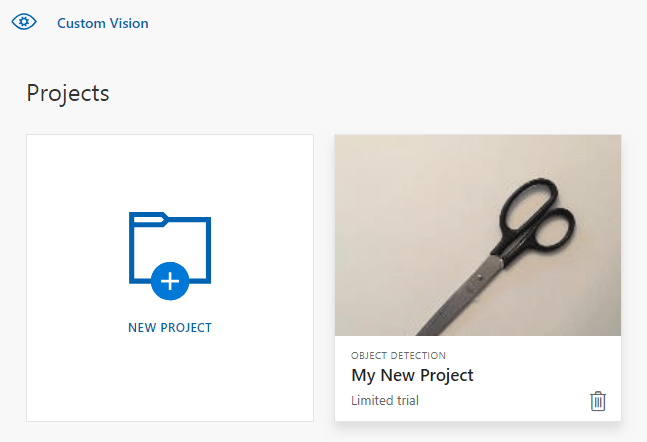

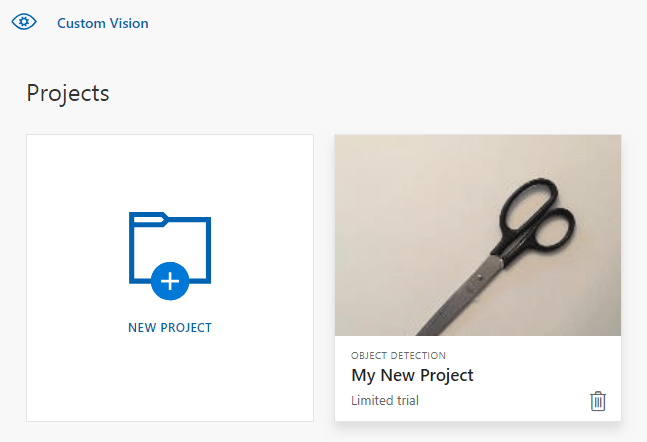

This next method creates an object detection project. The created project will show up on the Custom Vision website. See the CreateProject method to specify other options when you create your project (explained in the Build a detector web portal guide).

private static Project CreateProject(CustomVisionTrainingClient trainingApi)

{

// Find the object detection domain

var domains = trainingApi.GetDomains();

var objDetectionDomain = domains.FirstOrDefault(d => d.Type == "ObjectDetection");

// Create a new project

Console.WriteLine("Creating new project:");

var project = trainingApi.CreateProject("My New Project", null, objDetectionDomain.Id);

return project;

}

Add tags to the project

This method defines the tags that you will train the model on. The tags are stored in static fields so that the image upload method can reference them.

private static Tag forkTag;

private static Tag scissorsTag;

private static void AddTags(CustomVisionTrainingClient trainingApi, Project project)

{

// Make two tags in the new project

forkTag = trainingApi.CreateTag(project.Id, "fork");

scissorsTag = trainingApi.CreateTag(project.Id, "scissors");

}

Upload and tag images

First, download the sample images for this project. Save the contents of the sample Images folder to your local device.

When you tag images in object detection projects, you need to specify the region of each tagged object using normalized coordinates. The following code associates each of the sample images with its tagged region.

private static void UploadImages(CustomVisionTrainingClient trainingApi, Project project)

{

Dictionary<string, double[]> fileToRegionMap = new Dictionary<string, double[]>()

{

// FileName, Left, Top, Width, Height

{"scissors_1", new double[] { 0.4007353, 0.194068655, 0.259803921, 0.6617647 } },

{"scissors_2", new double[] { 0.426470578, 0.185898721, 0.172794119, 0.5539216 } },

{"scissors_3", new double[] { 0.289215684, 0.259428144, 0.403186262, 0.421568632 } },

{"scissors_4", new double[] { 0.343137264, 0.105833367, 0.332107842, 0.8055556 } },

{"scissors_5", new double[] { 0.3125, 0.09766343, 0.435049027, 0.71405226 } },

{"scissors_6", new double[] { 0.379901975, 0.24308826, 0.32107842, 0.5718954 } },

{"scissors_7", new double[] { 0.341911763, 0.20714055, 0.3137255, 0.6356209 } },

{"scissors_8", new double[] { 0.231617644, 0.08459154, 0.504901946, 0.8480392 } },

{"scissors_9", new double[] { 0.170343131, 0.332957536, 0.767156839, 0.403594762 } },

{"scissors_10", new double[] { 0.204656869, 0.120539248, 0.5245098, 0.743464053 } },

{"scissors_11", new double[] { 0.05514706, 0.159754932, 0.799019635, 0.730392158 } },

{"scissors_12", new double[] { 0.265931368, 0.169558853, 0.5061275, 0.606209159 } },

{"scissors_13", new double[] { 0.241421565, 0.184264734, 0.448529422, 0.6830065 } },

{"scissors_14", new double[] { 0.05759804, 0.05027781, 0.75, 0.882352948 } },

{"scissors_15", new double[] { 0.191176474, 0.169558853, 0.6936275, 0.6748366 } },

{"scissors_16", new double[] { 0.1004902, 0.279036, 0.6911765, 0.477124184 } },

{"scissors_17", new double[] { 0.2720588, 0.131977156, 0.4987745, 0.6911765 } },

{"scissors_18", new double[] { 0.180147052, 0.112369314, 0.6262255, 0.6666667 } },

{"scissors_19", new double[] { 0.333333343, 0.0274019931, 0.443627447, 0.852941155 } },

{"scissors_20", new double[] { 0.158088237, 0.04047389, 0.6691176, 0.843137264 } },

{"fork_1", new double[] { 0.145833328, 0.3509314, 0.5894608, 0.238562092 } },

{"fork_2", new double[] { 0.294117659, 0.216944471, 0.534313738, 0.5980392 } },

{"fork_3", new double[] { 0.09191177, 0.0682516545, 0.757352948, 0.6143791 } },

{"fork_4", new double[] { 0.254901975, 0.185898721, 0.5232843, 0.594771266 } },

{"fork_5", new double[] { 0.2365196, 0.128709182, 0.5845588, 0.71405226 } },

{"fork_6", new double[] { 0.115196079, 0.133611143, 0.676470637, 0.6993464 } },

{"fork_7", new double[] { 0.164215669, 0.31008172, 0.767156839, 0.410130739 } },

{"fork_8", new double[] { 0.118872553, 0.318251669, 0.817401946, 0.225490168 } },

{"fork_9", new double[] { 0.18259804, 0.2136765, 0.6335784, 0.643790841 } },

{"fork_10", new double[] { 0.05269608, 0.282303959, 0.8088235, 0.452614367 } },

{"fork_11", new double[] { 0.05759804, 0.0894935, 0.9007353, 0.3251634 } },

{"fork_12", new double[] { 0.3345588, 0.07315363, 0.375, 0.9150327 } },

{"fork_13", new double[] { 0.269607842, 0.194068655, 0.4093137, 0.6732026 } },

{"fork_14", new double[] { 0.143382356, 0.218578458, 0.7977941, 0.295751631 } },

{"fork_15", new double[] { 0.19240196, 0.0633497, 0.5710784, 0.8398692 } },

{"fork_16", new double[] { 0.140931368, 0.480016381, 0.6838235, 0.240196079 } },

{"fork_17", new double[] { 0.305147052, 0.2512582, 0.4791667, 0.5408496 } },

{"fork_18", new double[] { 0.234068632, 0.445702642, 0.6127451, 0.344771236 } },

{"fork_19", new double[] { 0.219362751, 0.141781077, 0.5919118, 0.6683006 } },

{"fork_20", new double[] { 0.180147052, 0.239820287, 0.6887255, 0.235294119 } }

};

Note

For your own projects, if you don't have a click-and-drag utility to mark the coordinates of regions, you can use the web UI at the Custom Vision website. In this example, the coordinates are already provided.

Then, this map of associations is used to upload each sample image with its region coordinates. You can upload up to 64 images in a single batch. You might need to change the imagePath value to point to the correct folder locations.

// Add all images for fork

var imagePath = Path.Combine("Images", "fork");

var imageFileEntries = new List<ImageFileCreateEntry>();

foreach (var fileName in Directory.EnumerateFiles(imagePath))

{

var region = fileToRegionMap[Path.GetFileNameWithoutExtension(fileName)];

imageFileEntries.Add(new ImageFileCreateEntry(fileName, File.ReadAllBytes(fileName), null, new List<Region>(new Region[] { new Region(forkTag.Id, region[0], region[1], region[2], region[3]) })));

}

trainingApi.CreateImagesFromFiles(project.Id, new ImageFileCreateBatch(imageFileEntries));

// Add all images for scissors

imagePath = Path.Combine("Images", "scissors");

imageFileEntries = new List<ImageFileCreateEntry>();

foreach (var fileName in Directory.EnumerateFiles(imagePath))

{

var region = fileToRegionMap[Path.GetFileNameWithoutExtension(fileName)];

imageFileEntries.Add(new ImageFileCreateEntry(fileName, File.ReadAllBytes(fileName), null, new List<Region>(new Region[] { new Region(scissorsTag.Id, region[0], region[1], region[2], region[3]) })));

}

trainingApi.CreateImagesFromFiles(project.Id, new ImageFileCreateBatch(imageFileEntries));

}

At this point, you've uploaded all the sample images and tagged each one (fork or scissors) with an associated region rectangle in normalized coordinates.

Train the project

This method creates the first training iteration in the project. It queries the service until training is completed.

private static void TrainProject(CustomVisionTrainingClient trainingApi, Project project)

{

// Now that there are images with tags, start training the project

Console.WriteLine("\tTraining");

iteration = trainingApi.TrainProject(project.Id);

// The returned iteration will be in progress, and can be queried periodically to see when it has completed

while (iteration.Status == "Training")

{

Thread.Sleep(1000);

// Re-query the iteration to get its updated status

iteration = trainingApi.GetIteration(project.Id, iteration.Id);

}

}

Tip

Train with selected tags

You can optionally train on only a subset of your applied tags. You might want to do this if you haven't applied enough of certain tags yet, but you do have enough of others. In the TrainProject call, use the trainingParameters parameter. Construct a TrainingParameters and set its SelectedTags property to a list of IDs of the tags you want to use. The model will train to only recognize the tags on that list.

Publish the current iteration

This method makes the current iteration of the model available for querying. You can use the model name as a reference to send prediction requests. The code reads the prediction resource ID from the VISION_PREDICTION_RESOURCE_ID environment variable that you set earlier. You can find this value on the prediction resource's Properties tab in the Azure portal, listed as Resource ID.

private static void PublishIteration(CustomVisionTrainingClient trainingApi, Project project)

{

// The iteration is now trained. Publish it to the prediction endpoint.

var predictionResourceId = Environment.GetEnvironmentVariable("VISION_PREDICTION_RESOURCE_ID");

trainingApi.PublishIteration(project.Id, iteration.Id, publishedModelName, predictionResourceId);

Console.WriteLine("Done!\n");

}

Test the prediction endpoint

This method loads the test image, queries the model endpoint, and outputs prediction data to the console.

private static void TestIteration(CustomVisionPredictionClient predictionApi, Project project)

{

// Make a prediction against the new project

Console.WriteLine("Making a prediction:");

var imageFile = Path.Combine("Images", "test", "test_image.jpg");

using (var stream = File.OpenRead(imageFile))

{

var result = predictionApi.DetectImage(project.Id, publishedModelName, stream);

// Loop over each prediction and write out the results

foreach (var c in result.Predictions)

{

Console.WriteLine($"\t{c.TagName}: {c.Probability:P1} [ {c.BoundingBox.Left}, {c.BoundingBox.Top}, {c.BoundingBox.Width}, {c.BoundingBox.Height} ]");

}

}

Console.ReadKey();

}

Run the application

Run the application by clicking the Debug button at the top of the IDE window.

As the application runs, it should open a console window and write the following output:

Creating new project:

Training

Done!

Making a prediction:

fork: 98.2% [ 0.111609578, 0.184719115, 0.6607002, 0.6637112 ]

scissors: 1.2% [ 0.112389535, 0.119195729, 0.658031344, 0.7023591 ]

You can then verify that the test image (found in Images/Test/) is tagged appropriately and that the region of detection is correct. At this point, you can press any key to exit the application.

Clean up resources

If you wish to implement your own object detection project (or try an image classification project instead), you might want to delete the fork/scissors detection project from this example. A free subscription allows for two Custom Vision projects.

On the Custom Vision website, navigate to Projects and select the trash can under My New Project.

Next steps

Now you've done every step of the object detection process in code. This sample executes a single training iteration, but often you'll need to train and test your model multiple times in order to make it more accurate. The following guide deals with image classification, but its principles are similar to object detection.

- What is Custom Vision?

- The source code for this sample can be found on GitHub

- SDK reference documentation

This guide provides instructions and sample code to help you get started using the Custom Vision client library for Go to build an object detection model. You'll create a project, add tags, train the project, and use the project's prediction endpoint URL to programmatically test it. Use this example as a template for building your own image recognition app.

Note

If you want to build and train an object detection model without writing code, see the browser-based guidance instead.

Reference documentation (training) (prediction)

Prerequisites

- Azure subscription - Create one for free

- Go 1.8+

- Once you have your Azure subscription, create a Custom Vision resource in the Azure portal to create a training and prediction resource.

- You can use the free pricing tier (F0) to try the service, and upgrade later to a paid tier for production.

Create environment variables

In this example, you'll write your credentials to environment variables on the local machine running the application.

Go to the Azure portal. If the Custom Vision resources you created in the Prerequisites section deployed successfully, select the Go to Resource button under Next steps. You can find your keys and endpoints in the resources' Keys and Endpoint pages, under Resource Management. You'll need to get the keys for both your training resource and prediction resource, along with the API endpoints.

You can find the prediction resource ID on the prediction resource's Properties tab in the Azure portal, listed as Resource ID.

Tip

You can also use https://www.customvision.ai to get these values. After you sign in, select the Settings icon at the top right. On the Settings page, you can view all the keys, resource IDs, and endpoints.

To set the environment variables, open a console window and follow the instructions for your operating system and development environment.

- To set the VISION_TRAINING_KEY environment variable, replace <your-training-key> with one of the keys for your training resource.

- To set the VISION_TRAINING_ENDPOINT environment variable, replace <your-training-endpoint> with the endpoint for your training resource.

- To set the VISION_PREDICTION_KEY environment variable, replace <your-prediction-key> with one of the keys for your prediction resource.

- To set the VISION_PREDICTION_ENDPOINT environment variable, replace <your-prediction-endpoint> with the endpoint for your prediction resource.

- To set the VISION_PREDICTION_RESOURCE_ID environment variable, replace <your-resource-id> with the resource ID for your prediction resource.

Important

We recommend Microsoft Entra ID authentication with managed identities for Azure resources to avoid storing credentials with your applications that run in the cloud.

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If using API keys, store them securely in Azure Key Vault, rotate the keys regularly, and restrict access to Azure Key Vault using role based access control and network access restrictions. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

setx VISION_TRAINING_KEY <your-training-key>

setx VISION_TRAINING_ENDPOINT <your-training-endpoint>

setx VISION_PREDICTION_KEY <your-prediction-key>

setx VISION_PREDICTION_ENDPOINT <your-prediction-endpoint>

setx VISION_PREDICTION_RESOURCE_ID <your-resource-id>

After you add the environment variables, you might need to restart any running programs that read the environment variables, including the console window.
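Before running the sample, it can help to confirm that all five variables are visible to your Go process. The following is a minimal sketch of such a check, not part of the official sample; the helper name missingVars is hypothetical, and it relies only on os.LookupEnv from the standard library.

```go
package main

import (
	"fmt"
	"os"
)

// requiredVars lists the environment variables that this quickstart sets.
var requiredVars = []string{
	"VISION_TRAINING_KEY",
	"VISION_TRAINING_ENDPOINT",
	"VISION_PREDICTION_KEY",
	"VISION_PREDICTION_ENDPOINT",
	"VISION_PREDICTION_RESOURCE_ID",
}

// missingVars (hypothetical helper) returns every name for which lookup
// reports no value or an empty value. lookup has the same shape as
// os.LookupEnv, so a map-backed function can stand in for it in tests.
func missingVars(names []string, lookup func(string) (string, bool)) []string {
	var missing []string
	for _, name := range names {
		if value, ok := lookup(name); !ok || value == "" {
			missing = append(missing, name)
		}
	}
	return missing
}

func main() {
	if missing := missingVars(requiredVars, os.LookupEnv); len(missing) > 0 {
		fmt.Println("Missing environment variables:", missing)
		return
	}
	fmt.Println("All Custom Vision environment variables are set.")
}
```

If a variable is reported as missing right after you ran setx, open a new console window and run the check again.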

Setting up

Install the Custom Vision client library

To write an image analysis app with Custom Vision for Go, you'll need the Custom Vision service client library. Run the following command in PowerShell:

go get -u github.com/Azure/azure-sdk-for-go/...

or if you use dep, within your repo run:

dep ensure -add github.com/Azure/azure-sdk-for-go

Get the sample images

This example uses the images from the Azure AI services Python SDK Samples repository on GitHub. Clone or download this repository to your development environment. Remember its folder location for a later step.

Create the Custom Vision project

Create a new file called sample.go in your preferred project directory, and open it in your preferred code editor.

Add the following code to your script to create a new Custom Vision service project.

See the CreateProject method to specify other options when you create your project (explained in the Build a detector web portal guide).

package main

import (

"bytes"

"context"

"fmt"

"io/ioutil"

"log"

"os"

"path"

"time"

"github.com/Azure/azure-sdk-for-go/services/cognitiveservices/v3.0/customvision/training"

"github.com/Azure/azure-sdk-for-go/services/cognitiveservices/v3.0/customvision/prediction"

)

// Retrieve environment variables:

var (

training_key string = os.Getenv("VISION_TRAINING_KEY")

prediction_key string = os.Getenv("VISION_PREDICTION_KEY")

prediction_resource_id = os.Getenv("VISION_PREDICTION_RESOURCE_ID")

// The training endpoint from the Create environment variables section

endpoint string = os.Getenv("VISION_TRAINING_ENDPOINT")

project_name string = "Go Sample OD Project"

iteration_publish_name = "detectModel"

sampleDataDirectory = "<path to sample images>"

)

func main() {

ctx := context.Background()

trainer := training.New(training_key, endpoint)

// Find the General object detection domain

var objectDetectDomain training.Domain

domains, err := trainer.GetDomains(ctx)

if err != nil {

log.Fatal(err)

}

for _, domain := range *domains.Value {

if domain.Type == "ObjectDetection" && *domain.Name == "General" {

objectDetectDomain = domain

break

}

}

fmt.Println("Creating project...")

project, err := trainer.CreateProject(ctx, project_name, "", objectDetectDomain.ID, "")

if err != nil {

log.Fatal(err)

}

Create tags in the project

To create tags in your project, add the following code to the end of sample.go:

// Make two tags in the new project

forkTag, _ := trainer.CreateTag(ctx, *project.ID, "fork", "A fork", string(training.Regular))

scissorsTag, _ := trainer.CreateTag(ctx, *project.ID, "scissors", "Pair of scissors", string(training.Regular))

Upload and tag images

When you tag images in object detection projects, you need to specify the region of each tagged object using normalized coordinates.

Note

If you don't have a click-and-drag utility to mark the coordinates of regions, you can use the web UI at Customvision.ai. In this example, the coordinates are already provided.
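Normalized coordinates express each value as a fraction of the image size rather than in pixels, so all four numbers fall between 0 and 1. If your own annotations are in pixels, a conversion along the following lines may help. This is a minimal sketch; the helper name normalizeRegion and the sample dimensions are hypothetical and not part of the Custom Vision SDK.

```go
package main

import (
	"errors"
	"fmt"
)

// normalizeRegion (hypothetical helper) converts a pixel-space box into
// [left, top, width, height] fractions of the image dimensions, the same
// order used by the region tables in this quickstart.
func normalizeRegion(x, y, w, h, imgW, imgH int) ([4]float64, error) {
	if imgW <= 0 || imgH <= 0 {
		return [4]float64{}, errors.New("image dimensions must be positive")
	}
	if x < 0 || y < 0 || w <= 0 || h <= 0 || x+w > imgW || y+h > imgH {
		return [4]float64{}, errors.New("box must lie inside the image")
	}
	return [4]float64{
		float64(x) / float64(imgW), // left
		float64(y) / float64(imgH), // top
		float64(w) / float64(imgW), // width
		float64(h) / float64(imgH), // height
	}, nil
}

func main() {
	// Assumed example: a 200x300 pixel box at (100, 50) in an 800x600 image.
	region, err := normalizeRegion(100, 50, 200, 300, 800, 600)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(region)
}
```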

To add the images, tags, and regions to the project, insert the following code after the tag creation. Note that in this tutorial the regions are hard-coded inline. The regions specify the bounding box in normalized coordinates, and the coordinates are given in the order: left, top, width, height.

forkImageRegions := map[string][4]float64{

"fork_1.jpg": [4]float64{ 0.145833328, 0.3509314, 0.5894608, 0.238562092 },

"fork_2.jpg": [4]float64{ 0.294117659, 0.216944471, 0.534313738, 0.5980392 },

"fork_3.jpg": [4]float64{ 0.09191177, 0.0682516545, 0.757352948, 0.6143791 },

"fork_4.jpg": [4]float64{ 0.254901975, 0.185898721, 0.5232843, 0.594771266 },

"fork_5.jpg": [4]float64{ 0.2365196, 0.128709182, 0.5845588, 0.71405226 },

"fork_6.jpg": [4]float64{ 0.115196079, 0.133611143, 0.676470637, 0.6993464 },

"fork_7.jpg": [4]float64{ 0.164215669, 0.31008172, 0.767156839, 0.410130739 },

"fork_8.jpg": [4]float64{ 0.118872553, 0.318251669, 0.817401946, 0.225490168 },

"fork_9.jpg": [4]float64{ 0.18259804, 0.2136765, 0.6335784, 0.643790841 },

"fork_10.jpg": [4]float64{ 0.05269608, 0.282303959, 0.8088235, 0.452614367 },

"fork_11.jpg": [4]float64{ 0.05759804, 0.0894935, 0.9007353, 0.3251634 },

"fork_12.jpg": [4]float64{ 0.3345588, 0.07315363, 0.375, 0.9150327 },

"fork_13.jpg": [4]float64{ 0.269607842, 0.194068655, 0.4093137, 0.6732026 },

"fork_14.jpg": [4]float64{ 0.143382356, 0.218578458, 0.7977941, 0.295751631 },

"fork_15.jpg": [4]float64{ 0.19240196, 0.0633497, 0.5710784, 0.8398692 },

"fork_16.jpg": [4]float64{ 0.140931368, 0.480016381, 0.6838235, 0.240196079 },

"fork_17.jpg": [4]float64{ 0.305147052, 0.2512582, 0.4791667, 0.5408496 },

"fork_18.jpg": [4]float64{ 0.234068632, 0.445702642, 0.6127451, 0.344771236 },

"fork_19.jpg": [4]float64{ 0.219362751, 0.141781077, 0.5919118, 0.6683006 },

"fork_20.jpg": [4]float64{ 0.180147052, 0.239820287, 0.6887255, 0.235294119 },

}

scissorsImageRegions := map[string][4]float64{

"scissors_1.jpg": [4]float64{ 0.4007353, 0.194068655, 0.259803921, 0.6617647 },

"scissors_2.jpg": [4]float64{ 0.426470578, 0.185898721, 0.172794119, 0.5539216 },

"scissors_3.jpg": [4]float64{ 0.289215684, 0.259428144, 0.403186262, 0.421568632 },

"scissors_4.jpg": [4]float64{ 0.343137264, 0.105833367, 0.332107842, 0.8055556 },

"scissors_5.jpg": [4]float64{ 0.3125, 0.09766343, 0.435049027, 0.71405226 },

"scissors_6.jpg": [4]float64{ 0.379901975, 0.24308826, 0.32107842, 0.5718954 },

"scissors_7.jpg": [4]float64{ 0.341911763, 0.20714055, 0.3137255, 0.6356209 },

"scissors_8.jpg": [4]float64{ 0.231617644, 0.08459154, 0.504901946, 0.8480392 },

"scissors_9.jpg": [4]float64{ 0.170343131, 0.332957536, 0.767156839, 0.403594762 },

"scissors_10.jpg": [4]float64{ 0.204656869, 0.120539248, 0.5245098, 0.743464053 },

"scissors_11.jpg": [4]float64{ 0.05514706, 0.159754932, 0.799019635, 0.730392158 },

"scissors_12.jpg": [4]float64{ 0.265931368, 0.169558853, 0.5061275, 0.606209159 },

"scissors_13.jpg": [4]float64{ 0.241421565, 0.184264734, 0.448529422, 0.6830065 },

"scissors_14.jpg": [4]float64{ 0.05759804, 0.05027781, 0.75, 0.882352948 },

"scissors_15.jpg": [4]float64{ 0.191176474, 0.169558853, 0.6936275, 0.6748366 },

"scissors_16.jpg": [4]float64{ 0.1004902, 0.279036, 0.6911765, 0.477124184 },

"scissors_17.jpg": [4]float64{ 0.2720588, 0.131977156, 0.4987745, 0.6911765 },

"scissors_18.jpg": [4]float64{ 0.180147052, 0.112369314, 0.6262255, 0.6666667 },

"scissors_19.jpg": [4]float64{ 0.333333343, 0.0274019931, 0.443627447, 0.852941155 },

"scissors_20.jpg": [4]float64{ 0.158088237, 0.04047389, 0.6691176, 0.843137264 },

}

Then, use this map of associations to upload each sample image with its region coordinates (you can upload up to 64 images in a single batch). Add the following code.

Note

You'll need to change the path to the images based on where you downloaded the sample images repository earlier.

// Go through the data tables above and create the images

fmt.Println("Adding images...")

var fork_images []training.ImageFileCreateEntry

for file, region := range forkImageRegions {

// Copy the loop variables so each entry keeps pointers to its own values

file, region := file, region

imageFile, _ := ioutil.ReadFile(path.Join(sampleDataDirectory, "fork", file))

imageRegion := training.Region{

TagID: forkTag.ID,

Left: &region[0],

Top: &region[1],

Width: &region[2],

Height: &region[3],

}

fork_images = append(fork_images, training.ImageFileCreateEntry{

Name: &file,

Contents: &imageFile,

Regions: &[]training.Region{imageRegion},

})

}

fork_batch, _ := trainer.CreateImagesFromFiles(ctx, *project.ID, training.ImageFileCreateBatch{

Images: &fork_images,

})

if !*fork_batch.IsBatchSuccessful {

fmt.Println("Batch upload failed.")

}

var scissor_images []training.ImageFileCreateEntry

for file, region := range scissorsImageRegions {

// Copy the loop variables so each entry keeps pointers to its own values

file, region := file, region

imageFile, _ := ioutil.ReadFile(path.Join(sampleDataDirectory, "scissors", file))

imageRegion := training.Region{

TagID: scissorsTag.ID,

Left: &region[0],

Top: &region[1],

Width: &region[2],

Height: &region[3],

}

scissor_images = append(scissor_images, training.ImageFileCreateEntry{

Name: &file,

Contents: &imageFile,

Regions: &[]training.Region{imageRegion},

})

}

scissor_batch, _ := trainer.CreateImagesFromFiles(ctx, *project.ID, training.ImageFileCreateBatch{

Images: &scissor_images,

})

if !*scissor_batch.IsBatchSuccessful {

fmt.Println("Batch upload failed.")

}
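Each sample set has only 20 images, so one batch per tag is enough here. For a larger dataset of your own, you would need to split the entries into groups of at most 64 before calling CreateImagesFromFiles. The following is a minimal sketch of that splitting step; the helper name batchRanges is hypothetical, and it returns index ranges so that it works with a slice of any element type.

```go
package main

import "fmt"

// maxBatchSize is the per-batch image limit stated in this quickstart.
const maxBatchSize = 64

// batchRanges (hypothetical helper) returns [start, end) index pairs that
// cover total items in consecutive groups of at most size items.
func batchRanges(total, size int) [][2]int {
	if total <= 0 || size <= 0 {
		return nil
	}
	var ranges [][2]int
	for start := 0; start < total; start += size {
		end := start + size
		if end > total {
			end = total
		}
		ranges = append(ranges, [2]int{start, end})
	}
	return ranges
}

func main() {
	// Assumed example: 150 image entries waiting to be uploaded.
	for _, r := range batchRanges(150, maxBatchSize) {
		fmt.Printf("upload entries[%d:%d]\n", r[0], r[1])
	}
}
```

In the upload code, each pair would select a sub-slice such as fork_images[r[0]:r[1]] to pass as the Images field of one batch.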

Train and publish the project

This code creates the first iteration of the prediction model and then publishes that iteration to the prediction endpoint. The name given to the published iteration can be used to send prediction requests. An iteration is not available in the prediction endpoint until it's published.

iteration, _ := trainer.TrainProject(ctx, *project.ID)

fmt.Println("Training status:", *iteration.Status)

for {

if *iteration.Status != "Training" {

break

}

time.Sleep(5 * time.Second)

iteration, _ = trainer.GetIteration(ctx, *project.ID, *iteration.ID)

fmt.Println("Training status:", *iteration.Status)

}

trainer.PublishIteration(ctx, *project.ID, *iteration.ID, iteration_publish_name, prediction_resource_id)

Use the prediction endpoint

To send an image to the prediction endpoint and retrieve the prediction, add the following code to the end of the file:

fmt.Println("Predicting...")

predictor := prediction.New(prediction_key, os.Getenv("VISION_PREDICTION_ENDPOINT"))

testImageData, _ := ioutil.ReadFile(path.Join(sampleDataDirectory, "Test", "test_od_image.jpg"))

results, _ := predictor.DetectImage(ctx, *project.ID, iteration_publish_name, ioutil.NopCloser(bytes.NewReader(testImageData)), "")

for _, prediction := range *results.Predictions {

boundingBox := *prediction.BoundingBox

fmt.Printf("\t%s: %.2f%% (%.2f, %.2f, %.2f, %.2f)",

*prediction.TagName,

*prediction.Probability * 100,

*boundingBox.Left,

*boundingBox.Top,

*boundingBox.Width,

*boundingBox.Height)

fmt.Println("")

}

}
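The service returns every candidate detection, including ones with very low probability, so an application will often keep only the results above some confidence threshold. The following is a minimal sketch of that filtering step. The detection type, the filterByProbability helper, and the 0.5 threshold are all hypothetical stand-ins rather than SDK types, and the sample values are illustrative.

```go
package main

import "fmt"

// detection is a hypothetical, simplified stand-in for one prediction result.
type detection struct {
	Tag         string
	Probability float64
}

// filterByProbability (hypothetical helper) keeps the detections whose
// probability is at least threshold, preserving their original order.
func filterByProbability(ds []detection, threshold float64) []detection {
	var kept []detection
	for _, d := range ds {
		if d.Probability >= threshold {
			kept = append(kept, d)
		}
	}
	return kept
}

func main() {
	// Illustrative values only.
	results := []detection{
		{Tag: "fork", Probability: 0.982},
		{Tag: "scissors", Probability: 0.012},
	}
	for _, d := range filterByProbability(results, 0.5) {
		fmt.Printf("%s: %.1f%%\n", d.Tag, d.Probability*100)
	}
}
```

A suitable threshold depends on your model and on how costly false positives are for your application.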

Run the application

Run sample.go.

go run sample.go

The output of the application should appear in the console. You can then verify that the test image (found in samples/vision/images/Test) is tagged appropriately and that the region of detection is correct.

Clean up resources

If you wish to implement your own object detection project (or try an image classification project instead), you might want to delete the fork/scissors detection project from this example. A free subscription allows for two Custom Vision projects.

On the Custom Vision website, navigate to Projects and select the trash can under Go Sample OD Project.

Next steps

Now you've done every step of the object detection process in code. This sample executes a single training iteration, but often you'll need to train and test your model multiple times in order to make it more accurate. The following guide deals with image classification, but its principles are similar to object detection.

Get started using the Custom Vision client library for Java to build an object detection model. Follow these steps to install the package and try out the example code for basic tasks. Use this example as a template for building your own image recognition app.

Note

If you want to build and train an object detection model without writing code, see the browser-based guidance instead.

Reference documentation | Library source code (training) (prediction) | Artifact (Maven) (training) (prediction) | Samples

Prerequisites

- An Azure subscription - Create one for free

- The current version of the Java Development Kit (JDK)

- The Gradle build tool, or another dependency manager.

- Once you have your Azure subscription, create a Custom Vision resource in the Azure portal to create a training and prediction resource.

- You can use the free pricing tier (F0) to try the service, and upgrade later to a paid tier for production.

Create environment variables

In this example, you'll write your credentials to environment variables on the local machine running the application.

Go to the Azure portal. If the Custom Vision resources you created in the Prerequisites section deployed successfully, select the Go to Resource button under Next steps. You can find your keys and endpoints in the resources' Keys and Endpoint pages, under Resource Management. You'll need to get the keys for both your training resource and prediction resource, along with the API endpoints.

You can find the prediction resource ID on the prediction resource's Properties tab in the Azure portal, listed as Resource ID.

Tip

You can also use https://www.customvision.ai to get these values. After you sign in, select the Settings icon at the top right. On the Settings page, you can view all the keys, resource IDs, and endpoints.

To set the environment variables, open a console window and follow the instructions for your operating system and development environment.

- To set the VISION_TRAINING_KEY environment variable, replace <your-training-key> with one of the keys for your training resource.

- To set the VISION_TRAINING_ENDPOINT environment variable, replace <your-training-endpoint> with the endpoint for your training resource.

- To set the VISION_PREDICTION_KEY environment variable, replace <your-prediction-key> with one of the keys for your prediction resource.

- To set the VISION_PREDICTION_ENDPOINT environment variable, replace <your-prediction-endpoint> with the endpoint for your prediction resource.

- To set the VISION_PREDICTION_RESOURCE_ID environment variable, replace <your-resource-id> with the resource ID for your prediction resource.

Important

We recommend Microsoft Entra ID authentication with managed identities for Azure resources to avoid storing credentials with your applications that run in the cloud.

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If using API keys, store them securely in Azure Key Vault, rotate the keys regularly, and restrict access to Azure Key Vault using role based access control and network access restrictions. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

setx VISION_TRAINING_KEY <your-training-key>

setx VISION_TRAINING_ENDPOINT <your-training-endpoint>

setx VISION_PREDICTION_KEY <your-prediction-key>

setx VISION_PREDICTION_ENDPOINT <your-prediction-endpoint>

setx VISION_PREDICTION_RESOURCE_ID <your-resource-id>

After you add the environment variables, you might need to restart any running programs that read the environment variables, including the console window.
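Once your Java project is set up, a small check like the following can confirm that all five variables are visible to the process before you call the service. This is only a sketch: the EnvCheck class and its missing method are hypothetical helpers, not part of the Custom Vision client library, and the variable names are the ones used in the setx commands above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;

// Hypothetical helper; not part of the Custom Vision SDK.
final class EnvCheck {
    // Variable names taken from the setx commands in this quickstart.
    static final List<String> REQUIRED = Arrays.asList(
            "VISION_TRAINING_KEY",
            "VISION_TRAINING_ENDPOINT",
            "VISION_PREDICTION_KEY",
            "VISION_PREDICTION_ENDPOINT",
            "VISION_PREDICTION_RESOURCE_ID");

    // Returns the required names that are absent or blank in the given environment.
    static List<String> missing(Map<String, String> env) {
        List<String> result = new ArrayList<>();
        for (String name : REQUIRED) {
            String value = env.get(name);
            if (value == null || value.trim().isEmpty()) {
                result.add(name);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> missing = missing(System.getenv());
        if (missing.isEmpty()) {
            System.out.println("All Custom Vision variables are set.");
        } else {
            System.out.println("Missing variables: " + missing);
        }
    }
}
```

If the check reports a variable that you just set, restart the console window so that the new values are picked up.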

Setting up

Create a new Gradle project

In a console window (such as cmd, PowerShell, or Bash), create a new directory for your app, and navigate to it.

mkdir myapp && cd myapp

Run the gradle init command from your working directory. This command will create essential build files for Gradle, including build.gradle.kts, which is used at runtime to create and configure your application.

gradle init --type basic

When prompted to choose a DSL, select Kotlin.

Install the client library

Locate build.gradle.kts and open it with your preferred IDE or text editor. Then copy in the following build configuration. This configuration defines the project as a Java application whose entry point is the class CustomVisionQuickstart. It imports the Custom Vision libraries.

plugins {

java

application

}

application {

// mainClassName is deprecated in recent Gradle versions; mainClass replaces it

mainClass.set("CustomVisionQuickstart")

}

repositories {

mavenCentral()

}

dependencies {

// the "compile" configuration was removed in Gradle 7; use "implementation"

implementation(group = "com.azure", name = "azure-cognitiveservices-customvision-training", version = "1.1.0-preview.2")

implementation(group = "com.azure", name = "azure-cognitiveservices-customvision-prediction", version = "1.1.0-preview.2")

}

Create a Java file

From your working directory, run the following command to create a project source folder:

mkdir -p src/main/java

Navigate to the new folder and create a file called CustomVisionQuickstart.java. Open it in your preferred editor or IDE and add the following import statements:

import java.util.Collections;

import java.util.HashMap;

import java.util.List;

import java.util.UUID;

import com.google.common.io.ByteStreams;

import com.microsoft.azure.cognitiveservices.vision.customvision.training.models.Classifier;

import com.microsoft.azure.cognitiveservices.vision.customvision.training.models.Domain;

import com.microsoft.azure.cognitiveservices.vision.customvision.training.models.DomainType;

import com.microsoft.azure.cognitiveservices.vision.customvision.training.models.ImageFileCreateBatch;

import com.microsoft.azure.cognitiveservices.vision.customvision.training.models.ImageFileCreateEntry;

import com.microsoft.azure.cognitiveservices.vision.customvision.training.models.Iteration;

import com.microsoft.azure.cognitiveservices.vision.customvision.training.models.Project;

import com.microsoft.azure.cognitiveservices.vision.customvision.training.models.Region;

import com.microsoft.azure.cognitiveservices.vision.customvision.training.models.TrainProjectOptionalParameter;

import com.microsoft.azure.cognitiveservices.vision.customvision.training.CustomVisionTrainingClient;

import com.microsoft.azure.cognitiveservices.vision.customvision.training.Trainings;

import com.microsoft.azure.cognitiveservices.vision.customvision.training.CustomVisionTrainingManager;

import com.microsoft.azure.cognitiveservices.vision.customvision.prediction.models.ImagePrediction;

import com.microsoft.azure.cognitiveservices.vision.customvision.prediction.models.Prediction;

import com.microsoft.azure.cognitiveservices.vision.customvision.prediction.CustomVisionPredictionClient;

import com.microsoft.azure.cognitiveservices.vision.customvision.prediction.CustomVisionPredictionManager;

import com.microsoft.azure.cognitiveservices.vision.customvision.training.models.Tag;

Tip

Want to view the whole quickstart code file at once? You can find it on GitHub, which contains the code examples in this quickstart.

In the application's CustomVisionQuickstart class, create variables that retrieve your resource's keys and endpoint from environment variables.

// retrieve environment variables

final static String trainingApiKey = System.getenv("VISION_TRAINING_KEY");

final static String trainingEndpoint = System.getenv("VISION_TRAINING_ENDPOINT");

final static String predictionApiKey = System.getenv("VISION_PREDICTION_KEY");

final static String predictionEndpoint = System.getenv("VISION_PREDICTION_ENDPOINT");

final static String predictionResourceId = System.getenv("VISION_PREDICTION_RESOURCE_ID");

In the application's main method, add calls for the methods used in this quickstart. You'll define these later.

Project projectOD = createProjectOD(trainClient);

addTagsOD(trainClient, projectOD);

uploadImagesOD(trainClient, projectOD);

trainProjectOD(trainClient, projectOD);

publishIterationOD(trainClient, projectOD);

testProjectOD(predictor, projectOD);

Object model

The following classes and interfaces handle some of the major features of the Custom Vision Java client library.

| Name | Description |

|---|---|

| CustomVisionTrainingClient | This class handles the creation, training, and publishing of your models. |

| CustomVisionPredictionClient | This class handles the querying of your models for object detection predictions. |

| ImagePrediction | This class defines a single object prediction on a single image. It includes properties for the object ID and name, the bounding box location of the object, and a confidence score. |

Code examples

These code snippets show you how to do the following tasks with the Custom Vision client library for Java:

- Authenticate the client

- Create a new Custom Vision project

- Add tags to the project

- Upload and tag images

- Train the project

- Publish the current iteration

- Test the prediction endpoint

Authenticate the client

In your main method, instantiate training and prediction clients using your endpoint and keys.

// Authenticate

CustomVisionTrainingClient trainClient = CustomVisionTrainingManager

.authenticate(trainingEndpoint, trainingApiKey)

.withEndpoint(trainingEndpoint);

CustomVisionPredictionClient predictor = CustomVisionPredictionManager

.authenticate(predictionEndpoint, predictionApiKey)

.withEndpoint(predictionEndpoint);

Create a new Custom Vision project

This next method creates an object detection project. The created project will show up on the Custom Vision website that you visited earlier. See the CreateProject method overloads to specify other options when you create your project (explained in the Build a detector web portal guide).

public static Project createProjectOD(CustomVisionTrainingClient trainClient) {

Trainings trainer = trainClient.trainings();

// find the object detection domain to set the project type

Domain objectDetectionDomain = null;

List<Domain> domains = trainer.getDomains();

for (final Domain domain : domains) {

if (domain.type() == DomainType.OBJECT_DETECTION) {

objectDetectionDomain = domain;

break;

}

}

if (objectDetectionDomain == null) {

// stop here; the project can't be created without a domain ID

throw new IllegalStateException("Unexpected result; no object detection domain was found.");

}

System.out.println("Creating project...");

// create an object detection project

Project project = trainer.createProject().withName("Sample Java OD Project")

.withDescription("Sample OD Project").withDomainId(objectDetectionDomain.id())

.withClassificationType(Classifier.MULTILABEL.toString()).execute();

return project;

}

Add tags to your project

This method defines the tags that you will train the model on.

public static void addTagsOD(CustomVisionTrainingClient trainClient, Project project) {

Trainings trainer = trainClient.trainings();

// create fork tag

Tag forkTag = trainer.createTag().withProjectId(project.id()).withName("fork").execute();

// create scissors tag

Tag scissorsTag = trainer.createTag().withProjectId(project.id()).withName("scissors").execute();

}

Upload and tag images

First, download the sample images for this project. Save the contents of the sample Images folder to your local device.

Note

Do you need a broader set of images to complete your training? Trove, a Microsoft Garage project, allows you to collect and purchase sets of images for training purposes. Once you've collected your images, you can download them and then import them into your Custom Vision project in the usual way. Visit the Trove page to learn more.

When you tag images in object detection projects, you need to specify the region of each tagged object using normalized coordinates. The following code associates each of the sample images with its tagged region.

Note

If you don't have a click-and-drag utility to mark the coordinates of regions, you can use the web UI at Customvision.ai. In this example, the coordinates are already provided.
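If you have bounding boxes in pixels, the conversion to normalized coordinates is a pair of divisions per axis. The following sketch shows that arithmetic. The RegionMath class is a hypothetical helper rather than part of the client library, and it assumes the box is given by its left, top, right, and bottom pixel edges.

```java
// Hypothetical helper; not part of the Custom Vision SDK.
final class RegionMath {
    // Converts a pixel-space box to {left, top, width, height} in normalized
    // coordinates, where each value is a fraction of the image width or height.
    static double[] normalize(int left, int top, int right, int bottom,
                              int imageWidth, int imageHeight) {
        if (imageWidth <= 0 || imageHeight <= 0) {
            throw new IllegalArgumentException("Image dimensions must be positive.");
        }
        return new double[] {
            (double) left / imageWidth,            // normalized left
            (double) top / imageHeight,            // normalized top
            (double) (right - left) / imageWidth,  // normalized width
            (double) (bottom - top) / imageHeight  // normalized height
        };
    }

    public static void main(String[] args) {
        // A box from (100, 50) to (300, 250) in a 400 x 500 pixel image.
        double[] region = normalize(100, 50, 300, 250, 400, 500);
        System.out.println(java.util.Arrays.toString(region));
    }
}
```

The resulting array has the same left, top, width, height order as the hardcoded region values used in this quickstart.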

public static void uploadImagesOD(CustomVisionTrainingClient trainClient, Project project) {

// Hardcoded mapping of each file we'll upload to the bounding box of the

// object in that image. Each bounding box is specified as left, top, width,

// height in normalized coordinates:

// Normalized Left = Left / Width (in Pixels)

// Normalized Top = Top / Height (in Pixels)

// Normalized Bounding Box Width = (Right - Left) / Width (in Pixels)

// Normalized Bounding Box Height = (Bottom - Top) / Height (in Pixels)

HashMap<String, double[]> regionMap = new HashMap<String, double[]>();

regionMap.put("scissors_1.jpg", new double[] { 0.4007353, 0.194068655, 0.259803921, 0.6617647 });

regionMap.put("scissors_2.jpg", new double[] { 0.426470578, 0.185898721, 0.172794119, 0.5539216 });

regionMap.put("scissors_3.jpg", new double[] { 0.289215684, 0.259428144, 0.403186262, 0.421568632 });

regionMap.put("scissors_4.jpg", new double[] { 0.343137264, 0.105833367, 0.332107842, 0.8055556 });

regionMap.put("scissors_5.jpg", new double[] { 0.3125, 0.09766343, 0.435049027, 0.71405226 });

regionMap.put("scissors_6.jpg", new double[] { 0.379901975, 0.24308826, 0.32107842, 0.5718954 });

regionMap.put("scissors_7.jpg", new double[] { 0.341911763, 0.20714055, 0.3137255, 0.6356209 });

regionMap.put("scissors_8.jpg", new double[] { 0.231617644, 0.08459154, 0.504901946, 0.8480392 });

regionMap.put("scissors_9.jpg", new double[] { 0.170343131, 0.332957536, 0.767156839, 0.403594762 });

regionMap.put("scissors_10.jpg", new double[] { 0.204656869, 0.120539248, 0.5245098, 0.743464053 });

regionMap.put("scissors_11.jpg", new double[] { 0.05514706, 0.159754932, 0.799019635, 0.730392158 });

regionMap.put("scissors_12.jpg", new double[] { 0.265931368, 0.169558853, 0.5061275, 0.606209159 });

regionMap.put("scissors_13.jpg", new double[] { 0.241421565, 0.184264734, 0.448529422, 0.6830065 });

regionMap.put("scissors_14.jpg", new double[] { 0.05759804, 0.05027781, 0.75, 0.882352948 });

regionMap.put("scissors_15.jpg", new double[] { 0.191176474, 0.169558853, 0.6936275, 0.6748366 });

regionMap.put("scissors_16.jpg", new double[] { 0.1004902, 0.279036, 0.6911765, 0.477124184 });

regionMap.put("scissors_17.jpg", new double[] { 0.2720588, 0.131977156, 0.4987745, 0.6911765 });

regionMap.put("scissors_18.jpg", new double[] { 0.180147052, 0.112369314, 0.6262255, 0.6666667 });

regionMap.put("scissors_19.jpg", new double[] { 0.333333343, 0.0274019931, 0.443627447, 0.852941155 });

regionMap.put("scissors_20.jpg", new double[] { 0.158088237, 0.04047389, 0.6691176, 0.843137264 });

regionMap.put("fork_1.jpg", new double[] { 0.145833328, 0.3509314, 0.5894608, 0.238562092 });

regionMap.put("fork_2.jpg", new double[] { 0.294117659, 0.216944471, 0.534313738, 0.5980392 });

regionMap.put("fork_3.jpg", new double[] { 0.09191177, 0.0682516545, 0.757352948, 0.6143791 });

regionMap.put("fork_4.jpg", new double[] { 0.254901975, 0.185898721, 0.5232843, 0.594771266 });

regionMap.put("fork_5.jpg", new double[] { 0.2365196, 0.128709182, 0.5845588, 0.71405226 });

regionMap.put("fork_6.jpg", new double[] { 0.115196079, 0.133611143, 0.676470637, 0.6993464 });

regionMap.put("fork_7.jpg", new double[] { 0.164215669, 0.31008172, 0.767156839, 0.410130739 });

regionMap.put("fork_8.jpg", new double[] { 0.118872553, 0.318251669, 0.817401946, 0.225490168 });

regionMap.put("fork_9.jpg", new double[] { 0.18259804, 0.2136765, 0.6335784, 0.643790841 });

regionMap.put("fork_10.jpg", new double[] { 0.05269608, 0.282303959, 0.8088235, 0.452614367 });

regionMap.put("fork_11.jpg", new double[] { 0.05759804, 0.0894935, 0.9007353, 0.3251634 });

regionMap.put("fork_12.jpg", new double[] { 0.3345588, 0.07315363, 0.375, 0.9150327 });

regionMap.put("fork_13.jpg", new double[] { 0.269607842, 0.194068655, 0.4093137, 0.6732026 });

regionMap.put("fork_14.jpg", new double[] { 0.143382356, 0.218578458, 0.7977941, 0.295751631 });

regionMap.put("fork_15.jpg", new double[] { 0.19240196, 0.0633497, 0.5710784, 0.8398692 });

regionMap.put("fork_16.jpg", new double[] { 0.140931368, 0.480016381, 0.6838235, 0.240196079 });

regionMap.put("fork_17.jpg", new double[] { 0.305147052, 0.2512582, 0.4791667, 0.5408496 });

regionMap.put("fork_18.jpg", new double[] { 0.234068632, 0.445702642, 0.6127451, 0.344771236 });

regionMap.put("fork_19.jpg", new double[] { 0.219362751, 0.141781077, 0.5919118, 0.6683006 });

regionMap.put("fork_20.jpg", new double[] { 0.180147052, 0.239820287, 0.6887255, 0.235294119 });

The next code block adds the images to the project. You'll need to change the arguments of the GetImage calls to point to the locations of the fork and scissors folders that you downloaded.

Trainings trainer = trainClient.trainings();

System.out.println("Adding images...");

for (int i = 1; i <= 20; i++) {

String fileName = "fork_" + i + ".jpg";

byte[] contents = GetImage("/fork", fileName);

AddImageToProject(trainer, project, fileName, contents, forkTag.id(), regionMap.get(fileName));

}

for (int i = 1; i <= 20; i++) {

String fileName = "scissors_" + i + ".jpg";

byte[] contents = GetImage("/scissors", fileName);

AddImageToProject(trainer, project, fileName, contents, scissorsTag.id(), regionMap.get(fileName));

}

}

The previous code snippet makes use of two helper functions that retrieve the images as resource streams and upload them to the service (you can upload up to 64 images in a single batch). Define these methods.

private static void AddImageToProject(Trainings trainer, Project project, String fileName, byte[] contents,

UUID tag, double[] regionValues) {

System.out.println("Adding image: " + fileName);

ImageFileCreateEntry file = new ImageFileCreateEntry().withName(fileName).withContents(contents);

ImageFileCreateBatch batch = new ImageFileCreateBatch().withImages(Collections.singletonList(file));

// If Optional region is specified, tack it on and place the tag there,

// otherwise

// add it to the batch.

if (regionValues != null) {

Region region = new Region().withTagId(tag).withLeft(regionValues[0]).withTop(regionValues[1])

.withWidth(regionValues[2]).withHeight(regionValues[3]);

file = file.withRegions(Collections.singletonList(region));

} else {

batch = batch.withTagIds(Collections.singletonList(tag));

}

trainer.createImagesFromFiles(project.id(), batch);

}

private static byte[] GetImage(String folder, String fileName) {

try {

return ByteStreams.toByteArray(CustomVisionQuickstart.class.getResourceAsStream(folder + "/" + fileName));

} catch (Exception e) {

System.out.println(e.getMessage());

e.printStackTrace();

}

return null;

}
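The helper above uploads one image per call. If you prefer to send several images per request, you need to keep each batch within the 64-image limit mentioned earlier. The following sketch shows one way to split a list into batches of that size. The Batches class is a hypothetical helper and makes no service calls; you would still build an ImageFileCreateBatch from each sublist yourself.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper; not part of the Custom Vision SDK.
final class Batches {
    // Upper limit per upload batch, as stated in this quickstart.
    static final int MAX_BATCH_SIZE = 64;

    // Splits items into consecutive sublists of at most batchSize elements.
    static <T> List<List<T>> partition(List<T> items, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive.");
        }
        List<List<T>> batches = new ArrayList<>();
        for (int start = 0; start < items.size(); start += batchSize) {
            int end = Math.min(start + batchSize, items.size());
            // Copy the view so each batch is independent of the source list.
            batches.add(new ArrayList<>(items.subList(start, end)));
        }
        return batches;
    }

    public static void main(String[] args) {
        List<String> fileNames = new ArrayList<>();
        for (int i = 1; i <= 150; i++) {
            fileNames.add("image_" + i + ".jpg");
        }
        for (List<String> batch : partition(fileNames, MAX_BATCH_SIZE)) {
            System.out.println("Batch of " + batch.size() + " images");
        }
    }
}
```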

Train the project

This method creates the first training iteration in the project. It queries the service until training is completed.

public static void trainProjectOD(CustomVisionTrainingClient trainClient, Project project) {

Trainings trainer = trainClient.trainings();

System.out.println("Training...");

Iteration iteration = trainer.trainProject(project.id(), new TrainProjectOptionalParameter());

// poll every five seconds until the iteration leaves the "Training" state

while (iteration.status().equals("Training")) {

System.out.println("Training Status: " + iteration.status());

try {

Thread.sleep(5000);

} catch (InterruptedException e) {

// restore the interrupt flag and stop polling

Thread.currentThread().interrupt();

break;

}

iteration = trainer.getIteration(project.id(), iteration.id());

}

System.out.println("Training Status: " + iteration.status());

}
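The training method polls for as long as the service reports the Training status. If you want an upper bound on the wait, a loop along the following lines may help. This is a sketch under assumptions: the Poller class is hypothetical, and the status is read through a generic Supplier so that the example runs without the service. In the quickstart, the supplier would wrap the getIteration call.

```java
import java.util.function.Supplier;

// Hypothetical helper; not part of the Custom Vision SDK.
final class Poller {
    // Reads the status until it is no longer "Training" or until maxAttempts
    // reads have been made, sleeping delayMillis between reads.
    // Returns the last status that was read.
    static String waitWhileTraining(Supplier<String> statusSource, long delayMillis, int maxAttempts) {
        String status = statusSource.get();
        int attempts = 1;
        while ("Training".equals(status) && attempts < maxAttempts) {
            try {
                Thread.sleep(delayMillis);
            } catch (InterruptedException e) {
                // Restore the interrupt flag and give up waiting.
                Thread.currentThread().interrupt();
                return status;
            }
            status = statusSource.get();
            attempts++;
        }
        return status;
    }

    public static void main(String[] args) {
        // Simulated status sequence standing in for calls to the service.
        java.util.Iterator<String> statuses =
                java.util.Arrays.asList("Training", "Training", "Completed").iterator();
        String last = waitWhileTraining(statuses::next, 10L, 5);
        System.out.println("Final status: " + last);
    }
}
```

If the returned status is still Training, the attempt limit was reached before training finished, and the iteration shouldn't be published yet.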

Publish the current iteration

This method makes the current iteration of the model available for querying. You can use the model name as a reference to send prediction requests. The method publishes to the prediction resource identified by predictionResourceId, which is read from the VISION_PREDICTION_RESOURCE_ID environment variable. You can find the prediction resource ID on the resource's Properties tab in the Azure portal, listed as Resource ID.

public static String publishIterationOD(CustomVisionTrainingClient trainClient, Project project) {

Trainings trainer = trainClient.trainings();

// Look up the trained iteration, because the variable from trainProjectOD isn't in scope here.

// Assumption: getIterations lists the project's iterations, and this sample trains exactly one.

Iteration iteration = trainer.getIterations(project.id()).get(0);

// The iteration is now trained. Publish it to the prediction endpoint.

String publishedModelName = "myModel";

trainer.publishIteration(project.id(), iteration.id(), publishedModelName, predictionResourceId);

return publishedModelName;

}

Test the prediction endpoint

This method loads the test image, queries the model endpoint, and outputs prediction data to the console.

public static void testProjectOD(CustomVisionPredictionClient predictor, Project project) {

// load test image

byte[] testImage = GetImage("/ObjectTest", "test_image.jpg");

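// must match the name under which the iteration was published

String publishedModelName = "myModel";

// predict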

ImagePrediction results = predictor.predictions().detectImage().withProjectId(project.id())

.withPublishedName(publishedModelName).withImageData(testImage).execute();

for (Prediction prediction : results.predictions()) {

System.out.println(String.format("\t%s: %.2f%% at: %.2f, %.2f, %.2f, %.2f", prediction.tagName(),

prediction.probability() * 100.0f, prediction.boundingBox().left(), prediction.boundingBox().top(),

prediction.boundingBox().width(), prediction.boundingBox().height()));

}

}

Run the application

You can build the app with:

gradle build

Run the application with the gradle run command:

gradle run

Clean up resources

If you want to clean up and remove an Azure AI services subscription, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

If you wish to implement your own object detection project (or try an image classification project instead), you might want to delete the fork/scissors detection project from this example. A free subscription allows for two Custom Vision projects.

On the Custom Vision website, navigate to Projects and select the trash can under the project you created (Sample Java OD Project in this example).

Next steps

Now you've done every step of the object detection process in code. This sample executes a single training iteration, but often you'll need to train and test your model multiple times in order to make it more accurate. The following guide deals with image classification, but its principles are similar to object detection.

- What is Custom Vision?

- The source code for this sample can be found on GitHub

This guide provides instructions and sample code to help you get started using the Custom Vision client library for Node.js to build an object detection model. You create a project, add tags, train the project, and use the project's prediction endpoint URL to programmatically test it. Use this example as a template for building your own image recognition app.

Note

If you want to build and train an object detection model without writing code, see the browser-based guidance instead.

Reference documentation (training) (prediction) | Package (npm) (training) (prediction) | Samples

Prerequisites

- Azure subscription - Create one for free

- The current version of Node.js

- Once you have your Azure subscription, create a Custom Vision resource in the Azure portal to create a training and prediction resource.

- You can use the free pricing tier (F0) to try the service, and upgrade later to a paid tier for production.

Create environment variables

In this example, you'll write your credentials to environment variables on the local machine running the application.

Go to the Azure portal. If the Custom Vision resources you created in the Prerequisites section deployed successfully, select the Go to Resource button under Next steps. You can find your keys and endpoints in the resources' Keys and Endpoint pages, under Resource Management. You'll need to get the keys for both your training resource and prediction resource, along with the API endpoints.

You can find the prediction resource ID on the prediction resource's Properties tab in the Azure portal, listed as Resource ID.

Tip

You can also use https://www.customvision.ai to get these values. After you sign in, select the Settings icon at the top right. On the Settings page, you can view all the keys, resource IDs, and endpoints.

To set the environment variables, open a console window and follow the instructions for your operating system and development environment.

- To set the VISION_TRAINING_KEY environment variable, replace <your-training-key> with one of the keys for your training resource.

- To set the VISION_TRAINING_ENDPOINT environment variable, replace <your-training-endpoint> with the endpoint for your training resource.

- To set the VISION_PREDICTION_KEY environment variable, replace <your-prediction-key> with one of the keys for your prediction resource.

- To set the VISION_PREDICTION_ENDPOINT environment variable, replace <your-prediction-endpoint> with the endpoint for your prediction resource.

- To set the VISION_PREDICTION_RESOURCE_ID environment variable, replace <your-resource-id> with the resource ID for your prediction resource.

Important

We recommend Microsoft Entra ID authentication with managed identities for Azure resources to avoid storing credentials with your applications that run in the cloud.

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If using API keys, store them securely in Azure Key Vault, rotate the keys regularly, and restrict access to Azure Key Vault using role based access control and network access restrictions. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

setx VISION_TRAINING_KEY <your-training-key>

setx VISION_TRAINING_ENDPOINT <your-training-endpoint>

setx VISION_PREDICTION_KEY <your-prediction-key>

setx VISION_PREDICTION_ENDPOINT <your-prediction-endpoint>

setx VISION_PREDICTION_RESOURCE_ID <your-resource-id>

After you add the environment variables, you might need to restart any running programs that read the environment variables, including the console window.

Setting up

Create a new Node.js application

In a console window (such as cmd, PowerShell, or Bash), create a new directory for your app, and navigate to it.

mkdir myapp && cd myapp

Run the npm init command to create a node application with a package.json file.

npm init

Install the client library

To write an image analysis app with Custom Vision for Node.js, you need the Custom Vision npm packages. To install them, run the following command in PowerShell:

npm install @azure/cognitiveservices-customvision-training

npm install @azure/cognitiveservices-customvision-prediction

Your app's package.json file is updated with the dependencies.

Create a file named index.js and import the following libraries:

const util = require('util');

const fs = require('fs');

const TrainingApi = require("@azure/cognitiveservices-customvision-training");

const PredictionApi = require("@azure/cognitiveservices-customvision-prediction");

const msRest = require("@azure/ms-rest-js");

Tip

Want to view the whole quickstart code file at once? You can find it on GitHub, which contains the code examples in this quickstart.

Create variables for your resource's Azure endpoint and keys.

// retrieve environment variables

const trainingKey = process.env["VISION_TRAINING_KEY"];

const trainingEndpoint = process.env["VISION_TRAINING_ENDPOINT"];

const predictionKey = process.env["VISION_PREDICTION_KEY"];

const predictionResourceId = process.env["VISION_PREDICTION_RESOURCE_ID"];

const predictionEndpoint = process.env["VISION_PREDICTION_ENDPOINT"];

Also add fields for the name of your published iteration and a promise-based timeout helper for asynchronous calls.

const publishIterationName = "detectModel";

const setTimeoutPromise = util.promisify(setTimeout);

Object model

| Name | Description |

|---|---|

| TrainingAPIClient | This class handles the creation, training, and publishing of your models. |

| PredictionAPIClient | This class handles the querying of your models for object detection predictions. |

| Prediction | This interface defines a single prediction on a single image. It includes properties for the object ID and name, and a confidence score. |

Code examples

These code snippets show you how to do the following tasks with the Custom Vision client library for JavaScript:

- Authenticate the client

- Create a new Custom Vision project

- Add tags to the project

- Upload and tag images

- Train the project

- Publish the current iteration

- Test the prediction endpoint

Authenticate the client

Instantiate client objects with your endpoint and key. Create an ApiKeyCredentials object with your key, and use it with your endpoint to create a TrainingAPIClient and PredictionAPIClient object.

const credentials = new msRest.ApiKeyCredentials({ inHeader: { "Training-key": trainingKey } });

const trainer = new TrainingApi.TrainingAPIClient(credentials, trainingEndpoint);

const predictor_credentials = new msRest.ApiKeyCredentials({ inHeader: { "Prediction-key": predictionKey } });

const predictor = new PredictionApi.PredictionAPIClient(predictor_credentials, predictionEndpoint);

Add helper function

Add the following function to help make multiple asynchronous calls. You'll use this later on.

// Helper that awaits the callback for each array element in turn,

// so that asynchronous calls (such as image uploads) run one after another.

async function asyncForEach(array, callback) {

for (let i = 0; i < array.length; i++) {

await callback(array[i], i, array);

}

}

Create a new Custom Vision project

Start a new function to contain all of your Custom Vision function calls. Add the following code to create a new Custom Vision service project.

(async () => {

console.log("Creating project...");

const domains = await trainer.getDomains();

const objDetectDomain = domains.find(domain => domain.type === "ObjectDetection");

const sampleProject = await trainer.createProject("Sample Obj Detection Project", { domainId: objDetectDomain.id });

Add tags to the project

To create tags in your project, add the following code to your function:

const forkTag = await trainer.createTag(sampleProject.id, "Fork");

const scissorsTag = await trainer.createTag(sampleProject.id, "Scissors");

Upload and tag images

First, download the sample images for this project. Save the contents of the sample Images folder to your local device.

To add the sample images to the project, insert the following code after the tag creation. This code uploads each image with its corresponding tag. When you tag images in object detection projects, you need to specify the region of each tagged object using normalized coordinates. For this tutorial, the regions are hardcoded inline with the code. The regions specify the bounding box in normalized coordinates, and the coordinates are given in the order: left, top, width, height. You can upload up to 64 images in a single batch.

const sampleDataRoot = "Images";

const forkImageRegions = {

"fork_1.jpg": [0.145833328, 0.3509314, 0.5894608, 0.238562092],

"fork_2.jpg": [0.294117659, 0.216944471, 0.534313738, 0.5980392],

"fork_3.jpg": [0.09191177, 0.0682516545, 0.757352948, 0.6143791],

"fork_4.jpg": [0.254901975, 0.185898721, 0.5232843, 0.594771266],

"fork_5.jpg": [0.2365196, 0.128709182, 0.5845588, 0.71405226],

"fork_6.jpg": [0.115196079, 0.133611143, 0.676470637, 0.6993464],

"fork_7.jpg": [0.164215669, 0.31008172, 0.767156839, 0.410130739],

"fork_8.jpg": [0.118872553, 0.318251669, 0.817401946, 0.225490168],

"fork_9.jpg": [0.18259804, 0.2136765, 0.6335784, 0.643790841],

"fork_10.jpg": [0.05269608, 0.282303959, 0.8088235, 0.452614367],

"fork_11.jpg": [0.05759804, 0.0894935, 0.9007353, 0.3251634],

"fork_12.jpg": [0.3345588, 0.07315363, 0.375, 0.9150327],

"fork_13.jpg": [0.269607842, 0.194068655, 0.4093137, 0.6732026],

"fork_14.jpg": [0.143382356, 0.218578458, 0.7977941, 0.295751631],

"fork_15.jpg": [0.19240196, 0.0633497, 0.5710784, 0.8398692],

"fork_16.jpg": [0.140931368, 0.480016381, 0.6838235, 0.240196079],

"fork_17.jpg": [0.305147052, 0.2512582, 0.4791667, 0.5408496],

"fork_18.jpg": [0.234068632, 0.445702642, 0.6127451, 0.344771236],

"fork_19.jpg": [0.219362751, 0.141781077, 0.5919118, 0.6683006],

"fork_20.jpg": [0.180147052, 0.239820287, 0.6887255, 0.235294119]

};

const scissorsImageRegions = {

"scissors_1.jpg": [0.4007353, 0.194068655, 0.259803921, 0.6617647],

"scissors_2.jpg": [0.426470578, 0.185898721, 0.172794119, 0.5539216],

"scissors_3.jpg": [0.289215684, 0.259428144, 0.403186262, 0.421568632],

"scissors_4.jpg": [0.343137264, 0.105833367, 0.332107842, 0.8055556],

"scissors_5.jpg": [0.3125, 0.09766343, 0.435049027, 0.71405226],

"scissors_6.jpg": [0.379901975, 0.24308826, 0.32107842, 0.5718954],

"scissors_7.jpg": [0.341911763, 0.20714055, 0.3137255, 0.6356209],

"scissors_8.jpg": [0.231617644, 0.08459154, 0.504901946, 0.8480392],

"scissors_9.jpg": [0.170343131, 0.332957536, 0.767156839, 0.403594762],

"scissors_10.jpg": [0.204656869, 0.120539248, 0.5245098, 0.743464053],

"scissors_11.jpg": [0.05514706, 0.159754932, 0.799019635, 0.730392158],

"scissors_12.jpg": [0.265931368, 0.169558853, 0.5061275, 0.606209159],

"scissors_13.jpg": [0.241421565, 0.184264734, 0.448529422, 0.6830065],

"scissors_14.jpg": [0.05759804, 0.05027781, 0.75, 0.882352948],

"scissors_15.jpg": [0.191176474, 0.169558853, 0.6936275, 0.6748366],

"scissors_16.jpg": [0.1004902, 0.279036, 0.6911765, 0.477124184],

"scissors_17.jpg": [0.2720588, 0.131977156, 0.4987745, 0.6911765],

"scissors_18.jpg": [0.180147052, 0.112369314, 0.6262255, 0.6666667],

"scissors_19.jpg": [0.333333343, 0.0274019931, 0.443627447, 0.852941155],

"scissors_20.jpg": [0.158088237, 0.04047389, 0.6691176, 0.843137264]

};

console.log("Adding images...");

let fileUploadPromises = [];

const forkDir = `${sampleDataRoot}/fork`;

const forkFiles = fs.readdirSync(forkDir);

await asyncForEach(forkFiles, async (file) => {

const region = { tagId: forkTag.id, left: forkImageRegions[file][0], top: forkImageRegions[file][1], width: forkImageRegions[file][2], height: forkImageRegions[file][3] };

const entry = { name: file, contents: fs.readFileSync(`${forkDir}/${file}`), regions: [region] };

const batch = { images: [entry] };

// Wait one second to accommodate rate limit.

await setTimeoutPromise(1000, null);

fileUploadPromises.push(trainer.createImagesFromFiles(sampleProject.id, batch));

});

const scissorsDir = `${sampleDataRoot}/scissors`;

const scissorsFiles = fs.readdirSync(scissorsDir);

await asyncForEach(scissorsFiles, async (file) => {

const region = { tagId: scissorsTag.id, left: scissorsImageRegions[file][0], top: scissorsImageRegions[file][1], width: scissorsImageRegions[file][2], height: scissorsImageRegions[file][3] };

const entry = { name: file, contents: fs.readFileSync(`${scissorsDir}/${file}`), regions: [region] };

const batch = { images: [entry] };

// Wait one second to accommodate rate limit.

await setTimeoutPromise(1000, null);

fileUploadPromises.push(trainer.createImagesFromFiles(sampleProject.id, batch));

});

await Promise.all(fileUploadPromises);
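The code above uploads one image per request. Because a single batch can hold up to 64 images, the entries could also be grouped before upload. The following is a minimal sketch of that grouping only. The helper name `chunk` and the placeholder entries are assumptions, and real entries would carry `name`, `contents`, and `regions` as shown above.

```javascript
// Hypothetical helper: split an array into chunks of at most `size` items.
function chunk(items, size = 64) {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError("size must be a positive integer");
  }
  const chunks = [];
  for (let i = 0; i < items.length; i += size) {
    chunks.push(items.slice(i, i + size));
  }
  return chunks;
}

// Placeholder entries; real ones would also include contents and regions.
const exampleEntries = Array.from({ length: 130 }, (_, i) => ({ name: `image_${i}.jpg` }));

// Wrap each chunk in the { images: [...] } shape used for a batch above.
const exampleBatches = chunk(exampleEntries).map(images => ({ images }));

console.log(exampleBatches.map(batch => batch.images.length)); // prints [ 64, 64, 2 ]
```

Each resulting batch object has the same shape as the `batch` variable passed to `trainer.createImagesFromFiles` in the quickstart code.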

Important

You need to change the path to the images (sampleDataRoot) based on where you saved the sample images.

Note

If you don't have a click-and-drag utility to mark the coordinates of regions, you can use the web UI at Customvision.ai. In this example, the coordinates are already provided.
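If you measure a bounding box in pixels, you need to convert it to normalized coordinates before upload. The following is a minimal sketch of that conversion, producing values in the same left, top, width, height order as the region arrays above. The helper name `toNormalizedRegion` and the example image size are assumptions for illustration.

```javascript
// Hypothetical helper: convert a pixel bounding box into normalized
// [left, top, width, height] values between 0 and 1.
function toNormalizedRegion(box, imageWidth, imageHeight) {
  const { left, top, width, height } = box;
  if (imageWidth <= 0 || imageHeight <= 0) {
    throw new RangeError("image dimensions must be positive");
  }
  if (left < 0 || top < 0 || width <= 0 || height <= 0 ||
      left + width > imageWidth || top + height > imageHeight) {
    throw new RangeError("bounding box must lie inside the image");
  }
  return [left / imageWidth, top / imageHeight, width / imageWidth, height / imageHeight];
}

// A 200x100 pixel box at (100, 50) in a 400x200 pixel image.
console.log(toNormalizedRegion({ left: 100, top: 50, width: 200, height: 100 }, 400, 200));
// prints [ 0.25, 0.25, 0.5, 0.5 ]
```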

Train the project

This code creates the first iteration of the prediction model.

console.log("Training...");

let trainingIteration = await trainer.trainProject(sampleProject.id);

// Wait for training to complete

console.log("Training started...");

while (trainingIteration.status === "Training") {

console.log("Training status: " + trainingIteration.status);

// wait for ten seconds

await setTimeoutPromise(10000, null);

trainingIteration = await trainer.getIteration(sampleProject.id, trainingIteration.id);

}

console.log("Training status: " + trainingIteration.status);
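The loop above polls until the status is no longer "Training", with no upper bound on the wait. The following is a minimal sketch of the same polling pattern with a time limit. The helper name `waitForTraining`, its options, and the 30-minute default are assumptions, not part of the client library.

```javascript
// Hypothetical helper: call getIteration() repeatedly until the returned
// status is no longer "Training", or throw once the time budget is spent.
async function waitForTraining(getIteration, { intervalMs = 10000, timeoutMs = 30 * 60 * 1000 } = {}) {
  const deadline = Date.now() + timeoutMs;
  let iteration = await getIteration();
  while (iteration.status === "Training") {
    if (Date.now() >= deadline) {
      throw new Error(`Training did not finish within ${timeoutMs} ms`);
    }
    // Pause between polls so the service isn't queried in a tight loop.
    await new Promise(resolve => setTimeout(resolve, intervalMs));
    iteration = await getIteration();
  }
  return iteration;
}

// Possible use inside the quickstart function (not executed here):
//   trainingIteration = await waitForTraining(
//     () => trainer.getIteration(sampleProject.id, trainingIteration.id));
```

The helper returns the iteration in whatever final state it reached, so it is worth checking `trainingIteration.status` before publishing.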

Publish the current iteration

This code publishes the trained iteration to the prediction endpoint. The name given to the published iteration can be used to send prediction requests. An iteration is not available in the prediction endpoint until it is published.

// Publish the iteration to the prediction endpoint

await trainer.publishIteration(sampleProject.id, trainingIteration.id, publishIterationName, predictionResourceId);

Test the prediction endpoint

To send an image to the prediction endpoint and retrieve the prediction, add the following code to your function.

const testFile = fs.readFileSync(`${sampleDataRoot}/test/test_image.jpg`);

const results = await predictor.detectImage(sampleProject.id, publishIterationName, testFile);

// Show results

console.log("Results:");

results.predictions.forEach(predictedResult => {

console.log(`\t ${predictedResult.tagName}: ${(predictedResult.probability * 100.0).toFixed(2)}% ${predictedResult.boundingBox.left},${predictedResult.boundingBox.top},${predictedResult.boundingBox.width},${predictedResult.boundingBox.height}`);

});

Then, close your Custom Vision function and call it.

})()

Run the application

Run the application with the node command on your quickstart file.

node index.js

The output of the application should appear in the console. You can then verify that the test image (found in <sampleDataRoot>/test/) is tagged appropriately and that the region of detection is correct. You can also go back to the Custom Vision website and see the current state of your newly created project.
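When you check the detected regions, it can help to keep only the confident predictions and convert their normalized bounding boxes back to pixels. The following is a minimal sketch that works on objects shaped like the entries of `results.predictions` above. The helper name `selectDetections`, the 0.5 default threshold, and the sample values are assumptions for illustration, not real service output.

```javascript
// Hypothetical helper: keep predictions at or above a probability threshold,
// sort them from most to least confident, and express each box in pixels.
function selectDetections(predictions, imageWidth, imageHeight, minProbability = 0.5) {
  return predictions
    .filter(p => p.probability >= minProbability)
    .sort((a, b) => b.probability - a.probability)
    .map(p => ({
      tagName: p.tagName,
      probability: p.probability,
      box: {
        left: Math.round(p.boundingBox.left * imageWidth),
        top: Math.round(p.boundingBox.top * imageHeight),
        width: Math.round(p.boundingBox.width * imageWidth),
        height: Math.round(p.boundingBox.height * imageHeight)
      }
    }));
}

// Placeholder predictions, not real service output.
const examplePredictions = [
  { tagName: "scissors", probability: 0.08, boundingBox: { left: 0.1, top: 0.1, width: 0.2, height: 0.2 } },
  { tagName: "fork", probability: 0.92, boundingBox: { left: 0.25, top: 0.5, width: 0.5, height: 0.25 } }
];

// Assume the test image is 800x600 pixels.
console.log(selectDetections(examplePredictions, 800, 600));
```

With these placeholder values, only the fork prediction passes the threshold, and its box maps to left 200, top 300, width 400, height 150 in pixels.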

Clean up resources

If you wish to implement your own object detection project (or try an image classification project instead), you might want to delete the fork/scissors detection project from this example. A free subscription allows for two Custom Vision projects.

On the Custom Vision website, navigate to Projects and select the trash can under the project you created (Sample Obj Detection Project in this example).

Next steps

Now you've done every step of the object detection process in code. This sample executes a single training iteration, but often you need to train and test your model multiple times in order to make it more accurate. The following guide deals with image classification, but its principles are similar to object detection.

- What is Custom Vision?

- The source code for this sample can be found on GitHub

- SDK reference documentation (training)

- SDK reference documentation (prediction)

Get started with the Custom Vision client library for Python. Follow these steps to install the package and try out the example code for building an object detection model. You create a project, add tags, train the project, and use the project's prediction endpoint URL to programmatically test it. Use this example as a template for building your own image recognition app.

Note

If you want to build and train an object detection model without writing code, see the browser-based guidance instead.

Reference documentation | Library source code | Package (PyPI) | Samples

Prerequisites

- Azure subscription - Create one for free

- Python 3.x

- Your Python installation should include pip. You can check if you have pip installed by running pip --version on the command line. Get pip by installing the latest version of Python.

- Once you have your Azure subscription, create a Custom Vision resource in the Azure portal to create a training and prediction resource.

- You can use the free pricing tier (F0) to try the service, and upgrade later to a paid tier for production.

Create environment variables

In this example, you'll write your credentials to environment variables on the local machine running the application.

Go to the Azure portal. If the Custom Vision resources you created in the Prerequisites section deployed successfully, select the Go to Resource button under Next steps. You can find your keys and endpoints in the resources' Keys and Endpoint pages, under Resource Management. You'll need to get the keys for both your training resource and prediction resource, along with the API endpoints.

You can find the prediction resource ID on the prediction resource's Properties tab in the Azure portal, listed as Resource ID.

Tip

You can also use https://www.customvision.ai to get these values. After you sign in, select the Settings icon at the top right. On the Settings page, you can view all the keys, resource IDs, and endpoints.

To set the environment variables, open a console window and follow the instructions for your operating system and development environment.

- To set the VISION_TRAINING_KEY environment variable, replace <your-training-key> with one of the keys for your training resource.

- To set the VISION_TRAINING_ENDPOINT environment variable, replace <your-training-endpoint> with the endpoint for your training resource.

- To set the VISION_PREDICTION_KEY environment variable, replace <your-prediction-key> with one of the keys for your prediction resource.

- To set the VISION_PREDICTION_ENDPOINT environment variable, replace <your-prediction-endpoint> with the endpoint for your prediction resource.

- To set the VISION_PREDICTION_RESOURCE_ID environment variable, replace <your-resource-id> with the resource ID for your prediction resource.

Important

We recommend Microsoft Entra ID authentication with managed identities for Azure resources to avoid storing credentials with your applications that run in the cloud.

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If using API keys, store them securely in Azure Key Vault, rotate the keys regularly, and restrict access to Azure Key Vault using role based access control and network access restrictions. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

setx VISION_TRAINING_KEY <your-training-key>

setx VISION_TRAINING_ENDPOINT <your-training-endpoint>

setx VISION_PREDICTION_KEY <your-prediction-key>

setx VISION_PREDICTION_ENDPOINT <your-prediction-endpoint>

setx VISION_PREDICTION_RESOURCE_ID <your-resource-id>