Azure Cloud Services for Tello Drones: How to perform image analyses at the edge with Azure Custom Vision Services

Introduction

Tello is a programmable mini drone that is popular with beginners. It can be controlled from programming languages such as Scratch, Python, and Swift. Microsoft Azure provides a wide range of cloud computing services, including artificial intelligence, machine learning, IoT, storage, security, networking, media, and integration.

Azure Custom Vision is an image recognition service that lets you build, deploy, and improve your own image identifier models. An image identifier applies labels to images according to their detected visual characteristics. Unlike the Azure Computer Vision service, Custom Vision lets you specify your own labels and train custom models to detect them, which makes it more flexible.

Azure IoT Edge is a device-focused runtime that lets you deploy, run, and monitor containerized Linux workloads. It brings the analytical power of the cloud closer to local devices, which drives better business insights and enables offline decision making.

In this article, we walk through the steps required to analyze images from a Tello drone on an Azure IoT Edge device with Azure Custom Vision, and we mark each identified target with a red rectangle. The focus is on the work done by the local Azure IoT Edge device.

Prerequisites

- Tello drone

- Edge device with the Azure IoT Edge runtime installed

- IDE: PyCharm Community

- Azure subscription

System Overview

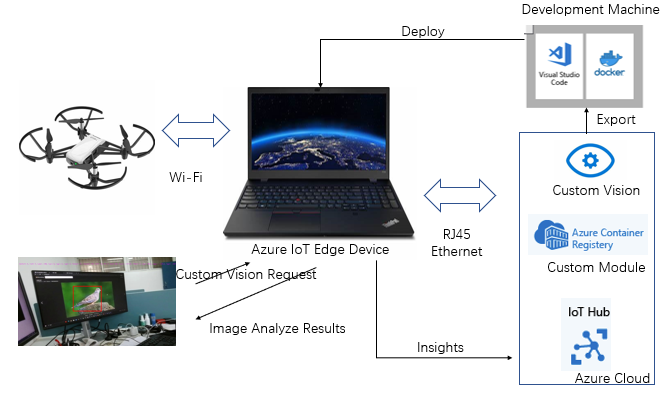

Because Tello connects to the PC over Wi-Fi, the PC needs two network interfaces: one for the connection to Tello and one for the connection to the Internet.

This work builds on the tutorial “Perform image classification at the edge with Custom Vision Service”. Please read that tutorial and work through its steps carefully before getting started.

Fig. 1 System Overview

Build an image classifier with Custom Vision

To build an image classifier, we need to create a Custom Vision project and provide training images. For more information about the steps that you take in this section, see How to build a classifier with Custom Vision. Once your image classifier is built and trained, you can export it as a Docker container and deploy it to an IoT Edge device.

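In this project, I created an Azure Custom Vision project to detect face masks. You can build your own model to suit your purpose. Note that the Python code later in this article reads a bounding box from each prediction, so the exported model needs to return bounding boxes, which in Custom Vision is what an object detection project provides.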

Export your classifier

After training your model, select Export on the Performance page of the project. Select DockerFile for the platform. Select Linux for the version. Select Export. When the export is complete, select Download and save the .zip package locally on your computer. Extract all files from the package. You'll use these files to create an IoT Edge module that contains the image classification server.

Create and deploy an IoT Edge solution

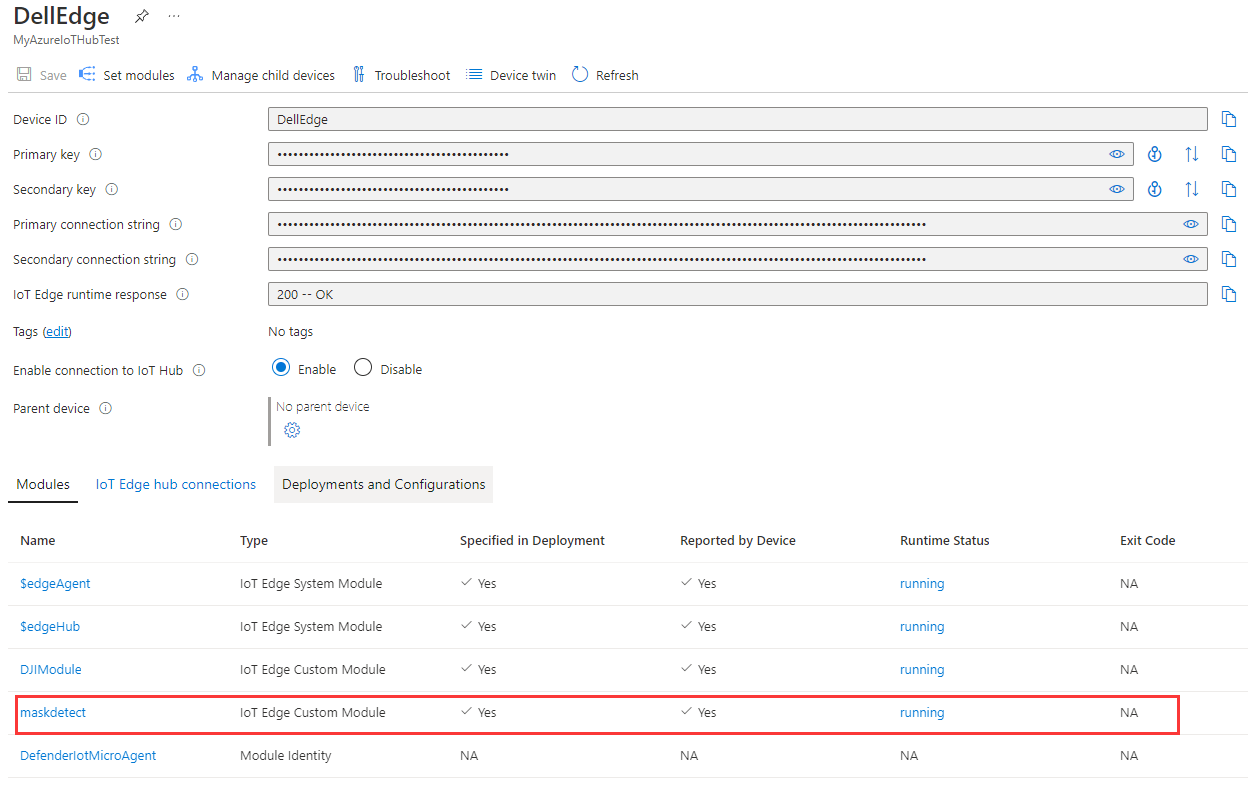

On the development machine, create an IoT Edge solution, add your Azure Container Registry credentials, select your target architecture, add your image classifier files, prepare a deployment manifest, and then build and push the solution to Azure Container Registry. Finally, deploy the module to the IoT Edge device. In this project, I created a module named “maskdetector”, which appears in the Azure portal as shown in Fig. 2.

Fig. 2 Azure IoT Edge Modules running on the device

Install Python Packages

In this project, we install the “djitellopy”, “Pygame”, and “PIL” packages to speed up development. PIL is provided by the “Pillow” package. The code also imports “cv2” (OpenCV) and “requests”, so make sure both are available in your environment. Please refer to the “Install Python Azure IoT SDK and Tello Python SDK” section of the article “Azure Cloud Services for Tello Drones: How to send telemetry to Azure IoTHub” to complete this step.

Create and Debug Python Code on Your PC

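Copy and paste the following code into your PyCharm project. The code imports KeyPressModule, which is not an installable package; it is assumed here to be the keyboard helper module from the earlier articles in this series, so it needs to be in the same project.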

from djitellopy import tello
import KeyPressModule as kp
import cv2
import requests
import json
from PIL import Image
from PIL import ImageDraw

# Initialize keyboard input and connect to the drone
kp.init()
me = tello.Tello()
me.connect()
print(me.get_battery())
me.streamon()

# Latest video frame, shared between main() and getKeyboardInput()
img = None

# Replace IP and Port with the address of your IoT Edge device
Local_Custom_Vision_ENDPOINT = "http://IP:Port/image"

def getKeyboardInput():
    global img
    lr, fb, ud, yv = 0, 0, 0, 0
    speed = 50

    # Arrow keys: left/right and forward/backward
    if kp.getKey("LEFT"):
        lr = -speed
    elif kp.getKey("RIGHT"):
        lr = speed
    if kp.getKey("UP"):
        fb = speed
    elif kp.getKey("DOWN"):
        fb = -speed

    # w/s: up/down, a/d: yaw
    if kp.getKey("w"):
        ud = speed
    elif kp.getKey("s"):
        ud = -speed
    if kp.getKey("a"):
        yv = speed
    elif kp.getKey("d"):
        yv = -speed

    # q: land, e: take off
    if kp.getKey("q"): me.land()
    if kp.getKey("e"): me.takeoff()

    # z: capture the current frame and send it to the local Custom Vision service
    if kp.getKey("z") and img is not None:
        cv2.imwrite('Resources/Images/capture.jpg', img)
        target = 0
        results = sendFrameForProcessing('Resources/Images/capture.jpg', Local_Custom_Vision_ENDPOINT)
        # sendFrameForProcessing returns None when the service does not respond
        predictions = results.get('predictions', []) if results else []
        # Open the captured image once so that every detection is drawn on the same copy
        im = Image.open('Resources/Images/capture.jpg')
        draw = ImageDraw.Draw(im)
        for prediction in predictions:
            if prediction['probability'] > 0.5:
                target = 1
                print("\t" + prediction['tagName'] + ": {0:.2f}%".format(prediction['probability'] * 100))
                bbox = prediction['boundingBox']
                # Bounding box values are fractions of the image size (1280x720 here)
                draw.rectangle([int(bbox['left'] * im.width), int(bbox['top'] * im.height),
                                int((bbox['left'] + bbox['width']) * im.width),
                                int((bbox['top'] + bbox['height']) * im.height)], outline='red', width=5)
        if target == 1:
            # At least one detection: save and show the annotated image
            im.save('Resources/Images/results.jpg')
            img = cv2.imread('Resources/Images/results.jpg', cv2.IMREAD_COLOR)
            cv2.imshow("results", img)
            cv2.waitKey(1000)
            cv2.destroyWindow("results")
        else:
            # No detection above the threshold: show the unmodified capture
            img = cv2.imread('Resources/Images/capture.jpg', cv2.IMREAD_COLOR)
            cv2.imshow("capture", img)
            cv2.waitKey(1000)
            cv2.destroyWindow("capture")

    return [lr, fb, ud, yv]

# Send an image to the custom vision server
# Return the parsed JSON response (a dict) with the prediction result, or None on failure
def sendFrameForProcessing(imagePath, imageProcessingEndpoint):
    headers = {'Content-Type': 'application/octet-stream'}
    with open(imagePath, mode="rb") as test_image:
        try:
            response = requests.post(imageProcessingEndpoint, headers=headers, data=test_image)
            print("Response from local custom vision service: (" + str(response.status_code) + ") " + json.dumps(response.json()) + "\n")
        except Exception as e:
            print(e)
            print("No response from custom vision service")
            return None
    return response.json()

def main():
    global img
    print("Tello Custom Vision IoT Edge Demo")
    while True:
        # Read the keyboard and forward the control values to the drone
        vals = getKeyboardInput()
        me.send_rc_control(vals[0], vals[1], vals[2], vals[3])
        # Fetch the latest frame, overlay the drone state, and display it
        img = me.get_frame_read().frame
        img = cv2.resize(img, (1280, 720))
        cv2.putText(img, str(me.get_current_state()), (10, 60), cv2.FONT_HERSHEY_PLAIN, 0.9, (255, 0, 255), 1)
        cv2.imshow("image", img)
        cv2.waitKey(50)

if __name__ == "__main__":
    main()

As in the other articles in this series, we define a function getKeyboardInput that reads the user's keyboard input and returns the control parameters to the loop in the main function. It is essentially the same as the one in the article “Azure Cloud Services for Tello Drones: How to Control Tello by Azure C2D Messages”.
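The key-to-control mapping can be looked at separately from the drone and from Pygame. The following is a minimal sketch, not part of the article's code: the function name keys_to_rc and the idea of passing the pressed keys as a set are assumptions made for illustration. It returns the same [lr, fb, ud, yv] list that getKeyboardInput hands to the main loop and keeps the same priority when two opposite keys are pressed.

```python
# Hypothetical helper for illustration; keys_to_rc is not part of the article's code.
def keys_to_rc(pressed, speed=50):
    """Map a set of pressed key names to [lr, fb, ud, yv] control values."""

    def axis(first, first_value, second, second_value):
        # Mirrors the if/elif order in getKeyboardInput: the first key checked wins
        if first in pressed:
            return first_value
        if second in pressed:
            return second_value
        return 0

    lr = axis("LEFT", -speed, "RIGHT", speed)   # left / right
    fb = axis("UP", speed, "DOWN", -speed)      # forward / backward
    ud = axis("w", speed, "s", -speed)          # up / down
    yv = axis("a", speed, "d", -speed)          # yaw, same signs as the article
    return [lr, fb, ud, yv]


print(keys_to_rc({"UP", "a"}))        # [0, 50, 0, 50]
print(keys_to_rc({"LEFT", "RIGHT"}))  # [-50, 0, 0, 0]
```

Keeping the mapping in a pure function like this makes it possible to check the control values without powering on the Tello.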

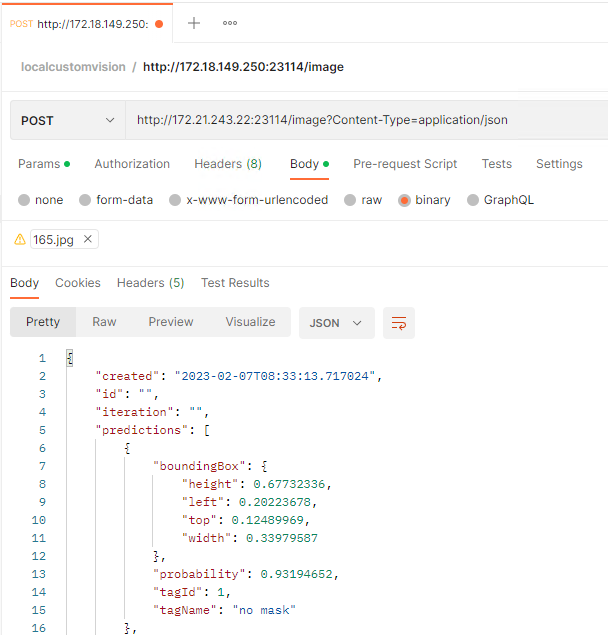

Before relying on the local Custom Vision service on the Azure IoT Edge device, we should verify that it is running normally. We can use Postman for this: send an HTTP POST request to “http://IP:Port/image?Content-Type=application/json” with a picture as binary data in the body. If the module works normally, you will receive a response like the one shown in Fig. 3.

Fig. 3 Debug HTTP Request and Response with Postman
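If Postman is not at hand, the same check could be scripted. The sketch below uses only the Python standard library; the function names build_image_request and check_module are assumptions made for illustration. It sets the Content-Type header to application/octet-stream, as the article's Python code does, instead of passing it in the query string.

```python
import urllib.request


def build_image_request(endpoint, image_bytes):
    """Build (but do not send) a POST request that carries raw image bytes."""
    return urllib.request.Request(
        endpoint,
        data=image_bytes,
        headers={"Content-Type": "application/octet-stream"},
        method="POST",
    )


def check_module(endpoint, image_path, timeout=10):
    """Send one image and return (HTTP status, response body as text)."""
    with open(image_path, "rb") as image_file:
        request = build_image_request(endpoint, image_file.read())
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read().decode("utf-8")


# Example call; it needs a reachable module, with IP and Port replaced as in the article:
# status, body = check_module("http://IP:Port/image", "Resources/Images/capture.jpg")
# print(status, body)
```

A timeout is passed to urlopen so that an unreachable device produces an error instead of blocking indefinitely.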

When we press “z” on the keyboard, the Python application first stores the current image in the “Resources/Images/” folder of the project. This folder must already exist, because cv2.imwrite does not create missing directories. The function sendFrameForProcessing is then called to send the image to the local Azure Custom Vision service and get its predictions. The results are displayed on the screen as soon as the application receives the reply.
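Since sendFrameForProcessing returns None when the service cannot be reached, it may help to read the reply defensively before using it. The following is a small sketch under that assumption: the helper names extract_predictions and summarize are hypothetical, and the sample reply is made up and contains only the keys that the article's code reads.

```python
import json


def extract_predictions(payload):
    """Return the list of predictions, or [] if the reply is missing or malformed."""
    if not isinstance(payload, dict):
        return []
    predictions = payload.get("predictions")
    return predictions if isinstance(predictions, list) else []


def summarize(payload):
    """Format each prediction as 'tagName: NN.NN%'."""
    return ["{0}: {1:.2f}%".format(p["tagName"], p["probability"] * 100)
            for p in extract_predictions(payload)]


# Made-up sample reply for illustration only
sample = json.loads('{"predictions": [{"tagName": "mask", "probability": 0.9312}]}')
print(summarize(sample))  # ['mask: 93.12%']
print(summarize(None))    # []
```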

Substitute the IP address and port number in the code with those of the IoT Edge device you set up earlier. Then power on the Tello and connect your PC to it over Wi-Fi. The LED on the Tello will flash yellow quickly. Press the Run or Debug button to start the program.

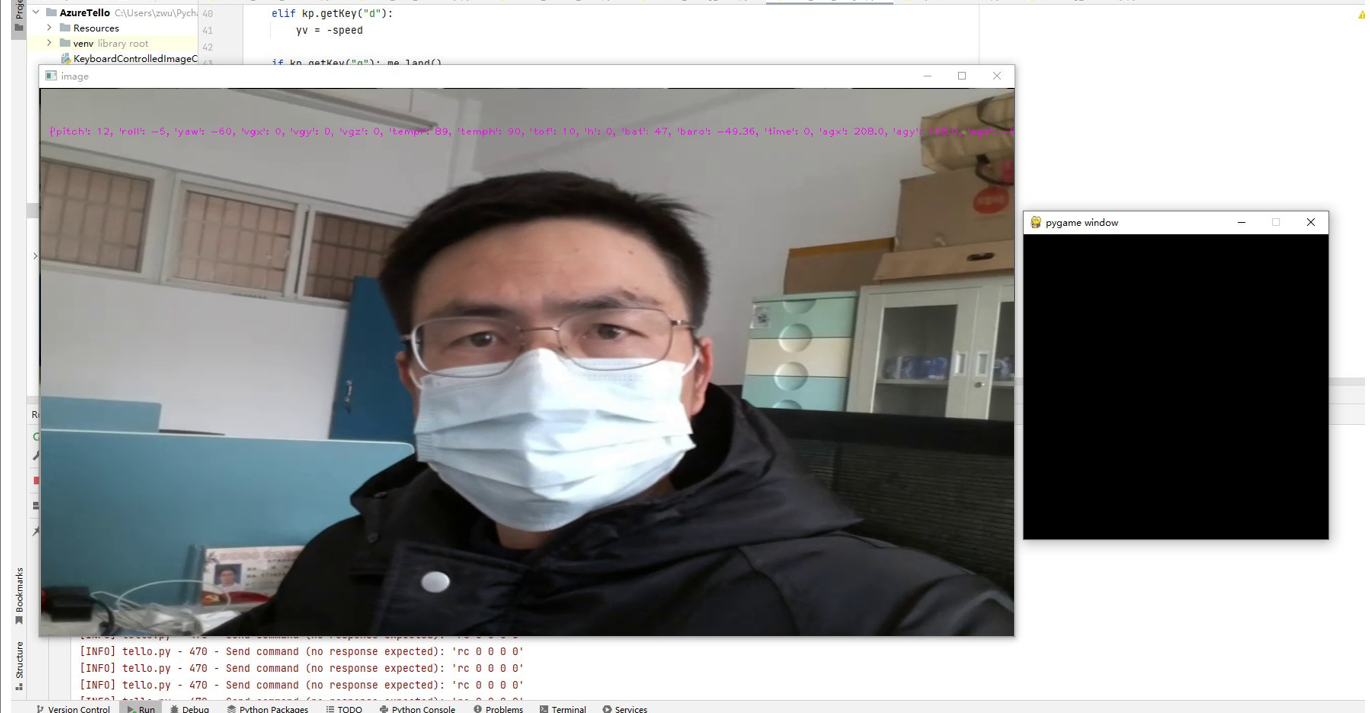

After a few seconds, the real-time image streamed from the Tello and the Pygame window appear on the screen, as presented in Fig. 4.

Next, click the Pygame window to give it keyboard focus. After that, we can use “w, s, a, d, e, q, up arrow, down arrow, left arrow, right arrow” to control the Tello drone. The device status of the Tello is written across the top of the image.

Fig. 4 Real time image and Pygame window
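The article's code writes the complete state onto the image as one long string. If a shorter overlay is preferred, a few entries could be picked out first. This is only a sketch: the function name format_status is hypothetical, and the key names in the sample ("bat", "h", "tof") are assumptions about what the state dictionary contains, so check them against the output of me.get_current_state() on your device.

```python
# Hypothetical helper for illustration; the field names below are assumptions.
def format_status(state, fields=("bat", "h", "tof")):
    """Build a short overlay string from selected entries of a state dict."""
    return "  ".join("{0}={1}".format(name, state[name])
                     for name in fields if name in state)


# Made-up sample state for illustration only
sample_state = {"bat": 87, "h": 40, "tof": 52, "yaw": -3}
print(format_status(sample_state))  # bat=87  h=40  tof=52
```

The resulting string could be passed to cv2.putText in place of str(me.get_current_state()).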

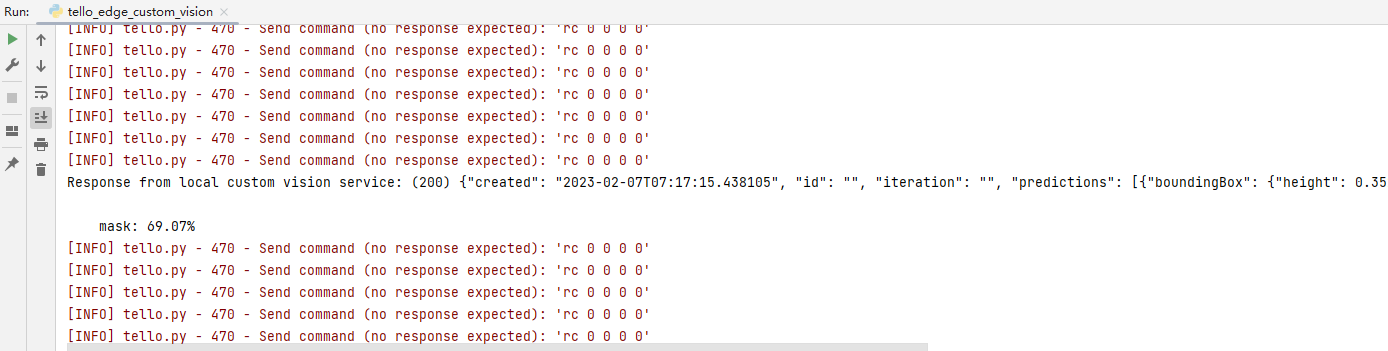

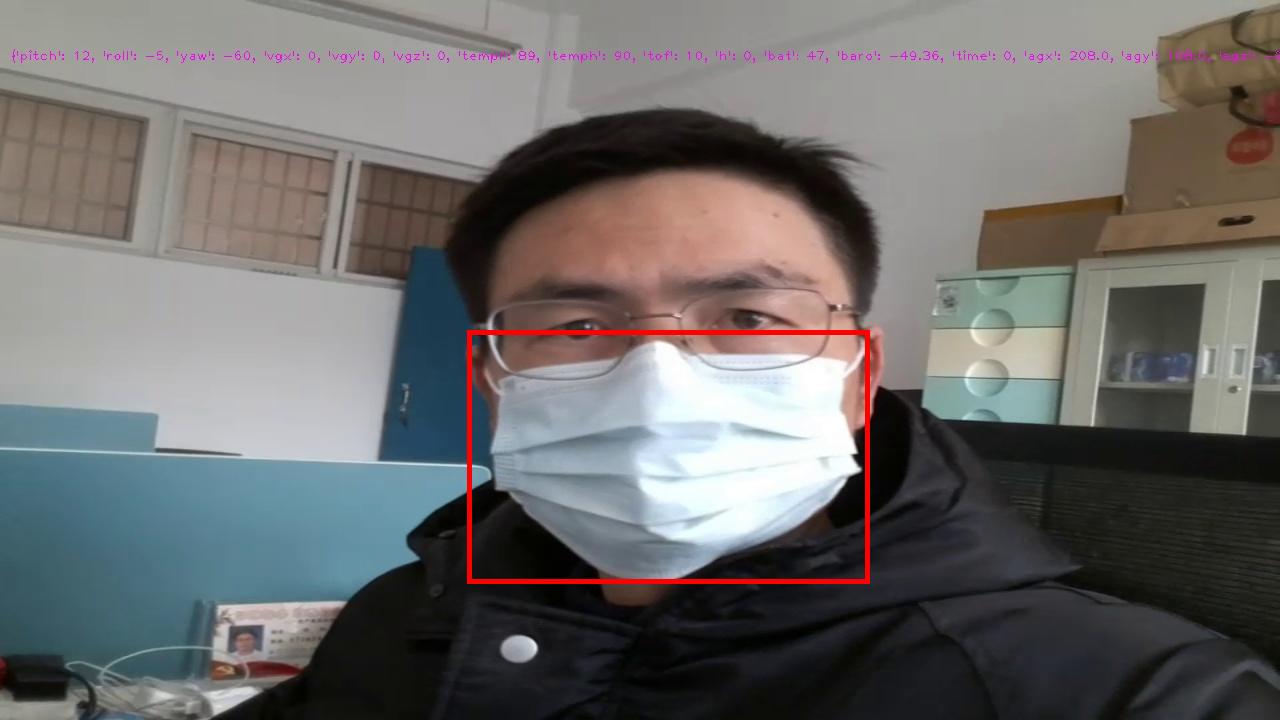

When we press “z” on the keyboard, the function sendFrameForProcessing is called to send the image to the local Azure Custom Vision service and get its predictions. In this project, we want to detect a masked face in the image, so I captured an image of myself wearing a mask. The predictions are printed in the output window, as shown in Fig. 5. The recognized object is highlighted with a red rectangle, and the image is stored as “results.jpg” in the “Resources/Images/” folder of the project, as shown in Fig. 6. If the probability of a prediction is not above 0.5, the object is not marked, and the unmodified image “capture.jpg” is displayed instead.

Fig. 5 Custom Vision Results of the captured image from Tello

Fig. 6 The image with Custom Vision Results
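The bounding box values in the reply are fractions of the image size, which is why the code multiplies them by 1280 and 720 before drawing. The sketch below isolates that arithmetic together with the 0.5 probability threshold; the function names to_pixel_box and boxes_above_threshold are hypothetical, and the sample prediction is made up.

```python
def to_pixel_box(bbox, image_width, image_height):
    """Convert a normalized bounding box to [left, top, right, bottom] in pixels."""
    left = int(bbox["left"] * image_width)
    top = int(bbox["top"] * image_height)
    right = int((bbox["left"] + bbox["width"]) * image_width)
    bottom = int((bbox["top"] + bbox["height"]) * image_height)
    return [left, top, right, bottom]


def boxes_above_threshold(predictions, image_width, image_height, threshold=0.5):
    """Keep only predictions whose probability exceeds the threshold."""
    return [(p["tagName"], to_pixel_box(p["boundingBox"], image_width, image_height))
            for p in predictions if p["probability"] > threshold]


# Made-up predictions for illustration only
sample_predictions = [
    {"tagName": "mask", "probability": 0.93,
     "boundingBox": {"left": 0.25, "top": 0.5, "width": 0.5, "height": 0.25}},
    {"tagName": "mask", "probability": 0.12,
     "boundingBox": {"left": 0.0, "top": 0.0, "width": 0.5, "height": 0.5}},
]
print(boxes_above_threshold(sample_predictions, 1280, 720))
# [('mask', [320, 360, 960, 540])]
```

The returned pixel list has the [left, top, right, bottom] order that ImageDraw's rectangle method accepts.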

Summary

In this tutorial, we presented the steps and Python code needed to deploy an Azure Custom Vision model to an Azure IoT Edge device, send images streamed from a Tello drone to the deployed model to get predictions, and display the prediction results.

Resources

- MS Docs for Azure Custom Vision.

- MS Docs for Azure IoT Edge.

- MS Docs for Perform image classification at the edge with Custom Vision Service.

See Also

- Azure Cloud Services for Tello Drones: How to send telemetry to Azure IoTHub

- Azure Cloud Services for Tello Drones: How to Control Tello by Azure C2D Messages

- Azure Cloud Services for Tello Drones: How to Config Tello by Azure Direct Methods

- Azure Cloud Services for Tello Drones: How to Send Images to Azure Blob Storage

- Azure Cloud Services for Tello Drones: How to Analyze Images by Azure Computer Vision

- Azure Cloud Services for Tello Drones: How to Use Azure Custom Vision Services

- Azure Cloud Services for Tello Drones: How to Send Recorded Videos to Azure Blob Storage