Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

Introduction

In this post we will read about how to deploy a data pipeline from Dynamics 365 to an Azure SQL Database using Azure Datafactory pipelines.

Azure Data Factory is a managed cloud data integration service. With this service, we can create automated pipelines to transform, analyze data, and much more.

Create a Data Factory Project

In the following steps, we will create a Data Factory project with one pipeline with a copy data activity and two datasets, a dynamics entity and an azuresqltable.

Step 1. Search for the Azure Data Factory service

Search for 'Data factory' service and click Enter.

Step 2. Create the service

Select Create to begin the creation of the service.

In the "New data factory" form, we must fill up all the mandatory fields.

| Setting | Value |

| Name | Type a valid name for the Data Factory service |

| Subscription | Select a valid Subscription |

| Resource Group | Select an existing or create a new Resource Group |

| Version | Select the Data Factory version, V2 |

| Location | Select a Location for the Data Factory service |

| Git Hub (If Enabled Then Needs Configuration) | |

| GIT URL | Type the URL of an existing Git Hub repository. For ex. https://github.com/myusername or https://myAccount.visualstudio.com/myProject |

| Repo name | Type the name of an existing repository. |

| Branch name | This is the name of an existing Git branch to use for collaboration, usually is master |

| Root folder | Folder in the collaboration branch where Factory's entities would be stored. For ex: '/factorydata'. '/' would indicate the root folder. |

Create a Pipeline

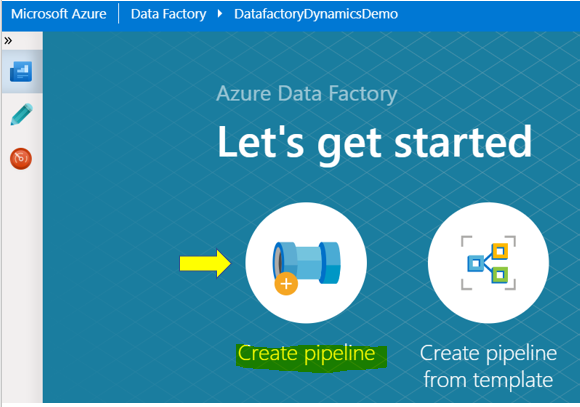

When the Data Factory deployment is completed we can start to deploy the pipeline. From the left main blade select Overview - Auditor & Monitor.

Step 1. Create pipeline

A new page will open and begin the deployment of the pipeline we have to select Create pipeline.

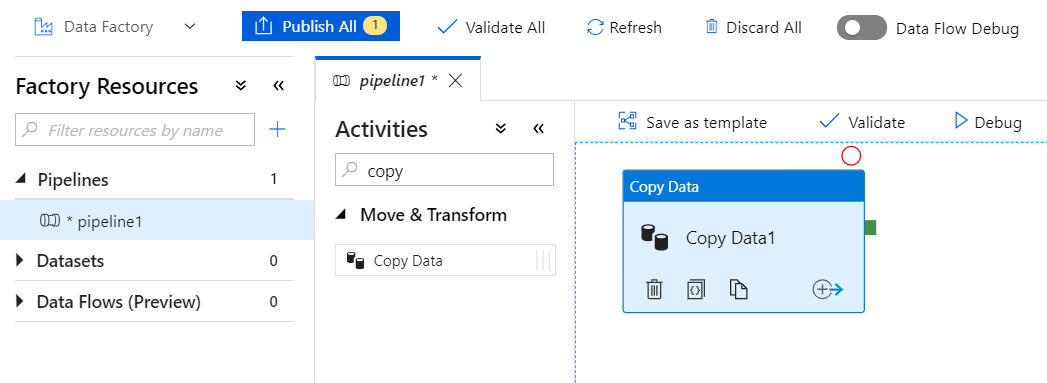

Step 2. Add Copy Data activity

From the Activities Search box, type "copy", and drag & drop the Copy Data activity.

Step 3. Configure the Source parameters

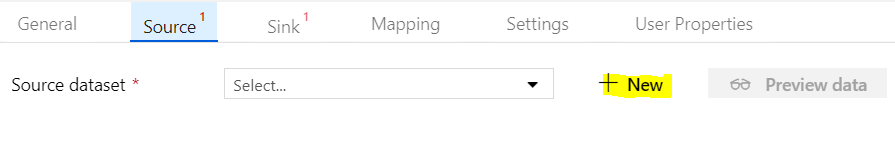

Then, select Source and from the Source dataset click +New, to add a Dynamics Data Store

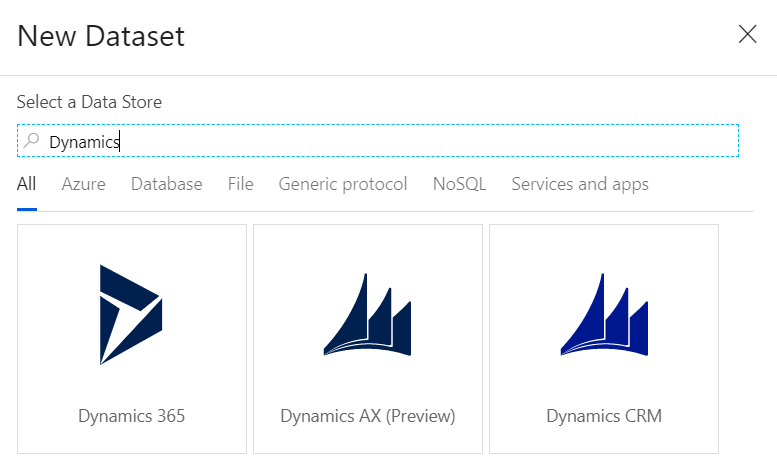

In the search box type "Dynamics", select Dynamics 365 and click Continue.

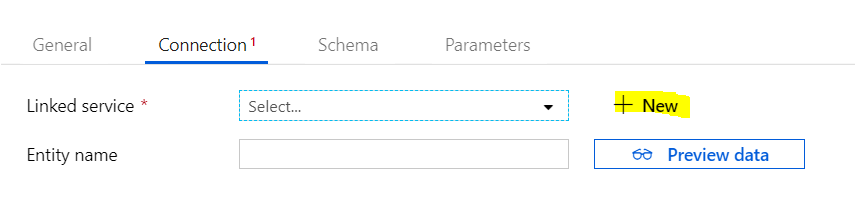

Click +New to add Linked service

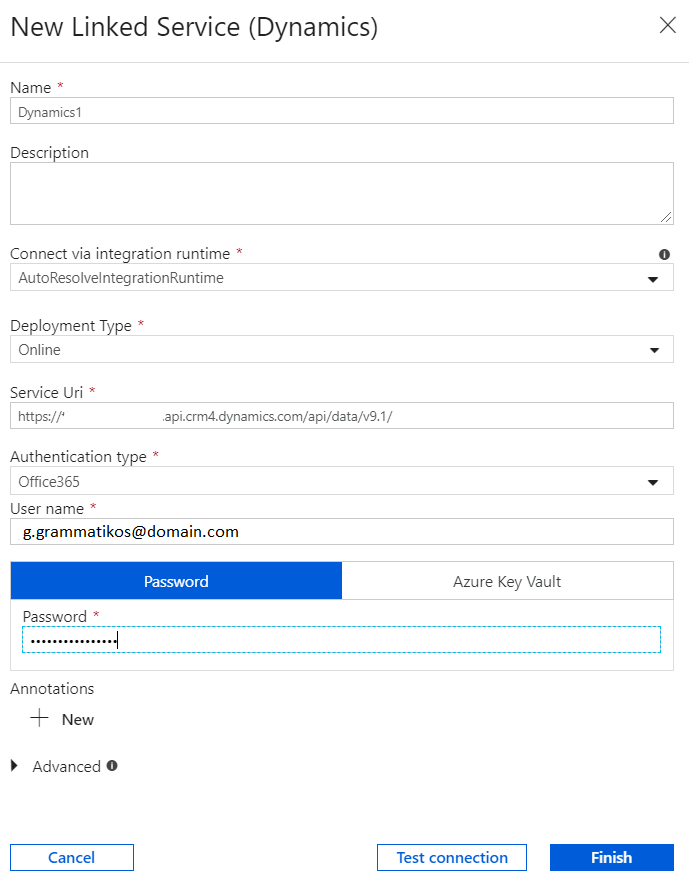

Type a Name for the New Linked Service, e.g Dynamics1 (default), type the Service Uri, Username, Password and then select Finish.

At this point we can Test connection and if works then select an Entity name, e.g Account.

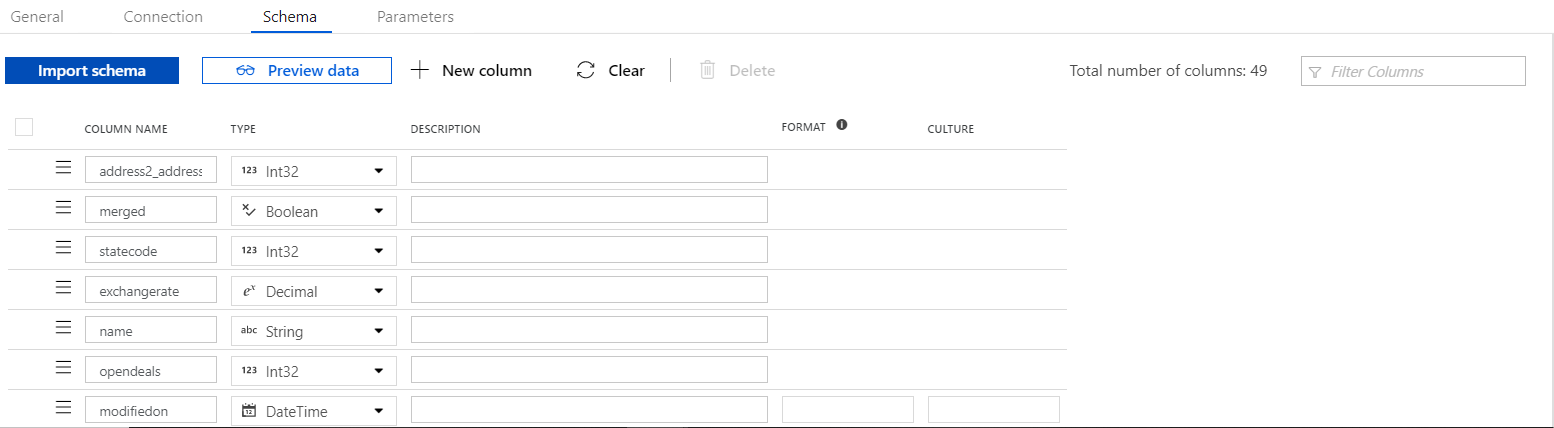

Select Schema and click Import schema, at this point we can keep or remove the unnecessary fields that we want to get data from Dynamics.

When we complete the changes, we wait for a few seconds and select the button Publish All.

Step 4. Configure the Sink parameters

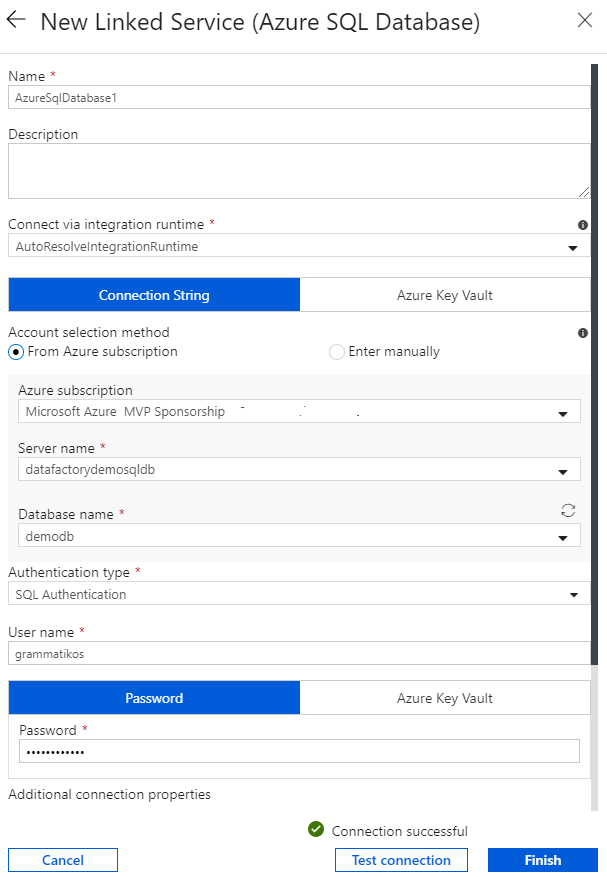

Type a **Name **for the New Linked Service, e.g AzureSqlDatabase1 (default), select a valid Azure Subscription, a Server name, a Database name, type Username, Password and then select Finish.

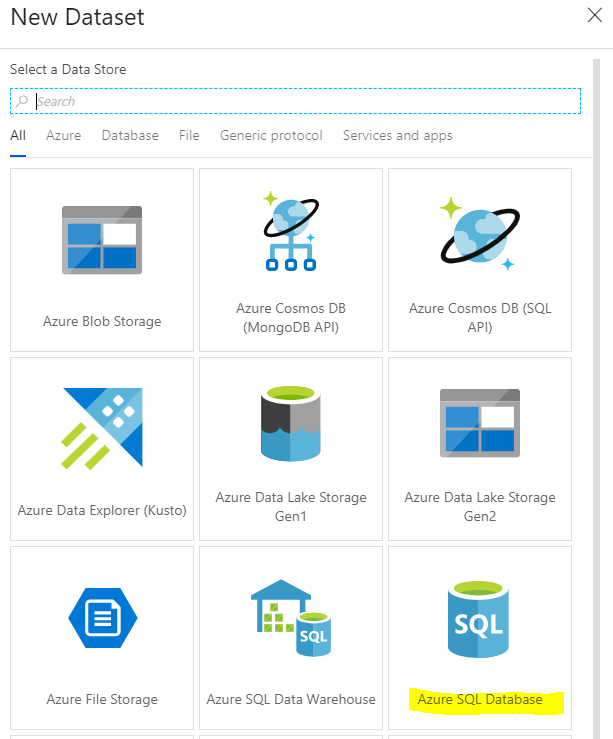

In the search box type "Azure SQL Database", select **Azure SQL Database **and click Continue.

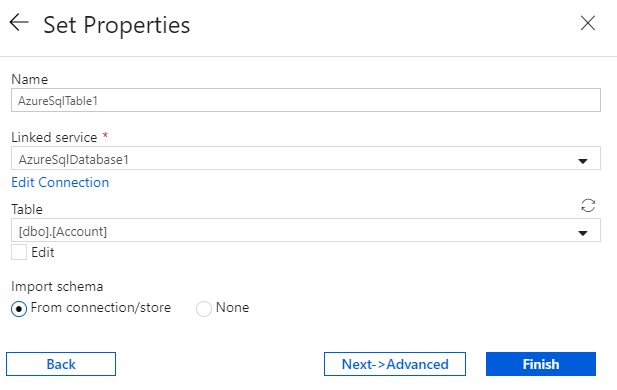

Next, we have to create the Dataset, type a Name, e.g AzureSqlTable1 (default), select Linked service, Table, click Import schema {From connection/store} and select Finish.

Select Schema, and click Import schema.

After a few seconds select Publish All.

Test the pipeline

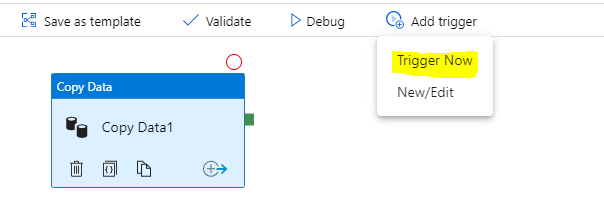

To test the pipeline, click Add trigger - Trigger Now.

After the Trigger executes the pipeline we must make sure that the execution was succeeded.

To make sure that the pipeline worked, open an SSMS and run a Select statement from the Azure SQL Database table (Accounts).

Schedule the Trigger

To schedule the Trigger run periodically, click Add trigger - New/Edit.

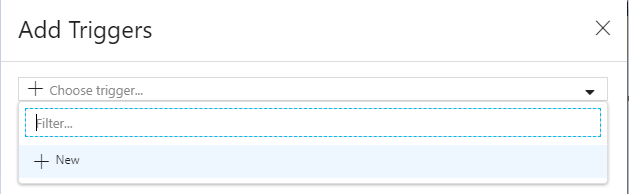

In the Add Triggers page, choose +New

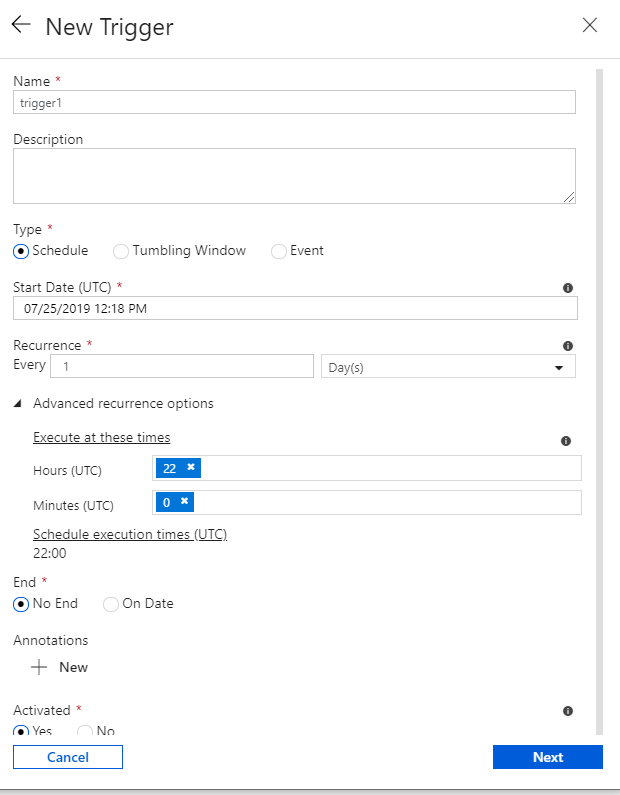

In the New Trigger page, type a Name, e.g trigger1 (default), leave the Type as Schedule (default), specify a Start Date (UTC) the Recurrence (Minutes, Hours, Days, Weeks, Months ). To execute the trigger on a specific time, from the Advanced recurrence options, set Hours and Minutes. Before click Next, we have to be sure that the trigger is Activated.

Select Finish, to save the scheduled task, and Publish it.

Conclusion

By using two simple connectors for the datasets we create a copy of dynamics entity to an Azure SQL table. This can be useful if we want to transform the data for a custom report e.g Power BI, Azure SQL, etc. Of course, their many other solutions that we can deploy using this service.