Azure Load Balancer: Features and Deployment Scenarios

Introduction

Azure Load Balancer offers a variety of services that suit multiple scenarios. An architect or Azure consultant needs to know which configuration fits which scenario.

In this article, we will explore the services offered by Azure Load Balancer and discuss the different kinds of deployment scenarios.

Important facts about Azure Load Balancer

- Azure Load Balancer is a Layer-4 load balancer. It works at the Transport Layer and supports the TCP and UDP protocols.

- Load Balancer can translate the IP address and port, but it cannot translate the protocol. If incoming traffic arrives over TCP, Load Balancer forwards it over TCP; if it arrives over UDP, Load Balancer forwards it over UDP.

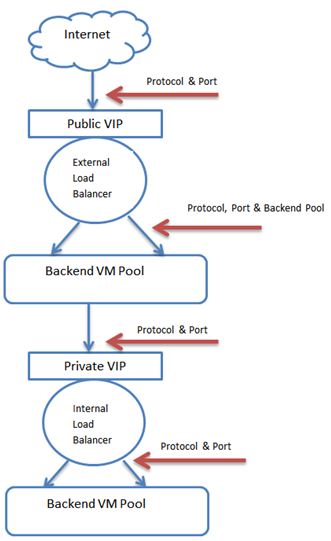

- Using Azure Load Balancer, we can deploy an External / Public Load Balancer (ELB) or an Internal Load Balancer (ILB), depending on the scenario. We will cover this in later sections.

- Load Balancers are attached to Azure Virtual Machines through the VM's network interface card (NIC).

- Most of the time traffic is inbound to Azure VMs. However, a public Load Balancer can also provide outbound connections for virtual machines inside the virtual network by translating their private IP addresses to public IP addresses.

- Azure Load Balancer comes in two different SKUs, Basic & Standard. Basic Load Balancer is free, while Standard Load Balancer involves charges.

- The SKU of the Public IP and the SKU of the Azure Load Balancer must be the same. You cannot attach a Basic IP address to a Standard Load Balancer, or a Standard IP address to a Basic Load Balancer.

- A virtual machine can be connected to one public and one internal Load Balancer resource.

Load Balancer Coverage

- You cannot attach Azure Load Balancer to Azure VMs in different regions; all VMs must be in the same Azure region. This is true for both Basic and Standard Load Balancer. For multi-region coverage, Microsoft has another offering called Azure Traffic Manager.

- Basic Load Balancer can cover VMs from a single Availability Set, a single Scale Set and a single Availability Zone. In other words, Basic Load Balancer can work only within a single Azure data center.

- Standard Load Balancer can include VMs from multiple Availability Sets and multiple Scale Sets.

- Standard Load Balancer can also include VMs from multiple Availability Zones. It supports cross-zone load balancing, which is the approach Microsoft recommends for surviving a zone failure.

- For an Internal Load Balancer, the private VIP is accessible from the Azure VNET as well as from the corporate network and other inter-connected VNETs, provided there is proper connectivity. This makes ILB extremely useful. For example, ILB can be used with a SQL Server Always On cluster, where the front-end port would be the Always On listener port.

- Starting from March 2019, Azure Global VNET Peering supports Standard Load Balancer. This means, you can reach the endpoint of an Internal Standard Load Balancer from another region, if these two regions are connected through Global VNET Peering. For more details please follow this link.

Basic and Standard Load Balancer

- Microsoft recommends Standard Load Balancer for large and complex deployments, as it enhances the capabilities of Basic Load Balancer and adds more features.

- Standard Load Balancer has wider coverage than Basic, as explained in the previous section. Standard Load Balancer supports Availability Zones. Basic Load Balancer, on the other hand, works only within a single Azure data center.

- Standard Load Balancer supports HTTPS Health Probe, which Basic Load Balancer does not support. They both support HTTP and TCP based Health Probes.

- Basic Load Balancer is free, while Standard Load Balancer involves charges.

- To get a full comparison between Standard and Basic SKUs, please follow this link.

- For details on configuring Standard Load Balancer across Availability Zones, please refer to this article.

How Azure Load Balancer Works

Below are the components of Azure Load Balancer:

| Component | Description |
|---|---|
| Front-end (VIP) | Front-end consists of 3 components. |
| Back-end Pool | VMs which would be load balanced. VM NICs would be attached to the Load Balancer. |
| Health Probe | To monitor app status. Requires parameters like Port, Path, Interval etc. |
| Load Balancer Rule | Load Balancer rule defines how the LB will behave, and consists of the below parameters: a) Front-end IP b) Protocol (TCP or UDP) c) Front-end Port d) Back-end Pool e) Back-end Port f) Health Probe g) Session Persistence |

Workflow

- Azure Load Balancer receives incoming traffic on its front-end virtual IP (VIP). For an external Load Balancer, the front-end VIP is a public IP. For an Internal Load Balancer, the front-end VIP is a private IP.

- The incoming traffic is received on the front-end port that has been configured. By default, the port is 80.

- Upon receiving the traffic, the Load Balancer checks the LB rule and, based on the rule, forwards the incoming traffic to the back-end pool on the specified back-end port. The back-end port can be the same as the front-end port or different, depending on the LB rule.

- To ensure that the VMs and applications in the back-end pool are up and running, Azure Load Balancer checks the health probe at a specific interval. The health probe dynamically adds or removes VMs from the load balancer rotation based on their response to health checks. By default, a VM is removed from the load balancer distribution after two consecutive failures at 15-second intervals. We create a health probe based on a protocol or a specific health check page for our app.
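The workflow above can be sketched as a small simulation. This is only an illustrative model, not how Azure implements it: the `LbRule` class, the `forward` function, the IP addresses and the round-robin style selection are all assumptions made for the example (the real selection is hash based, as described in the next section).

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class LbRule:
    """Hypothetical model of one load-balancing rule."""
    protocol: str                   # "TCP" or "UDP"
    frontend_ip: str                # the VIP
    frontend_port: int
    backend_port: int
    backend_pool: Tuple[str, ...]   # private IPs of the pool members


def forward(rules: List[LbRule], healthy: Dict[str, bool],
            protocol: str, dest_ip: str, dest_port: int,
            flow_index: int = 0) -> Optional[Tuple[str, str, int]]:
    """Return (protocol, backend_ip, backend_port) for a packet, or None.

    The protocol is passed through unchanged; only IP and port are rewritten.
    """
    for rule in rules:
        key = (rule.protocol, rule.frontend_ip, rule.frontend_port)
        if key == (protocol, dest_ip, dest_port):
            # Only instances that currently pass the health probe are eligible.
            candidates = [ip for ip in rule.backend_pool if healthy.get(ip, False)]
            if not candidates:
                return None
            # Placeholder selection (assumption); Azure uses a hash of the flow.
            backend = candidates[flow_index % len(candidates)]
            return (protocol, backend, rule.backend_port)
    return None  # no rule matches this front-end IP, port and protocol


rules = [LbRule("TCP", "203.0.113.10", 80, 8080, ("10.0.0.4", "10.0.0.5"))]
healthy = {"10.0.0.4": True, "10.0.0.5": False}

print(forward(rules, healthy, "TCP", "203.0.113.10", 80))  # ('TCP', '10.0.0.4', 8080)
print(forward(rules, healthy, "UDP", "203.0.113.10", 80))  # None
```

The second call returns nothing because the only rule is a TCP rule, which mirrors the point that Load Balancer does not translate the protocol.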

Distribution Mode

Distribution Mode defines how incoming traffic would be distributed across back-end Azure VMs.

In other words, Distribution Mode is the load balancing algorithm that decides which back-end VM the traffic is sent to.

Azure Load Balancer supports the following two distribution modes:

- Hash Based Distribution Mode (Default Mode)

- Source IP Affinity Mode (Session Affinity / Client Affinity)

In Hash Based Distribution Mode, Azure Load Balancer generates a hash value from the following 5-tuple:

- Source IP

- Source Port

- Destination IP

- Destination Port

- Protocol Type

In Hash Based Distribution, Azure Load Balancer offers stickiness only within the same session, not for a new session. This means that, as long as a session is running, traffic from the same source IP is always sent to the same back-end DIP (data-center IP). But if the same source IP starts a new session, traffic may be sent to a different DIP.

In Source IP Affinity Mode, Azure Load Balancer always sends traffic coming from a particular source to the same DIP endpoint, provided that the back-end server passes the health probe.

For more information on the distribution mode, please refer to this article.
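The difference between the two modes can be illustrated with a short sketch. The hash function (SHA-256), the modulo mapping, the helper names and the choice of source IP plus destination IP as the affinity key are assumptions for illustration only; Azure's actual hashing is not described in this article.

```python
import hashlib
from typing import List, Tuple

# source IP, source port, destination IP, destination port, protocol
FiveTuple = Tuple[str, int, str, int, str]


def _bucket(key: str, backend_count: int) -> int:
    """Map a key to a back-end index with a stable hash (illustrative only)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % backend_count


def pick_hash_based(flow: FiveTuple, backends: List[str]) -> str:
    """Default mode: every field of the 5-tuple feeds the hash."""
    key = "|".join(str(field) for field in flow)
    return backends[_bucket(key, len(backends))]


def pick_source_ip_affinity(flow: FiveTuple, backends: List[str]) -> str:
    """Affinity mode: ports are ignored, so new sessions from one client stick."""
    src_ip, _src_port, dst_ip, _dst_port, _protocol = flow
    return backends[_bucket(f"{src_ip}|{dst_ip}", len(backends))]


backends = ["10.0.0.4", "10.0.0.5", "10.0.0.6"]
# Eight sessions from the same client; each new session gets a new source port.
flows = [("198.51.100.7", port, "203.0.113.10", 80, "TCP")
         for port in range(50000, 50008)]

print(sorted({pick_hash_based(f, backends) for f in flows}))
print(sorted({pick_source_ip_affinity(f, backends) for f in flows}))  # one entry
```

Because a new session normally uses a new source port, the 5-tuple hash may land on a different back end, while the affinity key stays the same for every session from that client.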

Health Probes

**Health Probes do not monitor the health of Azure VMs; they monitor the applications hosted on those VMs. For the back-end servers to participate in the load balancer set, they must pass the probe check.**

- Azure Basic Load Balancer supports TCP and HTTP Health Probes. Azure Standard Load Balancer supports TCP, HTTP & HTTPS Health Probes.

- Health Probes originate from the IP address 168.63.129.16. This IP address must not be blocked, otherwise Health Probes cannot function.

- A TCP Probe fails when the TCP listener on the instance does not respond at all, or when the probe receives a TCP reset.

- An HTTP / HTTPS Probe fails when the endpoint returns any status code other than 200 (for example 403, 404 or 500), or when the probe endpoint does not respond at all during the 31-second timeout period. It can also fail if the probe endpoint closes the connection via a TCP reset.
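As a rough sketch of the probe logic described above, the following model classifies a single HTTP probe result and tracks consecutive failures. The names `http_probe_succeeded` and `ProbeState` are hypothetical, the threshold of two failures is the default mentioned in the Workflow section, and returning an instance to rotation after a single success is a simplifying assumption.

```python
from dataclasses import dataclass
from typing import Optional


def http_probe_succeeded(status_code: Optional[int],
                         connection_reset: bool = False) -> bool:
    """Evaluate one HTTP/HTTPS probe attempt.

    status_code is None when the endpoint did not answer before the timeout.
    """
    if connection_reset or status_code is None:
        return False
    return status_code == 200  # any other code (403, 404, 500, ...) is a failure


@dataclass
class ProbeState:
    """Tracks whether one back-end instance stays in the rotation."""
    unhealthy_threshold: int = 2   # consecutive failures before removal
    consecutive_failures: int = 0
    in_rotation: bool = True

    def record(self, success: bool) -> bool:
        """Record one probe result and return the new rotation status."""
        if success:
            # Assumption: one successful probe brings the instance back.
            self.consecutive_failures = 0
            self.in_rotation = True
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.unhealthy_threshold:
                self.in_rotation = False
        return self.in_rotation


state = ProbeState()
for code in (200, 500, None, 200):
    print(code, state.record(http_probe_succeeded(code)))
```

The instance stays in rotation after the first failure (500), drops out after the second consecutive failure (the timeout), and comes back once the application answers with 200 again.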

Please refer to this article for more information on Health Probes.

High Availability Ports

High Availability Ports can only be configured on an Internal Standard Load Balancer. Basic Load Balancer and External Load Balancer do not support this configuration.

HA Ports is one of the most useful features that an Internal Standard Load Balancer offers. There are many scenarios where we have to load balance incoming traffic across all ports and protocols. Before the HA Ports feature was available, this was difficult to configure, because by default load balancing rules work on this principle: "If incoming traffic arrives on port X using protocol Y, forward the traffic to port X1 using protocol Y". Under this principle, we have to create a separate rule for each port and protocol.

The HA Ports feature solves this issue. When HA Ports is configured, we can define one single rule that covers all ports and protocols. The Internal Load Balancer then load balances all TCP and UDP flows, regardless of port number. In fact, when you select the option "HA Ports" during Load Balancer rule creation, the source port, destination port and protocol options disappear.

The HA Ports feature is used in the following scenarios:

- High Availability

- Scale for network virtual appliances (NVAs) inside virtual networks.

To configure HA Ports, we need to specify the following things:

- Front-end port and back-end port = 0

- Protocol = All
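To make the contrast with per-port rules concrete, here is a minimal sketch of how a rule with port 0 and protocol "All" could be interpreted. The `HaRule` class and the `matches` function are hypothetical and only model the behaviour described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HaRule:
    """Hypothetical rule model: port 0 with protocol "All" means HA Ports."""
    protocol: str        # "TCP", "UDP" or "All"
    frontend_port: int
    backend_port: int


def is_ha_ports(rule: HaRule) -> bool:
    """An HA Ports rule uses protocol All and port 0 on both sides."""
    return (rule.protocol == "All"
            and rule.frontend_port == 0
            and rule.backend_port == 0)


def matches(rule: HaRule, protocol: str, dest_port: int) -> bool:
    """Does an incoming flow fall under this rule?"""
    if is_ha_ports(rule):
        return protocol in ("TCP", "UDP")   # every port, both protocols
    return rule.protocol == protocol and rule.frontend_port == dest_port


classic = HaRule("TCP", 443, 443)
ha_ports = HaRule("All", 0, 0)

for proto, port in (("TCP", 443), ("TCP", 22), ("UDP", 53)):
    print(proto, port, matches(classic, proto, port), matches(ha_ports, proto, port))
```

The classic rule covers only TCP 443, so three separate rules would be needed for these three flows, while the single HA Ports rule matches all of them.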

Please refer to this article for more information on the High Availability (HA) Ports rule, and to **this article** to configure HA Ports in an ILB.

Port Forwarding (NAT Rules)

Azure Load Balancer supports port forwarding through the configuration of Network Address Translation (NAT) rules.

Using the port forwarding feature, we can connect to VMs in an Azure VNET through the Load Balancer's public IP address. The Load Balancer receives the traffic on a certain port and, based on the NAT rule, forwards the traffic to a specific VM on a specific port.

Please note that while a Load Balancer rule is attached to multiple VMs in the back-end pool, a NAT rule is attached to only one back-end VM. So when you have to connect to a single VM through a certain port, use a NAT rule instead of a Load Balancer rule.
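A NAT rule can be pictured as a one-to-one lookup from a front-end port to a single VM and port. The sketch below is only an illustration; the port numbers, the private IP addresses and the `apply_nat` helper are assumptions, with RDP (3389) chosen as a typical back-end port.

```python
from typing import Dict, Optional, Tuple

# (protocol, front-end port) -> (back-end VM private IP, back-end port)
NatTable = Dict[Tuple[str, int], Tuple[str, int]]

nat_rules: NatTable = {
    ("TCP", 50001): ("10.0.0.4", 3389),   # RDP to the first VM
    ("TCP", 50002): ("10.0.0.5", 3389),   # RDP to the second VM
}


def apply_nat(rules: NatTable, protocol: str,
              frontend_port: int) -> Optional[Tuple[str, int]]:
    """Return the single target of a NAT rule, or None if no rule matches."""
    return rules.get((protocol, frontend_port))


print(apply_nat(nat_rules, "TCP", 50001))  # ('10.0.0.4', 3389)
print(apply_nat(nat_rules, "TCP", 50002))  # ('10.0.0.5', 3389)
print(apply_nat(nat_rules, "TCP", 50003))  # None
```

Each front-end port maps to exactly one VM, which is the difference from a Load Balancer rule, where one front-end port maps to the whole back-end pool.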

To configure Port Forwarding, please refer to this article.

Outbound Connection

So far we have discussed Load Balancing and NATing incoming traffic flowing towards Azure VMs, but Azure Load Balancer can also be used for the outbound connection scenario.

Let’s consider a scenario where an Azure VM wants to communicate with the outside world using a public IP address. There are multiple solutions available, depending on the scenario:

Scenario 1

If the Azure VM has an Instance-Level Public IP Address (ILPIP), it will always communicate with the outside world using that IP address. In this scenario, even if the VM is behind a Load Balancer, it will not use the Load Balancer's front-end IP address but its own ILPIP.

Scenario 2

In this scenario, the Azure VM is behind a Public Load Balancer and does not have an ILPIP. If the Load Balancer rule is properly configured, the Public Load Balancer will translate the outgoing traffic from the VM's private IP address to the Load Balancer's front-end IP address, using SNAT.

Port masquerading SNAT (PAT)

Port masquerading is an algorithm that Azure uses to masquerade (disguise) the source when a single VM or multiple VMs communicate with the outside world.

Let’s assume a scenario where multiple VMs are attached behind a Public Load Balancer, and none of the VMs has its own public IP (ILPIP). As discussed before, Azure Load Balancer will translate the private IP addresses to its front-end address. However, since a single public IP address is used to represent multiple private IP addresses, Azure uses ephemeral ports (SNAT ports) to tell the individual flows apart.
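The idea of port masquerading can be sketched with a small translation table. This is a simplified model under stated assumptions: the `SnatTable` class, the port range and the first-free allocation strategy are hypothetical and do not reflect how Azure actually allocates SNAT ports.

```python
from typing import Dict, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


class SnatTable:
    """Toy PAT table: many private endpoints share one public IP."""

    def __init__(self, frontend_ip: str,
                 first_port: int = 1024, last_port: int = 65535) -> None:
        self.frontend_ip = frontend_ip
        self._next_port = first_port
        self._last_port = last_port
        self._out: Dict[Endpoint, Endpoint] = {}   # private -> public
        self._back: Dict[int, Endpoint] = {}       # public port -> private

    def translate_outbound(self, private: Endpoint) -> Endpoint:
        """Rewrite a private source endpoint to the shared front-end IP."""
        if private in self._out:
            return self._out[private]              # reuse the existing mapping
        if self._next_port > self._last_port:
            raise RuntimeError("SNAT ports exhausted")
        public = (self.frontend_ip, self._next_port)
        self._next_port += 1
        self._out[private] = public
        self._back[public[1]] = private
        return public

    def translate_reply(self, public_port: int) -> Endpoint:
        """Find the private endpoint a reply on this public port belongs to."""
        return self._back[public_port]


table = SnatTable("203.0.113.10")
print(table.translate_outbound(("10.0.0.4", 40000)))  # ('203.0.113.10', 1024)
print(table.translate_outbound(("10.0.0.5", 40000)))  # ('203.0.113.10', 1025)
print(table.translate_reply(1025))                    # ('10.0.0.5', 40000)
```

Both VMs appear to the outside world as the same public IP address; only the ephemeral port distinguishes their flows. The sketch also shows why the number of available ports limits the number of simultaneous outbound flows.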

Scenario 3

In this scenario, there is no Load Balancer and no Instance-Level Public IP Address. When we configure the outbound connection in this scenario, the VM will use an arbitrary public IP address to communicate with the outside world. This IP address is dynamically assigned by Azure and we do not have any control over it. For more information on configuring the outbound connection using Load Balancer, please refer to this link.
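The three scenarios boil down to an order of precedence, which can be written as a small helper. The function name, its parameters and the `"platform-assigned"` placeholder string are hypothetical and serve only to summarize the scenarios above.

```python
from typing import Optional


def outbound_source(ilpip: Optional[str],
                    public_lb_frontend: Optional[str]) -> str:
    """Return the public address a VM's outbound traffic appears to come from."""
    if ilpip is not None:
        # Scenario 1: the VM's own ILPIP wins, even behind a Load Balancer.
        return ilpip
    if public_lb_frontend is not None:
        # Scenario 2: SNAT to the Public Load Balancer's front-end IP.
        return public_lb_frontend
    # Scenario 3: Azure picks an address that we do not control.
    return "platform-assigned"


print(outbound_source("198.51.100.20", "203.0.113.10"))  # 198.51.100.20
print(outbound_source(None, "203.0.113.10"))             # 203.0.113.10
print(outbound_source(None, None))                       # platform-assigned
```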

Azure Load Balancer with multiple Front-ends

Azure Load Balancer provides flexibility in defining the load balancing rules, which can be leveraged in multiple scenarios.

Azure Load Balancer provides an option to configure the same back-end port across multiple rules within a single Load Balancer. This is called back-end port reuse.

There are two types of rules:

- The default rule with no back-end port reuse.

- The Floating IP rule where back-end ports are reused.

Floating IP and Direct Server Return (DSR)

Floating IP and DSR are used when the same back-end port needs to be used across multiple rules in a single Load Balancer.

As Microsoft mentions, if you want to reuse the same back-end port across multiple rules, you must enable Floating IP (DSR) in the rule definition.
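The constraint above can be expressed as a simple configuration check. The sketch below is an assumption-laden illustration: the `PortRule` class, the rule names and the choice to group rules by back-end pool, protocol and back-end port are hypothetical, not an Azure API.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class PortRule:
    """Hypothetical rule model used only for this validation sketch."""
    name: str
    protocol: str
    backend_pool: str
    backend_port: int
    floating_ip: bool = False


def port_reuse_errors(rules: List[PortRule]) -> List[str]:
    """List rules that reuse a back-end port without Floating IP enabled."""
    groups: Dict[Tuple[str, str, int], List[PortRule]] = defaultdict(list)
    for rule in rules:
        groups[(rule.backend_pool, rule.protocol, rule.backend_port)].append(rule)

    errors: List[str] = []
    for (pool, protocol, port), members in groups.items():
        if len(members) < 2:
            continue  # the port is used by one rule only, nothing to check
        for rule in members:
            if not rule.floating_ip:
                errors.append(
                    f"{rule.name}: back-end port {protocol}/{port} is reused "
                    f"in pool {pool} without Floating IP"
                )
    return errors


rules = [
    PortRule("listener-a", "TCP", "sql-pool", 1433, floating_ip=True),
    PortRule("listener-b", "TCP", "sql-pool", 1433, floating_ip=False),
    PortRule("web", "TCP", "sql-pool", 80),
]
for message in port_reuse_errors(rules):
    print(message)
```

Only `listener-b` is reported: it shares back-end port 1433 with `listener-a` but does not have Floating IP enabled, while `web` uses its back-end port alone.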

When Floating IP / DSR is being implemented, the Front-end IP is configured within the Azure VM guest OS, and not in the Load Balancer.

The main difference between DSR and the traditional load balancing approach is that in DSR the destination IP address of the incoming traffic (the front-end IP) is not translated to the back-end DIP. In other words, there is no NATing, and the front-end IP address is configured directly in the VM's guest OS. If there is more than one VM behind the load balancer, every VM is configured with the same front-end IP address. The interface to which the front-end IP address is assigned is called the loopback interface.

In addition to the loopback interface, there is one DIP (Destination IP) per VM, which is configured in Azure and not within the OS. The purpose of this DIP is to serve the health probe so that the Load Balancer can function.

Also, when the traffic returns, it returns directly to the original address from which the traffic originated, not to the load balancer. This is true for normal Load Balancer operation, even if DSR is not implemented. That is why Microsoft says: At a platform level, Azure Load Balancer always operates in a DSR flow topology regardless of whether Floating IP is enabled or not. This means that the outbound part of a flow is always correctly rewritten to flow directly back to the origin.

Please do not confuse return traffic with the outbound connection, which we have discussed earlier. Outbound connection is a separate configuration, where a VM behind a Load Balancer originates the connection and sends traffic to the outside world.

This Microsoft article discusses these scenarios in detail.

A classic example of DSR deployment is SQL Always On Availability Group. If you need to deploy Azure Load Balancer to support SQL Always On Availability Group, please consider reading this article.

Summary

We have discussed most of the features and scenarios related to Load Balancer; now it's time to put everything together. I hope the table below helps architects and designers choose the right product for each scenario.

| Scenario | Proposed Solution |
|---|---|
| Load-balance incoming Internet traffic to Azure VMs within a single region. | Public Load Balancer (Basic / Standard) |
| Load Balance traffic across Azure VMs within Azure VNET (Including traffic from on premise) within a single region. | Internal Load Balancer (Basic / Standard) |
| Forward traffic to a specific port on a specific VM behind the load balancer (not to any VM, but to a specific VM). | Configure NAT Rule. |
| VM needs to communicate to outside world, and it does not have its own public IP address. | Public Load Balancer (Basic / Standard), outbound connection with Source NAT (SNAT). |
| Need multiple Front-ends, but back-end port would not be reused across multiple rules. Each rule will use different back-end port. | It is possible to configure multiple front-ends in a single Azure Load Balancer. Basic / Standard and Internal / External support this configuration. |
| Reuse Back-end Ports across multiple rules in a single Load Balancer. In other words, same back-end port needs to be used in more than one rule. Ex: Cluster High Availability, Network Virtual Appliance (NVA) | Enable Floating IP (DSR) in the rule definition. Basic / Standard and Internal / External support this configuration. Please note that without enabling Floating IP (DSR), you cannot re-use same back-end port for multiple rules. |
| Need to define one single Load Balancing rule, which will be applicable for all ports and protocols and not just for a specific port / protocol. | Configure High Available (HA) Ports. Only Internal Standard Load Balancer supports this configuration. |
| Load-balance incoming Internet traffic to Azure VMs which are in different Azure Regions. | Azure Load Balancer does not support this scenario, as Load balancer works only within single region. You can use Azure Traffic Manager in this scenario. |
| Load Balance traffic based on resource utilization. For example, when on premise server would be overloaded, the remaining traffic would be diverted to Azure VMs without any service interruption. (Burst to Cloud) | Azure Load Balancer does not support this scenario. Use Azure Traffic Manager. |
| Route Traffic based on incoming URL, and not based on source IP address or Port. | Azure Load Balancer does not support this scenario. Use Azure Application Gateway. |

See Also

- Azure Load Balancer Overview

- Standard and Basic Load Balancer

- Standard Load Balancer and Availability Zones

- Load balance Windows virtual machines in Azure to create a highly available application with Azure PowerShell

- Load balance VMs across availability zones with a Standard Load Balancer using the Azure portal

- Internal Load Balancing

- Multiple Front-ends for Azure Load Balancer

- Outbound connections in Azure

- Using load-balancing services in Azure

- High availability ports overview

- Configure High Availability Ports for an internal load balancer

- Troubleshoot Azure Load Balancer