Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

1 Introduction

In a previous series (see: Migrating BizTalk Maps to Azure Logic Apps: Shortcomings & Solutions part 1 & part 2) was described how BizTalk maps could be migrated to a Logic App Integration Account. Several limitations were highlighted with the Transform XML action, and alternatives or workarounds were suggested. Since then there have been a few updates to the Transform XML action: support for external assemblies and non-XML output, and now the XSLT 2.0/3.0 processor is in preview. So, it now seems a good time to review and provide an update.

In addition to these XSLT processors there is also the support for Liquid templates, consequently there are now three different options (or four if you include Azure Functions) available to the developer when implementing message translation patterns. It may seem strange to distinguish XSLT 1.0 from XSLT 2.0/3.0, but there are two good reasons for this: first, XSLT 2.0 is not backwards compatible with XSLT 1.0 (unless a vendor optionally implements a backwards compatibility mode) and, second, the new XSLT 2.0/3.0 support in Logic Apps has come about not by enhancements to the Microsoft XSLT processor, but by implementing the SAXON HE (9.8.0.8) open source XSLT processor.

If one were to generalize the transformation abilities of each option, it would look something like the table below:

| Source - Target | Liquid | XSLT 1.0 | XSLT 2.0/3.0 |

| JSON - JSON | Y | N | N/Y |

| JSON - XML | N | N | N/Y |

| JSON - TEXT | Y | N | N/Y |

| XML - XML | N | Y | Y/Y |

| XML - JSON | Y | Y | Y/Y |

| XML - TEXT | Y | Y | Y/Y |

| TEXT - TEXT | N | N | Y/Y |

| TEXT - JSON | N | N | Y/Y |

| TEXT - XML | N | N | Y/Y |

There are, however, a few caveats to these. For example, in XSLT 1.0 it is possible to use an msxsl:script to reference the Newtonsoft.Json assembly. This assembly contains a class JsonConvert with several methods to convert JSON to XML. Now, the Transform XML action will validate the input is well formed XML and throw an exception if it is not, but if the JSON input is passed as an XSLT parameter it is possible to convert it to XML, transform it, and convert the XML result back to JSON. So, while it is technically possible, realistically, using Liquid would be the sensible choice in most cases. In a similar manner, while XSLT 2.0/3.0 can read text files and produce text output in most cases where the data is delimited or positional it will be easier to implement a 2-step process and use the Logic App Flat File Decoding action to convert the input to XML and pass the result to the XSLT 2.0/3.0 map.

The addition of these three new features to the Transform XML action helps us greatly in migrating the more complex BizTalk maps, in particular, those that use database functoids, custom functoids, and external assemblies from the scripting functoid. It means that in all the scenarios described in the series all can now be migrated to the Logic Apps Integration Account removing any reliance on the use of an Azure Function. The following three sections will review each of these new features.

2 External Assemblies

The ability to upload external assemblies was announced on the Logic Apps live webcast in February 2018, and an example on how to implement it with a XSLT transform was provided a month later. The external assembly must be uploaded to the Integration Account before any XSLT file that makes calls to the custom .NET code. The reason for this is because when the XSLT file is uploaded it is compiled (the XSL into one assembly, the inline C# code into another), and hence all the references must be resolved before the compilation can succeed. This compilation process is the reason why, if you attempt to run a Logic App that uses the XSLT map immediately after uploading, you may receive the error: “MapNotReady. The map 'xxxxxxxxxxx' is still being processed. Please try again later.”

The example linked to earlier uses the msxsl:assembly and msxsl:using elements to reference and use the assembly. However, it is also possible to load and reference the assembly at run-time without using either of these elements. In this case the order of uploading does not matter, to see how this is done see the example here . Finally, behind the scenes the transformation actions are hosted in a separate Azure Web Apps environment (https://xslt-*****.azurewebsites.net where ***** is your 32 character Integration Account ID, found in the Callback URL property e.g. https://prod-22.northcentralus.logic.azure.com:443/integrationAccounts/*****? ...), but it’s not accessible via Kudu.

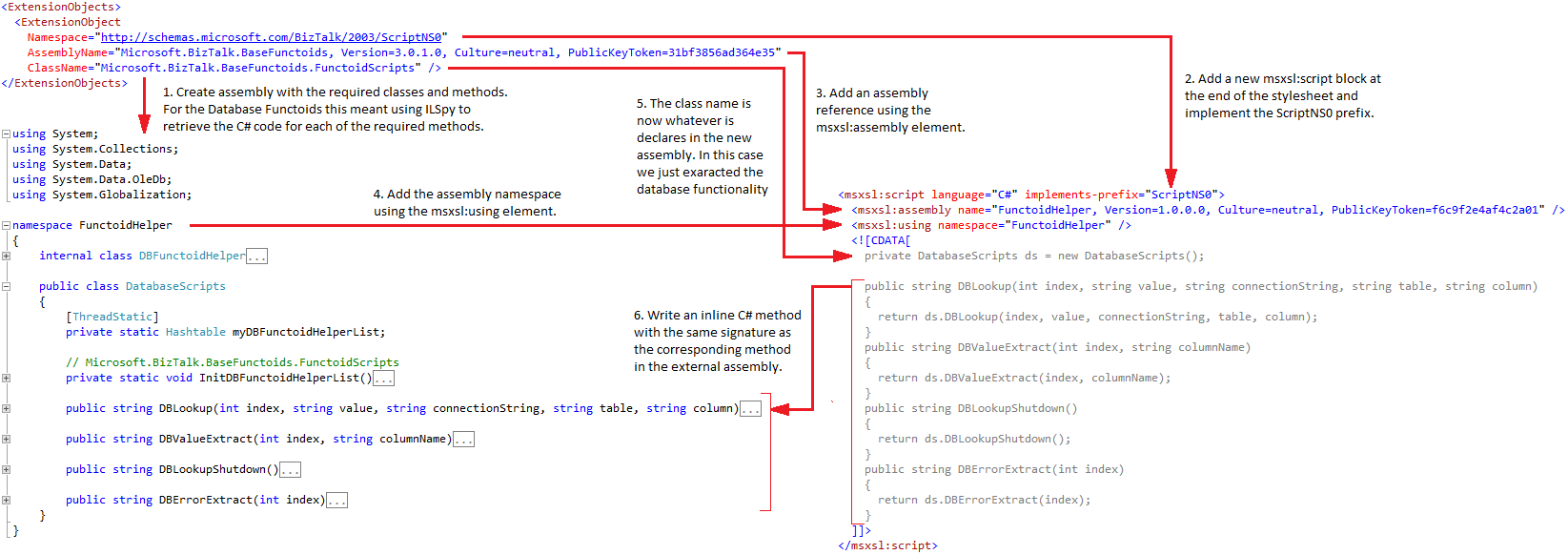

In part 2 #7 an example was given that on how to migrate a BizTalk map that utilized Database Functoids using an Azure Function, but one important note to make is that there were no changes to the XSLT map. The goal in all these migration scenarios is to keep the XSLT changes to a minimum; the fewer the changes the less likelihood of introducing errors. But if we follow the example first given on how to call custom assembly .NET code, it would seem some changes will now be required to the XSLT when it is migrated.

Thinking a little deeper on how the BizTalk Visual Studio map links the XSLT and external assemblies it becomes clear that the real issue isn’t the migration of the XSLT map but rather the migration of the Custom Extension XML file that the BizTalk Mapper generates:

<ExtensionObjects>

<ExtensionObject

Namespace="http://schemas.microsoft.com/BizTalk/2003/ScriptNS0"

AssemblyName="Microsoft.BizTalk.BaseFunctoids, Version=3.0.1.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"

ClassName="Microsoft.BizTalk.BaseFunctoids.FunctoidScripts" />

</ExtensionObjects>

Understanding how this ‘link’ works is the key to the migration. Basically the BizTalk Mapper parses the XML file and creates an XsltArgumentList object that is passed as a parameter to a XslCompiledTransform class’ Transform method (see here for more details).

At this point the Visual Studio BizTalk Mapper becomes a hinderance since we need to move away from using this XML file whereas the BizTalk Mapper relies on it. Rather what we need to do now is to invoke the XSLT processor from the XML | Start XSLT Debugging option on the Visual Studio menu-bar when using our XSLT map. But first we need to build our assembly to upload. Now, normally for custom assemblies there will be no change but in this instance, since we are dealing with the database functoids, we need to create a new assembly with the required classes and methods (see Appendix A) extracted from the Microsoft.BizTalk.BaseFunctoids assembly using ILSpy.

Finally, it may not have been obvious, but we are not limited to a single code block i.e. msxsl:script element in XSLT. So, the solution is to move the Custom Extension XML ‘functionality’ into a separate code block and append it to the XSLT. The migration process is as follows and includes six steps:

The new msxsl:script element can be simply appended to the end of the XSLT as shown below:

<?xml version="1.0" encoding="utf-16"?>

<xsl:stylesheet xmlns:xsl="http://www.w3.org/1999/XSL/Transform" xmlns:msxsl="urn:schemas-microsoft-com:xslt" xmlns:var="http://schemas.microsoft.com/BizTalk/2003/var" exclude-result-prefixes="msxsl var s0 userCSharp ScriptNS0" version="1.0" xmlns:s0="http://AzureBizTalkMapsDemo/Person" xmlns:ns0="http://AzureBizTalkMapsDemo/Contact" xmlns:userCSharp="http://schemas.microsoft.com/BizTalk/2003/userCSharp" xmlns:ScriptNS0="http://schemas.microsoft.com/BizTalk/2003/ScriptNS0">

<xsl:output omit-xml-declaration="yes" method="xml" version="1.0" />

<xsl:template match="/">

<xsl:apply-templates select="/s0:Person" />

</xsl:template>

<xsl:template match="/s0:Person">

<xsl:variable name="var:v1" select="userCSharp:StringTrimRight("")" />

<ns0:Contact>

<Title>

<xsl:value-of select="Title/text()" />

</Title>

<Forename>

<xsl:value-of select="Forename/text()" />

</Forename>

<Surname>

<xsl:value-of select="Surname/text()" />

</Surname>

<Street>

<xsl:value-of select="$var:v1" />

</Street>

<City>

<xsl:value-of select="$var:v1" />

</City>

<State>

<xsl:value-of select="$var:v1" />

</State>

<ZipCode>

<xsl:value-of select="$var:v1" />

</ZipCode>

<xsl:variable name="var:v2" select="ScriptNS0:DBLookup(0 , string(ID/text()) , "Provider=SQLOLEDB; Data Source=.;Initial Catalog=Demo;Integrated Security=SSPI;" , "Customers" , "ID")" />

<xsl:variable name="var:v3" select="ScriptNS0:DBValueExtract(string($var:v2) , "Phone")" />

<Phone>

<xsl:value-of select="$var:v3" />

</Phone>

</ns0:Contact>

<xsl:variable name="var:v4" select="ScriptNS0:DBLookupShutdown()" />

</xsl:template>

<msxsl:script language="C#" implements-prefix="userCSharp">

<![CDATA[

public string StringTrimRight(string str)

{

if (str == null)

{

return "";

}

return str.TrimEnd(null);

}

]]>

</msxsl:script>

<msxsl:script language="C#" implements-prefix="ScriptNS0">

<msxsl:assembly name="FunctoidHelper, Version=1.0.0.0, Culture=neutral, PublicKeyToken=f6c9f2e4af4c2a01" />

<msxsl:using namespace="FunctoidHelper" />

<![CDATA[

private DatabaseScripts ds = new DatabaseScripts();

public string DBLookup(int index, string value, string connectionString, string table, string column)

{

return ds.DBLookup(index, value, connectionString, table, column);

}

public string DBValueExtract(int index, string columnName)

{

return ds.DBValueExtract(index, columnName);

}

public string DBLookupShutdown()

{

return ds.DBLookupShutdown();

}

public string DBErrorExtract(int index)

{

return ds.DBErrorExtract(index);

}

]]>

</msxsl:script>

</xsl:stylesheet>

Things to note:

- There are no changes to any of the map’s XSL elements;

- The inline script ‘userCSharp’ code block remains unaltered and co-exists with the new ‘ScriptNS0’ code block;

- Both code blocks get compiled into the same DLL when the map is uploaded to the Integration Account;

- For a external assembly the ExtensionObject AssemblyName and ClassName values would map to the msxsl:assembly and private class variable name.

- For a custom functoid the functoid assembly would need the dependency on the BaseFunctoid class removing, essentially turning the functoid assembly into an external assembly;

Using this method of using a separate msxsl:script element avoids having modify the XSLT, for example modifying the ScriptNS0 prefixes in all the select=”” expressions to use userCSharp prefix instead. It also allows, if these external assemblies are used in multiple maps, for the script to be placed in its own XSLT file and imported using xsl:import. For an example on how to do this see here or here .

3 XSLT 2.0/3.0

As mentioned previously the Transform XML action implements the SAXON HE open source processor and, like the XSLT 1.0 processor, the XSLT gets compiled although this is done when the map is first executed and not when it is uploaded to the Integration Account. Incidentally, all the compiled map components get cached; there’s a separate cache for the XSLT 1.0 maps, XSLT 1.0 scripts, uploaded assemblies, and the XSLT 2.0/3.0 maps.

There are a couple of things to bear in mind when using the XSLT 2.0/3.0 processor:

- The XSLT 3.0 specification defines a new element xsl:evaluate which allows the dynamic evaluation of an XPath expression. So, the obvious choice for a transformation requiring this functionality from the table given in the introduction would be to choose XSLT 3.0. However, while the SAXON HE processor implements this element, it is in a restricted sense, so that while the processor recognises the element as valid XSLT 3.0 the feature is disabled and results in an error if the instruction is executed. Conversely, as another caveat to the table, XSLT 1.0 does not support dynamic XPath expressions, but through the use of an msxslt:script element to define a script block, it is possible to execute dynamic XPath expressions (see the XSLT here for an example on how to do this).

- There is no ability to include inline C# script (or any of the other .NET language available) nor call external assemblies. Now, Saxon does provide for integrated .NET extension functions in the HE open source processor .NET extension functions ) but this would have to be coded for in an Azure Function (see Using an XSLT 2.0/3.0 Map in Logic Apps and Azure Functions on how to use the HE processor in an Azure Function). So, if there is a possibility that a map may need to call some external function e.g. for data enrichment, then XSLT 2.0/3.0 might not be an option. Having said that there almost always is an alternative, in this case required data could be looked up before hand and passed to the map as an xsl:param.

It is well worth familiarising oneself with the features available in the Saxon HE processor to understand what features are, or are not, available (see here ), and standards conformance (see here ).

XSLT 1.0 to 2.0/3.0 Migration Considerations

There are two mapping migrations to consider: the first is a migration from a BizTalk Server map to a Logic App Integration Account map as already discussed, and the second is a migration from XSLT 1.0 to XSLT 2.0/3.0 which will now be the focus.

An XSLT map that has a version attribute set to 2.0 (or 3.0) cannot be uploaded as an XSLT 1.0 map. Technically XSLT 1.0 should be forwards compatible, and the Microsoft XSLT 1.0 processor will process such a map, it is the validation performed by the Integration Account maps blade that prevents its upload as a XSLT 1.0 map. Where forwards compatibility is in effect the XSLT processor must be more lax when encountering unknown attributes, declarations and instructions. Using forward compatibility in a stylesheet is one way to prepare for XSLT 2.0 as part of an overall migration strategy.

A migration to XSLT 2.0/3,0, if desired, needs careful consideration. Several years ago a group IBM developers produced a series of documents on performing such a migration. They described five strategies each with its own advantages and disadvantages:

- Full rewrite to version 2.0/3.0

- Convert most of the stylesheet to 2.0/3.0 but keep some 1.0 islands

- Institute 2.0/3.0 islands or rewrite modules that need overhaul

- Change the stylesheet to 2.0/3.0 and debug from there

- Stay at version 1.0

Fortunately, XSLT 1.0 backwards-compatibility mode was reinstated in Saxon HE 9.8.0.7 so all options above are available. In is also worth noting that the options are not mutually exclusive, rather they can be blended to suit your needs, to quote from part 2:

“For example, you can draw up a grand plan as in options 1 and 2, but implement changes using the try-it-and-see approach of option 4. If you don't like the current modularization of your stylesheets, you might want to refactor all the modules for functional purposes, then refactor further to break out modules that will use a designated XSLT version, as in options 2 and 3. Another possibility is to take one motivating feature, such as stylesheet functions (see Part 1 of this series for more information), find all the places where you could use it, then decide whether you can isolate those places as in option 3 or force a complete refactoring as in option 1.”

Unfortunately this series from IBM seems to have disappeared from their developerWorks website (apart from Part 3 ), but the series can still be read at www.archive.org , see: Planning to upgrade XSLT 1.0 to 2.0, Part 2: Five strategies for changing from XSLT 1.0 to 2.0 , but the key thing is that if there is strategy to migrate all maps, whether BizTalk maps or non-BizTalk maps, from XSLT 1.0 to 2.0/3.0 careful planning is required.

For the complete series on Planning to upgrade XSLT 1.0 to 2.0, see:

- Part 1: Improvements in XSLT

- Part 2: Five strategies for changing from XSLT 1.0 to 2.0

- Part 3: Why the transition requires planning

- Part 4: The toolkit for XSLT portability

- Part 5: Make your stylesheets work with any processor version

- Part 6: How to mix XSLT versions for a 2.0 processor

- Part 7: Selection of XSLT 2.0 features and the 1.0 shortcomings they address

4 Generate Text Output

An XSLT map can now return a non-XML output, specifically text output. This now allows the second method of returning multiple target messages to be implemented: How to Return Multiple Target Messages from a Transform XML Action (Method 2) .

The Generate text output is in the Transform XML action’s Advanced settings as shown below:

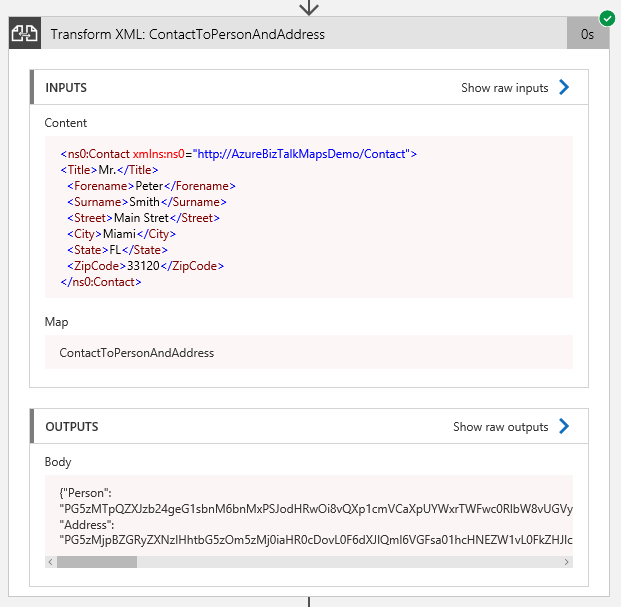

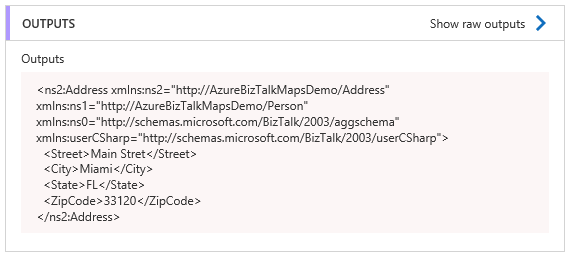

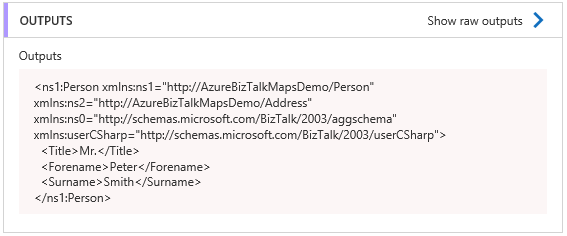

When the map given in the example is executed the output generated is as follows:

Each of the target XML messages can be referenced as follows in any subsequent Logic App actions:

One thing to remember is that is doesn’t matter what the media type is set to in the XSLT map e.g. media-type ="application/json" the output will always be treated by the Logic App as text. This means that if the output is to be used in subsequent actions it needs to be cast to JSON (in this example) beforehand (i.e. using the @json() function).

One of the consequences of this new feature is that it now provides a mechanism whereby a custom class method can now be called from a Logic App and the return value used rather than, say, calling an Azure Function. For an example of this see Azure Logic App: Calling an Integration Account Assembly Function from within an app .

5 Summary

The addition of external assemblies now makes the migration of BizTalk maps containing custom assemblies or custom functoids a lot easier. Without needing to change the existing code and by simply adding a new msxsl:script element to the XSLT, the map can be migrated. Care must be taken during deployment to ensure any assemblies are uploaded to the Integration Account prior to the maps.

The new XSLT 2.0/3.0 processor brings a vast swathe of new functionality to the XSLT developer and there are goods reason to consider migrating (see Why You Should Be Using XSLT 3.0 ), but it is important to know what functionality is, and what is not, available in the new XSLT processor; in some cases staying at XSLT 1.0 may be a more sensible option.

Finally, there is now only one more ‘group’ of maps to consider, namely, those maps that use custom assemblies or custom functoids that reference third-party components. A good example of this would be a custom functoid that references Oracle’s ODP.NET to perform database lookups in a similar way to the in-built database functoids for SQL Server, but even this isn't an insurmountable problem; third-party assemblies can be uploaded too (see Appendix B for an example on how to connect to and query an Oracle cloud database from an XSLT map).

6 References

- Migrating BizTalk Maps to Azure Logic Apps: Shortcomings & Solutions (part 1)

- Migrating BizTalk Maps to Azure Logic Apps: Shortcomings & Solutions (part 2)

- ILSpy (Open Source .NET Decompiler)

- http://ilspy.net

- Saxon-HE 9.7 (XSLT 2.0 Processor)

- Database Functoids Reference

- XSLT Stylesheet Scripting Using <msxsl:script>

- Integration Account Assemblies

7 Appendix A: BizTalk Database Functoids

Using ILSpy (or similar)

using System;

using System.Collections;

using System.Data;

using System.Data.OleDb;

using System.Globalization;

namespace FunctoidHelper

{

internal class DBFunctoidHelper

{

private string error;

private string connectionString;

private string table;

private string column;

private string value;

private OleDbConnection conn;

private Hashtable mapValues;

public string Error

{

get { return this.error; }

set { this.error = value; }

}

public string ConnectionString

{

get { return this.connectionString; }

set { this.connectionString = value; }

}

public string Table

{

get { return this.table; }

set { this.table = value; }

}

public string Column

{

get { return this.column; }

set { this.column = value; }

}

public string Value

{

get { return this.value; }

set { this.value = value; }

}

public OleDbConnection Connection

{

get { return this.conn; }

}

public Hashtable MapValues

{

get { return this.mapValues; }

}

public DBFunctoidHelper()

{

this.conn = new OleDbConnection();

this.mapValues = new Hashtable();

}

}

public class DatabaseScripts

{

[ThreadStatic]

private static Hashtable myDBFunctoidHelperList;

// Microsoft.BizTalk.BaseFunctoids.FunctoidScripts

private static void InitDBFunctoidHelperList()

{

if (myDBFunctoidHelperList == null)

{

myDBFunctoidHelperList = new Hashtable();

}

}

public string DBLookup(int index, string value, string connectionString, string table, string column)

{

DBFunctoidHelper dBFunctoidHelper = null;

bool flag = false;

DatabaseScripts.InitDBFunctoidHelperList();

if (!DatabaseScripts.myDBFunctoidHelperList.Contains(index))

{

dBFunctoidHelper = new DBFunctoidHelper();

DatabaseScripts.myDBFunctoidHelperList.Add(index, dBFunctoidHelper);

}

else

{

dBFunctoidHelper = (DBFunctoidHelper)DatabaseScripts.myDBFunctoidHelperList[index];

}

try

{

if (dBFunctoidHelper.ConnectionString == null || (dBFunctoidHelper.ConnectionString != null&& string.Compare(dBFunctoidHelper.ConnectionString, connectionString, StringComparison.Ordinal) != 0) || dBFunctoidHelper.Connection.State != ConnectionState.Open)

{

flag = true;

dBFunctoidHelper.MapValues.Clear();

dBFunctoidHelper.Error = "";

if (dBFunctoidHelper.Connection.State == ConnectionState.Open)

{

dBFunctoidHelper.Connection.Close();

}

dBFunctoidHelper.ConnectionString = connectionString;

dBFunctoidHelper.Connection.ConnectionString = connectionString;

dBFunctoidHelper.Connection.Open();

}

if (flag || string.Compare(dBFunctoidHelper.Table, table, StringComparison.Ordinal) != 0 || string.Compare(dBFunctoidHelper.Column, column, StringComparison.OrdinalIgnoreCase) != 0 || string.Compare(dBFunctoidHelper.Value, value, StringComparison.Ordinal) != 0 || (dBFunctoidHelper.Error != null&& dBFunctoidHelper.Error.Length > 0))

{

dBFunctoidHelper.Table = table;

dBFunctoidHelper.Column = column;

dBFunctoidHelper.Value = value;

dBFunctoidHelper.MapValues.Clear();

dBFunctoidHelper.Error = "";

using (OleDbCommand oleDbCommand = new OleDbCommand(string.Concat(new string[]

{

"SELECT * FROM ",

table,

" WHERE ",

column,

"= ?"

}), dBFunctoidHelper.Connection))

{

OleDbParameter oleDbParameter = new OleDbParameter();

oleDbParameter.Value = value;

oleDbCommand.Parameters.Add(oleDbParameter);

IDataReader dataReader = oleDbCommand.ExecuteReader();

if (dataReader.Read())

{

for (int i = 0; i < dataReader.FieldCount; i++)

{

string text = dataReader.GetName(i);

text = text.ToLower(CultureInfo.InvariantCulture);

object value2 = dataReader.GetValue(i);

dBFunctoidHelper.MapValues[text] = value2;

}

}

dataReader.Close();

}

}

}

catch (OleDbException ex)

{

if (ex.Errors.Count> 0)

{

dBFunctoidHelper.Error = ex.Errors[0].Message;

}

}

catch (Exception ex2)

{

dBFunctoidHelper.Error = ex2.Message;

}

finally

{

if (dBFunctoidHelper.Connection.State == ConnectionState.Open)

{

dBFunctoidHelper.Connection.Close();

}

}

return index.ToString(CultureInfo.InvariantCulture);

}

public string DBValueExtract(int index, string columnName)

{

string result = "";

DatabaseScripts.InitDBFunctoidHelperList();

if (DatabaseScripts.myDBFunctoidHelperList.Contains(index)&& !string.IsNullOrEmpty(columnName))

{

DBFunctoidHelper dBFunctoidHelper = (DBFunctoidHelper)DatabaseScripts.myDBFunctoidHelperList[index];

columnName = columnName.ToLower(CultureInfo.InvariantCulture);

object obj = dBFunctoidHelper.MapValues[columnName];

if (obj != null)

{

result = obj.ToString();

}

}

return result;

}

public string DBLookupShutdown()

{

return string.Empty;

}

public string DBErrorExtract(int index)

{

string text = "";

InitDBFunctoidHelperList();

try

{

if (myDBFunctoidHelperList.Contains(index))

{

DBFunctoidHelper dBFunctoidHelper = (DBFunctoidHelper)myDBFunctoidHelperList[index];

if (dBFunctoidHelper != null)

{

text = dBFunctoidHelper.Error;

}

}

}

catch (Exception)

{

}

if (text == null)

{

text = "";

}

return text;

}

}

}

8 Appendix B: How to Query an Oracle Cloud Database

This example assumes you have access to an Oracle Cloud database. We can upload any .DLL to the Integration Account, but for files larger than 2MB the process is a bit more involved and, like for large schema files, must use blob storage. Remember that the assemblies will get cached and so this download from blob storage will not occur every time the map is run.

The process is as follows:

- Download the NuGet package Oracle.ManagedDataAccess 18.3.0;

- Create an Azure blob storage, if you don't already have one;

- Locate the Oracle.ManagedDataAccess.dll and upload it to the blob storage. Note: Use the Storage Explorer to check the content-type. Ensure it is set to application/octet-stream and not application/x-msdownload;

- Copy the Oracle.ManagedDataAccess blob URI and go to the Integration Account Assemblies blade;

- Upload the Assembly, choose larger than 2MB and paste in the URI;

- Create an XSLT map as below an upload it to the Integration Account Maps blade (update the connection string values and change the query to suit the Oracle database table you want to use);

<?xml version="1.0" encoding="utf-16"?>

<xsl:stylesheet xmlns:xsl="http://www.w3.org/1999/XSL/Transform" xmlns:msxsl="urn:schemas-microsoft-com:xslt" exclude-result-prefixes="msxsl s0 ScriptNS0" version="1.0" xmlns:s0="http://AzureBizTalkMapsDemo/Person" xmlns:ns0="http://AzureBizTalkMapsDemo/Contact" xmlns:ScriptNS0="http://schemas.microsoft.com/BizTalk/2003/ScriptNS0">

<xsl:output omit-xml-declaration="yes" method="xml" indent="yes" version="1.0" />

<xsl:template match="/">

<xsl:apply-templates select="/s0:Person" />

</xsl:template>

<xsl:template match="/s0:Person">

<ns0:Contact>

<Title><xsl:value-of select="Title/text()" /></Title>

<Forename><xsl:value-of select="Forename/text()" /></Forename>

<Surname><xsl:value-of select="Surname/text()" /></Surname>

<Phone><xsl:value-of select="ScriptNS0:GetPhoneNo(ID/text())" /></Phone>

</ns0:Contact>

</xsl:template>

<msxsl:script language="C#" implements-prefix="ScriptNS0">

<msxsl:assembly name ="System.Data"/>

<msxsl:assembly name ="Oracle.ManagedDataAccess, Version=4.122.18.3, Culture=neutral, PublicKeyToken=89b483f429c47342"/>

<msxsl:using namespace="Oracle.ManagedDataAccess.Client" />

<![CDATA[

public string GetPhoneNo(int id)

{

//Create a connection to Oracle

string conString = "User Id=system; password=;" +

//How to connect to an Oracle DB without SQL*Net configuration file

//also known as tnsnames.ora.

"Data Source=xxx.xxx.xxx.xxx:1521/PDB1.xxxxxxxxx.oraclecloud.internal; Pooling=false;";

OracleConnection con = new OracleConnection();

con.ConnectionString = conString;

con.Open();

OracleCommand cmd = con.CreateCommand();

cmd.CommandText = "SELECT PHONE FROM CUSTOMERS WHERE ID=" + id.ToString();

//Execute the command and use datareader to get the data

OracleDataReader reader = cmd.ExecuteReader();

reader.Read();

return reader.GetString(0);

}

]]>

</msxsl:script>

</xsl:stylesheet>