Cognitive Services : Extract handwritten text from an image using Computer Vision API With ASP.NET Core And C#

Introduction

In this article, we are going to learn how to extract handwritten text from an image using one of the important Cognitive Services APIs, the Computer Vision API. Accessing this feature requires a valid subscription key. This technology is currently in preview and is only available for English text.

Before reading this article, read the articles below. They explain the other features of the Computer Vision API.

- Cognitive Services : Analyze an Image Using Computer Vision API

- Cognitive Services – Optical Character Recognition (OCR) from an image using Computer Vision API And C#

Prerequisites

- Subscription key (Azure Portal).

- Visual Studio 2015 or 2017

Subscription Key Free Trial

If you don't have a Microsoft Azure subscription but want to test the Computer Vision API, which requires a valid subscription key to process images, don't worry: Microsoft offers a 7-day trial subscription key that we can use for testing purposes. If you sign up using the Computer Vision free trial, your subscription keys are valid for the westcentralus region (https://westcentralus.api.cognitive.microsoft.com).

Requirements

These are the major requirements mentioned in the Microsoft docs.

- Supported input methods: Raw image binary in the form of application/octet-stream, or an image URL.

- Supported image formats: JPEG, PNG, BMP.

- Image file size: Less than 4 MB.

- Image dimensions: At least 40 x 40 and at most 3200 x 3200.
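A minimal sketch of checking these limits on the client before calling the API, so an oversized or unsupported file fails fast instead of costing a request. ImageRequirements and its methods are hypothetical names, not part of any SDK. The sketch assumes "4 MB" means 4 × 1024 × 1024 bytes, detects the format from the file's leading bytes, and takes the width and height as parameters rather than decoding the image itself.

```csharp
using System;

// Hypothetical helper: checks an image against the documented input limits
// (JPEG/PNG/BMP, under 4 MB, 40 x 40 to 3200 x 3200) before calling the API.
public static class ImageRequirements
{
    public const long MaxBytes = 4L * 1024 * 1024; // assumption: 4 MB = 4 * 1024 * 1024 bytes
    public const int MinDimension = 40;
    public const int MaxDimension = 3200;

    // Detects the format from the file's leading "magic" bytes; returns null if unknown.
    public static string DetectFormat(byte[] data)
    {
        if (data.Length >= 3 && data[0] == 0xFF && data[1] == 0xD8 && data[2] == 0xFF)
            return "JPEG";
        if (data.Length >= 8 && data[0] == 0x89 && data[1] == 0x50 && data[2] == 0x4E && data[3] == 0x47
            && data[4] == 0x0D && data[5] == 0x0A && data[6] == 0x1A && data[7] == 0x0A)
            return "PNG";
        if (data.Length >= 2 && data[0] == 0x42 && data[1] == 0x4D)
            return "BMP";
        return null;
    }

    // Returns null when the image is acceptable, otherwise the reason it is not.
    public static string Validate(byte[] data, int width, int height)
    {
        if (DetectFormat(data) == null) return "Unsupported format (use JPEG, PNG or BMP).";
        if (data.LongLength >= MaxBytes) return "Image must be smaller than 4 MB.";
        if (width < MinDimension || height < MinDimension) return "Image must be at least 40 x 40.";
        if (width > MaxDimension || height > MaxDimension) return "Image must be at most 3200 x 3200.";
        return null;
    }
}
```

The service would call Validate on the bytes returned by GetImageAsByteArray and skip the request when a reason comes back.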

Computer Vision API

First, we need to log in to the Azure Portal with our Azure credentials. Then we need to create a Computer Vision subscription key in the Azure portal.

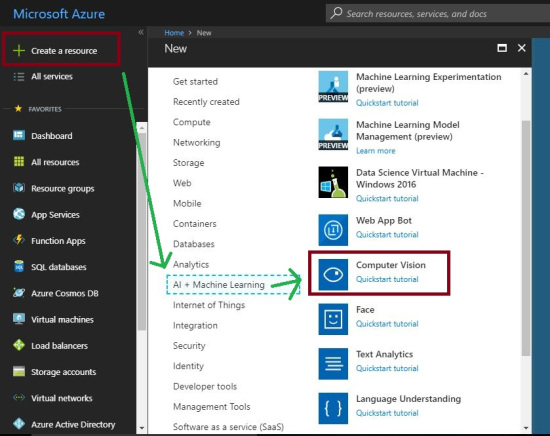

Click "Create a resource" in the left-hand menu to open the "Azure Marketplace", which lists the available services. Click "AI + Machine Learning", then click "Computer Vision".

Provision a Computer Vision Subscription Key

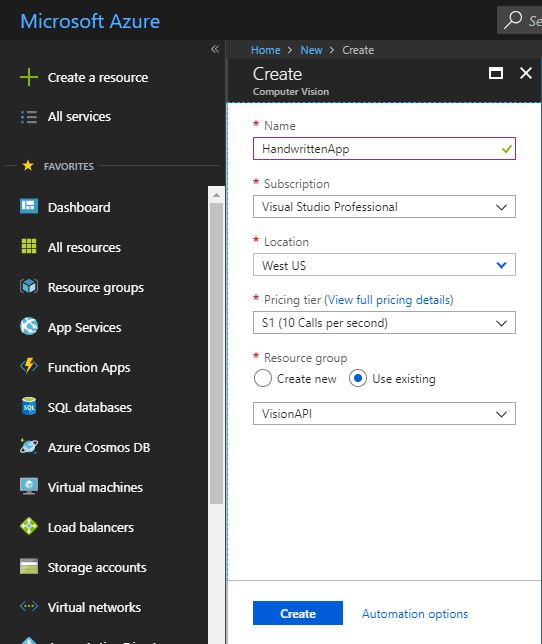

After clicking "Computer Vision", another section opens. There, we need to provide basic information about the Computer Vision API.

Name: Name of the Computer Vision API (e.g. HandwrittenApp).

Subscription: Select the Azure subscription in which to create the Computer Vision API.

Location: Select the location of the resource. Ideally, choose the location closest to our customers.

Pricing tier: Select a pricing tier that fits our requirements.

Resource group: Create a new resource group or choose an existing one.

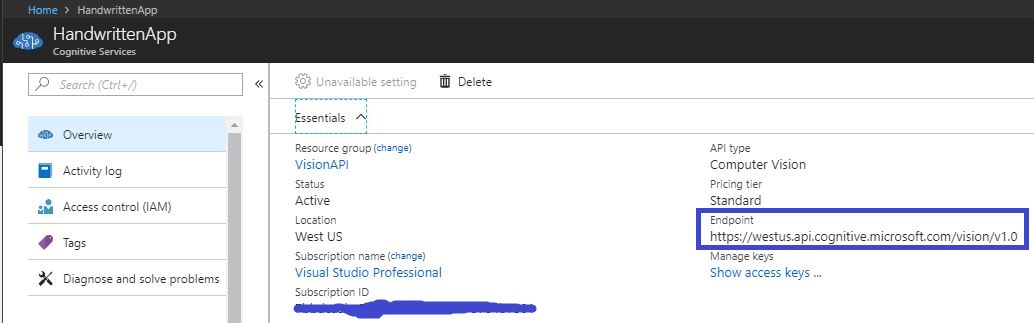

Now click "HandwrittenApp" on the dashboard page to open its details page ("Overview"). Here, we can see the Manage Keys (subscription key details) and Endpoint details. Click the "Show access keys…" link to open the keys page.

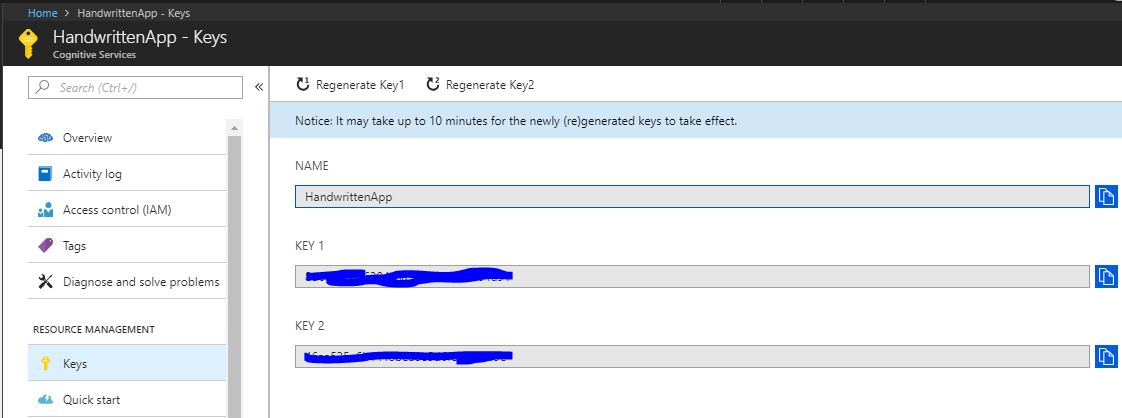

We can use any of the subscription keys or regenerate the given key for getting image information using Computer Vision API.

Endpoint

As mentioned above, all free trial subscription keys are issued for the same region (westcentralus). For other keys, we can choose from the available locations while creating the Computer Vision resource, and the endpoint region must match the region of the key. We have used the following endpoint (West US) in our code.

https://westus.api.cognitive.microsoft.com/vision/v1.0/recognizeText
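Because the region in the endpoint must match the region of the key, it can help to build the URL from a region name instead of hard-coding it. A small sketch, assuming the v1.0 recognizeText path shown above; VisionEndpoint.ForRegion is a hypothetical helper.

```csharp
using System;

// Hypothetical helper: builds the v1.0 recognizeText endpoint for an Azure region
// such as "westus" or "westcentralus" (the free trial region).
public static class VisionEndpoint
{
    public static string ForRegion(string region)
    {
        if (string.IsNullOrWhiteSpace(region))
            throw new ArgumentException("A region is required.", nameof(region));

        // Region names in the host are lower case, so normalize the input.
        return $"https://{region.Trim().ToLowerInvariant()}.api.cognitive.microsoft.com/vision/v1.0/recognizeText";
    }
}
```

For example, a free trial key would use VisionEndpoint.ForRegion("westcentralus"), while a key created in West US would use "westus".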

View Model

The following model classes hold the API's image response information.

```csharp
using System.Collections.Generic;

namespace HandwrittenTextApp.Models
{
    public class Word
    {
        public List<int> boundingBox { get; set; }
        public string text { get; set; }
    }

    public class Line
    {
        public List<int> boundingBox { get; set; }
        public string text { get; set; }
        public List<Word> words { get; set; }
    }

    public class RecognitionResult
    {
        public List<Line> lines { get; set; }
    }

    public class ImageInfoViewModel
    {
        public string status { get; set; }
        public RecognitionResult recognitionResult { get; set; }
    }
}
```
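To see how the JSON maps onto these classes, here is a sketch that deserializes a response and collects the text of each recognized line. It swaps in System.Text.Json (built into .NET Core 3.0 and later) so it runs without extra packages; the service in this article uses Newtonsoft.Json's JsonConvert instead. The classes are trimmed copies of the models above (the words list is omitted), and ResponseParser is a hypothetical name. Because the property names match the JSON keys exactly, no naming options are needed.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// Trimmed copies of the view model classes (words omitted for brevity).
public class Line
{
    public List<int> boundingBox { get; set; }
    public string text { get; set; }
}

public class RecognitionResult
{
    public List<Line> lines { get; set; }
}

public class ImageInfoViewModel
{
    public string status { get; set; }
    public RecognitionResult recognitionResult { get; set; }
}

public static class ResponseParser
{
    // Returns one string per recognized line, or an empty list when the
    // operation has not succeeded or the result has no lines.
    public static List<string> ExtractLines(string json)
    {
        var model = JsonSerializer.Deserialize<ImageInfoViewModel>(json);
        if (model?.status != "Succeeded" || model.recognitionResult?.lines == null)
            return new List<string>();

        return model.recognitionResult.lines.Select(l => l.text).ToList();
    }
}
```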

Request URL

We can add optional request parameters to our API "endPoint" to control how the given image is processed.

https://[location].api.cognitive.microsoft.com/vision/v1.0/recognizeText[?handwriting]

Request parameters

The following request parameter is available in the Computer Vision API.

- mode

mode

The name and values of this parameter differ between versions of the Vision API, so don't be confused that we are using the v1.0 endpoint given in our Azure portal (the code comments note that the v1 equivalent is "handwriting=true"). If this parameter is set to "Printed", printed text recognition is performed. If "Handwritten" is specified, handwriting recognition is performed. (Note: This parameter is case sensitive.) This is a required parameter and cannot be empty.
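Since mode is case sensitive, a value such as "handwritten" is an easy mistake. The sketch below, using a hypothetical RecognizeTextUri.Build helper, rejects anything other than the two documented values before a request is sent.

```csharp
using System;

// Hypothetical helper: appends the case-sensitive "mode" parameter to the
// recognizeText endpoint. Only "Printed" and "Handwritten" are accepted.
public static class RecognizeTextUri
{
    public static string Build(string endPoint, string mode)
    {
        // Ordinal comparison mirrors the service's case-sensitive check.
        if (!string.Equals(mode, "Printed", StringComparison.Ordinal) &&
            !string.Equals(mode, "Handwritten", StringComparison.Ordinal))
        {
            throw new ArgumentException("mode must be \"Printed\" or \"Handwritten\".", nameof(mode));
        }

        return endPoint + "?mode=" + Uri.EscapeDataString(mode);
    }
}
```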

Interface

The "IVisionApiService" interface contains two method signatures for extracting handwritten content from an image. We register this interface in the ASP.NET Core "Startup.cs" class with "AddTransient".

```csharp
using System.Threading.Tasks;

namespace HandwrittenTextApp.Business_Layer.Interface
{
    // Declared public so it can be injected into public controllers.
    public interface IVisionApiService
    {
        Task<string> ReadHandwrittenText();

        byte[] GetImageAsByteArray(string imageFilePath);
    }
}
```
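The registration with AddTransient is mentioned but not shown. A sketch of what it and a consuming controller might look like in an ASP.NET Core 2.x MVC project follows. Only the relevant ConfigureServices method of Startup is shown, HomeController and its Index action are hypothetical, and IVisionApiService is assumed to be declared public (a public controller constructor cannot take an internal interface type).

```csharp
using System.Threading.Tasks;
using HandwrittenTextApp.Business_Layer;
using HandwrittenTextApp.Business_Layer.Interface;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.DependencyInjection;

namespace HandwrittenTextApp
{
    public class Startup
    {
        // A new VisionApiService is created each time IVisionApiService is requested.
        public void ConfigureServices(IServiceCollection services)
        {
            services.AddMvc();
            services.AddTransient<IVisionApiService, VisionApiService>();
        }
    }
}

namespace HandwrittenTextApp.Controllers
{
    // Hypothetical controller: receives the service through constructor injection.
    public class HomeController : Controller
    {
        private readonly IVisionApiService _visionApiService;

        public HomeController(IVisionApiService visionApiService)
        {
            _visionApiService = visionApiService;
        }

        // Returns the extracted text as plain text.
        public async Task<IActionResult> Index()
        {
            string text = await _visionApiService.ReadHandwrittenText();
            return Content(text);
        }
    }
}
```

A transient lifetime suits this service because it holds no state between calls.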

Vision API Service

The following code processes the image with the Computer Vision API and maps its response onto the "ImageInfoViewModel". Replace the placeholder in the code with your valid Computer Vision API subscription key.

```csharp
using HandwrittenTextApp.Business_Layer.Interface;
using HandwrittenTextApp.Models;
using Newtonsoft.Json;
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading.Tasks;

namespace HandwrittenTextApp.Business_Layer
{
    public class VisionApiService : IVisionApiService
    {
        // Replace <Subscription Key> with your valid subscription key.
        const string subscriptionKey = "<Subscription Key>";

        // You must use the same region in your REST call as you used to
        // get your subscription keys. Paid subscription keys are available
        // from the Microsoft Azure portal.
        // Free trial subscription keys are generated in the westcentralus region,
        // so replace "westus" with "westcentralus" if you use a free trial key.
        const string endPoint =
            "https://westus.api.cognitive.microsoft.com/vision/v1.0/recognizeText";

        /// <summary>
        /// Gets the handwritten text from a local image file by using
        /// the Computer Vision REST API.
        /// </summary>
        /// <returns>The recognized lines of text, separated by new lines.</returns>
        public async Task<string> ReadHandwrittenText()
        {
            // Path to the sample image; change it to an image on your machine.
            string imageFilePath = @"C:\Users\rajeesh.raveendran\Desktop\vaisakh.jpg";

            var errors = new List<string>();
            ImageInfoViewModel responseData = new ImageInfoViewModel();
            string extractedResult = "";

            try
            {
                using (HttpClient client = new HttpClient())
                {
                    // Request headers.
                    client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", subscriptionKey);

                    // Request parameter.
                    // Note: The request parameter changed for APIv2.
                    // For APIv1, it is "handwriting=true".
                    string requestParameters = "mode=Handwritten";

                    // Assemble the URI for the REST API call.
                    string uri = endPoint + "?" + requestParameters;

                    HttpResponseMessage response;

                    // Two REST API calls are required to extract handwritten text:
                    // one to submit the image for processing, the other to retrieve
                    // the text found in the image. operationLocation stores the
                    // REST API location to call to retrieve the text.
                    string operationLocation;

                    // Request body: posts a locally stored JPEG image.
                    byte[] byteData = GetImageAsByteArray(imageFilePath);

                    using (ByteArrayContent content = new ByteArrayContent(byteData))
                    {
                        // This example uses content type "application/octet-stream".
                        // The other content types you can use are "application/json"
                        // and "multipart/form-data".
                        content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");

                        // The first REST call starts the async process to analyze
                        // the written text in the image.
                        response = await client.PostAsync(uri, content);
                    }

                    // The response contains the URI to retrieve the result of the process.
                    if (response.IsSuccessStatusCode)
                    {
                        operationLocation = response.Headers.GetValues("Operation-Location").FirstOrDefault();
                    }
                    else
                    {
                        // Return the JSON error data.
                        return await response.Content.ReadAsStringAsync();
                    }

                    // The second REST call retrieves the text written in the image.
                    // Note: The response may not be immediately available. Handwriting
                    // recognition is an async operation that can take a variable amount
                    // of time depending on the length of the handwritten text, so this
                    // example checks once per second for ten seconds.
                    string result;
                    int i = 0;
                    do
                    {
                        // Task.Delay waits without blocking the request thread.
                        await Task.Delay(1000);
                        response = await client.GetAsync(operationLocation);
                        result = await response.Content.ReadAsStringAsync();
                        ++i;
                    }
                    while (i < 10 && result.IndexOf("\"status\":\"Succeeded\"") == -1);

                    if (i == 10 && result.IndexOf("\"status\":\"Succeeded\"") == -1)
                    {
                        Console.WriteLine("\nTimeout error.\n");
                        return "Error";
                    }

                    // If the call succeeded, map the JSON response onto the view model.
                    if (response.IsSuccessStatusCode)
                    {
                        responseData = JsonConvert.DeserializeObject<ImageInfoViewModel>(result,
                            new JsonSerializerSettings
                            {
                                NullValueHandling = NullValueHandling.Include,
                                Error = delegate (object sender, Newtonsoft.Json.Serialization.ErrorEventArgs earg)
                                {
                                    errors.Add(earg.ErrorContext.Member?.ToString());
                                    earg.ErrorContext.Handled = true;
                                }
                            });

                        // Append the text of each recognized line to the result.
                        var lines = responseData?.recognitionResult?.lines;
                        if (lines != null)
                        {
                            foreach (var line in lines)
                            {
                                extractedResult += line.text + Environment.NewLine;
                            }
                        }
                    }
                }
            }
            catch (Exception e)
            {
                Console.WriteLine("\n" + e.Message);
            }

            return extractedResult;
        }

        /// <summary>
        /// Returns the contents of the specified file as a byte array.
        /// </summary>
        /// <param name="imageFilePath">The image file to read.</param>
        /// <returns>The byte array of the image data.</returns>
        public byte[] GetImageAsByteArray(string imageFilePath)
        {
            using (FileStream fileStream = new FileStream(imageFilePath, FileMode.Open, FileAccess.Read))
            using (BinaryReader binaryReader = new BinaryReader(fileStream))
            {
                return binaryReader.ReadBytes((int)fileStream.Length);
            }
        }
    }
}
```
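The do/while loop in ReadHandwrittenText is a polling pattern: submit the image once, then query the Operation-Location URL until the status is "Succeeded" or the attempts run out. A reusable sketch of the same idea follows. Poller.PollAsync is a hypothetical helper; it waits with await Task.Delay, which does not block a thread between attempts.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical helper: calls fetchAsync until isDone returns true or
// maxAttempts is reached, waiting "delay" before each attempt.
public static class Poller
{
    public static async Task<(bool Completed, string Result)> PollAsync(
        Func<Task<string>> fetchAsync,
        Func<string, bool> isDone,
        int maxAttempts,
        TimeSpan delay)
    {
        string result = null;
        for (int attempt = 0; attempt < maxAttempts; attempt++)
        {
            await Task.Delay(delay);
            result = await fetchAsync();
            if (isDone(result))
                return (true, result);
        }

        // Attempts exhausted: report the last response so the caller can log it.
        return (false, result);
    }
}
```

Inside the service, the loop could become a single call such as Poller.PollAsync(() => client.GetStringAsync(operationLocation), r => r.Contains("\"status\":\"Succeeded\""), 10, TimeSpan.FromSeconds(1)). Note that GetStringAsync throws on a non-success status code, whereas GetAsync returns the response regardless.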

API Response – Based on the given Image

The successful JSON response:

```json
{
  "status": "Succeeded",
  "recognitionResult": {
    "lines": [
      {
        "boundingBox": [170, 34, 955, 31, 956, 78, 171, 81],
        "text": "Memories ! are born not made !",
        "words": [
          { "boundingBox": [158, 33, 378, 33, 373, 81, 153, 81], "text": "Memories" },
          { "boundingBox": [359, 33, 407, 33, 402, 81, 354, 81], "text": "!" },
          { "boundingBox": [407, 33, 508, 33, 503, 81, 402, 81], "text": "are" },
          { "boundingBox": [513, 33, 662, 33, 657, 81, 508, 81], "text": "born" },
          { "boundingBox": [676, 33, 786, 33, 781, 81, 671, 81], "text": "not" },
          { "boundingBox": [786, 33, 940, 33, 935, 81, 781, 81], "text": "made" },
          { "boundingBox": [926, 33, 974, 33, 969, 81, 921, 81], "text": "!" }
        ]
      },
      {
        "boundingBox": [181, 121, 918, 112, 919, 175, 182, 184],
        "text": "Bloom of roses to my heart",
        "words": [
          { "boundingBox": [162, 123, 307, 121, 298, 185, 154, 187], "text": "Bloom" },
          { "boundingBox": [327, 120, 407, 119, 398, 183, 318, 185], "text": "of" },
          { "boundingBox": [422, 119, 572, 117, 563, 181, 413, 183], "text": "roses" },
          { "boundingBox": [577, 117, 647, 116, 638, 180, 568, 181], "text": "to" },
          { "boundingBox": [647, 116, 742, 115, 733, 179, 638, 180], "text": "my" },
          { "boundingBox": [757, 115, 927, 113, 918, 177, 748, 179], "text": "heart" }
        ]
      },
      {
        "boundingBox": [190, 214, 922, 201, 923, 254, 191, 267],
        "text": "Sometimes lonely field as",
        "words": [
          { "boundingBox": [178, 213, 468, 209, 467, 263, 177, 267], "text": "Sometimes" },
          { "boundingBox": [486, 209, 661, 206, 660, 260, 485, 263], "text": "lonely" },
          { "boundingBox": [675, 206, 840, 203, 839, 257, 674, 260], "text": "field" },
          { "boundingBox": [850, 203, 932, 202, 931, 256, 848, 257], "text": "as" }
        ]
      },
      {
        "boundingBox": [187, 304, 560, 292, 561, 342, 188, 354],
        "text": "sky kisses it",
        "words": [
          { "boundingBox": [173, 302, 288, 300, 288, 353, 173, 355], "text": "sky" },
          { "boundingBox": [288, 300, 488, 295, 488, 348, 288, 353], "text": "kisses" },
          { "boundingBox": [488, 295, 573, 293, 573, 346, 488, 348], "text": "it" }
        ]
      },
      {
        "boundingBox": [191, 417, 976, 387, 979, 469, 194, 499],
        "text": "Three years iam gifted with",
        "words": [
          { "boundingBox": [173, 417, 324, 412, 318, 494, 167, 499], "text": "Three" },
          { "boundingBox": [343, 411, 504, 405, 498, 488, 337, 493], "text": "years" },
          { "boundingBox": [517, 405, 623, 401, 617, 483, 512, 487], "text": "iam" },
          { "boundingBox": [646, 400, 839, 394, 833, 476, 640, 483], "text": "gifted" },
          { "boundingBox": [839, 394, 977, 389, 971, 471, 833, 476], "text": "with" }
        ]
      },
      {
        "boundingBox": [167, 492, 825, 472, 828, 551, 169, 572],
        "text": "gud friend happiness !",
        "words": [
          { "boundingBox": [159, 493, 274, 489, 274, 569, 159, 573], "text": "gud" },
          { "boundingBox": [284, 489, 484, 483, 484, 563, 284, 569], "text": "friend" },
          { "boundingBox": [504, 482, 814, 473, 814, 553, 504, 562], "text": "happiness" },
          { "boundingBox": [794, 474, 844, 472, 844, 552, 794, 554], "text": "!" }
        ]
      },
      {
        "boundingBox": [167, 608, 390, 628, 387, 664, 163, 644],
        "text": "50870 W,",
        "words": [
          { "boundingBox": [159, 603, 321, 623, 310, 661, 147, 641], "text": "50870" },
          { "boundingBox": [309, 621, 409, 634, 397, 672, 297, 659], "text": "W," }
        ]
      },
      {
        "boundingBox": [419, 607, 896, 601, 897, 665, 420, 671],
        "text": "Seperation , sheds",
        "words": [
          { "boundingBox": [404, 609, 713, 604, 707, 669, 399, 674], "text": "Seperation" },
          { "boundingBox": [703, 604, 749, 604, 743, 669, 698, 669], "text": "," },
          { "boundingBox": [740, 604, 910, 602, 904, 667, 734, 669], "text": "sheds" }
        ]
      },
      {
        "boundingBox": [161, 685, 437, 688, 436, 726, 160, 724],
        "text": "blood as in",
        "words": [
          { "boundingBox": [147, 687, 299, 684, 291, 726, 139, 729], "text": "blood" },
          { "boundingBox": [311, 683, 387, 682, 379, 724, 303, 725], "text": "as" },
          { "boundingBox": [398, 681, 440, 681, 432, 723, 390, 724], "text": "in" }
        ]
      },
      {
        "boundingBox": [518, 678, 686, 679, 685, 719, 517, 718],
        "text": "tears !",
        "words": [
          { "boundingBox": [518, 677, 678, 682, 665, 723, 505, 717], "text": "tears" },
          { "boundingBox": [658, 681, 708, 683, 695, 724, 645, 722], "text": "!" }
        ]
      },
      {
        "boundingBox": [165, 782, 901, 795, 900, 868, 164, 855],
        "text": "I can't bear it Especially",
        "words": [
          { "boundingBox": [145, 785, 191, 786, 184, 862, 138, 861], "text": "I" },
          { "boundingBox": [204, 786, 342, 787, 336, 863, 198, 862], "text": "can't" },
          { "boundingBox": [370, 788, 513, 789, 506, 865, 364, 864], "text": "bear" },
          { "boundingBox": [522, 789, 595, 790, 589, 866, 516, 865], "text": "it" },
          { "boundingBox": [605, 790, 913, 794, 907, 869, 598, 866], "text": "Especially" }
        ]
      },
      {
        "boundingBox": [165, 874, 966, 884, 965, 942, 164, 933],
        "text": "final year a bunch of white",
        "words": [
          { "boundingBox": [155, 872, 306, 875, 294, 936, 143, 933], "text": "final" },
          { "boundingBox": [331, 876, 457, 878, 445, 939, 320, 936], "text": "year" },
          { "boundingBox": [466, 878, 508, 879, 496, 940, 454, 939], "text": "a" },
          { "boundingBox": [525, 879, 676, 882, 664, 943, 513, 940], "text": "bunch" },
          { "boundingBox": [697, 882, 772, 884, 760, 945, 685, 943], "text": "of" },
          { "boundingBox": [785, 884, 970, 888, 958, 948, 773, 945], "text": "white" }
        ]
      },
      {
        "boundingBox": [174, 955, 936, 960, 935, 1006, 173, 1001],
        "text": "roses to me . I Loved it ! !",
        "words": [
          { "boundingBox": [164, 953, 348, 954, 341, 1002, 157, 1001], "text": "roses" },
          { "boundingBox": [376, 955, 445, 955, 437, 1003, 368, 1003], "text": "to" },
          { "boundingBox": [449, 955, 537, 956, 529, 1004, 442, 1003], "text": "me" },
          { "boundingBox": [518, 956, 564, 957, 557, 1005, 511, 1004], "text": "." },
          { "boundingBox": [569, 957, 615, 957, 607, 1005, 561, 1005], "text": "I" },
          { "boundingBox": [629, 957, 799, 959, 791, 1007, 621, 1005], "text": "Loved" },
          { "boundingBox": [817, 959, 886, 960, 879, 1008, 810, 1007], "text": "it" },
          { "boundingBox": [881, 960, 927, 960, 920, 1008, 874, 1008], "text": "!" },
          { "boundingBox": [909, 960, 955, 960, 948, 1008, 902, 1008], "text": "!" }
        ]
      },
      {
        "boundingBox": [613, 1097, 680, 1050, 702, 1081, 635, 1129],
        "text": "by",
        "words": [
          { "boundingBox": [627, 1059, 683, 1059, 681, 1107, 625, 1107], "text": "by" }
        ]
      },
      {
        "boundingBox": [320, 1182, 497, 1191, 495, 1234, 318, 1224],
        "text": "Vaisak",
        "words": [
          { "boundingBox": [309, 1183, 516, 1186, 492, 1229, 286, 1227], "text": "Vaisak" }
        ]
      },
      {
        "boundingBox": [582, 1186, 964, 1216, 961, 1264, 578, 1234],
        "text": "Viswanathan",
        "words": [
          { "boundingBox": [574, 1186, 963, 1218, 945, 1265, 556, 1232], "text": "Viswanathan" }
        ]
      },
      {
        "boundingBox": [289, 1271, 997, 1279, 996, 1364, 288, 1356],
        "text": "( Menonpara, Palakkad )",
        "words": [
          { "boundingBox": [274, 1264, 324, 1265, 306, 1357, 256, 1356], "text": "(" },
          { "boundingBox": [329, 1265, 679, 1273, 661, 1364, 311, 1357], "text": "Menonpara," },
          { "boundingBox": [669, 1273, 979, 1279, 961, 1371, 651, 1364], "text": "Palakkad" },
          { "boundingBox": [969, 1279, 1019, 1280, 1001, 1371, 951, 1370], "text": ")" }
        ]
      }
    ]
  }
}
```
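Each boundingBox in the response holds eight integers. The sketch below assumes they are four (x, y) corner points, which matches the values above (for example, "Memories" spans x 153 to 378 and y 33 to 81), and converts them into an upright rectangle, e.g. for highlighting a word on the image. BoundingBox.ToRectangle is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: converts an 8-value boundingBox
// [x1, y1, x2, y2, x3, y3, x4, y4] into an axis-aligned rectangle.
public static class BoundingBox
{
    public static (int X, int Y, int Width, int Height) ToRectangle(IReadOnlyList<int> box)
    {
        if (box == null || box.Count != 8)
            throw new ArgumentException("Expected 8 values (four x, y pairs).", nameof(box));

        // Even indexes hold x coordinates, odd indexes hold y coordinates.
        var xs = box.Where((_, i) => i % 2 == 0).ToList();
        var ys = box.Where((_, i) => i % 2 == 1).ToList();

        int minX = xs.Min(), minY = ys.Min();
        return (minX, minY, xs.Max() - minX, ys.Max() - minY);
    }
}
```

For the slanted "by" line, [613, 1097, 680, 1050, 702, 1081, 635, 1129], this gives (613, 1050, 89, 79): the smallest upright rectangle containing all four corners.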


Output

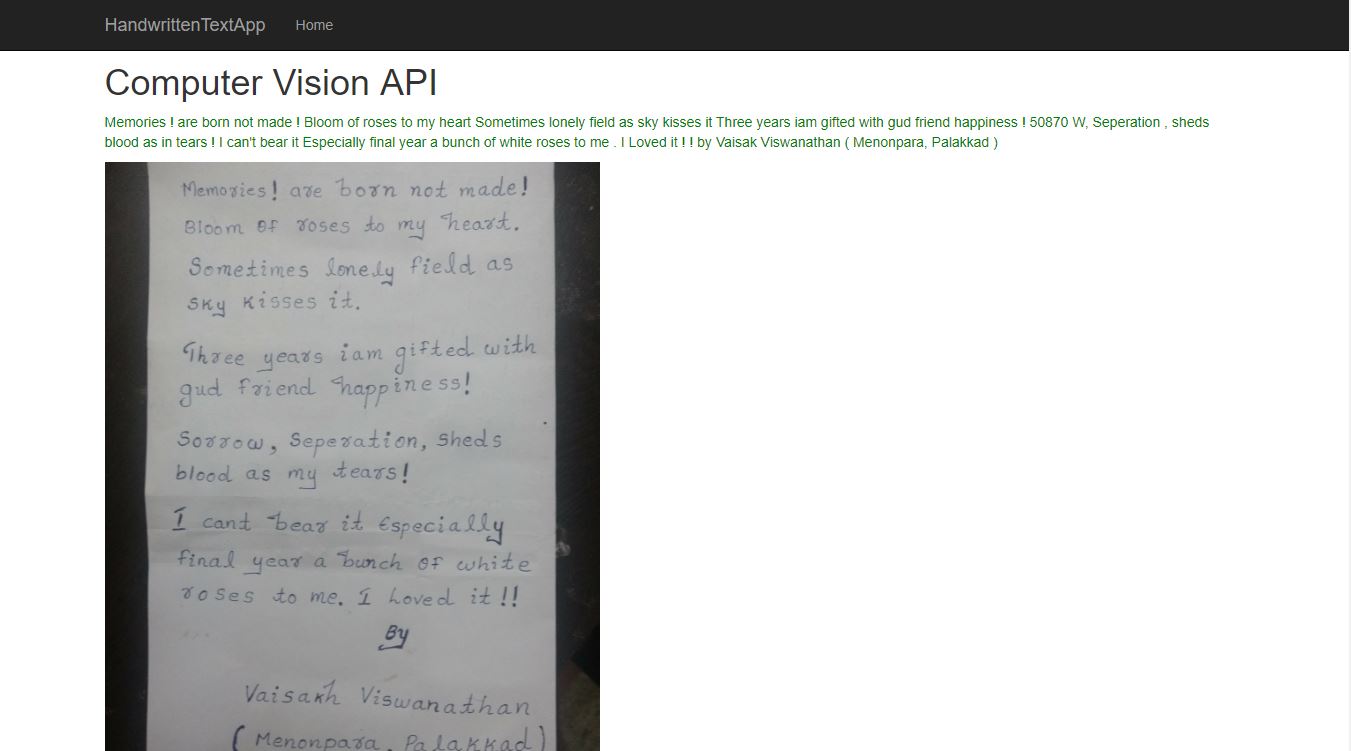

The output shows the handwritten content extracted from the image using the Computer Vision API. Nearly all of the content was extracted from the given image. Where some text is missing or wrong, the Vision algorithm was not able to identify that written content.

Note: Thanks to Vaisakh Viswanathan (the author of the poem).


Summary

In this article, we have learned how to extract handwritten content from an image using one of the important Cognitive Services APIs, the Computer Vision API. I hope this article is useful for all Azure Cognitive Services API beginners.

See Also

We recommend reading more articles related to ASP.NET Core and Azure.

- ASP.NET CORE 1.0: Getting Started

- ASP.NET Core 1.0: Project Layout

- ASP.NET Core 1.0: Middleware And Static files (Part 1)

- Middleware And Staticfiles In ASP.NET Core 1.0 - Part Two

- ASP.NET Core 1.0 Configuration: Aurelia Single Page Applications

- ASP.NET Core 1.0: Create An Aurelia Single Page Application

- Create Rest API Or Web API With ASP.NET Core 1.0

- ASP.NET Core 1.0: Adding A Configuration Source File

- Code First Migration - ASP.NET Core MVC With EntityFrameWork Core

- Building ASP.NET Core MVC Application Using EF Core and ASP.NET Core 1.0

- Send Email Using ASP.NET CORE 1.1 With MailKit In Visual Studio 2017

- ASP.NET Core And MVC Core: Session State

- Startup Page In ASP.NET Core

- Sending SMS Using ASP.NET Core With Twilio SMS API

- Create And Deploy An ASP.NET Core Web App In Azure

- Chat Bot with Azure Bot Service

- Channel Configuration - Azure Bot Service To Slack Application

- Cognitive Services : Analyze an Image Using Computer Vision API

- Cognitive Services – Optical Character Recognition (OCR) from an image using Computer Vision API And C#