Storage Architecture for Private Cloud

Note: This article is no longer being updated by the Microsoft team that originally published it. It remains online for the community to update, if desired. Current documents from Microsoft that help you plan for cloud solutions with Microsoft products are found at the TechNet Library Solutions or Cloud and Datacenter Solutions pages.

The storage design for any private cloud or virtualization-based solution is a critical element that is typically responsible for a large percentage of the solution’s overall cost, performance, and agility. The following sections outline Microsoft guiding principles for storage architecture.

Storage Options

Although many storage options exist, organizations should choose their storage devices based on their specific data management needs. Storage devices are typically modular and flexible midrange and high-end SANs. Modular midrange SANs are procured independently and can be chained together to provide greater capacity. They are efficient, can grow with the environment as needed, and require less up-front investment than high-end SANs. Large enterprises may have larger storage demands and may need to serve a larger set of customers and workloads. In this case, high-end SANs can provide the highest performance and capacity. High-end SANs typically include more advanced features such as continuous data availability through technologies like dispersed cluster support.

SAN Storage Protocols

Comparing iSCSI, FC, and FCoE

Fibre Channel has historically been the storage protocol of choice for enterprise data centers for a variety of reasons, including good performance and low latency. Over the past several years, however, the advancing performance of Ethernet from 1 Gbps to 10 Gbps and beyond has led to great interest in storage protocols that make use of Ethernet transport—such as Internet Small Computer System Interface (iSCSI) and more recently, Fibre Channel over Ethernet (FCoE).

A key advantage of the protocols that use the Ethernet transport is the ability to use a “converged” network architecture, where a single Ethernet infrastructure serves as the transport for both LAN and storage traffic. FCoE is an emerging technology that brings the benefits of an Ethernet transport while retaining the advantages of the Fibre Channel protocol and the ability to use Fibre Channel storage arrays.

Several enhancements to standard Ethernet are required for FCoE. This is commonly referred to as enhanced Ethernet or data center Ethernet. These enhancements require Ethernet switches that are capable of supporting enhanced Ethernet.

A common practice in large-scale Hyper-V deployments is to use both Fibre Channel and iSCSI. Either protocol can provide the host storage connectivity, whereas iSCSI is also used directly by guests (for example, for the shared disks in a guest cluster). In this case, although Ethernet and some storage I/O share the same pipe, segregation is achieved with VLANs, and quality of service (QoS) can be further applied by the OEM’s networking software.

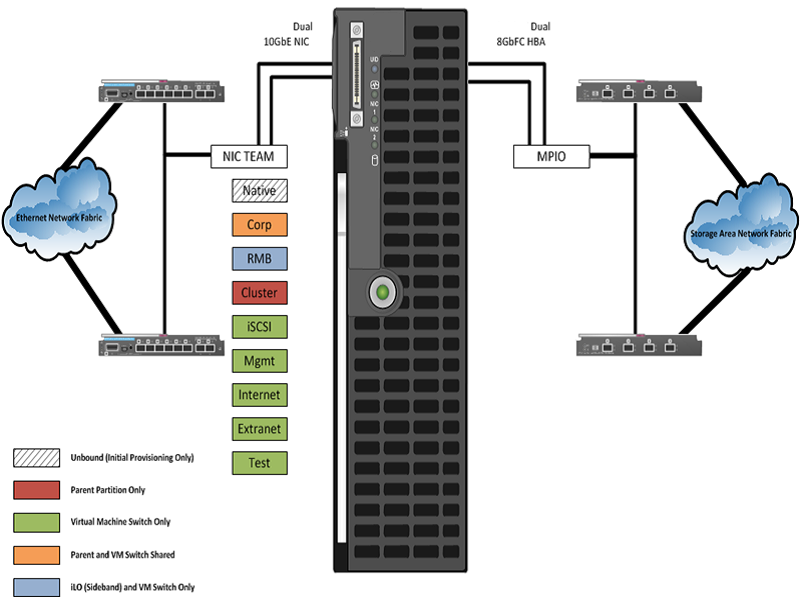

Figure 1: Example of blade server host design

Storage Network

Both iSCSI and FCoE utilize an Ethernet transport for storage networking. This provides another architecture choice in terms of whether to use a dedicated Ethernet network with separate switches, cables, paths, and other infrastructure, or instead to use a converged network where multiple traffic types are run over the same cabling and infrastructure.

The storage solution must provide logical or physical isolation between storage and Ethernet I/O. If it’s a converged network, QoS must be provided to guarantee storage performance. The storage solution must provide iSCSI connectivity for guest clustering, and there must be fully redundant, independent paths for storage I/O.

For FCoE, utilize standards-based converged network adapters, switches, and Fibre Channel storage arrays. Ensure that the selected storage arrays also provide iSCSI connectivity over standard Ethernet so that Hyper-V guest clusters can be utilized. If using iSCSI or Fibre Channel, make sure that there are dedicated network adapters, switches, and paths for the storage traffic.

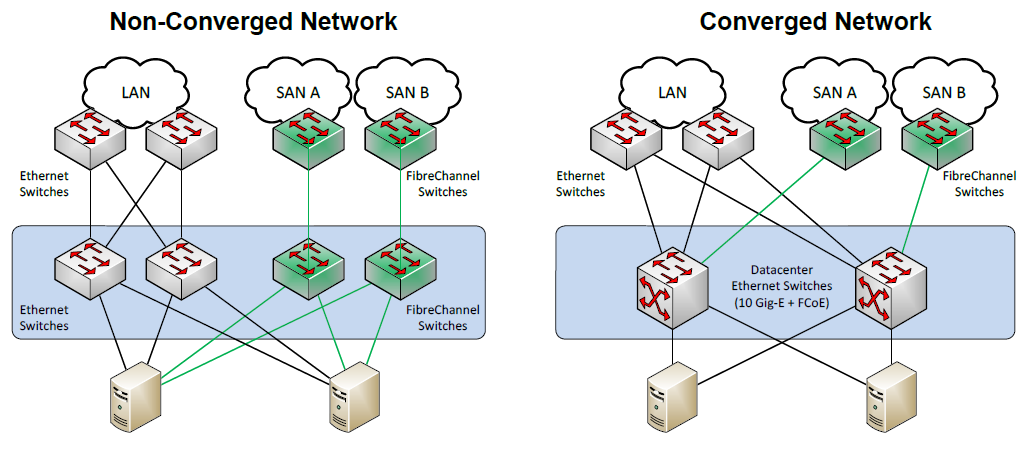

The diagram below illustrates the differences between a traditional architecture (left) with separate

Ethernet and Fibre Channel switches, each with redundant paths, compared to a converged

architecture (right) where both Ethernet and Fibre Channel (via FCoE) utilize the same set of cables

while still providing redundant paths. The converged architecture requires fewer switches and cables.

In the converged architecture, the switches must be capable of supporting enhanced Ethernet.

Figure 2: A traditional architecture compared to a converged architecture

Clustered File Systems

The choice of file system to run on top of the storage architecture is another critical design factor. Although not strictly required to support live migration and other advanced features, use of clustered file systems or Cluster Shared Volumes (CSV) as part of Windows Server 2008 R2 can provide significant manageability benefits. Both CSV and clustered file systems enable the use of larger logical unit numbers (LUNs) to store multiple VMs while providing the ability for each VM to be “live migrated” independently. This is enabled by providing all nodes with the ability to read and write from the shared LUN at the same time.

The decision to use CSV or a third-party solution that is compatible with Hyper-V and Windows failover clustering should be made by carefully weighing the advantages of each against the actual environment requirements.

Cluster Shared Volumes

Windows Server 2008 R2 includes the first version of Windows failover clustering to offer a distributed file access solution. CSV in Windows Server 2008 R2 is exclusively for use with the Hyper-V role and enables all nodes in the cluster to access the same cluster storage volumes at the same time. This enhancement eliminates the one VM per LUN requirement of previous Hyper-V versions without using a third-party file system. CSV uses standard New Technology File System (NTFS) and has no special hardware requirements. From a functional standpoint, if the storage is suitable for failover clustering, it is suitable for CSV.

CSV provides not only shared access to the disk, but also storage path I/O fault tolerance (dynamic I/O redirection). In the event that the storage path on one node becomes unavailable, the I/O for that node is rerouted via Server Message Block (SMB) through another node.

CSV maintains metadata information about the volume access and requires that some I/O operations take place over the cluster communications network. One node in the cluster is designated as the coordinator node and is responsible for these disk operations. However, all nodes in the cluster can read/write directly and concurrently to the same volume (not the same file) through the dedicated storage paths for disk I/O, unless a failure scenario occurs as described above.

CSV Characteristics

Table 1 below shows the characteristics that are defined by the New Technology File System (NTFS) and are inherited by CSV.

CSV Volume Sizing

Because all cluster nodes can access all CSV volumes simultaneously, IT managers can now use standard LUN allocation methodologies based on performance and capacity requirements of the expected workloads. Generally speaking, isolating the VM operating system I/O from the application data I/O is a good start, in addition to application-specific considerations such as segregating database I/O from logging I/O and creating SAN volumes and storage pools that factor in the I/O profile itself (that is, random read and write operations versus sequential write operations).

The architecture of CSV differs from traditional clustered file systems, which frees it from common scalability limitations. As a result, there is no special guidance for scaling the number of Hyper-V nodes or VMs on a CSV volume. The important thing to keep in mind is that all VM virtual disks running on a particular CSV will contend for storage I/O.

Also worth noting is that individual SAN LUNs do not necessarily equate to dedicated disk spindles. A SAN storage pool or RAID array may contain many LUNs. A LUN is simply a logical representation of a disk provisioned from a pool of disks. Therefore, if an enterprise application requires specific storage I/O operations per second (IOPS) or disk response times, IT managers must consider all the LUNs in use on that storage pool. An application that would require dedicated physical disks were it not virtualized may require dedicated storage pools and CSV volumes when running within a VM.
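The point about shared storage pools can be made concrete with a small check. The following is a minimal sketch, not a vendor tool: the LUN names, pool names, and IOPS figures are hypothetical, and it assumes you already have an estimate of peak IOPS per LUN and a rated IOPS figure per pool.

```python
from dataclasses import dataclass


@dataclass
class Lun:
    name: str
    pool: str       # storage pool (or RAID array) the LUN is carved from
    peak_iops: int  # estimated peak demand of the workloads on this LUN


def pool_demand(luns):
    """Sum the estimated peak IOPS of all LUNs, grouped by storage pool."""
    totals = {}
    for lun in luns:
        totals[lun.pool] = totals.get(lun.pool, 0) + lun.peak_iops
    return totals


def oversubscribed_pools(luns, pool_capacity_iops):
    """Return the pools whose combined LUN demand exceeds the pool's rating."""
    demand = pool_demand(luns)
    return sorted(
        pool for pool, iops in demand.items()
        if iops > pool_capacity_iops.get(pool, 0)
    )


# Hypothetical inventory: two LUNs share pool-a, one LUN sits alone on pool-b.
luns = [
    Lun("csv-os-01", "pool-a", 1500),
    Lun("csv-db-01", "pool-a", 4000),
    Lun("csv-log-01", "pool-b", 1200),
]
capacity = {"pool-a": 5000, "pool-b": 3000}  # hypothetical rated IOPS

print(pool_demand(luns))
print(oversubscribed_pools(luns, capacity))
```

Neither LUN on pool-a exceeds the pool's rating on its own, but together they do, which is why the evaluation has to be done per pool rather than per LUN.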

Consider the following when setting up your CSV infrastructure:

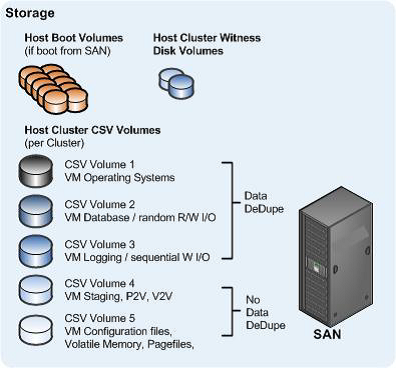

- At least four CSVs per host cluster are recommended for segregating operating system I/O, random R/W I/O, sequential I/O, and other VM-specific data.

- Create a standard size and IOPS profile for each type of CSV LUN to utilize for capacity planning. When additional capacity is needed, provision additional standard CSV LUNs.

- Consider prioritizing the network used for CSV traffic. For more information, see Designating a Preferred Network for Cluster Shared Volumes Communication in the Microsoft TechNet Library.

Figure 3: Example of a common CSV design for a large cluster
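The recommendation to plan capacity in units of a standard CSV LUN lends itself to simple arithmetic. The sketch below is illustrative only: the function name and the profile figures (2,048 GB and 2,000 IOPS per standard LUN) are assumptions, not values from this guidance.

```python
import math


def standard_luns_needed(required_gb, required_iops, lun_size_gb, lun_iops):
    """Number of standard CSV LUNs needed to satisfy both capacity and IOPS.

    Whichever dimension runs out first determines the count.
    """
    by_capacity = math.ceil(required_gb / lun_size_gb)
    by_iops = math.ceil(required_iops / lun_iops)
    return max(by_capacity, by_iops)


# Hypothetical workload: 7,000 GB and 9,000 IOPS, against a hypothetical
# standard LUN profile of 2,048 GB and 2,000 IOPS.
print(standard_luns_needed(7000, 9000, lun_size_gb=2048, lun_iops=2000))
```

In this example capacity alone would call for four LUNs, but the IOPS requirement pushes the count to five, which shows why each standard profile should state both size and IOPS.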

SAN Design

A highly available SAN design should have no single points of failure, including:

- Redundant power from independent power distribution units (PDUs)

- Redundant storage controllers

- Redundant storage paths (supported, for example, by redundant target ports or NICs per controller, redundant FC or IP network switches, and redundant cabling)

- Data storage redundancy, such as that provided by volume mirroring or by synchronous or asynchronous replication

Address the following elements when designing or modifying your SAN as the basis of your Hyper-V Cloud storage infrastructure:

- Performance

- Drive types

- Multipathing

- Fibre Channel SAN

- iSCSI SAN

- Data deduplication

- Thin provisioning

- Volume cloning

- Volume snapshots

Performance

Storage performance is a complex mix of drive, interface, controller, cache, protocol, SAN, HBA, driver, and operating system considerations. The overall performance of the storage architecture is typically measured in terms of maximum throughput, maximum I/O operations per second (IOPS), and latency or response time. Although each of these performance measurements is important, IOPS and latency are the most relevant to server virtualization.
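The three measurements are related, which helps when comparing vendor figures. The sketch below assumes a steady-state workload with a fixed I/O size and uses Little's law (IOPS is approximately the number of outstanding I/Os divided by latency); the function names and sample numbers are illustrative.

```python
def iops_from_latency(outstanding_ios, latency_ms):
    """Little's law: sustained IOPS ~= outstanding I/Os / average latency."""
    return outstanding_ios * 1000 / latency_ms


def throughput_mb_per_s(iops, block_kb):
    """Throughput implied by an IOPS figure at a fixed I/O size."""
    return iops * block_kb / 1024


# Hypothetical workload: 8 outstanding I/Os, 5 ms average latency, 8 KB blocks.
iops = iops_from_latency(outstanding_ios=8, latency_ms=5)
print(iops, "IOPS")
print(throughput_mb_per_s(iops, block_kb=8), "MB/s")
```

With small, random I/O of this kind, the implied throughput is far below what the interconnect can carry, which is consistent with IOPS and latency, rather than maximum throughput, being the limiting factors for virtualized workloads.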

Most modern SANs use a combination of high-speed disks, slower-speed disks, and large memory caches. A storage controller cache can improve performance during burst transfers or when the same data is accessed frequently by storing it in the cache memory, which is typically several orders of magnitude faster than the physical disk I/O. However, it is not a substitute for adequate disk spindles because caches are ineffective during heavy write operations.
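A simple weighted average shows why cache cannot replace spindles. This is a simplified model with assumed latencies (0.1 ms for cache, 6 ms for disk); real controllers behave in more complex ways, especially for writes.

```python
def effective_latency_ms(hit_ratio, cache_ms, disk_ms):
    """Average latency when a fraction of I/Os is served from cache."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_ms + (1.0 - hit_ratio) * disk_ms


# Assumed latencies: 0.1 ms from cache, 6 ms from disk.
for hit_ratio in (0.9, 0.5, 0.2):
    latency = effective_latency_ms(hit_ratio, cache_ms=0.1, disk_ms=6.0)
    print(f"hit ratio {hit_ratio:.0%}: {latency:.2f} ms")
```

As the fraction of I/Os served from cache falls, for example when sustained writes fill the cache, the average latency approaches that of the underlying disks.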

Drive Types

The type of hard drive utilized in the host server or the storage array has the most significant impact on the overall storage architecture performance. As with the storage connectivity, high IOPS and low latency are more critical than maximum sustained throughput when it comes to host server sizing and guest performance. When selecting drives, this translates into selecting those with the highest rotational speed and lowest latency possible. Utilizing 15K RPM drives over 10K RPM drives can result in up to 35 percent more IOPS per drive.
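The relationship between rotational speed and IOPS can be estimated with a common rule of thumb: one random I/O costs roughly an average seek plus half a rotation. The sketch below uses that approximation; the seek times (3.5 ms and 4.5 ms) are assumed, typical-looking values rather than figures from any drive specification.

```python
def estimated_iops(rpm, avg_seek_ms):
    """Rule-of-thumb random IOPS for a single rotating drive."""
    # On average the platter must turn half a rotation to reach the sector.
    rotational_latency_ms = 0.5 * 60_000 / rpm
    return 1000 / (avg_seek_ms + rotational_latency_ms)


iops_15k = estimated_iops(15_000, avg_seek_ms=3.5)  # assumed seek time
iops_10k = estimated_iops(10_000, avg_seek_ms=4.5)  # assumed seek time

print(f"15K RPM: {iops_15k:.0f} IOPS")
print(f"10K RPM: {iops_10k:.0f} IOPS")
print(f"gain:    {(iops_15k / iops_10k - 1):.0%}")
```

Under these assumptions the 15K RPM drive delivers about 36 percent more IOPS, which is in the same range as the figure quoted above. Actual results depend on the drive model and workload.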

Multipathing

In all cases, multipathing should be used. Generally, storage vendors build a device-specific module (DSM) on top of Windows Server 2008 R2 multipath I/O (MPIO) software. Each DSM and HBA has its own unique multipathing options, recommended number of connections, and other particulars.
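To illustrate what multipathing provides, the following toy model distributes I/O round-robin across paths and skips any path marked as failed. It is a conceptual sketch only: the class and path names are hypothetical, and it does not represent the MPIO or DSM interfaces, whose policies and options are vendor-specific.

```python
from itertools import cycle


class MultipathDevice:
    """Toy model of round-robin path selection with failover."""

    def __init__(self, paths):
        self._healthy = {path: True for path in paths}
        self._order = cycle(paths)
        self._count = len(paths)

    def mark_failed(self, path):
        self._healthy[path] = False

    def mark_restored(self, path):
        self._healthy[path] = True

    def next_path(self):
        """Return the next healthy path, skipping failed ones."""
        for _ in range(self._count):
            path = next(self._order)
            if self._healthy[path]:
                return path
        raise RuntimeError("no healthy storage path available")


device = MultipathDevice(["hba0-ctrlA", "hba1-ctrlB"])  # hypothetical paths
print([device.next_path() for _ in range(4)])  # alternates between paths

device.mark_failed("hba1-ctrlB")
print([device.next_path() for _ in range(4)])  # only the surviving path
```

With a single path, the same failure would raise the error immediately, which is the single point of failure that multipathing is meant to remove.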

Fibre Channel SAN

Fibre Channel is a supported storage connection protocol and is therefore a valid option.

iSCSI SAN

As with a Fibre Channel-connected SAN, which is naturally on its own isolated network, the iSCSI SAN must be on an isolated network, both for security and performance. Any standard networking practice for achieving this goal is acceptable, including a physically separate, dedicated storage network or a physically shared network with the iSCSI SAN running on a private VLAN. On a shared network, the switch hardware must provide class of service (CoS) or quality of service (QoS) guarantees for the private VLAN.

- Encryption and authentication. If multiple clusters or systems are used on the same SAN, proper segregation or device isolation must be provided. In other words, the storage used by cluster A must be visible only to cluster A, and not to any other cluster, nor to a node from a different cluster. We recommend the use of a session authentication protocol such as Challenge Handshake Authentication Protocol (CHAP). This provides a degree of security as well as segregation. Mutual CHAP or Internet Protocol Security (IPSec) can also be used.

- Jumbo frames. If supported at all points in the entire path of the iSCSI network, jumbo frames can increase throughput by up to 20 percent. Jumbo frames are supported in Hyper-V at the host and guest levels.
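Part of the jumbo frame benefit can be estimated from framing overhead alone. The sketch below assumes untagged Ethernet frames carrying IPv4 and TCP without options, and it ignores iSCSI PDU headers; the function names are illustrative.

```python
import math

# Per-frame Ethernet overhead on the wire, in bytes:
# preamble and start delimiter (8) + header (14) + FCS (4) + inter-frame gap (12).
ETHERNET_OVERHEAD = 38
# IPv4 header (20) + TCP header (20), assuming no options.
IP_TCP_HEADERS = 40


def wire_efficiency(mtu):
    """Fraction of bytes on the wire that carry TCP payload."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETHERNET_OVERHEAD)


def frames_per_megabyte(mtu):
    """Frames needed to carry 1,000,000 bytes of TCP payload."""
    return math.ceil(1_000_000 / (mtu - IP_TCP_HEADERS))


for mtu in (1500, 9000):
    print(
        f"MTU {mtu}: {wire_efficiency(mtu):.1%} efficient, "
        f"{frames_per_megabyte(mtu)} frames per MB"
    )
```

Framing overhead accounts for only a few percent of the difference. Under these assumptions the larger effect is that far fewer frames are needed per megabyte, which reduces per-frame processing on hosts and switches; how much of that translates into throughput depends on the hardware.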

Data Deduplication

Data deduplication can yield significant storage cost savings in virtualization environments. Common considerations include the performance impact during the deduplication cycle and the efficiency gained by locating similar data types on the same volume or LUN.

Thin Provisioning

Particularly in virtualization environments, thin provisioning is a common practice. This allows for efficient use of the available storage capacity. The LUN and corresponding CSV can grow as needed, typically in an automated fashion, to ensure availability of the LUN (auto-grow). However, storage can become overprovisioned in this scenario, so careful management and capacity planning are critical.
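Two numbers are useful for tracking overprovisioning: the ratio of provisioned to physical capacity, and the fraction of physical capacity actually consumed. The sketch below is a minimal illustration; the LUN sizes and the 80 percent alert threshold are assumptions, not recommended values.

```python
def overcommit_ratio(provisioned_gb, physical_gb):
    """Total capacity promised to thin LUNs relative to physical capacity."""
    return sum(provisioned_gb) / physical_gb


def needs_attention(used_gb, physical_gb, threshold=0.8):
    """True when consumed physical capacity reaches the alert threshold."""
    return used_gb / physical_gb >= threshold


# Hypothetical pool: 4 TB physical, three thin LUNs provisioned on top of it.
thin_luns_gb = [2048, 2048, 4096]
physical_gb = 4096

print(overcommit_ratio(thin_luns_gb, physical_gb))
print(needs_attention(used_gb=3400, physical_gb=physical_gb))
```

A ratio above 1.0 is normal for thin provisioning. The risk lies in consumed capacity approaching the physical limit before more disks are added, so the second check is the one to automate.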

Volume Cloning

Volume cloning is another common practice in virtualization environments. This can be used for both host and VM volumes to dramatically decrease host installation times and VM provisioning times.

Volume Snapshots

SAN volume snapshots are a common method of providing a point-in-time, instantaneous backup of a SAN volume or LUN. These snapshots are typically block-level and only utilize storage capacity as blocks change on the originating volume. Some SANs provide tight integration with Hyper-V, integrating both the Hyper-V VSS Writer on hosts and volume snapshots on the SAN. This integration provides a comprehensive and high-performing backup and recovery solution.
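The reason a snapshot consumes capacity only as blocks change can be shown with a toy copy-on-write model. Copy-on-write is one common technique and is used here as an assumption; arrays differ in how they implement snapshots, and the class below is purely illustrative.

```python
class Volume:
    """Toy block volume with copy-on-write snapshots."""

    def __init__(self, blocks):
        self.blocks = list(blocks)
        self.snapshots = []

    def snapshot(self):
        """Create a snapshot. It starts empty, so creation is instantaneous."""
        preserved = {}  # block index -> content at snapshot time
        self.snapshots.append(preserved)
        return preserved

    def write(self, index, data):
        """Preserve the old block in each snapshot that lacks it, then write."""
        for preserved in self.snapshots:
            preserved.setdefault(index, self.blocks[index])
        self.blocks[index] = data

    def read_snapshot(self, preserved, index):
        """Read a block as it was when the snapshot was taken."""
        return preserved.get(index, self.blocks[index])


volume = Volume(["a", "b", "c"])
snap = volume.snapshot()
print(len(snap))  # blocks held by the snapshot before any write

volume.write(1, "B")
print(len(snap))  # blocks held after one block changed
print(volume.read_snapshot(snap, 1), volume.blocks[1])
```

The snapshot holds no data until the first write after it was taken, and afterward holds only the original contents of the blocks that changed.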

Storage Automation

One of the objectives of the Hyper-V cloud solution is to enable rapid provisioning and deprovisioning of VMs. Doing so on a large scale requires tight integration with the storage architecture as well as robust automation. Provisioning a new VM on an already existing LUN is a simple operation. However, provisioning a new CSV LUN and adding it to a host cluster are relatively complicated tasks that should be automated.

Historically, many storage vendors have designed and implemented their own storage management systems, APIs, and command-line utilities. This has made it a challenge to use a common set of tools and scripts across heterogeneous storage solutions.

For the robust automation that is required in an advanced data center virtualization solution, preference is given to SANs that support standard and common automation interfaces.