Private Cloud Planning Guide for Service Delivery

This document provides guidance for the planning and design of Service Delivery in a private cloud. It addresses Service design considerations based on the Private Cloud Principles and Concepts. This guide should be used as an aid to operations, architects, and consultants who are designing Service Delivery processes, procedures, and best practices for a private cloud. The reader should already be familiar with the Microsoft Operations Framework (MOF) and Information Technology Infrastructure Library (ITIL) models as well as the Private Cloud Principles, Concepts, and Patterns described in this documentation.

Note:

This document is part of a collection of documents that comprise the Reference Architecture for Private Cloud document set. The Reference Architecture for Private Cloud documentation is a community collaboration project.

This article is no longer being updated by the Microsoft team that originally published it. It remains online for the community to update, if desired. Current documents from Microsoft that help you plan for cloud solutions with Microsoft products are found at the TechNet Library Solutions or Cloud and Datacenter Solutions pages.

1 The Private Cloud Service Delivery Layer

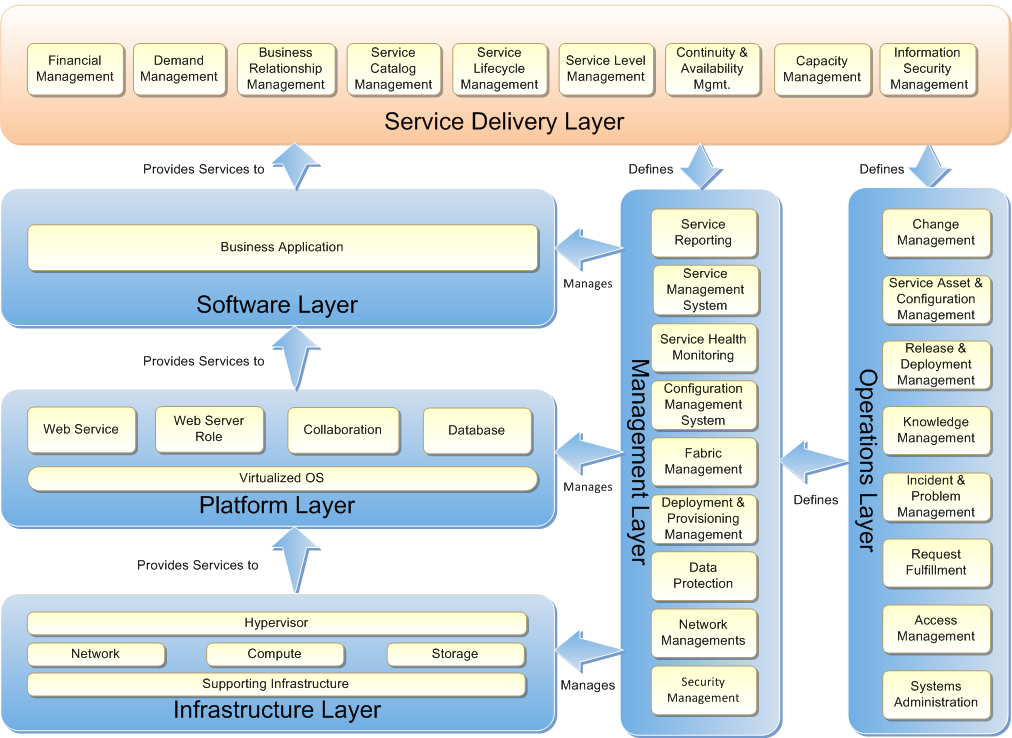

The Private Cloud Reference Model diagram with the Service Delivery Layer highlighted shows the primary functions within the Service Delivery layer that are impacted by the Private Cloud Principles and Concepts.

Figure 1: Private Cloud Reference Model

2 Service Delivery Layer

The Service Delivery Layer is the conduit for translating business requirements into Information Technology (IT) requirements for delivering a service. The Service Delivery Layer is the primary interface with the business and seeks to answer the following questions:

- What services does the business want?

- What level of service are they willing to pay for?

- How can IT leverage a private cloud to be a strategic partner to the business?

2.1 Financial Management

Financial Management incorporates the function and processes responsible for managing a service provider’s budgeting, accounting, and charging requirements.

2.1.1 Cost Transparency

A private cloud allows IT to provide greater cost transparency than what can be achieved in a traditional data center. Traditionally, the line between shared resources and dedicated resources can often become blurred, making it difficult to determine the actual cost of providing a given service. By taking a service provider’s approach to offering Infrastructure as a Service (IaaS), the metering of service consumption allows for a better understanding of the percentage of the overall infrastructure being consumed by a given service.

The two aspects of cost transparency that need to be considered are:

- Cost of Becoming an IaaS Provider: This represents the cost of having a datacenter available and ready for use. It includes the one-time or fixed cost of components like facilities, hardware, basic network bandwidth, and the power required to keep the data center on. It also includes the cost of human resources and maintenance and replacement of the hardware.

- Consumption Costs of Using a Private Cloud: This includes variable costs such as the additional energy cost needed to power compute for servers in use, and variable rates for network based on the bandwidth consumption. It is important to distinguish between these variable and fixed costs, as they may need to be approached differently in the cost model.

2.1.2 Service Classification

Service classification is covered in greater detail in the Service Catalog Management section of this document. However, from a Financial Management perspective, it is important to note that the primary driver behind service classification is workload characteristics, which in turn drive the cost. When applications are designed to handle hardware failure within the fabric, the cost of providing that fabric is significantly less. The infrastructure cost is higher for applications that cannot handle hardware failure gracefully and require live migration. The primary driver behind the differing costs is the cost of redundant power. Applications that need live migration must have greater infrastructure reliability, which requires redundant power. The cost of providing power redundancy should be charged only to those applications that require it.

This service classification may lead to three distinct environments within a data center, each with its own fixed cost. A traditional data center may be needed for hosting services that cannot be or should not be virtualized. There will be a high availability datacenter environment with redundant power to host applications that require live migration. Finally, there will be a standard datacenter environment without redundant power to host applications that are stateless and do not require live migration.

2.1.3 Consumption Based Pricing

In a Public Cloud offering, the consumer has a clearly defined consumption based price. They only pay for what they use. The provider takes the risk of making sure that the price they charge will cover their costs as a host and still make a profit.

When a business builds a private cloud and is, in effect, both the provider and the consumer, this model may not be the best approach. The business is not likely to be interested in making profit from their IaaS and may only wish to cover their costs. Also, depending on the nature of the business model, the cost of IaaS may be covered wholly by the business or there may be autonomous business units that require a clear cost separation. The following pricing options reflect these differing business models:

- Notional Pricing Model: The fixed cost of providing IaaS and the variable consumption cost of each hosted service are captured and shared with service owners. However, the total cost is not separated.

- Fixed + Variable Pricing Model: The fixed cost of each infrastructure environment is divided equitably amongst the business units that use the environment. The variable costs of consumption are then charged back to the consumer of each hosted service.

- Consumption Based Pricing Model: The fixed cost of providing IaaS is factored into the consumption price. This price is charged to the consumer of each hosted service based on their consumption.
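To make the difference between these options concrete, the following Python sketch computes charges for two business units under each model. It is an illustration only: the function names, the business unit names, and every figure are hypothetical, the fixed cost is split equally as one possible reading of "equitably," and the Notional model is assumed to mean that costs are reported but not invoiced.

```python
from typing import Dict


def notional_report(fixed_cost: float, usage: Dict[str, float],
                    variable_rate: float) -> Dict[str, object]:
    """Option 1 (assumed reading): report costs to service owners, invoice nothing."""
    return {
        "fixed_cost": fixed_cost,
        "variable_cost": {bu: used * variable_rate for bu, used in usage.items()},
    }


def fixed_plus_variable(fixed_cost: float, usage: Dict[str, float],
                        variable_rate: float) -> Dict[str, float]:
    """Option 2: split the fixed cost equally, then add each unit's variable cost."""
    fixed_share = fixed_cost / len(usage)
    return {bu: fixed_share + used * variable_rate for bu, used in usage.items()}


def consumption_based(fixed_cost: float, usage: Dict[str, float],
                      variable_rate: float) -> Dict[str, float]:
    """Option 3: fold the fixed cost into a single price per unit consumed."""
    total_usage = sum(usage.values())
    unit_price = variable_rate + fixed_cost / total_usage
    return {bu: used * unit_price for bu, used in usage.items()}


# Hypothetical inputs: consumption units used per business unit in one period.
usage = {"sales": 300, "finance": 100}
print(notional_report(8000, usage, 5))
print(fixed_plus_variable(8000, usage, 5))
print(consumption_based(8000, usage, 5))
```

With these sample figures, Options 2 and 3 recover the same total cost, but Option 3 shifts more of the fixed cost to the heavier consumer.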

The benefits and trade-offs of each model are outlined below.

| Option 1: Notional Pricing Model | |

| Benefit | Trade-off |

|

|

| Option 2: Fixed + Variable Pricing Model | |

| Benefit | Trade-off |

|

|

| Option 3: Consumption Based Pricing Model | |

| Benefit | Trade-off |

|

|

Regardless of the option that is used, the unit of consumption will need to be defined as a part of the Cost Model. There are many aspects of a private cloud such as power, compute, network, and storage that may be used as a unit of consumption. Power is likely to be the largest variable in the cost, but it is difficult to allocate specific power consumption to a given workload. There is a fairly close correlation between CPU utilization and power consumption and therefore CPU utilization by workload may be the most accurate way to reflect variable power consumption.
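As a minimal sketch of this approach, the variable power cost for a period could be divided among workloads in proportion to their CPU usage. The function name, the workload names, and the figures below are hypothetical, and CPU-hours is assumed as the utilization measure.

```python
from typing import Dict


def allocate_power_cost(power_cost: float,
                        cpu_hours: Dict[str, float]) -> Dict[str, float]:
    """Split a period's variable power cost by each workload's share of CPU-hours."""
    total = sum(cpu_hours.values())
    if total == 0:
        # No measured usage: nothing to allocate.
        return {workload: 0.0 for workload in cpu_hours}
    return {workload: power_cost * hours / total
            for workload, hours in cpu_hours.items()}


# Hypothetical example: 1,000 currency units of variable power cost.
print(allocate_power_cost(1000.0, {"web": 600, "batch": 300, "db": 100}))
```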

The Cost Model should also reflect the cost of Reserve Capacity. A discussion on calculating Reserve Capacity can be found in the Capacity Management section of this document. Depending on the model used, this cost can either be reflected as part of the fixed price charge or in the unit of consumption.

2.1.4 Encourage Desired Consumer Behavior

The ultimate goal of Financial Management is to encourage the desired consumer behavior. With cost transparency, consumption based pricing, and price based service classes, consumers can better understand the cost of the services they are consuming and are more likely to consume only what they really need. A private cloud model not only encourages desired behavior in consumers but also provides incentives to service owners and developers. After the cost differentiation across classes of service is known, the service owners are more likely to build or buy applications that do not require hardware redundancy and qualify for the least expensive class of service.

2.2 Demand Management

Demand Management involves activities that relate to understanding and influencing the customer demand for services and scaling capacity (up or down) to meet these demands. The principles of perceived infinite capacity and continuous availability are fundamental to the customer demand for Cloud-based services. Providing a resilient, predictable environment and managing capacity in a predictable way are both necessary for achieving these principles. Factors such as cost, quality, and agility are used to influence consumer demand for these services.

2.2.1 Perception of Infinite Capacity

In a datacenter, there is a delicate balance in maintaining the right amount of spare capacity. Enough spare capacity must be available to maintain the perception of infinite capacity, without paying for excessive spare capacity that remains unused. To this end, a strong relationship between IT and the business is required to ensure that the capacity meets the demand. Capacity planning is discussed in more detail in the Capacity Management section of this document. However, the key inputs around projecting future growth must be driven by the business. In order to be able to define the appropriate triggers for increasing data center capacity, IT must have a thorough understanding of the business's capacity needs, such as:

- Are there seasonal or cyclical capacity requirements (such as a holiday shopping season for an online retailer) that must be accounted for?

- What are the business growth expectations for the next quarter, year, and three years?

- What is the likelihood that there will be an accelerated growth requirement (caused by new markets, mergers, acquisitions, and so on) in that timeframe?

The greater the insight that IT has into the rhythm of the business, the more accurate their capacity plan will be; this will allow for seamless capacity growth with minimal business interruption.

Additionally, the initial service portfolio assessment is critical to determining the demand for each of the environments.

- What is the demand for the Standard Environment?

- What is the demand for the High Availability Environment?

- What is the expected growth for these two environments?

The answers to these questions will help ensure that the environments are right-sized and will have appropriate capacity plans.

2.3 Business Relationship Management

Business Relationship Management is the strategic interface between the business and IT. The business defines the functionality of the services that are needed and then partners with IT to provide these services. The business must also work closely with IT to ensure that the current and future capacity requirements are met.

2.3.1 Service Provider's Approach to Delivering Infrastructure

Mature Business Relationship Management is critical to achieving a service provider’s approach. IT must not remain just a cost center; instead it should become a trusted advisor to the business. With a service provider’s approach, IT is better positioned to help the business make informed decisions on how to consume the services they need while balancing cost, benefit, and risk.

Business Relationship Management begins with an analysis of the existing IT service portfolio. IT can begin to advise the business on its IT portfolio strategy by understanding key business drivers, the critical function of each service for the business, and the capability of the market to meet these critical functions. Key questions that need to be answered by IT include:

- What are the key business drivers (Cost, Quality, and Agility)?

- Does the market offer a service that addresses the service requirements and business drivers? If so, what are the risks, trade-offs, costs, and benefits of leveraging the market?

- Can the service requirements and business drivers be met internally through a private cloud? If so, what are the risks, trade-offs, costs, and benefits of leveraging an internal private cloud?

A key service attribute that will become increasingly important, as Cloud services become more readily available, is service portability. As the market matures, services that are designed for portability will be best positioned to leverage the marketplace. It is beneficial to evaluate if a service (or components of the service) can be moved from internal IT to a Public Cloud provider, or from one Public Cloud provider to another to take advantage of changes in the marketplace. Services that are designed for portability will be in a position to benefit from these possibilities as they emerge. Other key questions that should be asked as part of portfolio analysis are:

- Is this service portable as it exists today?

- Is it likely that this service may benefit from portability capabilities in the future?

- Can this service be made portable?

For each service that will be provided in-house, the appropriate class of service will need to be determined. A more detailed discussion on classes of service can be found in the Financial Management and Service Catalog Management sections of this document. Services that cannot be hosted in a private cloud (or lack portability) require live migration and come at greater cost and reduced flexibility. These services should have an end-of-life plan and should be targeted for eventual replacement by less costly and more flexible options.

2.4 Service Catalog Management

Service Catalog Management involves defining and maintaining a catalog of services offered to consumers. This catalog will list the following:

- The classes of service that are available.

- The requirements to be eligible for each service class.

- The service level attributes and targets that are included with each service class (such as availability targets).

- The Cost Model for each service class.

The service catalog may also include specific Virtual Machine (VM) templates, such as a high compute template, designed for different workload patterns. Each template will define the VM configuration specifics, such as the amount of allocated CPU, memory, and storage.

2.4.1 Service Provider's Approach to Delivering Infrastructure

A public service provider offers a small number of service options to the consumer. This gives the provider greater predictability. The consumer benefits from the cost savings that come with standardization by giving up a degree of flexibility. This same approach needs to be taken in an in-house private cloud. Because the workloads have been abstracted from the physical hardware, the consumer no longer needs to be concerned about specifics of the hardware. They only need to choose the VM template that provides the right balance of availability, performance, security characteristics, and cost.

Financial Management, Demand Management, and Business Relationship Management all provide key inputs necessary for designing a service catalog. From these management activities, a number of key questions will need to be answered and reflected in the service catalog including:

- What classes of service will be included?

- What VM templates are needed to meet the workloads that will be hosted in each service class?

- What will be the cost of each template for each service class?

- What are the eligibility requirements for each class of service?

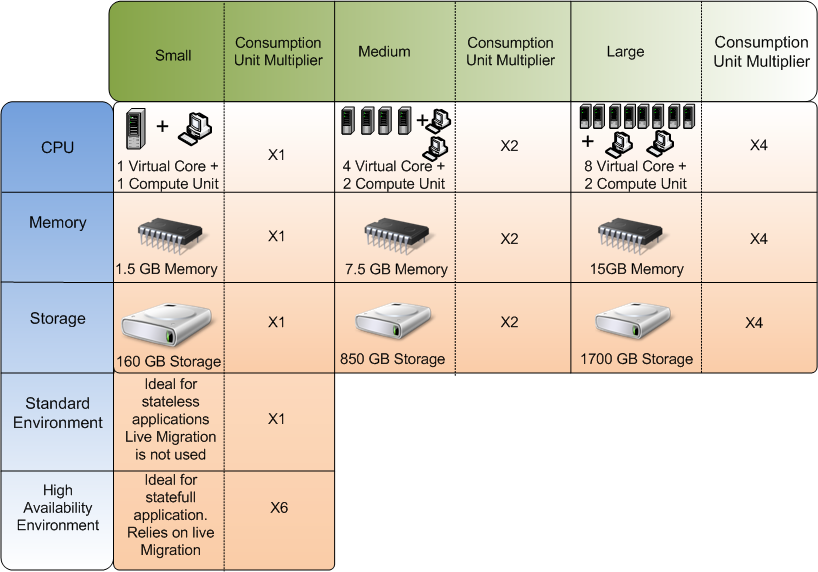

Below is a sample of what a service catalog may look like. The Compute Unit will be defined based on a logical division of the Random Access Memory (RAM) and CPUs of the physical hosts. The Consumption Unit will be defined based on the Cost Model described in the Financial Management portion of this document. This will be the base unit of consumption that will be charged to the consumer. If the selected VM had a small configuration for CPU, memory, and storage and was housed in the standard environment, the cost for the VM would be 1 Consumption Unit. If a larger configuration for CPU, memory, or storage was selected, or if the workload required live migration and redundant power, multipliers would be applied to the cost of the VM.

For example, if the VM required medium CPU (x2), large memory (x4), small storage (x1), and required live migration (x6), the total cost of this VM would be 48 Consumption Units.
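The arithmetic in this example is a product of the selected multipliers (2 x 4 x 1 x 6 = 48). The Python sketch below reproduces it. Only the multipliers named in the text are included; the table and function names are hypothetical, and a real catalog would list every size it offers.

```python
# Multipliers taken from the example in the text; a "small" option and the
# standard environment are the 1x baseline. Other sizes are intentionally
# omitted because the sample catalog values are not reproduced here.
CPU = {"small": 1, "medium": 2}
MEMORY = {"small": 1, "large": 4}
STORAGE = {"small": 1}
ENVIRONMENT = {"standard": 1, "high_availability": 6}  # HA = live migration


def consumption_units(cpu: str, memory: str, storage: str, environment: str) -> int:
    """Cost of one VM in Consumption Units: the product of all multipliers."""
    return CPU[cpu] * MEMORY[memory] * STORAGE[storage] * ENVIRONMENT[environment]


print(consumption_units("small", "small", "small", "standard"))            # 1
print(consumption_units("medium", "large", "small", "high_availability"))  # 48
```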

Another possible factor that is not shown in this sample but may be a service catalog option is input/output per second (IOPS). There may be a standard IOPS and a high IOPS option with an associated Consumption Unit multiplier.

Figure 2: Sample Service Catalog

2.5 Service Life Cycle Management

Service Life Cycle Management takes an end-to-end management view of a service. There is a natural journey that takes place from the identification of a business need through Business Relationship Management to the time when that service becomes available.

There are two aspects of Life Cycle Management that need to be discussed in the context of a private cloud. Firstly, what is unique about managing the life cycle of services end-to-end in a private cloud? Secondly, how does a private cloud enable Life Cycle Management for the services it hosts?

2.5.1 Service Provider's Approach to Delivering Infrastructure

Maturely managing the Service Life Cycle of a datacenter requires that a service provider’s approach be taken. A clear process needs to be defined for identifying when components need to be added to the data center and when they should be retired. A more detailed description of identifying capacity growth is provided in the Capacity Management section of this document. After the need to add additional capacity has been identified, a managed approach to capacity growth is necessary for Service Life Cycle Management to provide predictability within the data center.

The Scale Unit simplifies the process of adding new components to a datacenter. The models and quantities of hardware for the Scale Unit are known and therefore the procurement cycle becomes more predictable.

Hardware replacement should also become predictable and ease the burden of hardware budgeting. As part of the Service Life Cycle, hardware should be replaced regularly per the manufacturer’s recommendations. Hardware is often kept in production long after the recommended replacement date, driven by the mindset that, “if it’s not broken, don’t fix it.” There is risk in keeping hardware in production past its recommended life expectancy. The ongoing maintenance costs may go up because there will likely be an increase in incidents associated with the old hardware. When the hardware finally fails, the cost associated with the service outage and the management of the incident will also add to the cost of extending the life of the hardware. It becomes clear that any savings from extending the life of hardware will typically not outweigh the benefits of increased stability and predictability that come with regular hardware replacement.

2.5.2 Homogenization of Physical Infrastructure

If you view a cloud from the perspective of IaaS, the workloads on a particular VM are irrelevant. Yet a cloud, by its very nature, can assist with the Life Cycle Management of those services. By providing a homogenized infrastructure, a cloud reduces environmental variation and provides more predictable and consistent development, testing, and production environments. Infrastructure homogenization combined with virtualization reduces the risks traditionally associated with the Deliver phase of a life cycle and facilitates a more rapid approach to server deployment. It is this ability to rapidly deploy services without compromising risk management that makes this a compelling option.

2.5.3 Cost Transparency

Cost transparency also provides incentives to service owners to think about service retirement. In a traditional data center, services may fall out of use, but often there is no consideration of how to retire an unused service. The cost of ongoing support and maintenance for an under-utilized service may be hidden by the cost model of the data center. In a private cloud, the monthly consumption cost for each VM can be provided to the business and to the consumers, thus encouraging VM owners to retire unused services and reduce cost.

2.6 Service Level Management

The Service Level Management process is responsible for negotiating Service Level Agreements (SLAs) and ensuring these agreements are met. In a private cloud, these agreements will define the targets of cost, quality, and agility by service class and the metrics for measuring the successful achievement of each.

2.6.1 Service Provider's Approach to Delivering Infrastructure

A high degree of Service Level Management maturity is necessary in maintaining a datacenter; this may require a re-examination of the existing approaches to defining SLAs. SLAs for fabric availability will need to be defined in terms of the environment. For example, the Standard Environment, from which power redundancy has been removed, may have a Service Level Target of 99.9 percent fabric availability. On the other hand, the High Availability Environment may have a Service Level Target of 99.99 percent fabric availability, reflecting the fact that this environment will have power redundancy.
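To put these example targets in perspective, an availability percentage can be converted into the downtime it permits. The Python sketch below assumes a 365-day year and continuous (24x7) measurement; the function name is hypothetical. Under those assumptions, 99.9 percent allows roughly 8.76 hours of downtime per year, while 99.99 percent allows roughly 53 minutes.

```python
HOURS_PER_YEAR = 365 * 24  # assumes a non-leap year measured around the clock


def allowed_downtime_hours(availability_percent: float) -> float:
    """Hours of downtime per year permitted by an availability target."""
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


for target in (99.9, 99.99):
    hours = allowed_downtime_hours(target)
    print(f"{target}% -> {hours:.2f} hours ({hours * 60:.1f} minutes) per year")
```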

Most importantly, the SLA of a datacenter should focus primarily on measuring the quality and consumer satisfaction of the service delivery. This is much more important than the percentage of up-time. Consumers want the perception of continuous availability and infinite capacity. They want an elastic infrastructure. They want cost transparency and consumption based pricing. What are the additional metrics that should be outlined in the SLA to capture this quality and satisfaction?

Additional questions that may need to be answered include:

- In addition to availability targets, will resiliency and reliability targets be included in the SLA?

- How will these metrics be measured and reported?

- How will the Consumption Unit be defined, implemented, measured, and reported?

- How will the Reserve Capacity be monitored and reported? What will trigger the execution of the capacity plan? How will the business communicate known capacity increases (for example, increase through an acquisition) to IT?

2.7 Continuity and Availability Management

Availability Management defines the processes necessary to achieve the perception of continuous availability. Continuity Management defines how risk will be managed in a disaster scenario and ensures that minimum service levels are maintained.

2.7.1 Holistic Approach to Availability

Strategies for achieving availability will differ across the standard and high availability environments based on requirements of the hosted workloads. In the standard environment, redundant power has been removed, putting the burden of redundancy on the application. In this environment, there is a high likelihood of an Uninterruptible Power Supply (UPS) failure at some point, which will cause a Fault Domain failure.

The details of constructing a Health Model are covered in the Planning and Design Guide for Systems Management. From the continuity and availability perspective, the most critical aspect of achieving resiliency is to understand the health of the UPS. Early signs of failing UPS health should be closely monitored and should automatically trigger the relocation of VMs in the affected Fault Domain. In the event there is a Fault Domain failure without warning, the management system should ideally detect the fault and restart the VMs on a functional Fault Domain.
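The two responses described above (relocate on early warning, restart after an unannounced failure) can be sketched as a simple decision rule. This is an illustration under stated assumptions, not the behavior of any particular management product: the `FaultDomain` type, its fields, and the `plan_actions` function are hypothetical, and a real system would draw these signals from its Health Model.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class FaultDomain:
    name: str
    ups_healthy: bool  # False when early signs of UPS failure are observed
    online: bool       # False when the domain has already failed
    vms: List[str]


def plan_actions(domains: List[FaultDomain]) -> Dict[str, str]:
    """Map each affected VM to 'relocate' (domain still up) or 'restart' (domain down)."""
    actions: Dict[str, str] = {}
    for domain in domains:
        if not domain.online:
            action = "restart"    # failure without warning: restart elsewhere
        elif not domain.ups_healthy:
            action = "relocate"   # early warning: move VMs before the failure
        else:
            continue              # healthy domain: nothing to do
        for vm in domain.vms:
            actions[vm] = action
    return actions


domains = [
    FaultDomain("rack-1", ups_healthy=True, online=True, vms=["vm-a"]),
    FaultDomain("rack-2", ups_healthy=False, online=True, vms=["vm-b"]),
    FaultDomain("rack-3", ups_healthy=False, online=False, vms=["vm-c"]),
]
print(plan_actions(domains))
```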

2.7.2 Drive Predictability

The benefits and trade-offs of homogenized infrastructure are discussed in the Release and Deployment Management section of the Private Cloud Planning Guide for Operations. The less homogeneity there is in the environment, the greater the likelihood that this differentiation could cause or extend the length of an outage. Therefore, the extent of homogenization should be factored in when determining achievable availability targets.

When implementing a private cloud, existing Business Continuity and Disaster Recovery (BC/DR) plans should be re-examined. In a disaster recovery scenario, the recovery environment may be architected to include the standard and high availability environments though this is not a requirement. The recovery environment may not be dynamic, but a more traditional virtualized data center. The virtualized workloads could still be deployed and run in this environment, but there is likely to be a loss of resiliency. The most important aspect of continuity is ensuring that VMs are replicated and can be restarted in the recovery environment. Regularly testing this functionality should become part of the existing BC/DR plan.

2.8 Capacity Management

Capacity Management defines the processes necessary for achieving the perception of infinite capacity. Capacity needs to be managed to meet existing and future peak demands while controlling under-utilization. Business Relationship and Demand Management are key inputs into defining Capacity Management requirements.

2.8.1 Capacity Plan

The most fundamental aspect of maintaining the perception of infinite capacity is the development and execution of a mature Capacity Plan. Inputs to the plan need to include Reserve Capacity requirements, current capacity usage patterns, business input on projected growth, and an understanding of vendor procurement processes. The more accurate this information is, the more accurate the Capacity Plan will be.

The calculation for determining Reserve Capacity is detailed in the Patterns section of the Principles, Concepts, and Patterns document. The Reserve Capacity is not considered part of the available Resource Pool as this capacity has been reserved to ensure availability during Fault Domain failure, upgrades, and Resource Decay. Therefore, the Reserve Capacity is subtracted from the Resource Pool, leaving the Total Resource Units.

Initially, the business will provide a projected rate of growth. This projection should be regularly reviewed with the business as part of Business Relationship Management. This projected growth rate will be converted into the Projected Consumption Rate. The Projected Consumption Rate is a reflection of how many Available Resource Units are consumed each day. As historical data becomes available, Projected Consumption Rate should be compared to the Actual Consumption Rate and may need to be altered based on actual consumption.

An agreement will need to be reached with the suppliers on how long it will take to procure a Scale Unit after an order has been submitted. This length of time, along with the time allotted for internal procurement processes and installation, will provide the Procurement Duration. Procurement Consumption (the number of additional Available Resource Units that will be consumed during the Procurement Duration) is also factored in; it is calculated by multiplying the Procurement Duration by the Actual Consumption Rate.

Lastly, the Safety Threshold should be assigned. This is an additional number of units held in reserve to reduce risk. In a green field scenario, there may not be historical data on capacity usage. Similarly, it may be difficult to get accurate growth projections from the business. In these situations, a larger safety threshold should be allocated. Less information means that there is greater risk, so more investment needs to be made to reduce it. Over time, as historical data becomes available and actual growth projections become more predictable, this becomes less of a risk and the size of the safety threshold can be lessened.

Here is the formula that shows how these inputs can be used to calculate the Scale Unit Threshold, or minimum available resource units allowed before the procurement process is triggered.
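The original formula is not reproduced in this text. Reconstructed from the definitions above and from the worked example later in this section (where 20.4 + 10 gives 30.4 units), it appears to be:

$$
\text{Scale Unit Threshold} = \underbrace{(\text{Procurement Duration} \times \text{Consumption Rate})}_{\text{Procurement Consumption}} + \text{Safety Threshold}
$$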

Here is the formula to calculate the Scale Time Threshold, or the number of days remaining before the procurement process is triggered. To know how many Available Resource Units remain, subtract the Consumed Resource Units from the Total Resource Units.
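The original formula is not reproduced in this text. The following reconstruction is consistent with the worked example later in this section, which yields 56.8 days when the unrounded Consumption Rate of 125/365 is used:

$$
\text{Scale Time Threshold} = \frac{\text{Available Resource Units} - \text{Scale Unit Threshold}}{\text{Consumption Rate}},
\qquad
\text{Available Resource Units} = \text{Total Resource Units} - \text{Consumed Resource Units}
$$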

For example, let’s assume the Resource Pool, after removing Reserve Capacity, can host 500 Consumption Units (as defined in the service catalog). This gives us 500 Total Resource Units. Currently 450 units have been consumed, leaving us with 50 Available Resource Units.

The business expects a growth rate of 25 percent this year. Applied to the 500 Total Resource Units, this means we will need an additional 125 VMs by the end of the year (treating each VM as one unit). If we divide 125 VMs by 365 days, we get a Consumption Rate of 0.34 (the average additional VMs needed per day).

Feeling fairly confident in the projections, we will allocate a Safety Threshold of 10 Units.

It was determined that the Procurement Duration is 60 days. During the 60 days of the procurement process, there will be a growth of 0.34 VMs per day (our Consumption Rate); therefore the Procurement Consumption is 20.4 Units (60 x 0.34).

Based on the above formulas, we now know that the Scale Time Threshold is 56.8 days, meaning we need to initiate the procurement process in 56 days. We also know that the Scale Unit Threshold is 30.4 units, meaning we should also initiate the procurement process if the available resources are less than 31.
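The worked example can be checked with a short Python sketch. It assumes that the Scale Unit Threshold is the Procurement Consumption plus the Safety Threshold, and that the Scale Time Threshold is the remaining headroom above that threshold divided by the Consumption Rate; the function and parameter names are hypothetical. Using the unrounded rate of 125/365 reproduces the 56.8 days quoted above. The Scale Unit Threshold comes out at about 30.5 rather than 30.4 because the text rounds the rate to 0.34 before multiplying.

```python
from typing import Tuple


def scale_thresholds(total_units: float, consumed_units: float,
                     consumption_rate: float, procurement_days: float,
                     safety_threshold: float) -> Tuple[float, float]:
    """Return (Scale Unit Threshold in units, Scale Time Threshold in days)."""
    available = total_units - consumed_units
    procurement_consumption = procurement_days * consumption_rate
    unit_threshold = procurement_consumption + safety_threshold
    time_threshold = (available - unit_threshold) / consumption_rate
    return unit_threshold, time_threshold


rate = 125 / 365  # 25 percent growth on 500 units, spread over one year
unit_threshold, time_threshold = scale_thresholds(
    total_units=500, consumed_units=450, consumption_rate=rate,
    procurement_days=60, safety_threshold=10)
print(round(unit_threshold, 1), round(time_threshold, 1))  # 30.5 56.8
```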

2.8.2 Scale Unit

Another important aspect of Capacity Management is determining the appropriate size for Scale Units. Looking specifically at the Compute Scale Unit, the Scale Unit must be at least as large as the Fault Domain in order to maintain the Reserve Capacity equation. For standard datacenter environments, it is likely that there will be a single UPS per rack, therefore the Fault Domain and minimum Scale Unit size should probably be the rack as well. However, depending on the size of the data center, the Scale Unit may be multiple racks.

While there isn’t a simple formula to determine the appropriate sized Scale Unit, there are a number of factors to consider. If the Consumption Rate is high, Procurement Duration is long, and the frequency of initiating the procurement process is high, then the size of the Scale Unit should be increased. On the other hand, if the Consumption Rate is low and the available Resource Units remain high, this indicates that there is excess capacity that remains unused and therefore a smaller Scale Unit might be more appropriate.

2.9 Information Security Management

Information Security Management ensures that the requirements for confidentiality, integrity, and availability of the organization’s assets, information, data, and services are maintained. An organization’s information security policies will drive the architecture, design, and operations of its datacenter. Considerations for resource segmentation and multi-tenancy requirements must be included.

2.9.1 Service Provider's Approach to Delivering Infrastructure

A service provider must provide adequate security countermeasures and mitigation plans in the event of a security issue. This includes having a formalized Security Risk Management process for identifying threats and vulnerabilities. A formal threat analysis and vulnerability assessment should be performed on an ongoing basis as the organization's risk profile changes because of environment, technology, business ventures, business process, and procedures. Data classification schemes and information compliance can help determine privacy requirements. These requirements may dictate a tenancy strategy for how Resource Pools are segmented, based on the risk of data leakage.

2.9.2 Holistic Approach to Availability

Availability is one of the primary goals of security. Looking at the problem of availability from a holistic point of view allows for effective deployment of countermeasures. Deploying countermeasures at each layer can add complexity and thereby lower agility. The defense-in-depth strategy is still a viable strategy in a datacenter, but it is important to understand how the countermeasures work together from end to end. If too many security measures are built into each component or layer without looking at the problem in its entirety, resiliency could be hampered. For example, one effective countermeasure is to transfer workloads to newly provisioned VMs periodically, which minimizes the risk that malware or a misconfigured item can be exploited against the datacenter.

2.9.3 Homogenization of Physical Infrastructure

Having a more uniform set of devices to manage makes it easier to standardize configurations and keep them up to date. As the number of permutations of devices is reduced, Patch Management implementation and testing become easier, timelier, and more reliable.
