Logic Apps: Image OCR and Detected Text Analysis Using Cognitive Computer Vision and Text Analytics API

What are Logic Apps?

Logic Apps are integration workflows hosted on Azure that are used to create scalable integrations between systems. They are easy to design and connect disparate systems through many out-of-the-box connectors, with the option to build custom connectors for specific purposes. This makes integration easier than before: designs that used to be complex can be put in place and running as a workflow with a minimum of steps.

What are Cognitive Services?

As per Microsoft:

Microsoft Cognitive Services (formerly Project Oxford) are a set of APIs, SDKs and services available to the developers to make their applications more intelligent, engaging and discoverable. Microsoft Cognitive Services expands on Microsoft's evolving portfolio of machine learning APIs and enables developers to easily add intelligent features such as emotion and video detection; facial, speech and vision recognition; and speech and language understanding into their applications. Our vision is for more personal computing experiences and enhanced productivity aided by systems that increasingly can see, hear, speak, understand and even begin to reason.

Scope

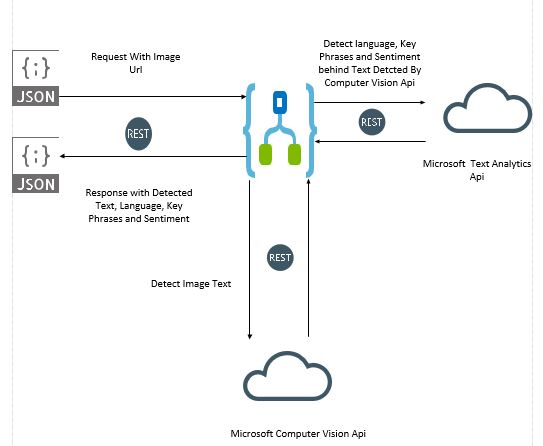

Microsoft has released connectors for the Computer Vision API cognitive service, which make it easy to integrate the service through Logic Apps into systems that deal with intelligent image recognition. These connectors are in preview at the moment, so they can be used only in certain ways and their results come with some limitations; once they reach general availability (GA), it can be assumed that they will function at their full capacity. This article discusses a business flow that accepts an image URL as input, uses the Computer Vision API to extract the text from the image, and then uses the Text Analytics API to detect the language of the extracted text, its key phrases, and the sentiment behind it.

Flow for Image Analysis

- Accept the URL of the image to be analyzed over an HTTP call as a JSON request payload.

- Call the Computer Vision API to detect the text in the image.

- Call the Text Analytics API to detect the language of the text.

- Call the Text Analytics API to detect the key phrases in the detected text.

- Call the Text Analytics API to detect the sentiment in the text.

- Return the detected text, text language, key phrases, and sentiment back over an HTTP call as a JSON response payload.
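The steps above can be sketched outside the designer as a plain function. This is only an illustration of the order of the calls, not the Logic App itself: the function name `analyze_image` and the four callables are hypothetical stand-ins for the connector actions, and the output keys are borrowed from the sample responses shown later in this article.

```python
from typing import Any, Callable, Dict, List


def analyze_image(
    image_url: str,
    ocr: Callable[[str], str],
    detect_language: Callable[[str], List[Dict[str, Any]]],
    extract_key_phrases: Callable[[str], List[str]],
    detect_sentiment: Callable[[str], float],
) -> Dict[str, Any]:
    """Run the analysis steps in the order listed above.

    Every callable is a hypothetical stand-in for one connector action.
    """
    text = ocr(image_url)  # extract the text from the image first
    return {
        "DetectedLanguage": detect_language(text),
        "KeyPhrasesinText": extract_key_phrases(text),
        "TextExtractedFromImage": text,
        "UserSentimentValue": detect_sentiment(text),
    }


# Stubbed usage: no Azure call is made here, the lambdas return fixed values.
result = analyze_image(
    "https://example.com/sample.png",
    ocr=lambda url: "I had a great experience!",
    detect_language=lambda t: [{"name": "English", "iso6391Name": "en", "score": 1}],
    extract_key_phrases=lambda t: ["great experience"],
    detect_sentiment=lambda t: 0.99,
)
print(result["TextExtractedFromImage"])
```

Note that the three Text Analytics steps all depend only on the OCR output, not on each other, which is why they can be configured independently in the designer.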

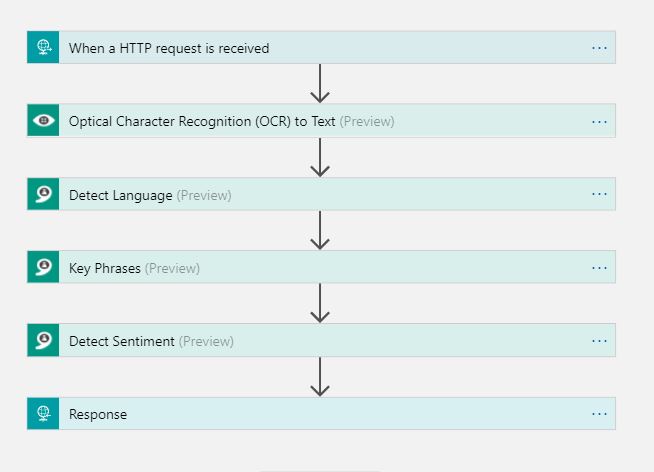

Logic App Flow

The following figure describes the Logic App flow that will be used for the sample image OCR and text analysis.

Design

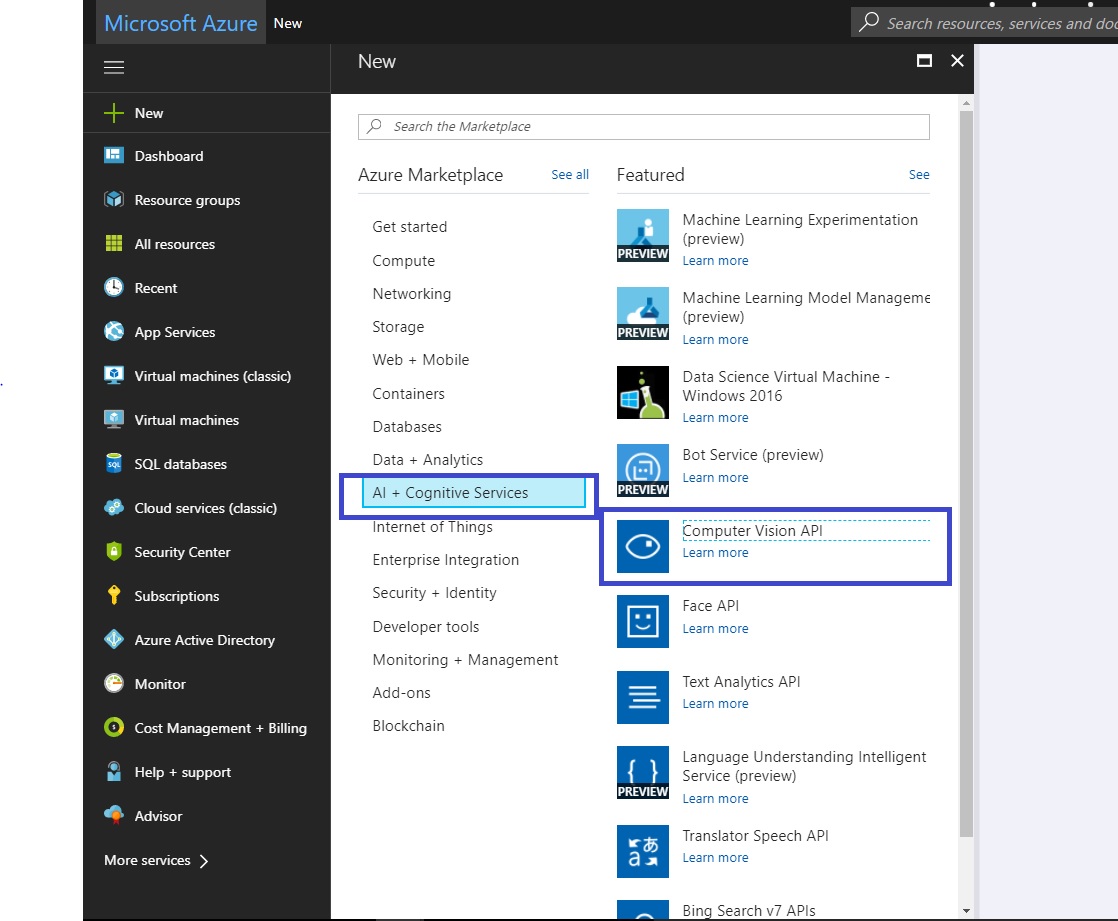

Computer Vision API Account

To use the Computer Vision API connectors in Logic Apps, an API account for the Computer Vision API must first be created. Once this is done, the connectors are available to integrate the Computer Vision API into Logic Apps. The following screenshots show the process.

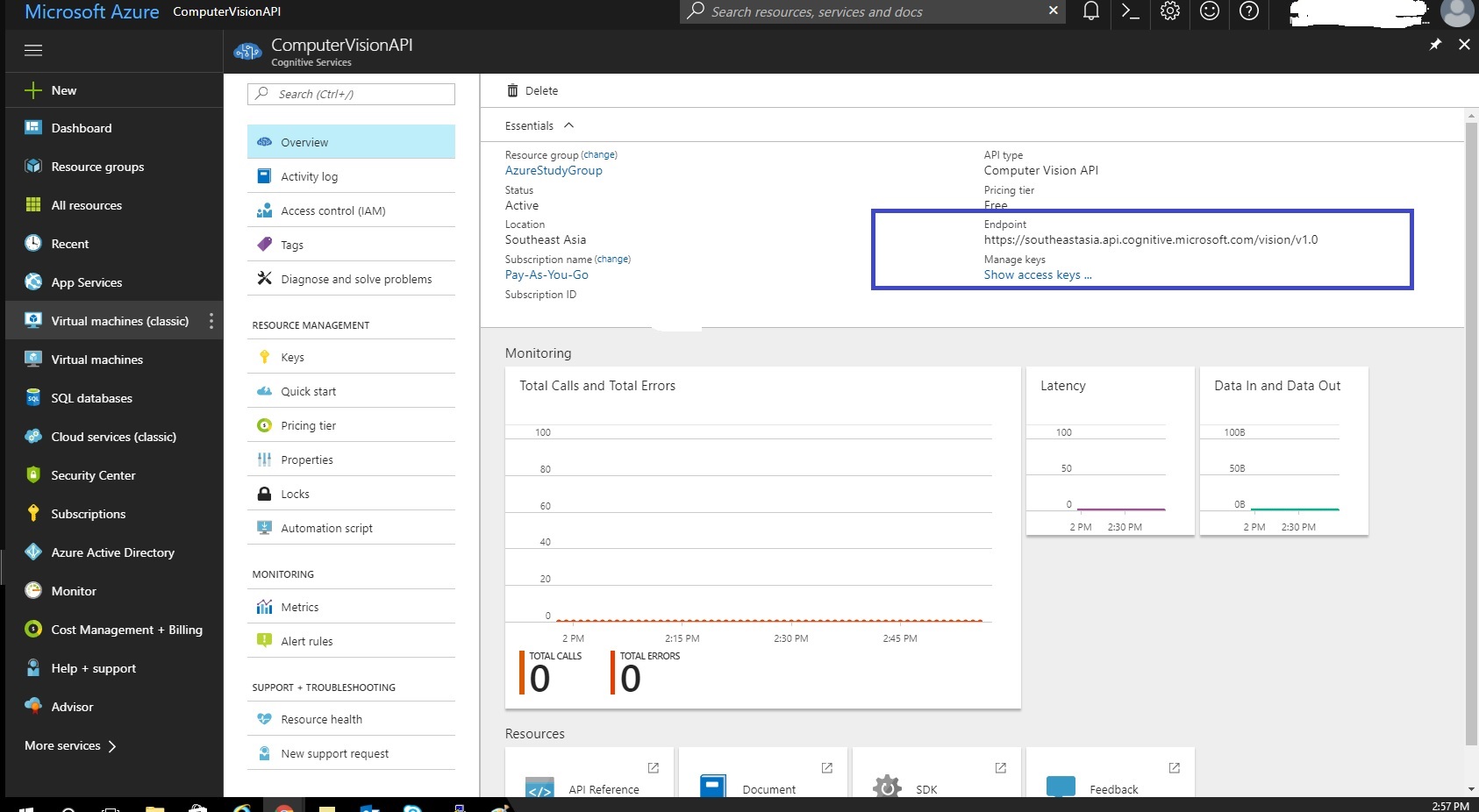

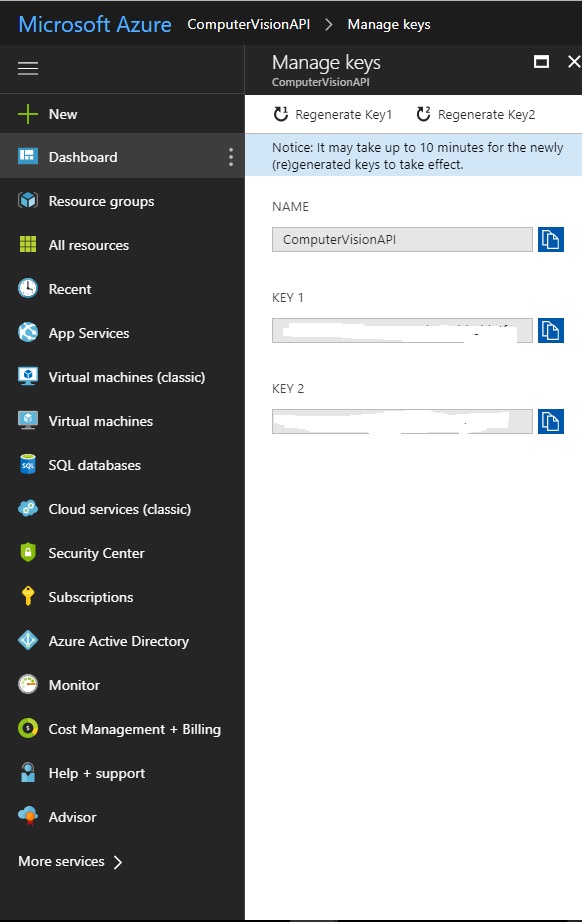

Once the API account is created, select the account from the dashboard to open the following window. The access keys and endpoint shown in this window are required to create a connection to the Computer Vision API.

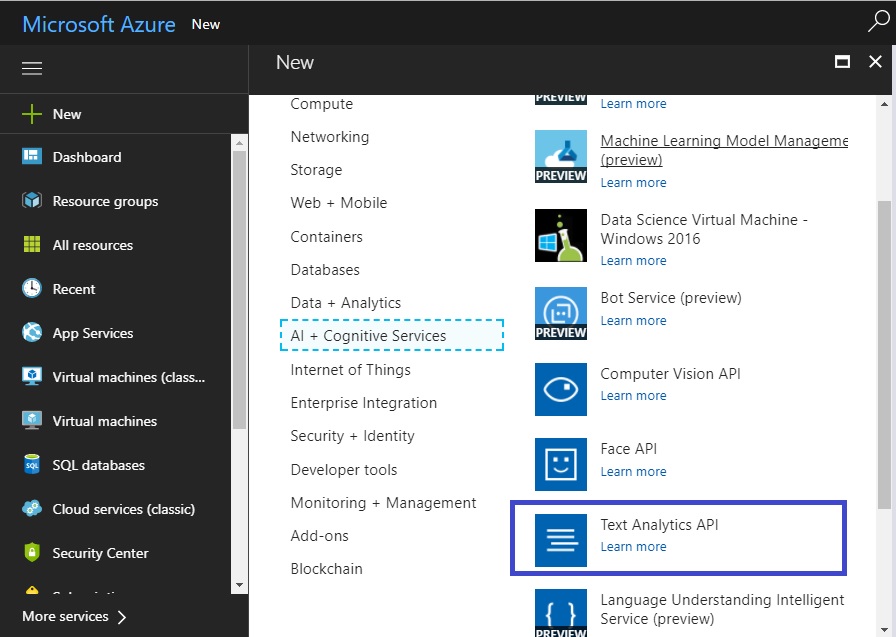

Text Analytics API Account

To use the Text Analytics API connectors in Logic Apps, an API account for the Text Analytics API must first be created. Once this is done, the connectors are available to integrate the Text Analytics API into Logic Apps. The following screenshot shows the process.

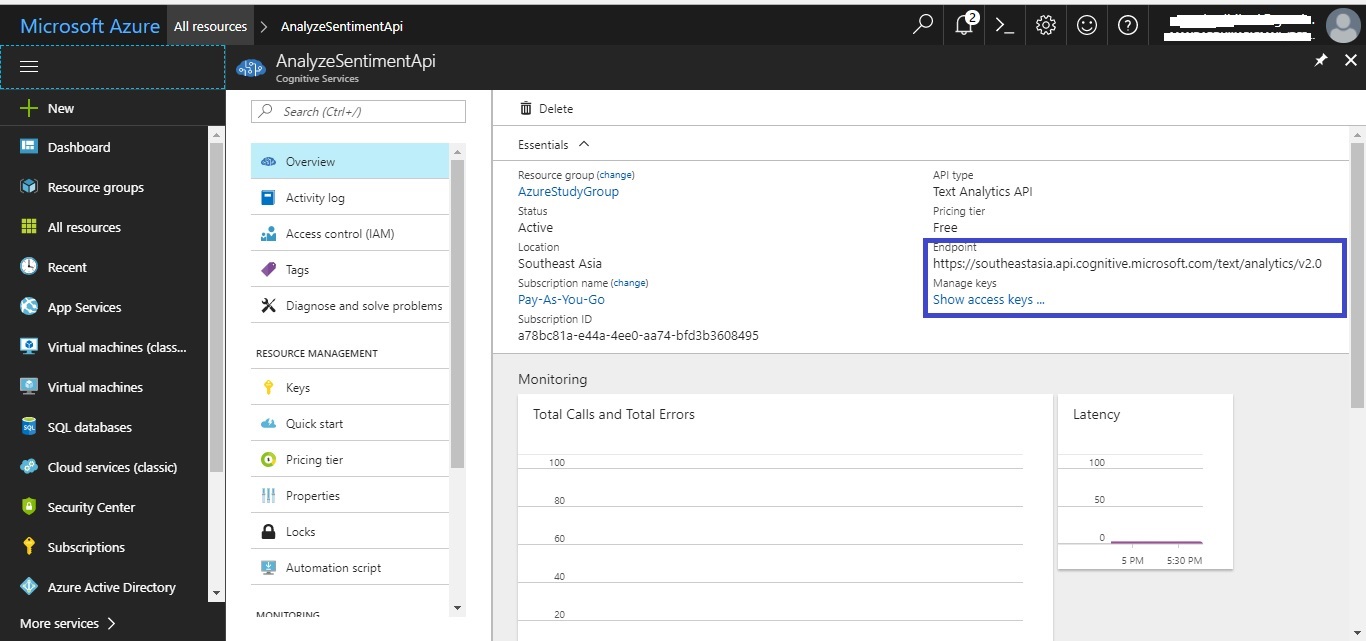

Once the API account is created, select the account from the dashboard to open its overview window. The access keys and endpoint shown in this window are required to create a connection to the Text Analytics API.

Logic App Design

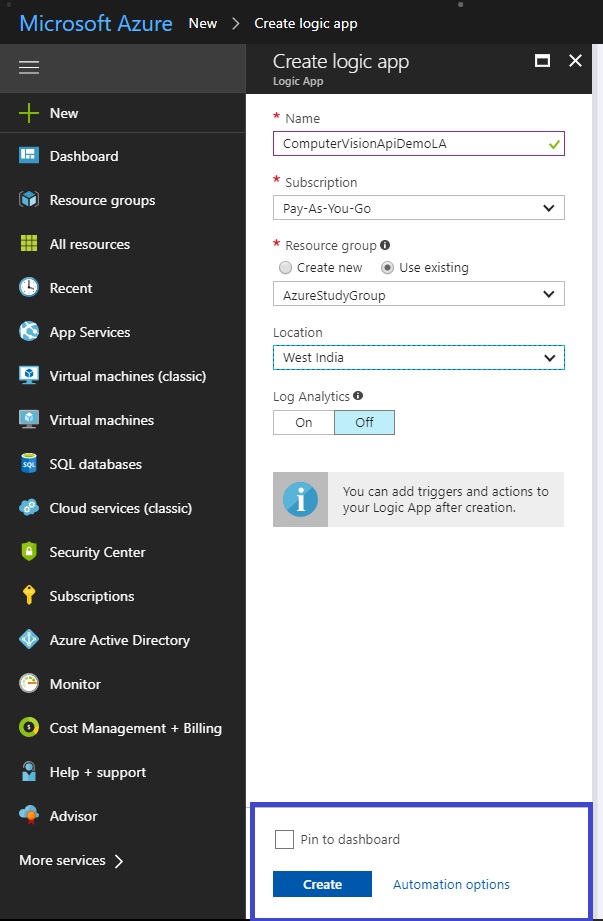

Create a Logic App Named ComputerVisionApiDemoLA

Select the details such as Subscription, Resource group, and Location, and then click the Create button on the blade. Refer to the following screenshot for a sample.

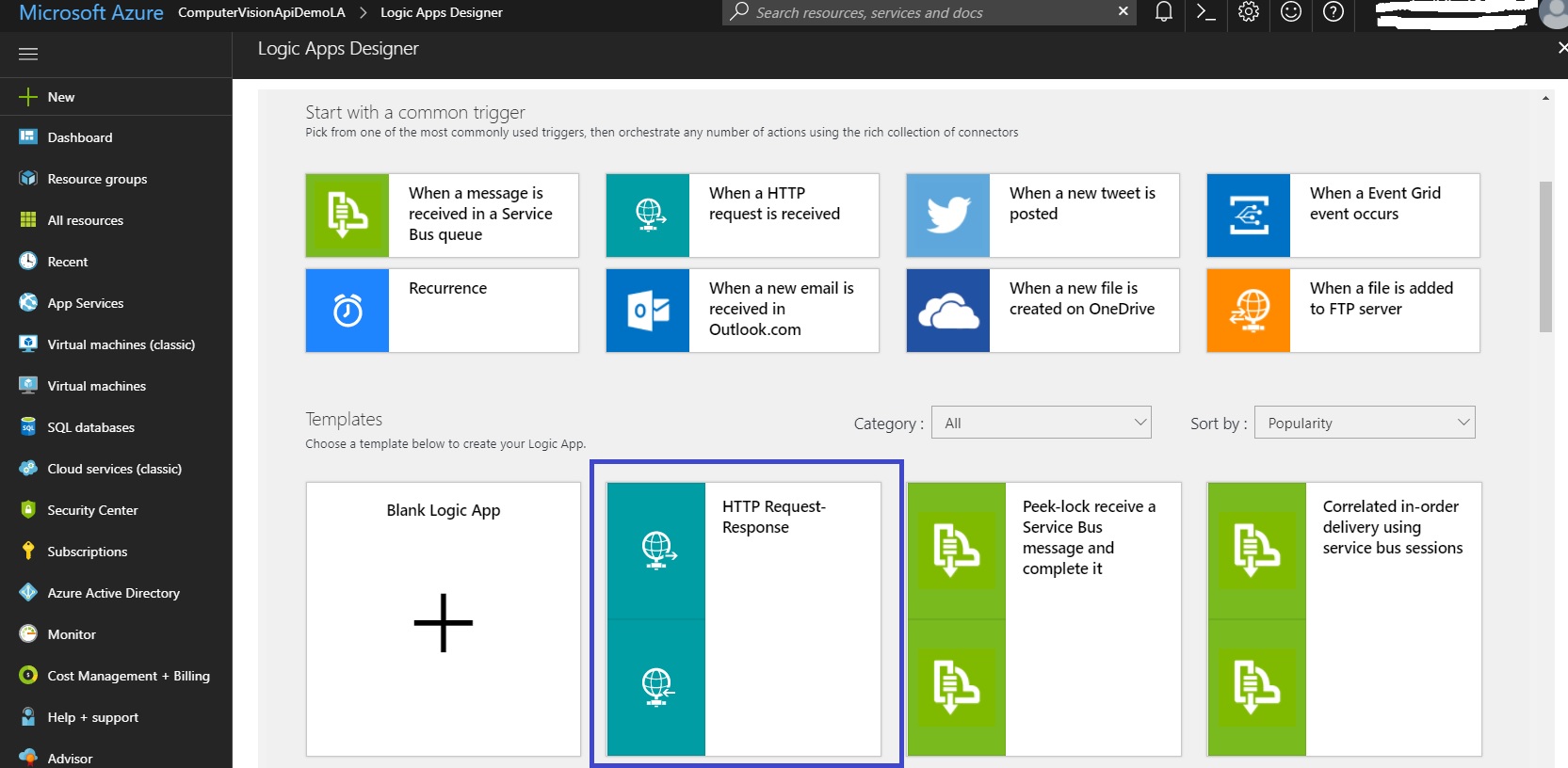

Select the HTTP Request-Response template, as shown in the screenshot below.

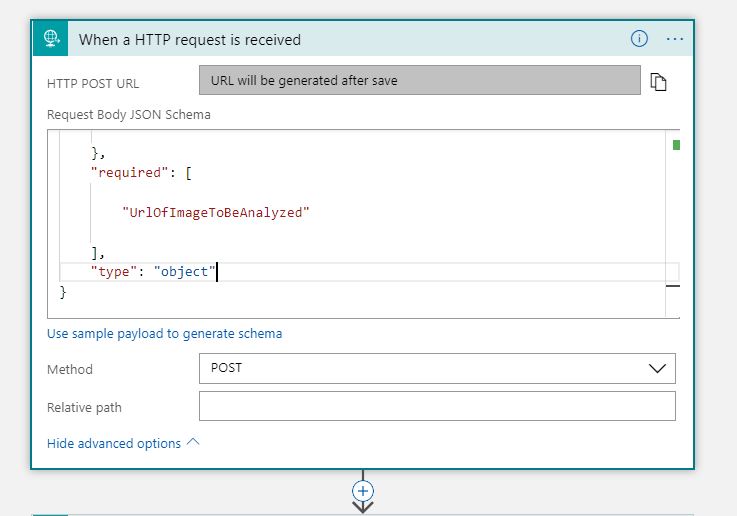

Use the following JSON schema to describe the request payload of the Logic App.

{
  "$schema": "http://json-schema.org/draft-04/schema#",
  "definitions": {},
  "id": "http://computervisionapidemo",
  "properties": {
    "UrlOfImageToBeAnalyzed": {
      "type": "string"
    }
  },
  "required": [
    "UrlOfImageToBeAnalyzed"
  ],
  "type": "object"
}

Paste the above schema into the Request Body JSON Schema field, as shown below.

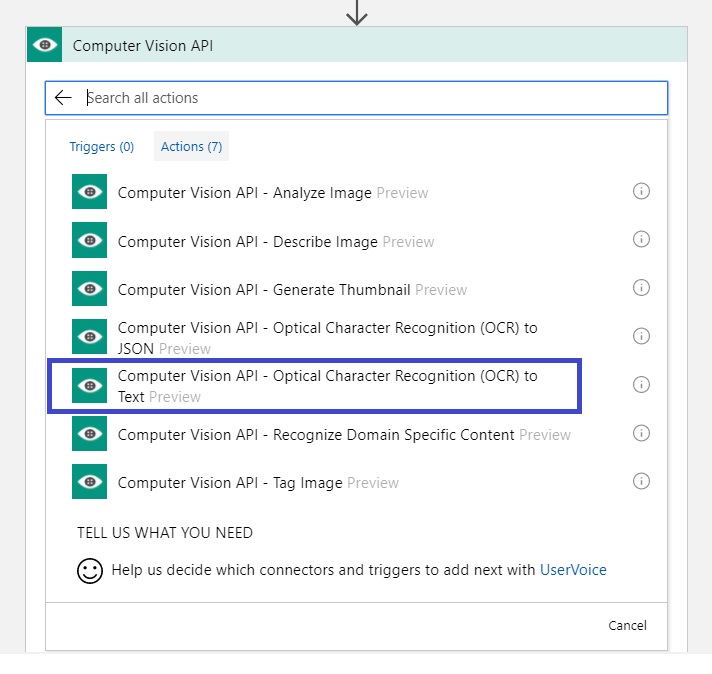
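The schema requires a single string property, `UrlOfImageToBeAnalyzed`. As a rough caller-side illustration of what it demands, the sketch below checks a payload by hand before it is sent. The helper name `validate_request` is hypothetical, and the check covers only the rules visible in the schema above; it is not a general JSON Schema validator, and whether the trigger itself rejects non-conforming payloads depends on how it is configured.

```python
import json
from typing import Any, List

REQUIRED_FIELD = "UrlOfImageToBeAnalyzed"


def validate_request(body: str) -> List[str]:
    """Return a list of problems; an empty list means the payload is acceptable."""
    try:
        payload: Any = json.loads(body)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(payload, dict):
        # "type": "object" in the schema
        return ["payload must be a JSON object"]
    if REQUIRED_FIELD not in payload:
        # "required": ["UrlOfImageToBeAnalyzed"]
        return [f"missing required property {REQUIRED_FIELD}"]
    if not isinstance(payload[REQUIRED_FIELD], str):
        # "type": "string" on the property
        return [f"{REQUIRED_FIELD} must be a string"]
    return []


print(validate_request('{"UrlOfImageToBeAnalyzed": "https://example.com/a.png"}'))
print(validate_request('{"Url": "https://example.com/a.png"}'))
```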

Click the "+" sign shown in the image above, select Add action, and then select Optical Character Recognition To Text from the list of available actions. Refer to the sample screenshot below.

Now a connection to the Computer Vision API account created earlier needs to be made. The URL and the keys noted during the account creation steps are required for this. Refer to the sample screenshot below.

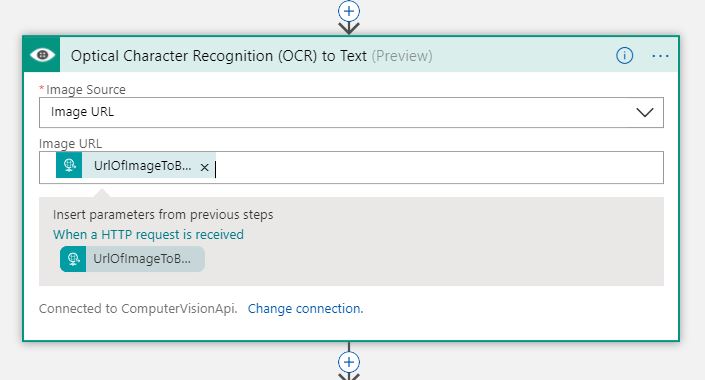

Once the connection has been configured, the Logic App Designer allows the details to be sent to the Computer Vision API to be specified. Currently the connector accepts either the image URL or the image data. This sample passes the URL, exactly as it arrives in the request, as the input to the connector. The "use from previous steps" option is used to access the URL from the request. Refer to the sample screenshot below.

Once the image is sent to the Computer Vision API, it will detect the text written in the image. This text is then analyzed using the Text Analytics API.
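For readers curious about what such a call looks like outside the designer, the sketch below builds a comparable REST request and flattens an OCR result into a single string. It rests on several assumptions that are not stated in this article: the v1.0 OCR operation path (`/vision/v1.0/ocr`), the `Ocp-Apim-Subscription-Key` header, and a response organized as regions, lines, and words. The endpoint, key, and sample data are placeholders, and the function names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict


def build_ocr_request(endpoint: str, key: str, image_url: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for the assumed OCR operation."""
    return urllib.request.Request(
        url=f"{endpoint.rstrip('/')}/vision/v1.0/ocr?language=unk&detectOrientation=true",
        data=json.dumps({"url": image_url}).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Ocp-Apim-Subscription-Key": key,  # access key from the API account
        },
        method="POST",
    )


def ocr_result_to_text(result: Dict[str, Any]) -> str:
    """Join the words of each line, then all lines, into one string."""
    lines = []
    for region in result.get("regions", []):
        for line in region.get("lines", []):
            lines.append(" ".join(word["text"] for word in line.get("words", [])))
    return " ".join(lines)


# Hand-written sample in the assumed response shape (not real service output).
sample = {
    "regions": [
        {"lines": [{"words": [{"text": "Worst"}, {"text": "experience"}, {"text": "ever!"}]}]}
    ]
}
print(ocr_result_to_text(sample))
```

The flattening step matters because the later Text Analytics actions expect one plain string rather than a nested structure.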

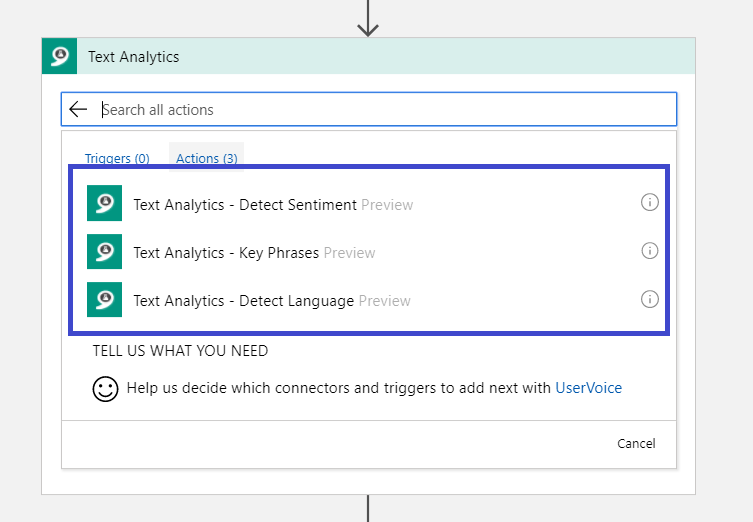

From the actions available for Text Analytics, the following are used, as shown in the screenshot below.

Now a connection to the Text Analytics API account created earlier needs to be made. The URL and the keys noted during the account creation steps are required for this. Refer to the sample screenshot below.

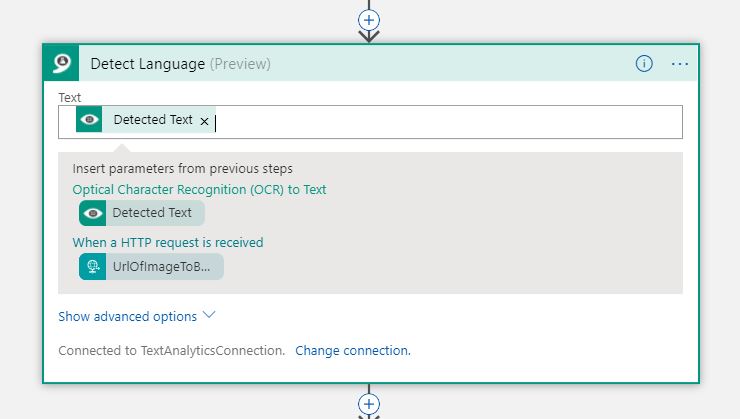

Select the Detect Language action shape and provide the Detected Text from the OCR to Text connector as input to the connector. Refer to the screenshot below.

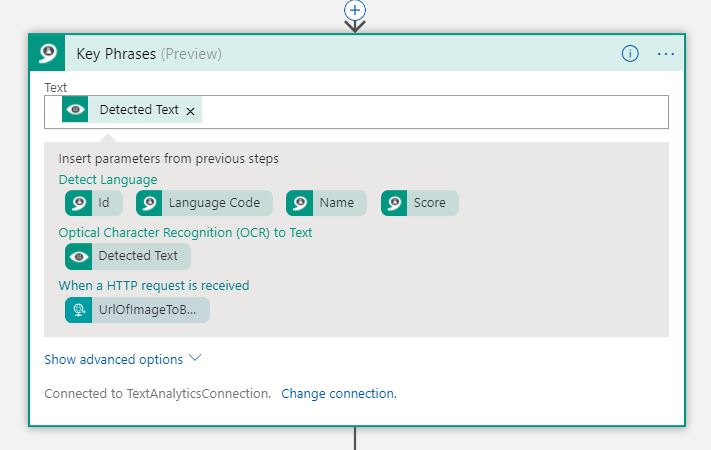

Select the Key Phrases action shape and then provide the Detected Text from the OCR to Text connector as input to the connector. Refer to the screenshot below.

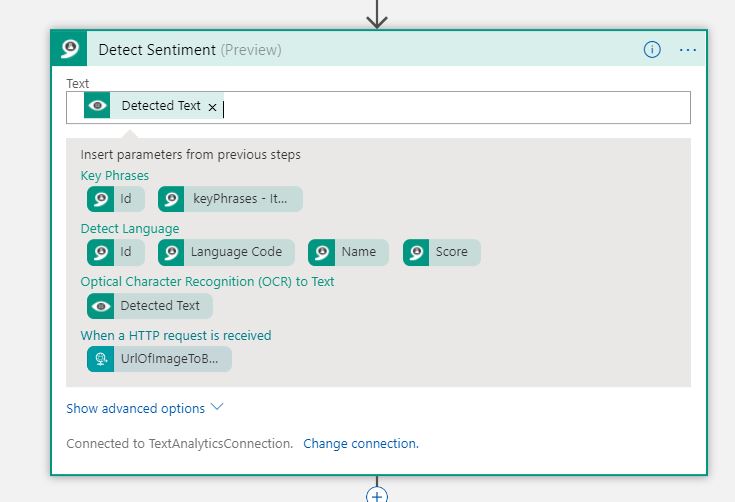

Select the Detect Sentiment action shape and then provide the Detected Text from the OCR to Text connector as input to the connector. Refer to the screenshot below.

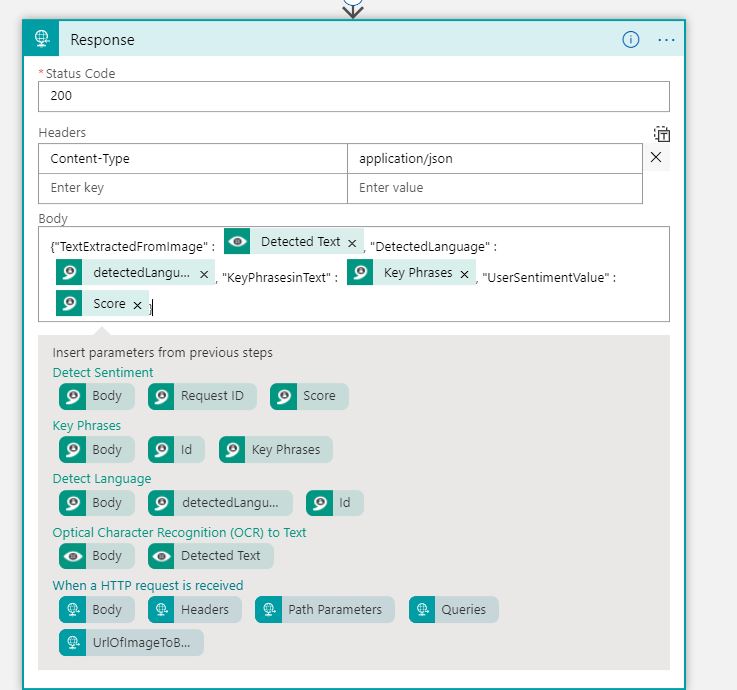
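All three Text Analytics actions receive the same detected text. As a hedged illustration of the underlying requests, the sketch below wraps the text in a `documents` envelope. The v2.0 operation paths and the optional `language` field are assumptions based on the shape of the responses shown later in this article; the helper name `build_documents_body` is hypothetical, and the connector may build its requests differently.

```python
import json
from typing import Dict, Optional

# Assumed v2.0 operation paths, relative to the account endpoint.
OPERATIONS = {
    "language": "/text/analytics/v2.0/languages",
    "key_phrases": "/text/analytics/v2.0/keyPhrases",
    "sentiment": "/text/analytics/v2.0/sentiment",
}


def build_documents_body(text: str, language: Optional[str] = None, doc_id: str = "1") -> str:
    """Wrap one piece of text in a 'documents' envelope."""
    document: Dict[str, str] = {"id": doc_id, "text": text}
    if language:
        # Key phrase and sentiment requests can carry the language code,
        # for example the one returned by the language detection call.
        document["language"] = language
    return json.dumps({"documents": [document]})


detected_text = "I had a great experience!"
bodies = {
    "language": build_documents_body(detected_text),
    "key_phrases": build_documents_body(detected_text, language="en"),
    "sentiment": build_documents_body(detected_text, language="en"),
}
for name, path in OPERATIONS.items():
    print(path, bodies[name])
```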

Configure the response action connector as shown below.

The final flow of the Logic App is shown below.

Save the Logic App and copy the URL generated by the Request trigger. This URL is used for testing the Logic App.

Testing the Logic App

The following images are used for testing the Logic App.

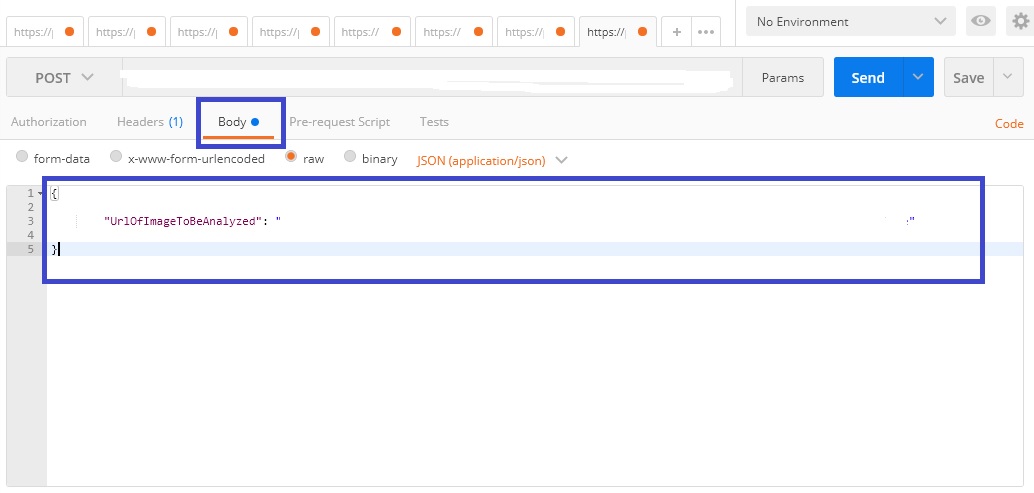

The JSON request payload used for testing the logic app is shown below.

{

"UrlOfImageToBeAnalyzed": "Url here"

}

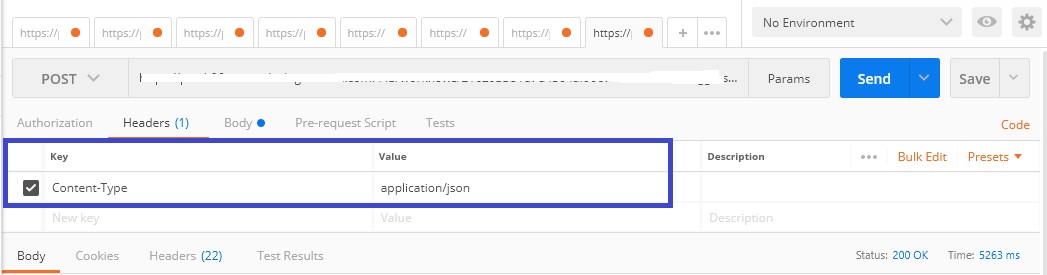

The testing is done using the Postman app available in the Chrome app store; other tools such as SoapUI can also be used. The Postman configuration is shown below.

The responses received from the Logic App for images 1 and 2, respectively, are shown below.
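As a scripted alternative to a GUI client, the same test request could be sent from Python's standard library. This is a minimal sketch: the Logic App URL and image URL are placeholders, the function names are hypothetical, and the network call is left commented out so the snippet runs without a deployed Logic App.

```python
import json
import urllib.request


def build_test_request(logic_app_url: str, image_url: str) -> urllib.request.Request:
    """Build a POST request carrying the JSON test payload."""
    body = json.dumps({"UrlOfImageToBeAnalyzed": image_url}).encode("utf-8")
    return urllib.request.Request(
        logic_app_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_logic_app(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (performs a network call)."""
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read().decode("utf-8"))


# Placeholder values: use the URL copied from the Request trigger instead.
request = build_test_request(
    "https://example.invalid/logic-app-url",
    "https://example.com/image1.png",
)
print(request.get_method(), request.data.decode("utf-8"))
# result = call_logic_app(request)  # uncomment once real URLs are in place
```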

Image 1:

{
  "DetectedLanguage": [ { "name": "English", "iso6391Name": "en", "score": 1 } ],
  "KeyPhrasesinText": [ "great experience" ],
  "TextExtractedFromImage": "I had a great experience!",
  "UserSentimentValue": 0.99142962694168091
}

Image 2:

{
  "DetectedLanguage": [ { "name": "English", "iso6391Name": "en", "score": 1 } ],
  "KeyPhrasesinText": [ "Worst experience" ],
  "TextExtractedFromImage": "Worst experience ever!",
  "UserSentimentValue": 0.0035259723663330078
}

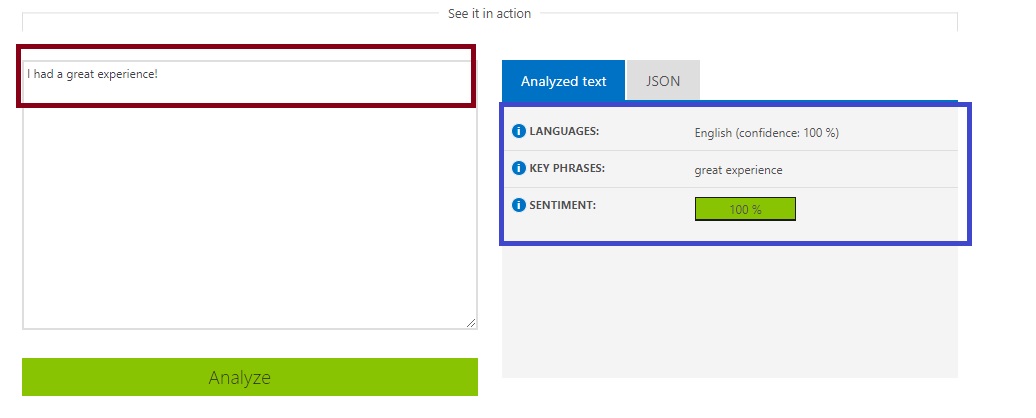

The actual results of the text analysis for the text detected from the above test images, when run on the Microsoft demo page (for details, see References), are shown below.

Image 1

The JSON response payload for the result is as follows.

{
  "languageDetection": { "documents": [ { "id": "bd7f8ad1-03d9-41a6-908a-defe53ce98de", "detectedLanguages": [ { "name": "English", "iso6391Name": "en", "score": 1.0 } ] } ], "errors": [] },
  "keyPhrases": { "documents": [ { "id": "bd7f8ad1-03d9-41a6-908a-defe53ce98de", "keyPhrases": [ "great experience" ] } ], "errors": [] },
  "sentiment": { "documents": [ { "id": "bd7f8ad1-03d9-41a6-908a-defe53ce98de", "score": 0.99142962694168091 } ], "errors": [] }
}

The visual result of the analysis is shown below.

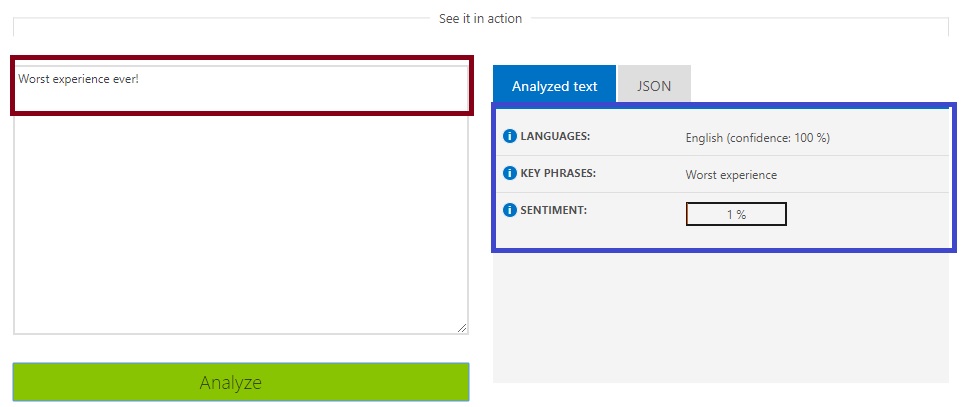

Image 2

The JSON response payload for the result is as follows.

{
  "languageDetection": { "documents": [ { "id": "1a702600-6196-406b-aec7-969acdfe93dd", "detectedLanguages": [ { "name": "English", "iso6391Name": "en", "score": 1.0 } ] } ], "errors": [] },
  "keyPhrases": { "documents": [ { "id": "1a702600-6196-406b-aec7-969acdfe93dd", "keyPhrases": [ "Worst experience" ] } ], "errors": [] },
  "sentiment": { "documents": [ { "id": "1a702600-6196-406b-aec7-969acdfe93dd", "score": 0.0035259723663330078 } ], "errors": [] }
}

The visual result of the analysis is shown below.

As both tests show, the Logic App integration and the Microsoft demo tool return the same results.
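The comparison can also be made mechanically. The sketch below reduces the demo page response for Image 1 to the fields the Logic App returns and checks them for equality. The values are copied from the responses above with the document ids omitted; the helper name `flatten_demo_response` is hypothetical.

```python
import json
from typing import Any, Dict


def flatten_demo_response(demo: Dict[str, Any]) -> Dict[str, Any]:
    """Reduce the demo page response to the fields the Logic App returns."""
    return {
        "DetectedLanguage": demo["languageDetection"]["documents"][0]["detectedLanguages"],
        "KeyPhrasesinText": demo["keyPhrases"]["documents"][0]["keyPhrases"],
        "UserSentimentValue": demo["sentiment"]["documents"][0]["score"],
    }


# Image 1 values copied from the two responses above (document ids omitted).
logic_app = json.loads("""
{ "DetectedLanguage": [ { "name": "English", "iso6391Name": "en", "score": 1 } ],
  "KeyPhrasesinText": [ "great experience" ],
  "TextExtractedFromImage": "I had a great experience!",
  "UserSentimentValue": 0.99142962694168091 }
""")
demo = json.loads("""
{ "languageDetection": { "documents": [ { "detectedLanguages":
      [ { "name": "English", "iso6391Name": "en", "score": 1.0 } ] } ], "errors": [] },
  "keyPhrases": { "documents": [ { "keyPhrases": [ "great experience" ] } ], "errors": [] },
  "sentiment": { "documents": [ { "score": 0.99142962694168091 } ], "errors": [] } }
""")

expected = flatten_demo_response(demo)
# Compare only the fields both responses share; the extracted text exists
# only in the Logic App response.
matches = all(logic_app[key] == value for key, value in expected.items())
print("results match:", matches)
```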

Conclusion

As is clear from the test results, Logic Apps can be used in conjunction with the Microsoft Cognitive Services Computer Vision API and Text Analytics API to detect the text in an image and analyze it.

Possible Application

This workflow can be used by a firm that analyzes user sentiment in the images and text messages posted on social media. The firm can collate the data, analyze what the general public thinks about the issue or product at hand, and then use this analysis to shape future strategies for generating positive sentiment among the public.