BizTalk Maps: Migrating to Azure Logic Apps - Shortcomings & Solutions (part 2)


1. Introduction

The first part in this series dealt with problems faced when trying to migrate a BizTalk map to a Logic App Integration Account and using that map in an XML Transform action. This second part will focus on those maps where the Logic App XML Transform action cannot be used for some reason.

The first seven sections in this second part address problems faced when trying to migrate a BizTalk map to a Logic App where an XML Transform action cannot be used, either because the map uses extension objects, custom functoids, etc., or because there is no Integration Account available. The key feature of each of these solutions is that it avoids, as far as possible, any changes to the existing BizTalk XSLT map. The final three sections focus on those transformations in BizTalk that are performed by some component other than the BizTalk mapper.

2. How to Migrate a BizTalk XSLT Map without a Logic App Integration Account (Method 1)

Problem: If there is no Logic App Integration Account subscription.

Solution: Write an Azure Function that utilizes the System.Xml.Xsl namespace to perform the transform.

The easiest way to use an XSLT map without a Logic App integration account is to write an Azure Function that makes use of the .NET System.Xml.Xsl namespace which provides support for Extensible Stylesheet Transformation (XSLT) transforms. Any XSLT map(s) can be uploaded to the function folder and when the function is run the XSLT maps can be loaded from the file system.

Create a new Azure Function (C# HTTP trigger). A function like the one below should be created by the template:

We now need to create a folder for the XSLT map. For this we need to use Kudu. The URL will be in the following form (replace tcdemos with the name of the Function App being used): https://tcdemos.scm.azurewebsites.net/DebugConsole/?shell=powershell

Log in to Kudu, select PowerShell from the Debug Console, navigate to the Azure Function folder, and type mkdir Maps at the prompt to create a new sub-folder, e.g.:

The XSLT map file(s) can now be uploaded to this new sub-folder.

The run.csx code should be replaced with the following (note that TransformXML in the file path should be replaced with the name of the Azure Function):

using System.Net;

using System.IO;

using System.Xml;

using System.Xml.Xsl;

public static async Task<HttpResponseMessage> Run(HttpRequestMessage req, TraceWriter log)

{

log.Info("C# HTTP trigger function processed a request.");

var input = await req.Content.ReadAsStringAsync();

string mapName = req.Headers.GetValues("MapName").First();

string output = string.Empty;

XslCompiledTransform transform = new XslCompiledTransform();

XsltSettings settings = new XsltSettings(true, true);

XmlUrlResolver resolver = new XmlUrlResolver();

XsltArgumentList argumentList = new XsltArgumentList();

using (StringReader stringReader = new StringReader(input))

{

using (XmlReader xmlReader = XmlReader.Create(stringReader))

{

using (StringWriter stringWriter = new StringWriter())

{

// Load the map from the Maps sub-folder created earlier

transform.Load(@"D:\home\site\wwwroot\TransformXML\Maps\" + mapName + ".xslt", settings, resolver);

transform.Transform(xmlReader, argumentList, stringWriter);

output = stringWriter.ToString();

}

}

}

return req.CreateResponse(HttpStatusCode.OK, output);

}

In the Logic App configure an Azure Function action to call the new function. Specify the MapName value in the Headers, e.g.:

Pros: Relatively simple to implement. The BizTalk XSLT map requires no changes.

Cons: BizTalk XSLT maps need to be stored within the function. Need to construct the envelope message for multiple source messages.

3. How to Migrate an XSLT Map without a Logic App Integration Account (Method 2)

Problem: If there is no Logic App Integration Account subscription.

Solution: Upload the XSLT files to an Azure storage account and write an Azure Function that uses the Microsoft.WindowsAzure.Storage namespace to load the XSLT file and the System.Xml.Xsl namespace to perform the transform.

This second method uses an Azure storage account to hold the XSLT files. The main difference between this method and the previous one is that the Azure storage API is used to retrieve the XSLT files from the Azure storage file share, rather than storing the files locally with the function.

First create an Azure Storage account. Next select File service and then add a new File share named “maps”. Finally upload the XSLT maps to this File share, e.g.:

From the Storage account SETTINGS select Access Keys and copy one of the CONNECTION STRINGS:

Create a new Azure Function (C# HTTP trigger) and paste in the following code. Replace the connection string passed to CloudStorageAccount.Parse with the connection string value copied in the previous step:

#r "Microsoft.WindowsAzure.Storage"

using System.Net;

using System.IO;

using System.Xml;

using System.Xml.Xsl;

using System.Xml.XPath;

using Microsoft.Azure;

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.File;

public static async Task<HttpResponseMessage> Run(HttpRequestMessage req, TraceWriter log)

{

log.Info("C# HTTP trigger function processed a request.");

var input = await req.Content.ReadAsStringAsync();

string mapName = req.Headers.GetValues("MapName").First();

string output = string.Empty;

// Get the XSLT file from the Azure file storage account

CloudStorageAccount storageAccount = CloudStorageAccount.Parse("DefaultEndpointsProtocol=https;AccountName= … ;AccountKey= … ;EndpointSuffix=core.windows.net");

CloudFileClient fileClient = storageAccount.CreateCloudFileClient();

CloudFileShare fileShare = fileClient.GetShareReference("maps");

CloudFileDirectory fileDirectory = fileShare.GetRootDirectoryReference();

CloudFile file = fileDirectory.GetFileReference(mapName);

// Load the XSLT map

XPathDocument xdocXSL = new XPathDocument(file.OpenRead(null, null, null));

XsltSettings settings = new XsltSettings(true, true);

XslCompiledTransform transform = new XslCompiledTransform();

XsltArgumentList argumentList = new XsltArgumentList();

XmlUrlResolver resolver = new XmlUrlResolver();

// Transform XML

using (StringReader stringReader = new StringReader(input))

{

using (XmlReader xmlReader = XmlReader.Create(stringReader))

{

using (StringWriter stringWriter = new StringWriter())

{

transform.Load((IXPathNavigable)xdocXSL, settings, resolver);

transform.Transform(xmlReader, argumentList, stringWriter);

output = stringWriter.ToString();

}

}

}

return req.CreateResponse(HttpStatusCode.OK, output);

}

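Add an Azure Function action to the Logic App to call the new function. Remember to specify the MapName value in the Headers; because this code does not append a file extension, the value must match the name of the file in the share exactly.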

Pros: Relatively simple to implement. The BizTalk XSLT map requires no changes.

Cons: Need to construct the envelope message for multiple source messages.

4. How to Use an XSLT Map with xsl:import and xsl:include (Method 2)

A solution to this problem was offered in part 1. It uses the Logic App XML Transform action, but only once the issue with the length of the imported XSLT’s URI has been resolved. Here follow two alternative solutions using an Azure Function. Each solution extends one of the previous two sections:

a. Write an Azure Function and upload the File to import

Follow the steps in section 2: create an Azure Function and upload the XSLT maps. Because the map is read from the file system, a relative path can be used in the xsl:import elements to import an XSLT file. The key part of the C# code is the XsltSettings constructor call:

XsltSettings settings = new XsltSettings(true, true);

The constructor parameters are defined as:

enableDocumentFunction

Type: System.Boolean

true to enable support for the XSLT document() function; otherwise, false.

enableScript

Type: System.Boolean

true to enable support for embedded script blocks; otherwise, false.

Security Note: XSLT scripting should be enabled only if you require script support and you are working in a fully trusted environment. If you enable the document() function, you can restrict the resources that can be accessed by passing an XmlSecureResolver object to the Transform method.

For more information see: https://msdn.microsoft.com/en-us/library/ms163499(v=vs.110).aspx (also see section 17).
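The security note above can be illustrated with a short sketch. It assumes the .NET Framework runtime used by the functions in this article (newer .NET versions mark XmlSecureResolver as obsolete). The class name RestrictedTransform and the allowedBaseUrl parameter are hypothetical and only serve the illustration. The sketch enables document() but not scripting, and hands Transform a resolver that is limited to one base URL:

```csharp
using System.IO;
using System.Xml;
using System.Xml.Xsl;

public static class RestrictedTransform
{
    // allowedBaseUrl is a hypothetical value, e.g. the URL of the folder that holds the maps.
    public static string Run(string xslt, string inputXml, string allowedBaseUrl)
    {
        var transform = new XslCompiledTransform();

        // Enable document() but leave embedded scripts disabled.
        var settings = new XsltSettings(true, false);

        using (var xsltReader = XmlReader.Create(new StringReader(xslt)))
        {
            // This resolver is only used for xsl:import / xsl:include at load time.
            transform.Load(xsltReader, settings, new XmlUrlResolver());
        }

        // This resolver is used for document() calls at run time;
        // wrapping it restricts access to resources under allowedBaseUrl.
        var documentResolver = new XmlSecureResolver(new XmlUrlResolver(), allowedBaseUrl);

        using (var inputReader = XmlReader.Create(new StringReader(inputXml)))
        using (var stringWriter = new StringWriter())
        using (var xmlWriter = XmlWriter.Create(stringWriter, transform.OutputSettings))
        {
            transform.Transform(inputReader, new XsltArgumentList(), xmlWriter, documentResolver);
            xmlWriter.Flush();
            return stringWriter.ToString();
        }
    }
}
```

In the functions shown in this article the same idea would mean replacing the three-argument Transform call with the four-argument overload that accepts a resolver.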

b. Write an Azure Function With A Custom Xml Resolver

It is possible to extend the solution in section 3 to use a custom resolver. By passing the CloudFileDirectory object to the constructor and overriding the GetEntity method of XmlResolver, the imported XSLT can be loaded and returned as a stream. Note that this code assumes the xsl:import file resides in the same folder as the XSLT map loaded by the XslCompiledTransform.Load method.

#r "Microsoft.WindowsAzure.Storage"

using System.Net;

using System.IO;

using System.Xml;

using System.Xml.Xsl;

using System.Xml.XPath;

using Microsoft.Azure;

using Microsoft.WindowsAzure.Storage;

using Microsoft.WindowsAzure.Storage.File;

public static async Task<HttpResponseMessage> Run(HttpRequestMessage req, TraceWriter log)

{

log.Info("C# HTTP trigger function processed a request.");

var input = await req.Content.ReadAsStringAsync();

string mapName = req.Headers.GetValues("MapName").First();

string output = string.Empty;

// Get the XSLT file from the Azure file storage account

CloudStorageAccount storageAccount = CloudStorageAccount.Parse("DefaultEndpointsProtocol=https;AccountName= … ;AccountKey= … ;EndpointSuffix=core.windows.net");

CloudFileClient fileClient = storageAccount.CreateCloudFileClient();

CloudFileShare fileShare = fileClient.GetShareReference("maps");

CloudFileDirectory fileDirectory = fileShare.GetRootDirectoryReference();

CloudFile file = fileDirectory.GetFileReference(mapName);

// Load the XSLT map

XPathDocument xdocXSL = new XPathDocument(file.OpenRead(null, null, null));

XsltSettings settings = new XsltSettings(true, true);

XslCompiledTransform transform = new XslCompiledTransform();

XsltArgumentList argumentList = new XsltArgumentList();

// Transform XML

using (StringReader stringReader = new StringReader(input))

{

using (XmlReader xmlReader = XmlReader.Create(stringReader))

{

using (StringWriter stringWriter = new StringWriter())

{

transform.Load((IXPathNavigable)xdocXSL, settings, new CustomXmlResolver(fileDirectory));

transform.Transform(xmlReader, argumentList, stringWriter);

output = stringWriter.ToString();

}

}

}

return req.CreateResponse(HttpStatusCode.OK, output);

}

// Custom XmlResolver class to load xsl:import files from Azure file storage

private class CustomXmlResolver : XmlResolver

{

private CloudFileDirectory _fileDirectory;

public CustomXmlResolver(CloudFileDirectory fileDirectory) { _fileDirectory = fileDirectory;}

public override ICredentials Credentials

{

set { }

}

public override object GetEntity(Uri absoluteUri, string role, Type ofObjectToReturn)

{

MemoryStream entityStream = null;

switch (absoluteUri.Scheme)

{

case "custom-scheme":

string fileName = absoluteUri.Segments[absoluteUri.Segments.Count() - 1];

CloudFile file = _fileDirectory.GetFileReference(fileName);

return file.OpenRead(null, null, null);

break;

}

return null;

}

public override Uri ResolveUri(Uri baseUri, string relativeUri)

{

if (baseUri != null)

{

return base.ResolveUri(baseUri, relativeUri);

}

else

{

return new Uri("custom-scheme:" + relativeUri);

}

}

}

Note: Remember to pass the MapName in the Headers.

Pros: The BizTalk XSLT map requires no changes. No need to implement a URI shortening function to resolve the XSLT import file.

Cons: Requires a good knowledge of the XSL and WindowsAzure.Storage namespaces.

5. How to Return Non-XML Output from an XSLT Map

It is possible for an XSLT map to generate non-XML output. An example of this is given below. This XSLT converts the Contacts XML input to a CSV output:

<?xml version="1.0" encoding="UTF-16"?>

<xsl:stylesheet xmlns:xsl="http://www.w3.org/1999/XSL/Transform" xmlns:msxsl="urn:schemas-microsoft-com:xslt" xmlns:var="http://schemas.microsoft.com/BizTalk/2003/var" exclude-result-prefixes="msxsl var s0" version="1.0" xmlns:s0="http://AzureBizTalkMapsDemo/Contact" xmlns:ns0="http://schemas.microsoft.com/BizTalk/2003/Any">

<xsl:output media-type="text/csv" method="text" />

<xsl:template match="/">

<xsl:apply-templates select="/s0:Contacts" />

</xsl:template>

<xsl:template match="/s0:Contacts">

<xsl:for-each select="s0:Contact">

<xsl:value-of select ="concat(Title,',',Forename,',',Surname,',',Street,',',City,',',State,',',ZipCode)" />

<xsl:text>&#10;</xsl:text>

</xsl:for-each>

</xsl:template>

</xsl:stylesheet>

Unfortunately, if you try to use the Logic App Transform XML action with an XSLT map that produces an output other than XML, it will fail with one of these errors:

“InternalServerError. The 'Xslt' action failed with error code 'InternalServerError'.” or “An error occurred while processing the map. 'Token Text in state Document would result in invalid XML document.'”

The solution is to use an Azure Function (a C# HTTP trigger function) to perform the transform. The code to perform the transformation is given below; notice that a MapURI header is expected:

using System.Net;

using System.IO;

using System.Text;

using System.Xml;

using System.Xml.Xsl;

using System.Xml.XPath;

public static async Task<HttpResponseMessage> Run(HttpRequestMessage req, TraceWriter log)

{

log.Info("Processed a request.");

string mapURI = req.Headers.GetValues("MapURI").First();

// Get XML input to be transformed

var input = await req.Content.ReadAsStreamAsync();

XPathDocument xdocXML = new XPathDocument(input);

// Load XSL and get media-type

XPathDocument xdocXSL = new XPathDocument(mapURI);

XPathNavigator navigator = xdocXSL.CreateNavigator();

XmlNamespaceManager namespaces = new XmlNamespaceManager(navigator.NameTable);

namespaces.AddNamespace("xsl", "http://www.w3.org/1999/XSL/Transform");

string mediaType = navigator.SelectSingleNode("/*/xsl:output/@media-type", namespaces).Value;

// Transform XML

XslCompiledTransform transform = new XslCompiledTransform();

XsltSettings settings = new XsltSettings(true,true);

XmlUrlResolver resolver = new XmlUrlResolver();

XsltArgumentList arguments = new XsltArgumentList();

transform.Load((IXPathNavigable)xdocXSL, settings, resolver);

MemoryStream stream = new MemoryStream();

transform.Transform((IXPathNavigable)xdocXML, arguments, stream);

stream.Position = 0;

var streamReader = new StreamReader(stream);

//Create the response with appropriate media type

var res = req.CreateResponse(HttpStatusCode.OK);

res.Content = new StringContent(streamReader.ReadToEnd(), Encoding.UTF8, mediaType);

return res;

}

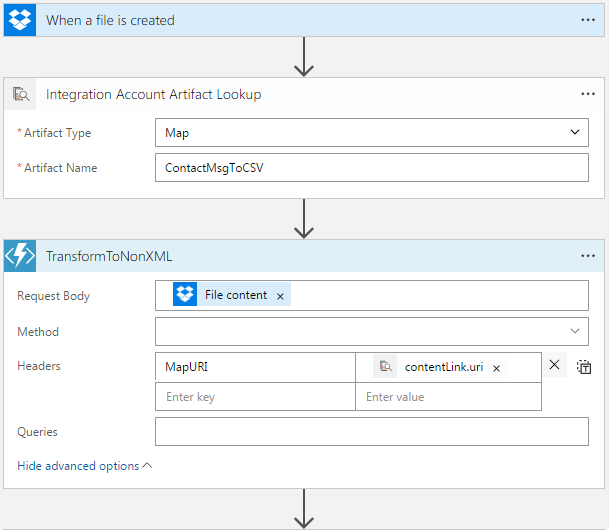

The MapURI value can be looked up in the Logic App by using an Integration Account Artifact Lookup action. The output will contain a contentLink.uri which can be set in the header of the Azure Function. The Logic App call to the Azure Function will look similar to this:

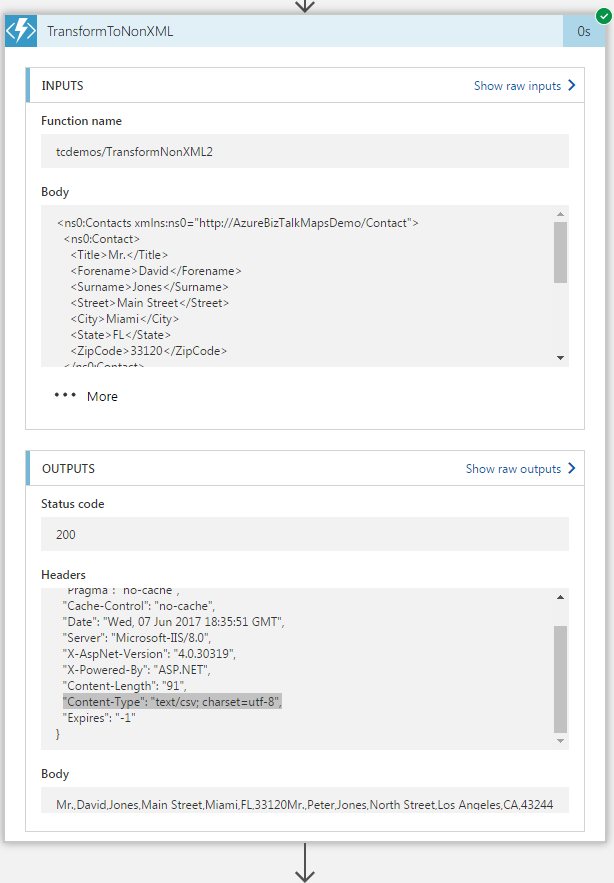

When the Logic App is run and the output from the Azure Function examined the CSV body can be seen as well as the Content-Type header set to: text/csv; charset=utf-8, e.g.:

Update: As of 2/2/2018 this limitation with the Logic Apps XML Transform action still exists.

Pros: The BizTalk XSLT map requires no changes.

Cons: Requires a good knowledge of the XSL and WindowsAzure.Storage namespaces.

6. How to use an XSLT Map that calls out to External Assemblies

Problem: If a BizTalk map uses a Scripting functoid to call an external assembly function.

Solution: Migrate the external assembly function(s) to a new Azure Function. Modify the Azure Function to perform the XSLT transform using the XslCompiledTransform and XsltArgumentList extension object classes.

The BizTalk mapper allows the developer to create a map which calls out to external assemblies using a Scripting functoid. If the XSLT is examined for the map something similar to that below will be seen:

<?xml version="1.0" encoding="UTF-16"?>

<xsl:stylesheet xmlns:xsl="http://www.w3.org/1999/XSL/Transform" xmlns:msxsl="urn:schemas-microsoft-com:xslt" xmlns:var="http://schemas.microsoft.com/BizTalk/2003/var" exclude-result-prefixes="msxsl var s0 userCSharp ScriptNS0" version="1.0" xmlns:ns0="http://AzureBizTalkMapsDemo/Contact" xmlns:s0="http://AzureBizTalkMapsDemo/Person" xmlns:userCSharp="http://schemas.microsoft.com/BizTalk/2003/userCSharp" xmlns:ScriptNS0="http://schemas.microsoft.com/BizTalk/2003/ScriptNS0">

<xsl:output omit-xml-declaration="yes" method="xml" version="1.0" />

<xsl:template match="/">

<xsl:apply-templates select="/s0:Person" />

</xsl:template>

<xsl:template match="/s0:Person">

<ns0:Contact>

<xsl:variable name="var:v1" select="Forename" />

<xsl:variable name="var:v2" select="ScriptNS0:ExtMethod(string($var:v1))" />

<ns0:Forename>

<xsl:value-of select="$var:v2" />

</ns0:Forename>

. . . . .

</ns0:Contact>

</xsl:template>

<msxsl:script language="C#" implements-prefix="userCSharp">

<![CDATA[]]></msxsl:script>

</xsl:stylesheet>

Notice the ScriptNS0 namespace declaration and the ScriptNS0:ExtMethod call: a script namespace is declared and then used to tell the XSLT processor to call out to an external function. In BizTalk a namespace is mapped to an actual assembly by configuring the map's Custom Extension XML property to reference an .xml file that contains a list of extension objects. In this case the .xml file looks like this:

<ExtensionObjects>

<ExtensionObject Namespace="http://schemas.microsoft.com/BizTalk/2003/ScriptNS0" AssemblyName="ExternalAssembly, Version=1.0.0.0, Culture=neutral, PublicKeyToken=bc1170f72823f8c9" ClassName="ExternalAssembly.ExtCall" />

</ExtensionObjects>

The external C# function is very simple; it just capitalises the first letter of the text passed:

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

namespace ExternalAssembly

{

[Serializable]

public class ExtCall

{

public static string ExtMethod(string text)

{

if (text == null)

return null;

if (text.Length > 1)

return char.ToUpper(text[0]) + text.Substring(1);

return text.ToUpper();

}

}

}

To code this in an Azure Function the steps are as follows:

Create a new Azure Function (C# HTTP trigger);

Create a bin folder if one does not exist. For this use Kudu. The URL will be in the following form (replace tcdemos with the name of the Function App being used): https://tcdemos.scm.azurewebsites.net/DebugConsole/?shell=powershell

Upload the assembly/ies to the new bin folder;

Add the following code to the run.csx file:

#r "D:\home\site\wwwroot\MapTest\bin\ExternalAssembly.dll"

using System.Net;

using System.IO;

using System.Xml;

using System.Xml.Xsl;

using ExternalAssembly;

public static async Task<HttpResponseMessage> Run(HttpRequestMessage req, TraceWriter log)

{

log.Info("C# HTTP trigger function processed a request.");

var input = await req.Content.ReadAsStringAsync();

string mapURI = req.Headers.GetValues("MapURI").First();

string output;

var transform = new XslCompiledTransform();

XsltSettings settings = new XsltSettings(true, true);

var resolver = new XmlUrlResolver();

XsltArgumentList argumentList = new XsltArgumentList();

//Add Extension Objects

ExtCall ex = new ExtCall();

argumentList.AddExtensionObject(@"http://schemas.microsoft.com/BizTalk/2003/ScriptNS0", ex);

using (StringReader stringReader = new StringReader(input))

{

using (XmlReader xmlReader = XmlReader.Create(stringReader))

{

using (StringWriter stringWriter = new StringWriter())

{

transform.Load(mapURI, settings, resolver);

transform.Transform(xmlReader, argumentList, stringWriter);

output = stringWriter.ToString();

}

}

}

return req.CreateResponse(HttpStatusCode.OK, output);

}

There are a couple of things to notice here. First, a #r directive must be added so that the framework loads the referenced assembly. Second, the objects needed by the XSLT map are instantiated and then added to an XsltArgumentList object, together with a namespace that matches the one declared in the XSLT map.
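As a possible variation, the extension objects need not be hard-coded. The sketch below reads an Extension Object XML file of the shape shown earlier in this section and registers each entry by reflection. This approach is not part of the original solution; the class name ExtensionObjectLoader is hypothetical, and the sketch assumes every listed assembly can be resolved at run time (for example because it is in the bin folder and referenced with #r) and that each class has a public parameterless constructor:

```csharp
using System;
using System.Xml.Linq;
using System.Xml.Xsl;

public static class ExtensionObjectLoader
{
    // Builds an XsltArgumentList from the content of an Extension Object XML file.
    public static XsltArgumentList Load(string extensionObjectsXml)
    {
        var arguments = new XsltArgumentList();
        XDocument document = XDocument.Parse(extensionObjectsXml);

        foreach (XElement element in document.Root.Elements("ExtensionObject"))
        {
            string namespaceUri = (string)element.Attribute("Namespace");

            // Assembly-qualified type name: "ClassName, AssemblyName".
            string typeName = (string)element.Attribute("ClassName")
                + ", " + (string)element.Attribute("AssemblyName");

            // throwOnError = true, so an unresolvable assembly or class fails loudly.
            Type type = Type.GetType(typeName, true);

            arguments.AddExtensionObject(namespaceUri, Activator.CreateInstance(type));
        }

        return arguments;
    }
}
```

Because the BizTalk file records a full assembly name including version and public key token, the uploaded assembly would have to match it exactly for Type.GetType to succeed.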

Update: In the November 2017 Logic Apps live webcast, future support for extension objects was announced (https://www.youtube.com/watch?v=JAUYcQ_ENDU).

7. How to Use a BizTalk Map that Utilizes Database Functoids

Problem: If a BizTalk map uses the Database functoids.

Solution: Replicate the Database functoid functionality in an external assembly. Upload this new external assembly to a new Azure Function.

By now, having read the previous sections, the reader should feel confident in tackling this problem on their own. The steps are: i) examine the XSLT produced by the BizTalk mapper, ii) understand how it works and determine what components are required, iii) upload them to an Azure Function and test, and finally iv) configure the required Logic App actions to call the Azure Function.

Consider a simple BizTalk map that looks up a customer ID to retrieve a telephone contact:

The XSLT generated for this map looks like this:

<?xml version="1.0" encoding="UTF-16"?>

<xsl:stylesheet xmlns:xsl="http://www.w3.org/1999/XSL/Transform" xmlns:msxsl="urn:schemas-microsoft-com:xslt" xmlns:var="http://schemas.microsoft.com/BizTalk/2003/var" exclude-result-prefixes="msxsl var s0 ScriptNS0" version="1.0" xmlns:s0="http://AzureBizTalkMapsDemo/Person" xmlns:ns0="http://AzureBizTalkMapsDemo/Contact" xmlns:ScriptNS0="http://schemas.microsoft.com/BizTalk/2003/ScriptNS0">

<xsl:output omit-xml-declaration="yes" method="xml" version="1.0" />

<xsl:template match="/">

<xsl:apply-templates select="/s0:Person" />

</xsl:template>

<xsl:template match="/s0:Person">

<ns0:Contact>

<Title>

<xsl:value-of select="Title/text()" />

</Title>

<Forename>

<xsl:value-of select="Forename/text()" />

</Forename>

<Surname>

<xsl:value-of select="Surname/text()" />

</Surname>

<xsl:variable name="var:v1" select="ScriptNS0:DBLookup(0 , string(ID/text()) , &quot;Provider=SQLOLEDB; Data Source=.;Initial Catalog=Demo;Integrated Security=SSPI;&quot; , &quot;Customers&quot; , &quot;CustomerID&quot;)" />

<xsl:variable name="var:v2" select="ScriptNS0:DBValueExtract(string($var:v1) , &quot;Phone&quot;)" />

<Phone>

<xsl:value-of select="$var:v2" />

</Phone>

</ns0:Contact>

<xsl:variable name="var:v3" select="ScriptNS0:DBLookupShutdown()" />

</xsl:template>

</xsl:stylesheet>

Immediately we can see the declared extension object namespace that calls out to external functions. When validating a map an Extension Object XML file is also created (in addition to the XSLT file). For this database functoid map the file looks like:

<ExtensionObjects>

<ExtensionObject Namespace="http://schemas.microsoft.com/BizTalk/2003/ScriptNS0" AssemblyName="Microsoft.BizTalk.BaseFunctoids, Version=3.0.1.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" ClassName="Microsoft.BizTalk.BaseFunctoids.FunctoidScripts" />

</ExtensionObjects>

If a .NET assembly browser and disassembler (e.g. ILSpy) is used on the extension object's assembly (see Appendix A), we can see exactly what this object does, or at least the three methods that are of interest (DBLookup, DBValueExtract & DBLookupShutdown).

DBLookup does the following:

creates an OleDbConnection to the SQL database;

creates an OleDbCommand "SELECT * FROM {table} WHERE {column} = {?}";

adds the value to be looked up as an OleDbParameter;

calls the OleDbCommand’s ExecuteReader method;

iterates the result set, an IDataReader, and stores the results in a Hashtable;

calls the IDataReader’s Close method to close the database connection;

returns an index for the DBValueExtract method to use;

DBValueExtract does the following:

checks that a Hashtable exists for this extract, using the index returned by the DBLookup method;

checks that the column name exists in the Hashtable;

extracts the column value;

returns the result as a string value;

DBLookupShutdown does the following:

returns string.Empty;

This is really just for completeness, that is, it follows the usual practice of opening a DB connection, reading from the DB, and finally closing the DB connection (the DBLookup method has already done this). Also, notice that the result is stored in a variable (var:v3) at the bottom of the stylesheet. Using a variable in this way allows a script to be called without it affecting the XSLT output; the variable is never used.

Bringing this all together, all that needs to be done is to write a custom assembly that has the same three methods and functionality, e.g.:
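A minimal sketch of such an assembly follows; it is an illustration of the behaviour described above rather than the BizTalk implementation. The namespace FunctionHelper and the class name DatabaseScripts are taken from the run.csx code in this section. The method signatures are inferred from the calls in the generated XSLT and are an assumption, as are the decisions to keep only the first matching row and to compare column names case-insensitively. It targets the .NET Framework, where System.Data.OleDb is available:

```csharp
using System;
using System.Collections;
using System.Data;
using System.Data.OleDb;

namespace FunctionHelper
{
    public class DatabaseScripts
    {
        // One Hashtable (column name -> value) per lookup index.
        private readonly Hashtable _rows = new Hashtable();

        public int DBLookup(int index, string value, string connectionString, string table, string column)
        {
            // Table and column names come from the map itself, not from message data;
            // only the looked-up value is passed as a parameter.
            string sql = "SELECT * FROM " + table + " WHERE " + column + " = ?";
            var row = new Hashtable(StringComparer.OrdinalIgnoreCase);

            using (var connection = new OleDbConnection(connectionString))
            using (var command = new OleDbCommand(sql, connection))
            {
                command.Parameters.AddWithValue("@p0", value);
                connection.Open();

                using (IDataReader reader = command.ExecuteReader())
                {
                    // Assumption: only the first matching row is kept.
                    if (reader.Read())
                    {
                        for (int i = 0; i < reader.FieldCount; i++)
                        {
                            row[reader.GetName(i)] = reader.GetValue(i);
                        }
                    }
                }
            }

            _rows[index] = row;
            return index;
        }

        public string DBValueExtract(int index, string columnName)
        {
            var row = _rows[index] as Hashtable;
            if (row == null || !row.ContainsKey(columnName))
            {
                return string.Empty;
            }

            object value = row[columnName];
            return value == null || value == DBNull.Value ? string.Empty : value.ToString();
        }

        // Kept only so the generated XSLT can call it; the connection is already closed.
        public string DBLookupShutdown()
        {
            return string.Empty;
        }
    }
}
```

Note that the connection string embedded in the example map uses integrated security against a local SQL Server, so it would need to be changed to one that is reachable from the Azure Function.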

Once the external assembly is built, follow section 6 and create a new Azure Function. Upload the new external assembly to the bin folder and change the run.csx file as follows (the #r directive, the using FunctionHelper; statement and the extension object lines are the changes):

#r "D:\home\site\wwwroot\MapTest\bin\FunctionHelper.dll"

using System.Net;

using System.IO;

using System.Xml;

using System.Xml.Xsl;

using FunctionHelper;

public static async Task<HttpResponseMessage> Run(HttpRequestMessage req, TraceWriter log)

{

log.Info( "C# HTTP trigger function processed a request." );

var input = await req.Content.ReadAsStringAsync();

string mapURI = req.Headers.GetValues("MapURI").First();

string output;

var transform = new XslCompiledTransform();

XsltSettings settings = new XsltSettings(true, true);

var resolver = new XmlUrlResolver();

XsltArgumentList argumentList = new XsltArgumentList();

//Add Extension Objects

DatabaseScripts dbs = new DatabaseScripts();

argumentList.AddExtensionObject(@"http://schemas.microsoft.com/BizTalk/2003/ScriptNS0", dbs);

using (StringReader stringReader = new StringReader(input))

{

using (XmlReader xmlReader = XmlReader.Create(stringReader))

{

using (StringWriter stringWriter = new StringWriter())

{

transform.Load(mapURI, settings, resolver);

transform.Transform(xmlReader, argumentList, stringWriter);

output = stringWriter.ToString();

}

}

}

return req.CreateResponse(HttpStatusCode.OK, output);

}

From a purely technical viewpoint there is nothing stopping you from adding the entire Microsoft.BizTalk.BaseFunctoids assembly to the Azure Function bin folder and, therefore, making the entire range of functoids available. However, there will be a grey area around licensing. There are instances where certain BizTalk artefacts can be installed on non-BizTalk servers (e.g. WCF LOB Adapters), but this is not likely to apply to the BaseFunctoids assembly.

8. How to Use a BizTalk Map that Makes Use of Custom Functoids

The solution to this largely depends on the complexity of the custom functoid. Clearly a functoid that uses AddScriptTypeSupport is going to be more complex than one that merely does string manipulation. Essentially the functionality in the custom functoid needs to be replicated in an assembly class library where the class is not derived from the BaseFunctoid class.

If specific functionality is required that resides in the BaseFunctoid class a .NET disassembler can be used to examine the function(s) and then replicate it in the new assembly class library.

Once the required functionality has been isolated in this new assembly it can be added as an extension object as shown in section 7.

There may be cases where the custom functoid is dependent on some external source, for example, a functoid that makes a call to SSO to retrieve a connection string. In this scenario, a new credential store is required, but, whatever replacement is chosen, so long as the method signature remains the same in the class library the extension object will still work.
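As an illustration of keeping the method signature stable while swapping the credential store, the sketch below reads the connection string from an environment variable, which is how Azure Function application settings are exposed to code. The class name SettingsLookup, the method name GetConnectionString and its single string parameter are hypothetical; in practice they would be whatever the original functoid's method was called:

```csharp
using System;

namespace FunctionHelper
{
    public class SettingsLookup
    {
        // Hypothetical replacement for a functoid method that previously called SSO.
        // The signature stays the same, so the XSLT map does not need to change.
        public string GetConnectionString(string applicationName)
        {
            string value = Environment.GetEnvironmentVariable(applicationName);

            if (string.IsNullOrEmpty(value))
            {
                throw new InvalidOperationException(
                    "No application setting named '" + applicationName + "' was found.");
            }

            return value;
        }
    }
}
```

An instance of this class would then be registered with AddExtensionObject under the namespace the map already uses for the custom functoid.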

9. Using an XSLT 2.0/3.0 Map in Logic Apps and Azure Functions

There is no XSLT 2.0/3.0 processor offered by Microsoft. While XSLT 2.0 and 3.0 have more functionality than 1.0, they cannot be used in BizTalk maps because there is no XSLT 2.0/3.0 support in .NET. The same applies to the Transform XML action in Logic Apps.

There are, however, a couple of XSLT processors that are available for .NET (e.g. XQSharp & Saxon 9.x). It is possible, then, to write an Azure Function to execute an XSLT 2.0/3.0 map. There is an open source version of Saxon 9.x available and what follows is an example of how to use this processor in an Azure Function.

Download and install the Saxon 9.x Open Source package (see http://saxon.sourceforge.net/#F9.7HE);

Create a new Azure Function (C# HTTP trigger). In this example the name used was: TransformXSLT2;

Create a bin folder if one does not exist. For this use Kudu. The URL will be in the following form (replace tcdemos with the name of the Function App being used): https://tcdemos.scm.azurewebsites.net/DebugConsole/?shell=powershell

Upload the eight Saxon 9.x runtime assemblies to the new bin folder (IKVM.OpenJDK.Charsets.dll, IKVM.OpenJDK.Core.dll, IKVM.OpenJDK.Text.dll, IKVM.OpenJDK.Util.dll, IKVM.OpenJDK.XML.API.dll, IKVM.Runtime.dll, saxon9he.dll, saxon9he-api.dll). The Azure Function files list should now look similar to this:

Add the following code to the run.csx file (note that TransformXSLT2 in the #r path will change depending on the name of the function created in step 2):

#r "D:\home\site\wwwroot\TransformXSLT2\bin\saxon9he-api.dll"

using System.Net;

using System.IO;

using System.Xml;

using Saxon.Api;

public static async Task<HttpResponseMessage> Run(HttpRequestMessage req, TraceWriter log)

{

log.Info("C# HTTP trigger function processed a request.");

string mapURI = req.Headers.GetValues("MapURI").First();

// Get XML input to be transformed

var input = await req.Content.ReadAsStreamAsync();

// Compile stylesheet

Processor processor = new Processor();

XsltCompiler compiler = processor.NewXsltCompiler();

XsltExecutable executable = compiler.Compile(new Uri(mapURI));

// Do transformation to a destination

var destination = new DomDestination();

var transformer = executable.Load();

transformer.SetInputStream(input, new Uri(mapURI));

transformer.Run(destination);

return req.CreateResponse(HttpStatusCode.OK, destination.XmlDocument.InnerXml);

}

The XSLT 2.0 maps can still be added to the Integration Account maps;

When this Azure Function is called, use an Integration Account Artifact LookUp action to retrieve the contentLink.uri of the XSLT map and pass it in the header.

The Saxon open-source XSLT processor implements the following: XSLT 2.0, 3.0, XPath 2.0, 3.0 and 3.1, and XQuery 1.0, 3.0, and 3.1. This provides a larger set of operations and functionality than XSLT 1.0 which in practice means there would be no need to use custom assemblies or inline code for complex operations.

Note that Saxon will not support msxsl:script, as this is a Microsoft-specific extension to their XSLT processor.

Update: In the November 2017 Logic Apps live webcast, future support for XSLT 3.0 was announced (https://www.youtube.com/watch?v=JAUYcQ_ENDU).

10. Serializing & Deserializing XML To and From .NET Classes

For completeness it is worth briefly mentioning transformations that are done using .NET classes (called from orchestrations), that is, by deserializing the source message into a .NET class, transforming this class to some other class, and then serializing this new class to a destination message.

This technique was often used in the past for performance reasons, although since the release of BizTalk 2013 BizTalk maps use the faster XslCompiledTransform class. Porting these classes to an Azure Function should be straightforward, and previous sections have already explained how to upload and use external .NET assemblies.
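For readers who have not met this pattern, a minimal sketch using XmlSerializer is given below. It assumes Person and Contact messages shaped like the ones used in the earlier maps (a namespace-qualified root with unqualified Title, Forename and Surname children); the class and method names are hypothetical, and the classes are assumed to live in a compiled class library:

```csharp
using System.IO;
using System.Xml.Schema;
using System.Xml.Serialization;

[XmlRoot("Person", Namespace = "http://AzureBizTalkMapsDemo/Person")]
public class Person
{
    [XmlElement(Form = XmlSchemaForm.Unqualified)] public string Title { get; set; }
    [XmlElement(Form = XmlSchemaForm.Unqualified)] public string Forename { get; set; }
    [XmlElement(Form = XmlSchemaForm.Unqualified)] public string Surname { get; set; }
}

[XmlRoot("Contact", Namespace = "http://AzureBizTalkMapsDemo/Contact")]
public class Contact
{
    [XmlElement(Form = XmlSchemaForm.Unqualified)] public string Title { get; set; }
    [XmlElement(Form = XmlSchemaForm.Unqualified)] public string Forename { get; set; }
    [XmlElement(Form = XmlSchemaForm.Unqualified)] public string Surname { get; set; }
}

public static class PersonToContact
{
    public static string Transform(string personXml)
    {
        // 1. Deserialize the source message into a .NET object.
        Person person;
        using (var reader = new StringReader(personXml))
        {
            person = (Person)new XmlSerializer(typeof(Person)).Deserialize(reader);
        }

        // 2. Transform one class into the other in plain C#.
        var contact = new Contact
        {
            Title = person.Title,
            Forename = person.Forename,
            Surname = person.Surname
        };

        // 3. Serialize the result to the destination message.
        var namespaces = new XmlSerializerNamespaces();
        namespaces.Add("ns0", "http://AzureBizTalkMapsDemo/Contact");

        using (var writer = new StringWriter())
        {
            new XmlSerializer(typeof(Contact)).Serialize(writer, contact, namespaces);
            return writer.ToString();
        }
    }
}
```

An Azure Function would call PersonToContact.Transform with the request body and return the result, in the same way as the XSLT-based functions above.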

11. Some Further Considerations Using XSLT in Azure Logic App and Azure Functions

There are a few things to bear in mind when using the Logic App Transform XML action and Azure Functions:

The Azure Logic App Transform XML action uses the .NET XslCompiledTransform class. If BizTalk maps are being migrated, remember that BizTalk maps prior to BizTalk 2013 used the XslTransform class. For more information see: Migrating From the XslTransform Class (/en-us/dotnet/standard/data/xml/migrating-from-the-xsltransform-class).

By default, the XslCompiledTransform class disables support for the XSLT document() function and embedded scripting. In order to overcome some of the scenarios presented in the previous sections it was required to enable these in the Azure Functions. For more information on XSLT Security Considerations and best practice see: /en-us/dotnet/standard/data/xml/xslt-security-considerations.
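As a reminder of what enabling these features looks like, the following minimal sketch loads a stylesheet with both the document() function and embedded scripting switched on through the XsltSettings class. The TrustedTransform class and its Run method are hypothetical names. The sketch assumes the stylesheet comes from a trusted source, and note that, as far as is known, msxsl:script blocks are only supported on the .NET Framework and not on .NET Core or later.

```csharp
using System;
using System.IO;
using System.Xml;
using System.Xml.Xsl;

// Hypothetical helper, not part of the original article.
public static class TrustedTransform
{
    public static string Run(string xslt, string inputXml)
    {
        // First argument enables document(), second enables msxsl:script.
        // Both are off by default; only enable them for trusted stylesheets.
        var settings = new XsltSettings(true, true);

        var transform = new XslCompiledTransform();
        using (var stylesheet = XmlReader.Create(new StringReader(xslt)))
        {
            // The resolver is used for xsl:import and xsl:include.
            transform.Load(stylesheet, settings, new XmlUrlResolver());
        }

        using (var input = XmlReader.Create(new StringReader(inputXml)))
        using (var output = new StringWriter())
        {
            transform.Transform(input, null, output);
            return output.ToString();
        }
    }
}
```

If a map needs neither feature, XsltSettings.Default keeps both disabled and is the safer choice.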

None of the solutions presented have considered the performance of the transformations, or how to handle large transformations. They have been solely concerned with implementing message patterns that are commonly used in BizTalk (e.g. Aggregator, Splitter) and migrating complex transforms that cannot be done with the Logic App Transform XML action. If the performance of the XSLT needs to be considered, see Understanding the BizTalk Mapper: Part 12 – Performance and Maintainability: http://www.bizbert.com/bizbert/content/binary/Understanding%20the%20BizTalk%20Mapper%20v2.0.pdf

There are some differences between Logic Apps and Azure Functions. Azure Functions have an associated warm up time and no SLA is provided for Functions running under Consumption Plans. See https://azure.microsoft.com/en-us/support/legal/sla/functions/v1_0. For other differences between Logic Apps and Azure Functions see https://toonvanhoutte.wordpress.com/2017/09/12/azure-functions-vs-azure-logic-apps/

12. Summary

At this point the developer should feel confident in migrating BizTalk maps to Logic Apps as well as writing XSLT maps that are not constrained by the limitations of the Transform XML action. The sections have introduced the developer to a number of techniques for using msxsl:script, XSL classes, Azure storage etc., all of which can be used together to overcome any limitations.

The previous sections have provided solutions to migrating and using complex XSLT maps created in BizTalk. But it should be borne in mind that the obvious solution isn’t necessarily the best choice. For example, take the case of an XSLT map that calls out to an external assembly. At first glance it seems a good fit for the approach in part 2 #6. However, if all the external assembly does is encapsulate code that is shared across multiple maps, then an alternative solution would be to copy and paste the assembly code into an msxsl:script block in a stylesheet, as in part 1 #7. This solution would allow the Logic Apps Transform XML action to be used instead of creating an Azure Function and having the additional step in the Logic App of using an Integration Account Artifact LookUp action to retrieve the XSLT map URI.

Note: Since the writing of this series there have been a number of updates to the Transform XML action, notably, support for external assemblies, non-XML output and an XSLT 3.0 processor. To understand what this means for migrating BizTalk maps see: Migrating BizTalk Maps to Azure Logic Apps: Shortcomings & Solutions (an update)

13. References

- Azure Functions C# developer reference: /en-us/azure/azure-functions/functions-reference-csharp

- System.Xml.Xsl Namespace: https://msdn.microsoft.com/en-us/library/system.xml.xsl%28v=vs.110%29.aspx

- Azure Logic Apps - XSLT with parameters: https://azure.microsoft.com/en-us/resources/templates/201-logic-app-xslt-with-params/

- XSLT Stylesheet Scripting Using <msxsl:script>: https://msdn.microsoft.com/en-us/library/533texsx(v=vs.110).aspx

- Database Functoids Reference: https://msdn.microsoft.com/en-us/library/aa562112.aspx

- Get started with Azure File storage on Windows: /en-us/azure/storage/storage-dotnet-how-to-use-files

- CloudStorageAccount Class: /en-us/dotnet/api/microsoft.windowsazure.storage.cloudstorageaccount?view=azure-dotnet

- Understanding the BizTalk Mapper: http://www.bizbert.com/bizbert/content/binary/Understanding%20the%20BizTalk%20Mapper%20v2.0.pdf

- BizTalk Map as an Azure Function: http://microsoftintegration.guru/2017/05/11/biztalk-map-as-an-azure-function/

- Migrating From the XslTransform Class: /en-us/dotnet/standard/data/xml/migrating-from-the-xsltransform-class

- XSLT Security Considerations: /en-us/dotnet/standard/data/xml/xslt-security-considerations

- ILSpy (Open Source .NET Decompiler): http://ilspy.net

- Saxon-HE 9.7 (XSLT 2.0 Processor): http://saxon.sourceforge.net/#F9.7HE


14. Appendix A: BizTalk Database Functoids

// Microsoft.BizTalk.BaseFunctoids.FunctoidScripts
[ThreadStatic]
private static Hashtable myDBFunctoidHelperList;

private class DBFunctoidHelper
{
    private string error;
    private string connectionString;
    private string table;
    private string column;
    private string value;
    private OleDbConnection conn;
    private Hashtable mapValues;

    public string Error
    {
        get { return this.error; }
        set { this.error = value; }
    }

    public string ConnectionString
    {
        get { return this.connectionString; }
        set { this.connectionString = value; }
    }

    public string Table
    {
        get { return this.table; }
        set { this.table = value; }
    }

    public string Column
    {
        get { return this.column; }
        set { this.column = value; }
    }

    public string Value
    {
        get { return this.value; }
        set { this.value = value; }
    }

    public OleDbConnection Connection
    {
        get { return this.conn; }
    }

    public Hashtable MapValues
    {
        get { return this.mapValues; }
    }

    public DBFunctoidHelper()
    {
        this.conn = new OleDbConnection();
        this.mapValues = new Hashtable();
    }
}

// Microsoft.BizTalk.BaseFunctoids.FunctoidScripts
private static void InitDBFunctoidHelperList()
{
    if (FunctoidScripts.myDBFunctoidHelperList == null)
    {
        FunctoidScripts.myDBFunctoidHelperList = new Hashtable();
    }
}

// Microsoft.BizTalk.BaseFunctoids.FunctoidScripts
public string DBLookup(int index, string value, string connectionString, string table, string column)
{
    FunctoidScripts.DBFunctoidHelper dBFunctoidHelper = null;
    bool flag = false;
    FunctoidScripts.InitDBFunctoidHelperList();
    if (!FunctoidScripts.myDBFunctoidHelperList.Contains(index))
    {
        dBFunctoidHelper = new FunctoidScripts.DBFunctoidHelper();
        FunctoidScripts.myDBFunctoidHelperList.Add(index, dBFunctoidHelper);
    }
    else
    {
        dBFunctoidHelper = (FunctoidScripts.DBFunctoidHelper)FunctoidScripts.myDBFunctoidHelperList[index];
    }
    try
    {
        if (dBFunctoidHelper.ConnectionString == null || (dBFunctoidHelper.ConnectionString != null && string.Compare(dBFunctoidHelper.ConnectionString, connectionString, StringComparison.Ordinal) != 0) || dBFunctoidHelper.Connection.State != ConnectionState.Open)
        {
            flag = true;
            dBFunctoidHelper.MapValues.Clear();
            dBFunctoidHelper.Error = "";
            if (dBFunctoidHelper.Connection.State == ConnectionState.Open)
            {
                dBFunctoidHelper.Connection.Close();
            }
            dBFunctoidHelper.ConnectionString = connectionString;
            dBFunctoidHelper.Connection.ConnectionString = connectionString;
            dBFunctoidHelper.Connection.Open();
        }
        if (flag || string.Compare(dBFunctoidHelper.Table, table, StringComparison.Ordinal) != 0 || string.Compare(dBFunctoidHelper.Column, column, StringComparison.OrdinalIgnoreCase) != 0 || string.Compare(dBFunctoidHelper.Value, value, StringComparison.Ordinal) != 0 || (dBFunctoidHelper.Error != null && dBFunctoidHelper.Error.Length > 0))
        {
            dBFunctoidHelper.Table = table;
            dBFunctoidHelper.Column = column;
            dBFunctoidHelper.Value = value;
            dBFunctoidHelper.MapValues.Clear();
            dBFunctoidHelper.Error = "";
            using (OleDbCommand oleDbCommand = new OleDbCommand(string.Concat(new string[]
            {
                "SELECT * FROM ",
                table,
                " WHERE ",
                column,
                "= ?"
            }), dBFunctoidHelper.Connection))
            {
                OleDbParameter oleDbParameter = new OleDbParameter();
                oleDbParameter.Value = value;
                oleDbCommand.Parameters.Add(oleDbParameter);
                IDataReader dataReader = oleDbCommand.ExecuteReader();
                if (dataReader.Read())
                {
                    for (int i = 0; i < dataReader.FieldCount; i++)
                    {
                        string text = dataReader.GetName(i);
                        text = text.ToLower(CultureInfo.InvariantCulture);
                        object value2 = dataReader.GetValue(i);
                        dBFunctoidHelper.MapValues[text] = value2;
                    }
                }
                dataReader.Close();
            }
        }
    }
    catch (OleDbException ex)
    {
        if (ex.Errors.Count > 0)
        {
            dBFunctoidHelper.Error = ex.Errors[0].Message;
        }
    }
    catch (Exception ex2)
    {
        dBFunctoidHelper.Error = ex2.Message;
    }
    finally
    {
        if (dBFunctoidHelper.Connection.State == ConnectionState.Open)
        {
            dBFunctoidHelper.Connection.Close();
        }
    }
    return index.ToString(CultureInfo.InvariantCulture);
}

// Microsoft.BizTalk.BaseFunctoids.FunctoidScripts
public string DBValueExtract(int index, string columnName)
{
    string result = "";
    FunctoidScripts.InitDBFunctoidHelperList();
    if (FunctoidScripts.myDBFunctoidHelperList.Contains(index) && !string.IsNullOrEmpty(columnName))
    {
        FunctoidScripts.DBFunctoidHelper dBFunctoidHelper = (FunctoidScripts.DBFunctoidHelper)FunctoidScripts.myDBFunctoidHelperList[index];
        columnName = columnName.ToLower(CultureInfo.InvariantCulture);
        object obj = dBFunctoidHelper.MapValues[columnName];
        if (obj != null)
        {
            result = obj.ToString();
        }
    }
    return result;
}

// Microsoft.BizTalk.BaseFunctoids.FunctoidScripts
public string DBLookupShutdown()
{
    return string.Empty;
}