SharePoint 2013 Troubleshooting: ErrorID 808/829 – The object is not found (260 char MAX_PATH)

Introduction

This situation occurs when you crawl a file share that contains paths longer than the 260-character MAX_PATH limit. It leads to ghosted files in the crawl index and can produce a large number of ErrorID 808 / 829 entries in the crawl database.

From the user's point of view, files and folders over this limit cannot be retrieved through the Search Service in SharePoint. The problem is related to how the SharePoint crawler handles UNC paths that are too long. Preferably, manage the file system so that it never goes over this limit. This is a rare condition, but it produces no explicit evidence, so you will not notice it unless you already suspect it. It can also degrade crawl performance over time if many files and folders are above the limit.

The problem does not occur in SharePoint Server 2016, although other browser limitations still apply there.

In a real-world scenario, pay attention when crawling a file share that may exceed this limit, because many legacy applications are subject to it as well.

Problem Description

Crawl File Share with Max Path - The Object is not found

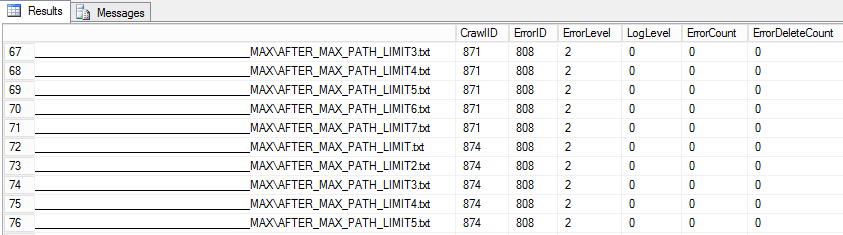

Crawling a file system with a UNC path longer than MAX_PATH produces ErrorID 808 / 829 (The object is not found) in dbo.MSSCrawlURLLog.

The crawl results for these files do not appear in the crawl logs (GUI), but they do appear in the database with the "The object is not found" error message.

Possible scenarios for this problem:

- Scenario A : You're running SharePoint Server 2013 SP1 or later update (ErrorID 808)

- Scenario B : You're running SharePoint Server 2013 (August 2015) or later update (ErrorID 829)

Impacted & Working versions

| Version | File Crawl Result | Display GUI | ErrorID |

| SharePoint 2013 : 15.0.4569.1000 (SP1) | The object is not found | no | 808 |

| SharePoint 2013 : 15.0.4745.1000 (August 2015) | The object is not found | no | 829 |

| SharePoint 2016 : 16.0.4351.1000 (RTM) - Default Search Service Application | Crawled | yes, success | 0 |

Diagnostic Steps: The Lab Scenario

In this example, we have set up the following crawl configuration:

- SharePoint 2013 Farm

- SharePoint Server 2013 Search component

- A basic Search Center

- Indexing of a Windows Server 2012 R2 file share

- A Content Source: \filesrv\share\.*.)

- A custom file structure for this demonstration:

- One control folder below the 260-character limit

- One very long folder path (with lots of sub-folders)

- Some test documents before and after the MAX_PATH limit

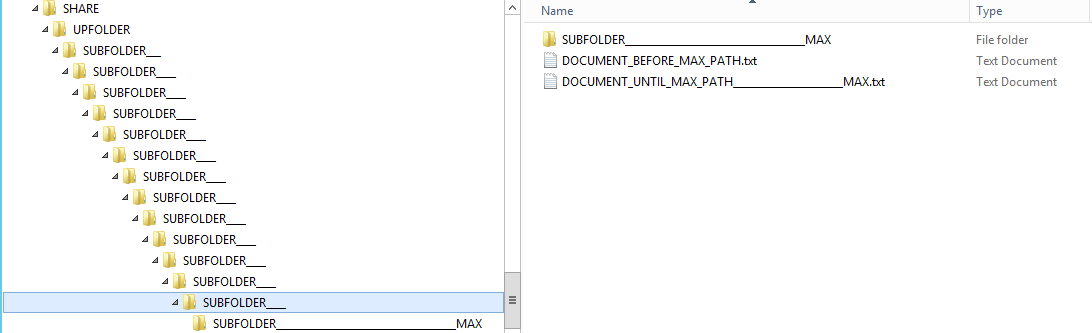

File System - Sample

View of a problematic file system used to demonstrate this scenario:

These folders contain:

- Sub folder before the MAX_PATH (local)

- Folder and files AFTER the MAX_PATH

Note

You cannot create a file with Windows Explorer in the SUBFOLDER____MAX folder, because you will receive the error: "The file name you are creating is too long"

If you want to create a file beyond this limit, map a drive to one of the subfolders.

This allows you to create a file whose full path is over the 260-character limit.

Detecting the issue

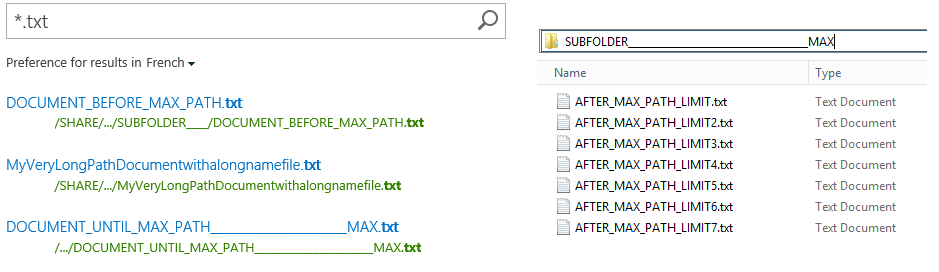

The problem is viewable (or not) in different places:

At the end-user desk

- Users cannot find documents they created on an indexed file system when the path is too long.

At the search administrator level:

- The crawled URLs are not present in the crawl log

- The crawled URLs do not appear as errors in the crawl log either

The search administrator can run a full or incremental crawl, but it will not fix the problem.

At the SQL Level:

The easiest way to find the problematic URLs is to run the following SQL query against the dbo.MSSCrawlURLLog table of the Search_Service_Application_CrawlStoreDB database:

/****** Script for SelectTopNRows command from SSMS ******/

SELECT TOP 1000

[PartitionID],[DocID],[AccessURL],[AccessHash],[CrawlID],[ErrorID],[ErrorLevel],[ErrorDesc],[LogTime],[LogLevel],[ErrorCount],[ErrorDeleteCount],[FirstErrorTime],[FirstErrorDeleteTime],[ContentSourceID],[StartAddressID],[ParentDocID],[TransactionFlags],[CrawlScope]

FROM [Search_Service_Application_CrawlStoreDB].[dbo].[MSSCrawlURLLog]

Results will show you all URLs in Error. Do the same for dbo.MSSCrawlURL (crawled) and compare results.
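To narrow the result set to the two error codes discussed in this article, the query can be restricted with a WHERE clause. The following is a minimal sketch only: the function name New-CrawlErrorQuery is hypothetical, the column names are taken from the query above, and the WITH (NOLOCK) hint is an assumption made to keep the read as unobtrusive as possible. The commented call assumes that Invoke-Sqlcmd (SqlServer module) is installed and that SQLSRV is a placeholder for your SQL instance.

```powershell
# Hypothetical helper: builds a read-only query that lists only the
# "object is not found" entries (ErrorID 808 / 829) from the crawl log table.
function New-CrawlErrorQuery {
    param(
        # Database name as used in this article; adjust to your crawl store.
        [string]$Database = 'Search_Service_Application_CrawlStoreDB',
        [int[]]$ErrorId = @(808, 829)
    )
    $idList = $ErrorId -join ', '
    return @"
SELECT [DocID], [AccessURL], [ErrorID], [ErrorDesc], [LogTime], [ErrorCount]
FROM [${Database}].[dbo].[MSSCrawlURLLog] WITH (NOLOCK)
WHERE [ErrorID] IN ($idList)
ORDER BY [LogTime] DESC;
"@
}

$query = New-CrawlErrorQuery
$query

# Assumption: the SqlServer module is available and 'SQLSRV' is your instance.
# Invoke-Sqlcmd -ServerInstance 'SQLSRV' -Query $query
```

The query only reads data. It never modifies the SharePoint databases.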

Here is the problem: items beyond the 260-character MAX_PATH limit are dropped.

"ErrorID 808 = The object is not found"

"ErrorID 829 = The object is not found"

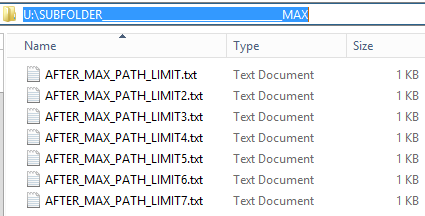

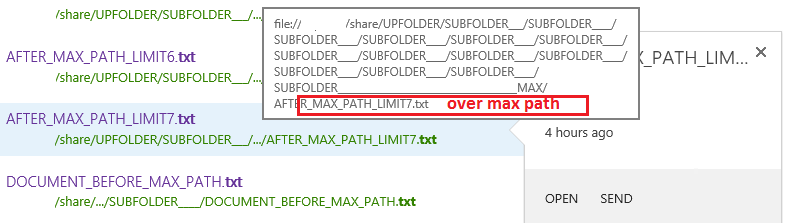

If you look at the path of the sample .txt file, you will see this:

\\filesrv\SHARE\UPFOLDER\ SUBFOLDER___\SUBFOLDER____\SUBFOLDER____\SUBFOLDER____\SUBFOLDER____\SUBFOLDER____\SUBFOLDER____\SUBFOLDER____\SUBFOLDER____\SUBFOLDER____\SUBFOLDER____\SUBFOLDER____\SUBFOLDER____\SUBFOLDER____________________________________MAX\AFTER_MAX_PATH_LIMIT.txt

- Green part: characters up to and including position 259 (260 with the terminating null)

- Red part: characters beyond position 259
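The split between the two parts can be reproduced on any path. The sketch below assumes the 259-character boundary described above; Split-AtMaxPath is a hypothetical helper name, and the sample path is made up for illustration only.

```powershell
# Hypothetical helper: splits a path at the limit so you can see which
# portion falls within it and which portion lies beyond it.
function Split-AtMaxPath {
    param(
        [Parameter(Mandatory)][string]$Path,
        [int]$Limit = 259   # assumption: usable characters, null terminator excluded
    )
    $over   = $Path.Length -gt $Limit
    $within = if ($over) { $Path.Substring(0, $Limit) } else { $Path }
    $beyond = if ($over) { $Path.Substring($Limit) } else { '' }
    [pscustomobject]@{
        Length      = $Path.Length
        OverLimit   = $over
        WithinLimit = $within
        BeyondLimit = $beyond
    }
}

# Illustrative path only: a 16-character share root plus a long file name.
$sample = '\\filesrv\share\' + ('a' * 300) + '.txt'
Split-AtMaxPath -Path $sample | Format-List Length, OverLimit, BeyondLimit
```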

It is important to know that the UNC prefix counts toward the limit: if your UNC path is longer than in the example above, fewer characters remain for the item path (including the file extension).

For example : \domain.local\DFSShareName\.. ; \filesrv.domain.local\Share
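As a rough illustration of this budget, the sketch below subtracts the length of the share root from the limit. Get-ItemPathBudget is a hypothetical helper name, and the 259-character default is the boundary assumed throughout this article.

```powershell
# Hypothetical helper: shows how many characters remain for folders and
# file names once the UNC share root has been counted.
function Get-ItemPathBudget {
    param(
        [Parameter(Mandatory)][string]$ShareRoot,
        [int]$Limit = 259   # assumption: usable characters, null terminator excluded
    )
    # Normalize so the root always ends with exactly one backslash.
    $root = $ShareRoot.TrimEnd('\') + '\'
    [pscustomobject]@{
        ShareRoot  = $root
        RootLength = $root.Length
        Remaining  = [Math]::Max(0, $Limit - $root.Length)
    }
}

Get-ItemPathBudget -ShareRoot '\\filesrv\SHARE'
Get-ItemPathBudget -ShareRoot '\\filesrv.domain.local\Share'
```

With these two roots the remaining budget drops from 243 to 230 characters, simply because the server is addressed by its fully qualified name.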

Note

SharePoint Server 2016 successfully indexes a file share whose paths are longer than 260 characters.

Root cause analysis

Why is this occurring?

See : https://msdn.microsoft.com/en-us/library/aa365247%28VS.85%29.aspx#maxpath

In the Windows API (with some exceptions discussed in the following paragraphs), the maximum length for a path is MAX_PATH, which is defined as 260 characters. A local path is structured in the following order: drive letter, colon, backslash, name components separated by backslashes, and a terminating null character.

For example, the maximum path on drive D is "D:\some 256-character path string<NUL>" where "<NUL>" represents the invisible terminating null character for the current system codepage. (The characters < > are used here for visual clarity and cannot be part of a valid path string.)

Why is this a problem for File Share Crawling?

- Because deep folders will not be searchable.

- Because these errors clutter the SQL crawl database.

- Each full crawl logs ErrorID 808 again for every problematic file and folder.

- ErrorID 808 / 829 entries are never removed by the deletion policy; they stay until the crawl log cleanup runs.

The configuration for the deletion of index items is described below, along with the reason why it does not help here:

Manage deletion of index items

In SharePoint Server, the following default policies for indexed items are defined per Search Service Application.

| Policy | Description |

| Delete policy for access denied or file not found | When the crawler encounters an access denied or a file not found error, the item is deleted from the index if the error was encountered in more than ErrorDeleteCountAllowed consecutive crawls AND the duration since the first error is greater than ErrorDeleteIntervalAllowed hours. If both conditions are not met, the item is retried. |

| Delete policy for all other errors | When the crawler encounters errors of types other than access denied or file not found, the item is deleted from the index if the error was encountered in more than ErrorDeleteAllowed consecutive crawls AND the duration since the first error is greater than ErrorIntervalAllowed hours. |

| Delete unvisited policy | During a full crawl, the crawler executes a delete unvisited operation in which it deletes items that are in the crawl history that are not found in the current full crawl. You can use the DeleteUnvisitedMethod property to specify what items get deleted. You can specify the following three values: |

| Re-crawl policy for SharePoint content | This policy applies only to SharePoint content. If the crawler encounters errors when fetching changes from the SharePoint content database for RecrawlErrorCount consecutive crawls AND the duration since first error is RecrawlErrorInterval hours, the system re-crawls that content database. |

Why is ErrorID 808 not cleaned by the deletion policy?

The TechNet documentation states the following. When the crawler encounters an access denied or a file not found error:

- The item is deleted from the index if the error was encountered in more than ErrorDeleteCountAllowed consecutive crawls AND the duration since the first error is greater than ErrorDeleteIntervalAllowed hours.

- If both conditions are not met, the item is retried.
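The documented rule can be expressed as a small decision function. This is only a sketch of the policy as the documentation describes it, not the crawler's actual implementation; Test-DeletePolicy and its parameter names for the observed values are hypothetical.

```powershell
# Hypothetical model of the documented "access denied / file not found" policy:
# delete only when BOTH thresholds are exceeded, otherwise retry.
function Test-DeletePolicy {
    param(
        [int]$ConsecutiveErrorCount,       # consecutive crawls that hit the error
        [double]$HoursSinceFirstError,     # elapsed time since the first error
        [int]$ErrorDeleteCountAllowed,     # threshold from the Search Service Application
        [int]$ErrorDeleteIntervalAllowed   # threshold in hours
    )
    $countExceeded    = $ConsecutiveErrorCount -gt $ErrorDeleteCountAllowed
    $intervalExceeded = $HoursSinceFirstError -gt $ErrorDeleteIntervalAllowed
    if ($countExceeded -and $intervalExceeded) { 'Delete' } else { 'Retry' }
}

# Both thresholds set to 1: two failed crawls over two hours qualify for deletion.
Test-DeletePolicy -ConsecutiveErrorCount 2 -HoursSinceFirstError 2 `
    -ErrorDeleteCountAllowed 1 -ErrorDeleteIntervalAllowed 1

# Only one failed crawl: the count condition is not met, so the item is retried.
Test-DeletePolicy -ConsecutiveErrorCount 1 -HoursSinceFirstError 5 `
    -ErrorDeleteCountAllowed 1 -ErrorDeleteIntervalAllowed 1
```

The model makes one point visible: the rule depends on a per-item count of consecutive errors, so it can only fire if the same item is recognized from one crawl to the next.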

ErrorID 808 (The object is not found) should therefore be deleted according to this policy.

However, even when both conditions are logically met, the errors do not disappear from the database because:

- The file was never really indexed, which is different from a file that was indexed and later deleted from the file system.

- Each new full crawl assigns a new DocID to a file or folder located in a long UNC path.

- Since the DocID is renewed, ErrorDeleteCountAllowed does not seem to be taken into account by the deletion policy.

Testing a shorter deletion policy

For this demonstration, we lower the deletion thresholds for not found / access denied items. This lets you observe the problem without waiting for the default schedule. Remember that this changes the deletion behavior for all your content sources, so make these modifications only in a test environment or for debugging purposes.

$SearchApplication = Get-SPEnterpriseSearchServiceApplication -Identity "Search Service Application"

# Get all default value

$SearchApplication.GetProperty( "ErrorDeleteCountAllowed" )

$SearchApplication.GetProperty( "ErrorDeleteIntervalAllowed" )

$SearchApplication.GetProperty( "ErrorDeleteAllowed" )

$SearchApplication.GetProperty( "ErrorIntervalAllowed" )

$SearchApplication.GetProperty( "DeleteUnvisitedMethod" )

$SearchApplication.GetProperty( "RecrawlErrorCount" )

$SearchApplication.GetProperty( "RecrawlErrorInterval" )

# Set Error deletion settings to minimum

# Only the first two are needed to see the problem

$SearchApplication.SetProperty( "ErrorDeleteCountAllowed" , 1)

$SearchApplication.SetProperty( "ErrorDeleteIntervalAllowed" , 1)

$SearchApplication.SetProperty( "ErrorDeleteAllowed" , 1)

$SearchApplication.SetProperty( "ErrorIntervalAllowed" , 1)

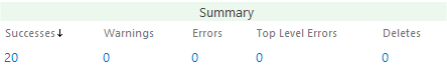

- Run a full crawl twice (ErrorDeleteCountAllowed)

- Wait for an hour (ErrorDeleteIntervalAllowed)

- Run a full crawl again

- Look again at the dbo.MSSCrawlURLLog table in SQL:

Observation:

- ErrorID 808 / 829 entries are still present in the MSSCrawlURLLog table.

- (The file is still not found)
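Because the lowered thresholds apply to every content source, it is worth putting the original values back once the observation is done. The sketch below assumes it runs in the SharePoint Management Shell on a farm server, and that the values are captured before the thresholds are lowered; the variable names are illustrative.

```powershell
# Assumption: SharePoint Management Shell on a farm server, same service
# application name as in the script above.
$ssa = Get-SPEnterpriseSearchServiceApplication -Identity "Search Service Application"

$policyNames = 'ErrorDeleteCountAllowed', 'ErrorDeleteIntervalAllowed',
               'ErrorDeleteAllowed', 'ErrorIntervalAllowed'

# Step 1 (BEFORE lowering the thresholds): remember the current values.
$savedPolicy = @{}
foreach ($name in $policyNames) {
    $savedPolicy[$name] = $ssa.GetProperty($name)
}

# ... lower the thresholds and run the test crawls here ...

# Step 2 (AFTER the test): put the remembered values back.
foreach ($name in $policyNames) {
    $ssa.SetProperty($name, $savedPolicy[$name])
}
```

Keeping the saved values in a hashtable avoids hard-coding defaults, which may differ between farms.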

How to clean up dbo.MSSCrawlURLLog?

- 1. Run an index reset. However, this is not always possible in a production environment.

- 2. Perform a crawl log cleanup.

By default, the "Crawl Log Cleanup for Search Application Search Service Application" timer job runs:

- Daily between 00:01:00 and 00:33:00

- With a default of CrawlLogCleanUpIntervalInDays = 90 (minimum = 1)

Forcing the clean-up : (https://blogs.msdn.microsoft.com/spses/2015/07/09/sharepoint-2013-crawl-database-grows-because-of-crawl-logs-and-cause-crawl-performance/ )

$ssa = Get-SPServiceApplication -Name "Search Service Application"

$ssa.CrawlLogCleanUpIntervalInDays = 1

$ssa.Update()

Run the timer job

$job = Get-SPTimerJob | where-object {$_.name -match "Crawl log"}

$job.runnow()

This cleans up the following tables: MssCrawlUrlLog, MssCrawlHostsLog, and Msscrawlhostlist, for all records older than "CrawlLogCleanUpIntervalInDays".

Solutions : How to Correct or Prevent this issue?

1. Clean-up Directories

Draw up a list of the folders that may exceed MAX_PATH:

For this purpose you can use the "Path Length Checker" tool made by Daniel Schroeder on CodePlex, or the DirectoryListing.ps1 script on Script Center.

Clean up the file system so that no path in the file share goes beyond the 259(+0)-character limit.

If your paths are too long, your files are probably not organized effectively.

Rename or shorten the local path and the UNC share name.

Use subst to mount a deep folder under a shorter name.

Do not forget that renaming folders or shares will break shortcuts and registry entries that point to them.
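If neither tool is at hand, a short script can produce a first inventory. This is a minimal sketch, not a replacement for the tools above: Get-LongPath is a hypothetical helper name, and the 259-character default is the limit assumed in this article. Scan the same UNC root the crawler uses, because the length that matters is the length of the UNC path. Depending on the PowerShell and .NET version, enumeration itself can fail below very long folders; the function reports such failures as a warning instead of silently skipping them.

```powershell
# Hypothetical helper: lists files and folders whose full path exceeds the limit.
function Get-LongPath {
    param(
        [Parameter(Mandatory)][string]$Root,
        [int]$Limit = 259   # assumption: usable characters, null terminator excluded
    )
    # Collect enumeration errors instead of stopping at the first one.
    $found = Get-ChildItem -LiteralPath $Root -Recurse -Force `
                 -ErrorAction SilentlyContinue -ErrorVariable scanErrors |
        Where-Object { $_.FullName.Length -gt $Limit } |
        Select-Object @{ Name = 'Length'; Expression = { $_.FullName.Length } }, FullName |
        Sort-Object Length -Descending

    if ($scanErrors) {
        Write-Warning "$($scanErrors.Count) item(s) could not be enumerated; check the deepest folders manually."
    }
    $found
}

# Example (share name taken from this article's lab):
# Get-LongPath -Root '\\filesrv\share' | Format-Table -AutoSize
```

The longest paths are listed first, so the folders that need renaming most urgently appear at the top.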

2. Upgrade to SharePoint Server 2016

SharePoint Server 2016 can index file shares whose paths are longer than 260 characters (the maximum supported length is unknown).

The same crawl path and configuration result in ErrorID 0 (Crawled). However, other limitations apply: the Open, Send, Follow, and Edit actions will not work.

In addition, Internet Explorer 11 will not open a link to the file system when the URL is longer than 259(+0) characters.

When a user tries to open an indexed UNC file through Internet Explorer, the link is broken and an HTTP 404 error is displayed.

Additional Fixes

Manipulating SharePoint databases directly is not recommended. If you want to get rid of the errors that fill the SQL database, use one of the following options instead:

- Run an index reset on the impacted Search Service Application.

- Run the "Crawl Log Cleanup for Search Application Search Service Application" timer job (see above).

Note: Be sure to correct the problematic MAX_PATH folders before running the index reset and launching a full crawl again.

Additional Notes

Internet Explorer and Max Path (260)

As per this blog post

Beyond the underlying limitations, the User Interface also enforces various limits around URL length. For instance, the browser address bar is capped at 2047 characters. Start > Run is limited to 259 characters (the MAX_PATH limit of 260, which leaves one character for the null-terminator). Internet Explorer versions up to version 11 do not allow you to bookmark URLs longer than 260 characters. The Windows RichEdit v2 control's Automatic Hyperlink behavior (EM_AUTOURLDETECT) truncates the link after around 512 characters; this limit is higher in RichEdit5W controls.

Using the 8dot3name (Short Name)

If you have folders with long names, you can use the 8dot3name when crawling a content source \server\share\8dot3name%.

This allows you to crawl long folder URLs without any (known) impact on recent browsers or Office applications. However, this is rarely seen in practice, and shorter folder names remain preferable.

Using Cloud Search Service Application(2013)

I believe this can work in SharePoint Server 2013 with the new Cloud SSA, although I have not managed to make it work correctly. Support for crawling MAX_PATH items may be included in the OSearch16.FileHandler.1

Conclusions

- Having folders beyond the 260-character MAX_PATH limit is not a good idea. Avoid it.

- Crawling a file share with paths beyond MAX_PATH works poorly in SharePoint 2013.

- If you encounter this issue, an additional fix or a reorganization of the file share may be required.

- Support is best in SharePoint Server 2016 (with browser limitations).

See Also

Other Resources

- MSDN : Naming Files, Paths, and Namespaces

- MSDN : Maximum Path Length Limitation

- TechNet : SharePoint Server 2010 : URL path length restrictions

- TechNet : SharePoint Server 2010 : Manage deletion of index items

- BLOG : Crawl Database grows because of crawl logs and cause crawl performance

- BLOG : URL Length Limit