SQL Server 2016 Polybase: How to Query Unstructured Data

Scope

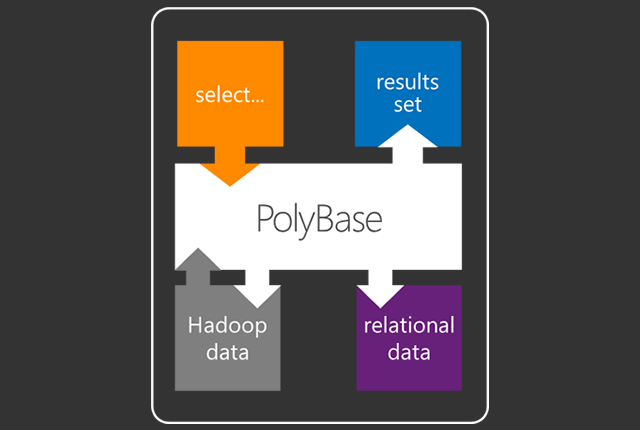

The following article demonstrates how unstructured data and relational data can be queried, joined and processed in a single query using PolyBase, a new feature in SQL Server 2016.

Pre-Requisites

- Introduction to Big Data Analytics Using Microsoft Azure

- Big Data Analytics Using Hive on Microsoft Azure

- Analyze Twitter Data With Hive in Azure HDInsight

- Running Hadoop on Linux using Azure HDInsight

Introduction

Traditionally, Big Data is processed using Apache Hadoop which is totally fine. But what if the result of this needs to be linked to the traditional Relation Database? For example, assume that from the analysis of tons of application logs, marketing needs to contact some customs that faced problems in an application following a failure in the application.

This problem is solved with PolyBase. PolyBase allows you to use Transact-SQL (T-SQL) statements to access data stored in Hadoop or Azure Blob Storage and query it in an ad-hoc fashion. It also let you query semi-structured data and join the results with relational data sets stored in SQL Server. PolyBase is optimized for data warehousing workloads and intended for analytical query scenarios.

Moreover, the user querying from T-SQL does not have to worry about Map-Reduce jobs processing the unstructured data, all this processing is transparent to the user.

Installing PolyBase

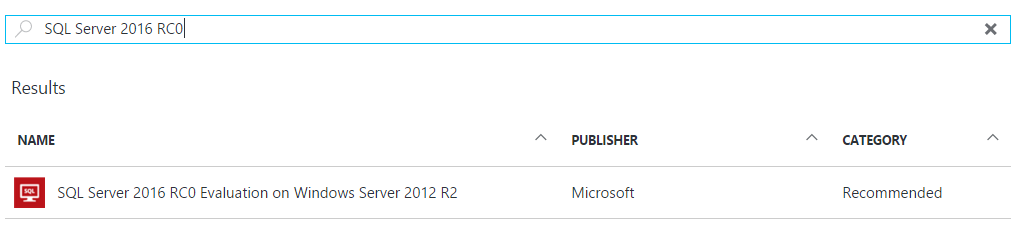

In this article, SQL Server 2016 RC0 is used and it is used in an Azure VM that is already pre-configured and can be provisioned at any time.

1. Ensure all the required software are installed:

- 64-bit SQL Server Evaluation edition

- An external data source, either Windows Azure blob storage, or a supported Hadoop version (see Choose a Hadoop version or Azure Blob storage using sp_configure).

- Microsoft .NET Framework 4.0. Go to the download center

- Oracle Java SE RunTime Environment (JRE) version 7.51 or higher (64-bit). Go to downloads. The installer will fail if JRE is not present.

- Minimum memory: 4GB

- Minimum hard disk space: 2GB

2. Install PolyBase

Go to Control Panel > Add/Remove programs > Microsoft SQL Server 2016 and click on Uninstall/ Change. Then click on Add and browse to the setup of SQL Server 2016 (64-bit).

On an Azure VM, the set-up is found at C:\SQLServer_13.0_Full

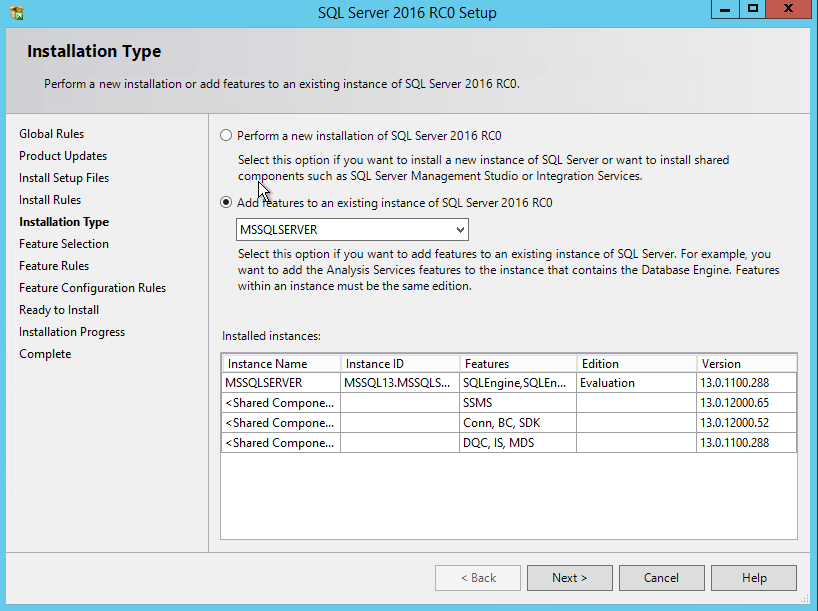

3. Add PolyBase feature to the existing SQL Server installation

**

**

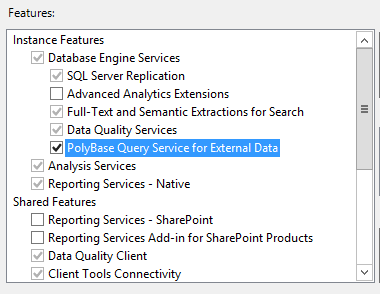

Then install PolyBase Query Service for External Data

4. Verify installation:

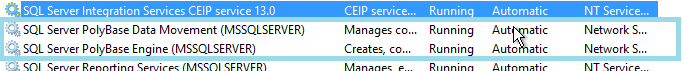

a. In services.msc, the 2 services below should be running.

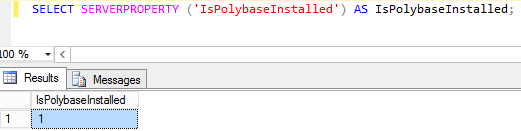

b. Verify Polybase installation in SSMS

Run the following query to view the status of the PolyBase installation.

1 = Installed

0= Not installed

Configure PolyBase to connect with an existing Big Data Solution

1.Create a Master Key Encryption

The database master key is a symmetric key used to protect the private keys of certificates and asymmetric keys that are present in the database.

When it is created, the master key is encrypted by using the AES_256 algorithm and a user-supplied password.

CREATE MASTER KEY ENCRYPTION BY PASSWORD=;

2. Create a Database-Scoped Credential

The credential is used by the database to access to the external location anytime the database is performing an operation that requires access.

CREATE DATABASE SCOPED CREDENTIAL HadoopUser1

WITH IDENTITY = ‘xxxx’,

Secret = ‘xxxxxx’;

Identity= Storage Account Name

Secret = Storage Account Key

**3. Reconfigure Hadoop Connectivity **

Reconfigure updates the currently configured value (the config_value column in the sp_configure result set) of a configuration option changed with the sp_configure system stored procedure.

In this example, it will change the global configuration settings for PolyBase Hadoop and Azure blob storage connectivity.

Below are the connections that can be used at the time of writing:

- Option 0: Disable Hadoop connectivity

- Option 1: Hortonworks HDP 1.3 on Windows Server

- Option 1: Azure blob storage (WASB[S])

- Option 2: Hortonworks HDP 1.3 on Linux

- Option 3: Cloudera CDH 4.3 on Linux

- Option 4: Hortonworks HDP 2.0 on Windows Server

- Option 4: Azure blob storage (WASB[S])

- Option 5: Hortonworks HDP 2.0 on Linux

- Option 6: Cloudera 5.1, 5.2, 5.3, 5.4, and 5.5 on Linux

- Option 7: Hortonworks 2.1, 2.2, and 2.3 on Linux

- Option 7: Hortonworks 2.1, 2.2, and 2.3 on Windows Server

- Option 7: Azure blob storage (WASB[S])

In this example, since Azure Blob Storage is being used, option will be used.

Sp_configure ‘hadoop connectivity’, 1;

reconfigure;

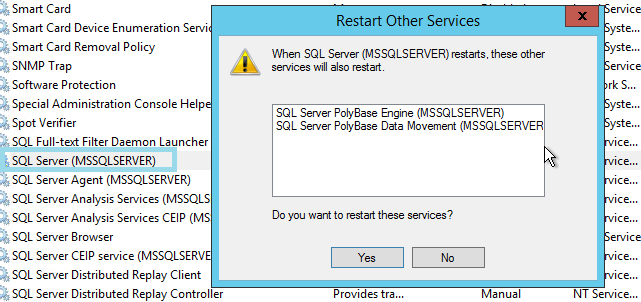

The next step is to restart the MSSQLSERVER service. If this is not done, several errors will be encountered during the next steps.

When this service is being restarted, the PolyBase services will also be restarted.

**

4. Create an External Data Source**

This defines from which source to fetch and process the data.

CREATE EXTERNAL DATA SOURCE AzureDs1

WITH (

TYPE = HADOOP,

--Specifiy the container name and account name

LOCATION = 'wasbs://X@Z.blob.core.windows.net/',

--Specify the credential that we created earlier

CREDENTIAL = HadoopUser1

);

X= Hadoop cluster name

Z= Storage account name

5. Create a File Format

This is a prerequisite for creating the actual layout of the data in the external table and defines the structure of the file to be read.

PolyBase supports these file formats:

• Delimited text

• Hive RCFile, and

• Hive ORC

CREATE EXTERNAL FILE FORMAT CommaFormat

WITH (

FORMAT_TYPE = DELIMITEDTEXT,

FORMAT_OPTIONS (FIELD_TERMINATOR =’,’)

);

**

6. Test - load and query sample data from Azure Blog Storage**

CREATE EXTERNAL TABLE Hvac (

Date varchar(10),

Time varchar(10),

TargetTemp smallint,

ActualTemp smallint,

SystemID smallint,

SystemAge smallint,

BuildingID smallint

)

WITH (

--Set the file to be the HVAC sensor sample file

LOCATION=’/HdiSamples/SensorSampleData/hvac/HVAC.csv’,

DATA_SOURCE = AzureDs,

FILE_FORMAT = CommaFormat,

--We will allow the header to be rejected

REJECT_TYPE = VALUE,

REJECT_VALUE = 1

);

Example: Analyzing Application Logs

Assume someone has a business running and has been storing all his application logs.

Someday, the application got a severe error on one of the most critical areas and the management decides to contact all the customers that tries to access the Application at that time.

Since, the database is down, the only way to track these customers were through the logs. But now, how to get the details of these customers?

This problem can be easily solved with PolyBase.

The following example demonstrates the analytics and BI capabilities of joining both the relational and unstructured data using PolyBase.

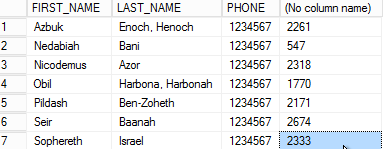

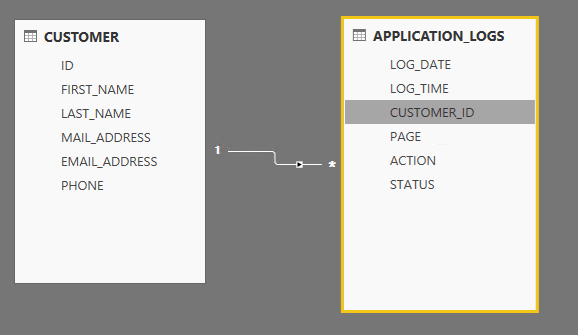

Relational Customer table

The following is a relational customer table where all the customer data are stored on-premise.

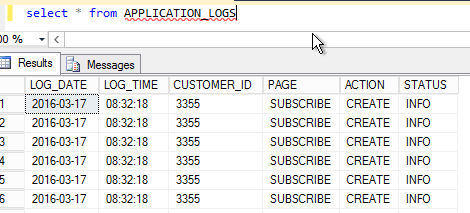

Application Logs on Azure Blob Store

The following is the raw dump of application logs stored on the Azure blob storage.

**

- Create external table for the logs

**

CREATE EXTERNAL TABLE APPLICATION_LOGS (

[LOG_DATE] varchar(50),

[LOG_TIME] varchar(50),

[CUSTOMER_ID] int ,

[PAGE] varchar(50),

[ACTION] varchar(50),

[STATUS] varchar(50)

)

WITH (

--Set the file to be the HVAC sensor sample file

LOCATION='/ApplicationLogSample.txt',

DATA_SOURCE = AzureDs,

FILE_FORMAT = CommaFormat,

--We will allow the header to be rejected

REJECT_TYPE = VALUE,

REJECT_VALUE = 1

);

2. View all customers that got an error on the EPayment Page and their contact details

**

**

select

CUST.[FIRST_NAME],

CUST.LAST_NAME,

CUST.PHONE,

COUNT(1)

from APPLICATION_LOGS APPLO

inner join [dbo].[CUSTOMER] CUST

ON CUST.[ID] = APPLO.CUSTOMER_ID

WHERE APPLO.STATUS='ERROR'

AND PAGE = 'EPAYMENT'

GROUP BY CUST.[FIRST_NAME],

CUST.LAST_NAME,

CUST.PHONE

HAVING COUNT(1)>3

**

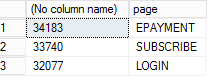

3. Fetch the features that got more than 10 errors

**

select

count(1),

page

from APPLICATION_LOGS

group by page

having count(1) > 10

4. Migrate data from the Blob storage to a relational table

**

**

SELECT * INTO

RELATIONAL_LOGS

FROM

APPLICATION_LOGS;

5. Transparent BI experience with both the unstructured and relational data using PowerBI Desktop

a. Connect to SQL Server Database

b. Data can be imported both from the structured and external unstructured tables

c. Relationships can also be defined between the tables

**

**

d. Creates Dashboard using any of the data seamlessly

**

**

Conclusion

PolyBase builds a bridge between the Relation and Unstructured data. Moreover, it reduces the complexity of Big Data Analytics whereby developers can use their existing SQL knowledge which translates into Map Reduces Jobs in the back-end. Once, the configurations are done and the tables are represented in SQL Server, all the data, both classic and external data can be queried using SQL and also explored using Power BI or any other BI tool seamlessly.

References

- https://msdn.microsoft.com/en-us/library/mt143171.aspx

- https://msdn.microsoft.com/en-us/library/mt163689.aspx

- https://msdn.microsoft.com/en-us/library/ms174382.aspx

- https://msdn.microsoft.com/en-us/library/mt270260.aspx

- https://msdn.microsoft.com/en-us/library/mt143174.aspx

- https://msdn.microsoft.com/en-us/library/dn935022.aspx