Solving the Number One Problem In Computer Security: A Data-Driven Defense whitepaper

Foreword

In today’s environment, information security executives face the challenge of protecting company assets by optimally aligning defenses with an ever-increasing number of threats and risks. Often, organizations make considerable investments in protection without using a risk-based approach to prioritize them. This leads to ineffective security controls and an inefficient use of resources. Information security organizations collect a tremendous amount of data about IT environments. For some organizations, activities occurring on those IT infrastructures exceed ten billion events a day. In other words, considerable information is available about the environments we manage, and it’s that data that can help us make informed decisions.

In support of these challenges, considerable improvement in rigor and process is necessary to inform and make better business decisions.

This whitepaper draws upon hundreds of engagements with Microsoft clients, as well as internal security operations, culminating in a framework for dramatically improving operational security posture. The methods discussed are based largely on the experience of Microsoft’s Information Security and Risk Management (ISRM) organization, which is accountable for protecting the assets of Microsoft IT and other Microsoft Business Divisions, and for advising a selected number of Microsoft’s Global 500 customers.

The framework described utilizes a data-driven approach to optimize investment allocation for security defenses and significantly improve the management of risk for an organization.

Joseph Lindstrom – Sr. Director, Microsoft Information Security & Risk Management

Acknowledgements

Author

- Roger A. Grimes

Reviewers

- Shahbaz Yusuf

- Ashish Popli

- Joseph Lindstrom

Contributors

- Kurt Tonti

- Mark Simos

- Adam Shostack

Wiki Layout

- Peter Geelen

MICROSOFT MAKES NO WARRANTIES, EXPRESS OR IMPLIED, IN THIS DOCUMENT.

Complying with all applicable copyright laws is the responsibility of the user. Without limiting the rights under copyright, no part of this document may be reproduced, stored in or introduced into a retrieval system, or transmitted in any form or by any means (electronic, mechanical, photocopying, recording, or otherwise), or for any purpose, without the express written permission of Microsoft Corporation.

Microsoft may have patents, patent applications, trademarks, copyrights, or other intellectual property rights covering subject matter in this document. Except as expressly provided in any written license agreement from Microsoft, our provision of this document does not give you any license to these patents, trademarks, copyrights, or other intellectual property.

The descriptions of other companies’ products in this document, if any, are provided only as a convenience to you. Any such references should not be considered an endorsement or support by Microsoft. Microsoft cannot guarantee their accuracy, and the products may change over time. Also, the descriptions are intended as brief highlights to aid understanding, rather than as thorough coverage. For authoritative descriptions of these products, please consult their respective manufacturers.

© 2015 Microsoft Corporation. All rights reserved. Any use or distribution of these materials without express authorization of Microsoft Corp. is strictly prohibited. Microsoft and Windows are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries.

The names of actual companies and products mentioned herein may be the trademarks of their respective owners.

Gallery

You can get your offline copy of this white paper at: https://gallery.technet.microsoft.com/Fixing-the-1-Problem-in-2e58ac4a.

Executive summary

Many companies do not appropriately align computer security defenses with the threats that pose the greatest risk to their environment. The growing number of ever-evolving threats has made it more difficult for organizations to identify and appropriately rank the risk of all threats. This leads to inefficient and often ineffective application of security controls.

The implementation weaknesses described in this white paper are common to most organizations, and point to limitations in traditional modeling of and response to threats to computer security. Most of the problems occur due to ranking risk inappropriately, poor communications, and uncoordinated, slow, ineffectual responses.

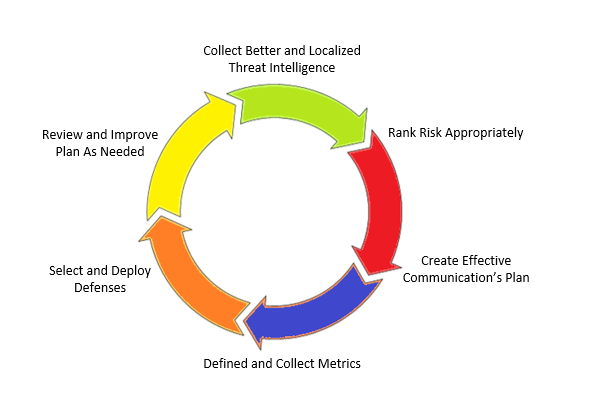

This paper proposes a framework that can help organizations more efficiently allocate defensive resources against the most likely threats to reduce risk. This new data-driven plan for defending computer security follows these steps:

- Collect better and localized threat intelligence

- Rank risk appropriately

- Create a communications plan that efficiently conveys the greatest risk threats to everyone in the organization

- Define and collect metrics

- Define and select defenses ranked by risk

- Review and improve the defense plan as needed

The outcome is a more efficient appropriation of defensive resources with measurably lower risk. The measure of success of a data- and relevancy-driven computer security defense is fewer high-risk compromises and faster responses to successful compromises.

If such a defense is implemented correctly, defenders will focus on the most critical initial-compromise exploits that harm their company the most in a given time period. It will efficiently reduce risk the fastest of any defense strategy, and appropriately align resources. And when the next attack vector cycle begins, the company can recognize it earlier, respond more quickly, and reduce damage faster.

The problem with most computer security defenses: Inefficient alignment of risk and defense

Imagine two armies, good and bad, engaged in a long-term fight on a field of battle. The bad army has successfully managed to compromise the good army’s defenses, again and again, by focusing most of its troops on the good army’s left flank. Surprisingly, instead of rushing to put reinforcements on its left flank, the good army keeps its troops evenly spread, or perhaps decides to put more of its defenders on the right flank. Or worse: it decides to pull troops from the left flank to man anti-aircraft weapons in the center because of rumors that the enemy might one day attack from the sky. Despite continued reports of successful attacks on the left flank, the defending army continues to amass troops nearly everywhere else, and wonders why it is losing the battle.

Most companies do not correctly align defensive resources against the threats that are most successful or most likely to compromise their environments.

No army would survive long ignoring successful attacks. Yet this scenario describes how most companies defend the security of their computer systems. Today, most enterprises do not correctly align their resources—money, labor, and time—against the threats that pose the greatest risk to their computer systems and have been most successful at attacking them.

Computer security defense has always been about identifying threats, determining risk, and then applying mitigations to minimize those risks. Unfortunately, the complexity of numerous threats and their constantly evolving nature has led many defenders to respond too slowly or to focus on the wrong threats.

This misalignment is due to several factors, including that enterprise defenders often fail to:

- Identify in a clear and timely way all the localized threat scenarios they face

- Focus on how initial compromises happen versus what happens afterward

- Understand the relative risks of different threats

- Broadly communicate threats ranked by risk to all stakeholders, including senior management

- Efficiently coordinate agreed-upon responses to risk

- Measure the success of deployed defensive resources against the threats they were defined to mitigate

All these implementation weaknesses lead to misalignment of computer security defenses against the highest risk threats.

A common example of misalignment

Currently, the primary way computers are initially exploited is through unpatched software, with just a few programs responsible for the majority of those exploits. In the recent past, vulnerabilities in unpatched operating systems (OS) were the most likely targets of malicious hackers, but as OS vendors gained success in helping customers patch their OS software, malware writers have turned to targeting applications—in particular, popular Internet browser–related software running on multiple platforms.

In some years, according to the Cisco 2014 Annual Security Report, a single unpatched program was identified as causing the vast majority (as high as 91 percent) of successful web attacks. Although the most exploited software changes over time, and the exact percentages reported vary with the survey used, it seems reasonable to conclude that unpatched software in general, and a few programs in any particular time period, are responsible for most successful web exploits. This seems unlikely to change in the near term.

Another very common successful attack vector is social engineering, whether through phishing emails, rogue web links, or other means. Many of the world’s most damaging enterprise attacks have begun with a social engineering attack, which led from elevated credential compromise to malicious access of critical resources.

In today’s threat environment, it is clear that unpatched software and social engineering are two of the biggest threats to most organizations. Unless defenders can demonstrate that their environments are less susceptible to these risks than those of their peers, it seems reasonable to conclude that most companies should significantly improve patching, particularly of the most exploited programs, and work hard to decrease the potential success of social engineering attempts as their primary defense strategies.

Unfortunately, however, most companies don’t do this. Instead of prioritizing the most problematic programs, companies patch them no differently from every other program. Often the highest risk programs are not patched at all, or are patched at significantly lower rates due to a variety of factors (which is why those very programs are so highly targeted by attackers). So although a company may report a fairly high level of overall patching compliance, that figure often hides very low levels of patching compliance on the programs most likely to be exploited. A company reporting 95 percent overall patch compliance may therefore not be showing (or even be aware of) the real risk caused by a few missing patches.
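To make the gap between overall and high-risk patch compliance concrete, here is a minimal sketch. The program names, the counts, and the choice of which program is "high risk" are all invented for illustration; they are not taken from any Microsoft data.

```python
# Hypothetical per-program counts: (installed copies, patched copies).
# All names and numbers are invented for illustration.
patch_counts = {
    "operating system": (1000, 990),
    "office suite": (1000, 980),
    "browser plug-in": (200, 120),
}

# Assumption: local incident data shows this program is the one most often exploited.
high_risk_programs = {"browser plug-in"}


def compliance(counts, programs=None):
    """Return patched / installed across the given programs (all programs if None)."""
    names = counts.keys() if programs is None else programs
    installed = sum(counts[name][0] for name in names)
    patched = sum(counts[name][1] for name in names)
    return patched / installed


overall = compliance(patch_counts)
high_risk = compliance(patch_counts, high_risk_programs)
print(f"Overall compliance:   {overall:.0%}")
print(f"High-risk compliance: {high_risk:.0%}")
```

With these made-up numbers the overall figure is 95 percent while the high-risk program sits at 60 percent, which is the kind of gap a single headline compliance number can hide.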

In most enterprises, patch management is left up to one or two employees who are rewarded based on their overall patching rate (or perhaps their patching rate for lower risk operating system patches), rather than how well they patch the highest-risk programs.

Indeed, patch management employees are often prevented from patching the very programs that would provide the most protection. Despite the continued presence of high risk attacks, most companies still tolerate large percentages of unpatched Internet-related software for various, and often substantial, reasons. These include the possibility that critical applications will break if the software is patched, and the lack of real authority given to the employees charged with patching software. It is well accepted in the computer security industry that it's easier to get fired for causing a substantial operational interruption than for deciding to accept residual risk by leaving high-risk programs unpatched.

But many other necessary defenses do not have a significant downside and are unlikely to cause significant operational interruption. For instance, effective end-user education designed to lower the risk of social engineering attacks is woefully under-utilized in most organizations. Even though social engineering is one of the most common attack types, most employees are lucky to get any training to defeat these attacks, or may get 15 to 30 minutes a year. This is not enough in light of the risk the education could mitigate. Often this training is many years old and does not focus on the most likely attacks that employees could face.

Companies which conduct initial social engineering tests against their own employees are often surprised to find that these tests are successful against a large percentage of them. Even when the companies know that a significant portion of their employee base can be fooled by social engineering attacks, rarely does the company then commit the necessary resources to significantly reduce the risk.

The lack of substantial, focused end-user training tends to result from the perceived difficulty of delivering the correct education in enough quantity to substantially affect the organization. Some defenders will even state that some individuals in their organization can never be trained well enough, and that those few individuals will undermine the overall value of the entire educational campaign. In this case, the pursuit of perfection undermines improved education for the majority, which could significantly reduce risk.

So while most companies could most efficiently decrease the highest computer security risks by better patching a few high-risk programs and providing appropriate amounts of anti-social engineering training, few do so. Instead, most companies tend to focus on other defense activities—such as two-factor authentication, intrusion detection systems, and firewalls. While good to implement, they do not directly address the biggest initial compromise vectors. If the biggest problems are not corrected, then other defenses will most likely be inadequate at stopping the highest risk attacks.

In data-driven computer defense (as this paper proposes), the claim that unpatched software and social engineering are the biggest threats would be checked against the enterprise’s actual experience. If confirmed, this would be communicated to all stakeholders, including senior management. Senior management would assign the necessary resources, and give them the authority, to combat the top threats. A special task force might be created to look at the overall problems, discuss mitigations, do testing, and reduce the risk posed by unpatched programs and social engineering. If every stakeholder understood the large threat posed by a few unpatched programs and social engineering, it's doubtful that they would stand by and do nothing (or give software patching and education minor focus).

The top exploit method changes over time

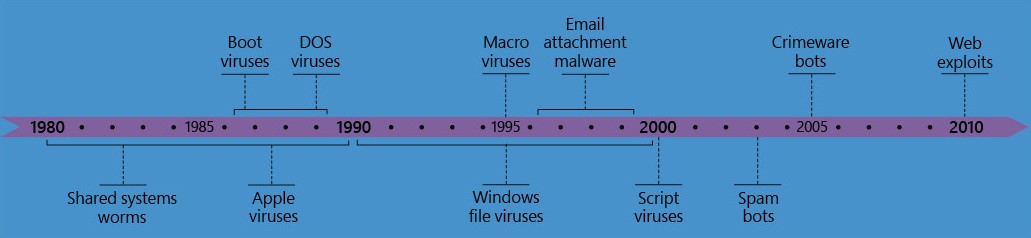

In general, the most popular programs are the ones that are attacked the most. The most successful and popular attack methods also change over time, as illustrated below.

In the late 1980s, it was boot viruses. In the 1990s and the first decade of this century, it was malicious email attachments, which eventually morphed into email with embedded links carrying toxic payloads. After 2010, most malicious compromises have been due to exploited websites and social engineering. A data-driven defense takes this into account and does not overly fixate on a particular threat past its risk window.

General timeline of the most popular malware threats

Within those exploit methods are the actual attack vectors that allowed those methods to be successfully executed, that is, the initial exploit vectors. It's at least as important to recognize how a particular threat got through existing defenses as it is to simply recognize the threat. For example, if a malware program gets executed on a user's workstation, how did it get there? Did it exploit unpatched software or use social engineering? Was it embedded in a website the user visited, or did it crawl across network file shares? Defenders would realize bigger dividends if they concentrated more on how something was accomplished than on what it did after it was executed.

The most successful exploit methods change over time.

How did it get this way?

It isn't natural for an army or a company to ignore responding appropriately to the biggest risks. So how did it get this way?

There are a number of reasons (many beyond the scope of this paper), but they include the sheer number of threats, inadequate ranking of their severity, poor detection metrics, and poor communications. (Some of the best discussion on this subject has been written by the world-renowned computer security expert Bruce Schneier, particularly in his book Beyond Fear: Thinking Sensibly About Security in an Uncertain World.)

Sheer number of threats

In the computer world, new threats of astonishing variety arrive like water from a fire hose. The military analogy that started off this white paper would probably better reflect the digital environment if it showed one army being attacked over and over by every other army in the world, without a break.

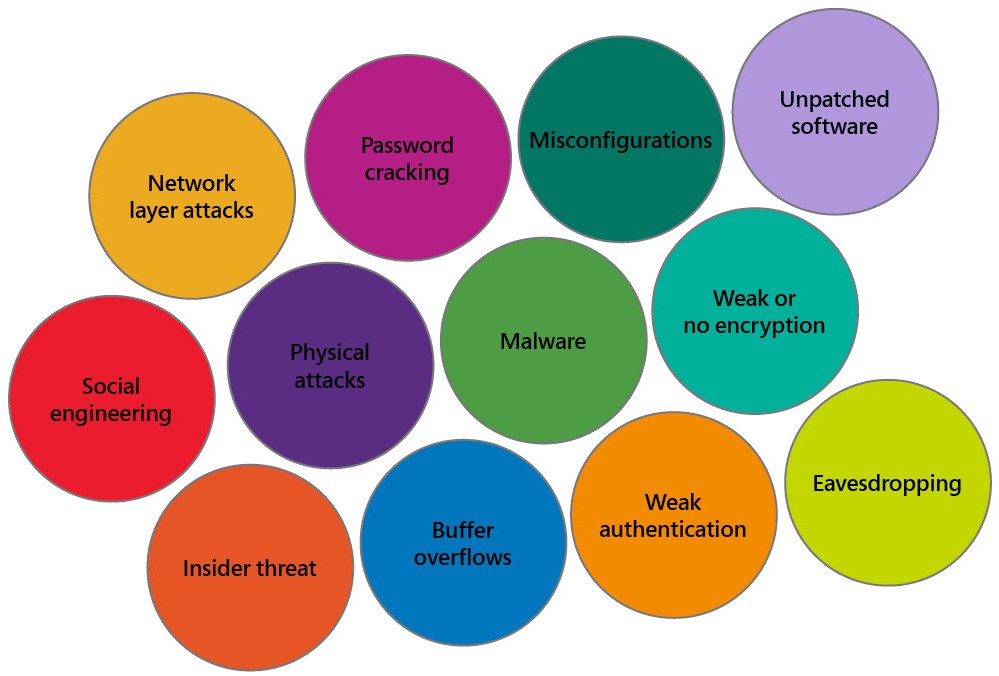

Malicious hackers have generated tens of millions of pieces of unique malware, and there are literally hundreds of different ways to exploit a computer system—malware, password cracking, memory corruption, misconfiguration, and eavesdropping, to name a few. Not only do defenders have to worry about nearly every threat ever found (because old threats rarely go away), but they must address every new exploitation vector that the bad guys create.

To further complicate matters, defenders are often in charge of protecting multiple OS platforms (Microsoft, Apple, Linux, Android, for example) and form factors (PCs, slates, a variety of mobile devices, and cloud threats). Defending against a particular type of attack, or even the same malicious threat, requires different defensive techniques. The end result is an incredible number of new and old priorities competing for limited resources, without the time to give each the consideration it deserves.

Poor ranking of threats

The sheer number of threats makes it harder to efficiently rank which threats to focus on. Because security defenders are besieged by so many threats and have neither the time nor the resources to analyze comparative risk, they see them as illustrated below (“like bubbles in a glass of champagne”), not as relative to the actual risk of each threat to the organization.

Threats are not ranked.

Without a focused set of threats ranked by the localized risk to an organization, it’s easy to see why the defenses mounted would mirror the un- or mis-ranked threats.

Every defense is treated equally.

It would greatly benefit a company to rank each threat based on how likely it is to occur within their own environment, and the actual localized risk to the organization.

Threats ranked by actual risk to the organization—the larger the circle, the greater the threat.

If IT defenders had a clearer picture of the relative organizational risk of each threat or exploit, they could better align their defenses to that risk.

Example of defenses ranked according to how much risk the threat poses to the organization—the larger the circle, the more important the defense.

Poor detection capabilities and metrics

In general, most companies do not do a good job of detecting localized and emerging threats. It’s not that most IT environments don’t have the ability to detect these threats; it’s that they often focus on the wrong things.

Understanding how a company was compromised and how long the compromise went undetected is far more important to a defense plan than names and numbers alone. For example, companies can generate a list of all the malware families that their antimalware software detected and removed. They can present actual numbers and identify the malware programs that were removed. But if they cannot tell you how that same malware was introduced into the environment in the first place—such as through social engineering, unpatched software, or a compromised website or USB drive—or say how long the malware program was in the environment before it was detected, then all those names and numbers are of little use.
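As an illustration of the two questions raised above (how did the malware get in, and how long was it present), the following sketch groups incident records by initial vector and computes the median time to detection for each. The record layout, field names, and sample data are hypothetical; a real environment would draw them from its own incident response records.

```python
from collections import Counter, defaultdict
from datetime import date
from statistics import median

# Hypothetical incident records. Field names and values are invented.
incidents = [
    {"malware": "FamilyA", "vector": "social engineering",
     "introduced": date(2015, 1, 5), "detected": date(2015, 1, 19)},
    {"malware": "FamilyB", "vector": "unpatched software",
     "introduced": date(2015, 1, 10), "detected": date(2015, 1, 12)},
    {"malware": "FamilyA", "vector": "social engineering",
     "introduced": date(2015, 2, 1), "detected": date(2015, 2, 11)},
    {"malware": "FamilyC", "vector": "USB drive",
     "introduced": date(2015, 2, 3), "detected": date(2015, 2, 4)},
    {"malware": "FamilyB", "vector": "social engineering",
     "introduced": date(2015, 3, 1), "detected": date(2015, 3, 31)},
]


def vector_counts(records):
    """Count incidents by the way the malware first entered the environment."""
    return Counter(r["vector"] for r in records)


def median_dwell_days(records):
    """Median number of days between introduction and detection, per vector."""
    dwell = defaultdict(list)
    for r in records:
        dwell[r["vector"]].append((r["detected"] - r["introduced"]).days)
    return {vector: median(days) for vector, days in dwell.items()}


for vector, count in vector_counts(incidents).most_common():
    print(f"{vector}: {count} incidents, "
          f"median dwell {median_dwell_days(incidents)[vector]} days")
```

Sorting by vector rather than by malware family puts the entry point, not the payload name, at the top of the report.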

Microsoft Showcase - Gathering data to identify attack vectors

The Conficker worm in 2008 could propagate using at least three different methods: memory corruption, guessing file share passwords, and running automatically from a USB drive. Initially it was believed that Conficker was spreading due to unpatched software—after all, unpatched software is often the number one problem. The Microsoft Security Intelligence Report Volume 12, however, explained that the key to efficiently eradicating Conficker was understanding how often it was successful using each exploit vector. Microsoft detection methodologies determined that most Conficker infections were the result not of unpatched software, but of poor password policies and autoruns from USB drives.

Microsoft communicated this information in its Security Intelligence Report, and created and distributed a patch that disabled autorun functionality. By focusing on the most common attack vectors, Microsoft was able to help customers significantly decrease the number of Conficker infections.

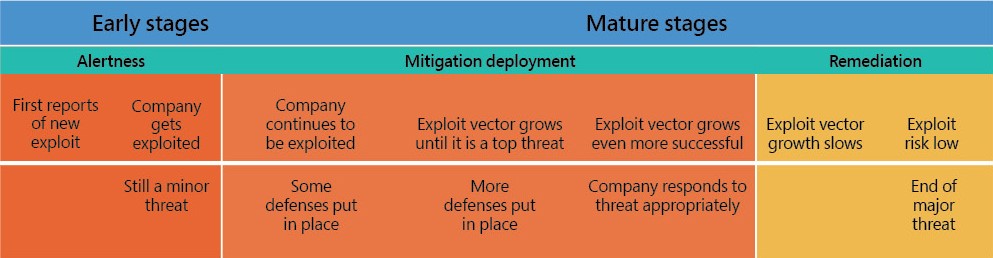

In general, most companies have inadequate metrics and data about successful malicious hacking against their company, in their industry, and even worldwide. Because of this, it often takes months, or even years, for a defender to adequately respond to the biggest threats. And once the correct security defenses have been put in place and are working, the attackers simply change their tactics and start the whole cycle again. The figure below shows the common phases that a particular attack vector and its associated mitigations go through over time.

All computer threats and security defenses undergo a similar cycle of response and remediation with each exploit.

Early on, the company may not be prepared at all to deal with an emerging threat. If the company is not experiencing exploits from the new attack vector, it may even feel that it is somehow immune to the attack. Often by the time the company realizes that the new attack has made a significant negative impact, the exploit is widespread and out of control.

If the exploit continues to spread, the company must eventually respond to it if it wants to survive, and change or increase remediation methods to handle the exploit. Then, the company will begin to get a handle on the exploitation method, decreasing the number of successful exploitations. Eventually, either due to the company’s remediation responses or technology changes, the exploitation method will cease to be a top threat.

Historically, it has often taken longer than it should between the early and mature stages of computer defense. A data-driven defense seeks to minimize the time between initial exploitations and the successful remediation of the threat.

Poor communication of top threats

Can your employees accurately describe the number one threat to your organization?

To determine if your company is a candidate for a data-driven computer security defense, ask if the majority of IT security employees would be able to accurately describe the number one threat to your organization. If you can get anything resembling consensus, and answers that are supported by actual data, your company is rare indeed. IT security employees in most companies cannot consistently name the number one computer security threat that is most successfully exploiting their company, so unfortunately they cannot correctly or efficiently align defensive resources against it.

What a data-driven computer security defense looks like

In a company using a computer security defense model driven by data, every employee would know the top threats that are most successfully exploiting the organization's security. There would be no guessing; everyone could point to the top threats to the company's computer systems.

With a data-driven computer security defense, the IT team would actively collect threat intelligence and appropriately rank the risk to the company of the most likely and critical threats. It would then focus resources on the biggest threats with senior management involvement and approval, and use metrics to track success. The ultimate outcome is a lower number of exploitations and lower computer security risk to the organization.

The key outcome of a data-driven computer security defense is an operational framework that results in faster, more responsive cycles of alertness and mitigation, and a more precise focus on reducing top threats.

When a new threat emerges, the organization is on top of it from the start, measuring the company’s own rate of exploitation from the threat. It is a continuous, faster cycle of alertness, mitigation, and reduction.

Implementing a data-driven computer security defense

The ultimate objective for a computer security defense built on data is to create and implement a framework that assists defenders in creating more timely mitigations that focus on the biggest, most likely threats first. Central to this defense is focusing on threats that are ranked by risk to the specific organization’s computers.

A data-driven computer security defense plan includes the following steps:

- Collect better and localized threat intelligence

- Rank risk appropriately

- Create a communications plan that efficiently conveys the greatest risk threats to everyone in the organization

- Define and collect metrics

- Define and select defenses ranked by risk

- Review and improve the defense plan as needed

Here is a diagram summarizing the key components of a data-driven defense plan:

Collect better and localized threat intelligence

It is impossible to prepare for all threats, and that shouldn’t be the goal. Instead, defenders should focus on the most likely threats to their specific environment. To do that, each defender needs to create and gather threat intelligence from many sources, both inside and outside the company.

Start with your company’s own localized experience, which in general is the most relevant and reliable. Your company will always be susceptible to other threats, but the history of successful exploitations against your company is one of the best data sources; hackers and malware often use attack methods that have worked successfully against your company in the past. The most successful attack methods are in direct response to the organization’s biggest weaknesses.

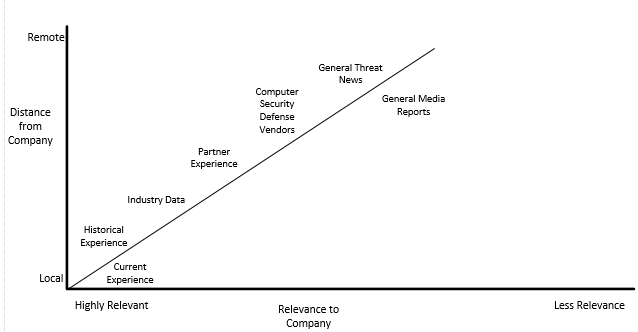

After gathering internal data, get additional data from partners and industry news, and then move out to external vendors and worldwide news feeds. As you move away from the company’s own experience, the data will usually become less relevant. The figure below summarizes the relationship as threat intelligence moves further away.

The Relevance of Threat Intelligence

This is not to say that vendor or general threat information cannot have acute relevance during particular time periods. Common sense must prevail. For example, the first news of a fast-moving Internet threat often comes from external sources far away from the organization. But even in those instances, the threat may or may not be relevant to the organization depending on its existing defenses and platform makeup.

It’s also important to note that a critical vulnerability being found, even thousands of times, does not necessarily make it a critical risk. A typical vulnerability scan in an average organization will reveal thousands of vulnerabilities, with a large percentage of them ranked at the highest criticality. When you identify critical vulnerabilities, it’s important to ask how likely it is that a specific vulnerability will be used against the organization to access valuable resources. Conversely, a lesser-ranked vulnerability should be given higher criticality if it has been used in the past, or is currently being used, against the organization.
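One way to picture this re-prioritization is the sketch below, which orders scan findings so that anything observed being used against the organization outranks findings that are only rated severe by the scanner. The `Finding` type, the 1-to-5 severity scale, and the rule that local exploitation always wins are simplifying assumptions for illustration, not a prescribed scoring method.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str                      # placeholder identifier, not a real advisory ID
    scanner_severity: int          # assumed scale: 1 (low) to 5 (critical)
    seen_exploited_locally: bool   # used against this organization, past or present


def prioritize(findings):
    """Order findings: locally exploited first, then by scanner severity."""
    return sorted(
        findings,
        key=lambda f: (f.seen_exploited_locally, f.scanner_severity),
        reverse=True,
    )


# Hypothetical scan results.
scan = [
    Finding("VULN-A", scanner_severity=5, seen_exploited_locally=False),
    Finding("VULN-B", scanner_severity=3, seen_exploited_locally=True),
    Finding("VULN-C", scanner_severity=5, seen_exploited_locally=False),
]

for finding in prioritize(scan):
    print(finding.name, finding.scanner_severity, finding.seen_exploited_locally)
```

Here the medium-severity VULN-B moves to the top of the queue because it has actually been used against the organization, while the two "critical" findings with no local history follow it.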

The concept of threat intelligence localization is raised in this paper because most organizations neglect their own data, which is their best. By beginning with their own experience, organizations are better placed to respond to the most likely threat scenarios in most time periods.

Microsoft Threat Intelligence Resources

Many software and antimalware vendors have data feeds specifically for sharing threat intelligence. Some are free, but most are paid services. Microsoft offers many free resources that provide good, timely threat information. In particular, the Microsoft Security Intelligence Reports, published about once a quarter, provide specific threat information, including regional information, along with specific defense recommendations.

Microsoft offers many free data sources for threat intelligence.

- Microsoft Internet Safety & Security Center

- Microsoft Malware Protection Center

- Microsoft Security Intelligence Report

- Microsoft Malware Protection Center blog

- Microsoft Threat Reports

- Microsoft Cyber Trust Blog

- Microsoft Security Response Center blog

- Microsoft Security Research and Defense Blog

- Microsoft Security Newsletter

Large organizations should assign intelligence data aggregation to an employee or team who can subscribe to and follow multiple security newsletters and RSS feeds, and create news pages that aggregate the search results.

Rank risk appropriately

Ranking risk is often described as evaluating the likelihood of a particular event happening, multiplied by the potential damage. Every model of risk management (including ISO 31000:2009) contains a section on determining the likelihood of a particular threat, which then leads directly to developing defenses that are ranked by risk.
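The likelihood-times-damage description can be sketched in a few lines. The threat names, likelihood estimates, and damage figures below are invented; in practice they would come from the organization's own collected data and metrics.

```python
# Hypothetical threats: (estimated likelihood per year, estimated damage in dollars).
# All values are invented for illustration.
threats = {
    "social engineering": (0.50, 300_000),
    "unpatched browser plug-in": (0.60, 200_000),
    "widely publicized but rare attack": (0.01, 1_000_000),
}


def rank_by_risk(candidates):
    """Return (name, risk) pairs sorted from highest to lowest risk.

    Risk is computed as likelihood multiplied by potential damage.
    """
    scored = {
        name: likelihood * damage
        for name, (likelihood, damage) in candidates.items()
    }
    return sorted(scored.items(), key=lambda item: item[1], reverse=True)


for name, risk in rank_by_risk(threats):
    print(f"{name}: {risk:,.0f}")
```

In this made-up data set the attack with the largest potential damage ranks last, because its low likelihood outweighs its impact.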

Unfortunately, humans, if left to their own “gut instincts” or beliefs, will often inappropriately rank risks, not only in IT, but in their daily lives. For example, most people fear plane crashes and shark attacks more than they do the car ride to the locations of those activities, even though the car ride is thousands of times more likely to cause injury or death.

It’s often the same with computer security. IT professionals will often rank the most publicized threats above the attacks that are consistently and most successfully used against them. Oftentimes managers will ask front-line employees what they are doing to prevent a threat they have heard or read about versus asking what successful attacks the front-line employees are seeing the most. Or a manager will ask employees to drop what they are doing to defend against a (rarer) attack vector successfully exploited by a penetration tester against the organization before they are finished defending against the most likely attacks perpetrated by the more prevalent attackers in the wild.

Because of the chance of inappropriate risk assessment, risk must be assigned by using collected data and metrics to determine relevance.

How Microsoft Cybersecurity ranks threats to the company

Microsoft recommends assigning higher percentages of likelihood to malicious events that have occurred, are occurring, or are likely to recur at your company, unless you have taken sufficient steps to ensure that they don’t happen again. Companies are routinely re-exploited using methods that have been successful in the past; this is often a result of what attackers are using against the company (each attacker has their own go-to, often-used set of attack tools) as well as an expected outcome when customers have gaps or weaknesses in a particular defense.

In general, Microsoft gives greater weight to past, current, and industry threats than to broad attacks on many industries or theoretical attacks which have not yet happened. Industry-specific attack campaigns are common. For example, if attackers targeting one energy company are successful, they are more likely to capitalize on energy industry–specific weaknesses against other energy companies.

This analysis must, of course, be compared with the success of those particular threats and attacks and how much damage they caused. For example, if your defense system cleans up every malware program before it has been able to execute on critical computers or devices, it’s less likely that future malware attacks of the same nature will have a significantly different impact. If, however, the opposite is true—that your company has suffered great damage due to undetected malware—then your company should give greater importance to such malware attacks in the future.

Appropriate risk assessment also means using common sense to recognize when external risks force a change of approach, for example when vendors and national public resources announce a new critical threat that is likely to spread soon and fast in any environment. This has happened a few times in recent history, including ahead of the MS-Blaster worm. Microsoft and other agencies warned of a recently discovered critical vulnerability and advised everyone to patch their systems. A few weeks later, a computer worm exploiting that vulnerability quickly made its way around the world, infecting millions of unpatched computers.

Use your company’s unique history as the starting point of predicting future malicious attacks, and then modify that prediction based on other external threats. |

Additionally, there is always a chance that a relatively unknown exploit could spread rapidly without any warning. This was the case with the 2003 SQL Slammer worm, which was released in the early hours of a weekend morning in North America, and infected most of the unpatched SQL servers on the Internet in the first 10 minutes. By the time most defenders woke up, it was too late to prevent the damage.

The idea is to use your company’s unique history as the starting point of predicting malicious attacks, and then modify that prediction based on other external threats. Although an externality may override your company’s own exploitation history, in the absence of competing priorities it’s best to use your company’s own history as the starting point for ranking risk.
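The weighting described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the source categories, the weight values, the `Threat` fields, and the sample threats are all hypothetical, not figures or tooling that Microsoft publishes.

```python
from dataclasses import dataclass

# Hypothetical weights: threats from your own history count most,
# industry-specific threats next, broad and theoretical threats least.
SOURCE_WEIGHTS = {
    "own_history": 1.0,
    "industry": 0.6,
    "broad": 0.3,
    "theoretical": 0.1,
}


@dataclass
class Threat:
    name: str
    source: str                            # key into SOURCE_WEIGHTS
    successful_incidents: int = 0          # successes against your company in the review period
    urgent_external_warning: bool = False  # e.g. a vendor alert about a fast-spreading flaw


def likelihood_score(threat: Threat) -> float:
    """Relative, unitless score used only to order threats."""
    return SOURCE_WEIGHTS[threat.source] * (1 + threat.successful_incidents)


def rank_threats(threats):
    """Urgent external warnings override history; otherwise sort by score."""
    return sorted(
        threats,
        key=lambda t: (t.urgent_external_warning, likelihood_score(t)),
        reverse=True,
    )


threats = [
    Threat("Unpatched Java exploited via web", "own_history", successful_incidents=12),
    Threat("Industry phishing campaign", "industry"),
    Threat("Theoretical firmware attack", "theoretical"),
    Threat("Newly announced wormable vulnerability", "broad", urgent_external_warning=True),
]

for t in rank_threats(threats):
    print(f"{likelihood_score(t):6.1f}  {t.name}")
```

The company's own history sets the starting order, and the `urgent_external_warning` flag stands in for the kind of externality that can override it.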

If you have a hard time developing your own threat model, consider using the Microsoft Threat Modeling Tool, or the Microsoft STRIDE Threat Model, a classification scheme for characterizing known threats. The Open Web Application Security Project also offers an overview of the approaches to threat risk modeling.

Developing attack scenarios

Creating attack scenarios for the different ways a remote or insider attacker could compromise your environment can help in determining threats and risks. For example, consider scenarios in which:

- An attacker launches a massive distributed denial of service (DDoS) attack against your company’s biggest websites.

- An attacker defaces your company's website.

- An attacker uses phishing to obtain company credentials.

- Hackers exploit unpatched software to give them remote access to end-user workstations.

- An attacker gives or sells a customer database to your competitors.

- An attacker uses password hash dumping tools to obtain all Active Directory credentials.

- A virus deletes random files on the main corporate network.

- An attacker obtains access to protected source code.

It’s important to select the risk scenarios that are most likely to occur and to result in critical damage to your company. This is the point where most computer defense plans misalign defenses. Remember, there is a big gulf between the critical threats that have been discovered and the ones that are most likely to occur in your environment.

Start by documenting and using the scenarios that have most recently occurred, and then work outward, as with the intelligence data feeds, from the more relevant scenarios to the less relevant ones. Use attack scenarios to help calculate threat likelihoods and the cost of damage.
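One common way to combine likelihood and damage cost is an expected-loss figure: estimated occurrences per year multiplied by estimated cost per occurrence. The whitepaper does not prescribe this formula, and the rates and dollar amounts below are invented purely for illustration.

```python
# Each entry: (scenario, estimated occurrences per year, estimated cost per occurrence in USD).
# All numbers are hypothetical placeholders; substitute your own incident data.
scenarios = [
    ("Phishing yields company credentials", 6.0, 40_000),
    ("Unpatched software gives remote access to workstations", 10.0, 15_000),
    ("Company website defaced", 0.5, 20_000),
    ("DDoS attack against public websites", 0.2, 100_000),
]


def expected_annual_loss(rate_per_year: float, cost_per_event: float) -> float:
    """Expected yearly loss for one scenario."""
    return rate_per_year * cost_per_event


# Rank scenarios from highest to lowest expected yearly loss.
ranked = sorted(
    scenarios,
    key=lambda s: expected_annual_loss(s[1], s[2]),
    reverse=True,
)

for name, rate, cost in ranked:
    print(f"{expected_annual_loss(rate, cost):>12,.0f}  {name}")
```

A frequent but moderately costly scenario can outrank a rare, dramatic one, which is the misalignment the ranking is meant to expose.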

Microsoft encourages vulnerability and threat modeling both inside and outside the company. For example, Microsoft has been holding its famous BlueHat hacking competition for nearly 14 years. In addition, Microsoft Bounty Programs were recently expanded to include external competitors. In these programs, Microsoft offers direct payments for reporting certain types of vulnerabilities and exploitation techniques. Microsoft also offers cash prizes for employees who come up with the most inventive scenarios for modeling threats.

Defend against the initial compromise and additional tactics

Most malicious attacks have two phases:

- The initial phase is how hackers (or their malware) gained initial access—for example, through unpatched software, social engineering, misconfiguration, or weak passwords. For a defender, this phase is more important than the second phase, because it should directly correlate with contemplated defenses.

- The second phase is what the hackers (or malware) did after the initial compromise. Did they stay on the initial compromised computer or start to move laterally to other computers of the same type, or vertically to computers with different roles? What other exploits and tools did they use?

Not all hacker tactics work in both phases. Preventing an initial compromise calls for different defenses than preventing additional movement or minimizing the subsequent damage.

The concept of the attacker beginning with an initial exploit followed by one or more exploits designed to move laterally or vertically is called exploit chaining. Defenders must try their best to prevent both. If they can prevent the initial compromise, they don’t have to prevent subsequent exploits. Unfortunately, most defenders have a hard time preventing initial compromises, in which case they have to be aware of and defend against both types of exploits.

|

A targeted spear phishing email campaign entices a user to open an email message and click the enclosed web link. The linked page usually contains malicious JavaScript, which installs malware that exploits unpatched Java. The malware connects to its “mothership” computer and is instructed to connect to other servers. This process is repeated (sometimes up to 20 times) until the malware downloader is instructed to download additional remote control software. The remote control software is activated and alerts a waiting human attacker, who now has local access on several computers. The user on one of the compromised computers is running as local Administrator. The attacker uses the Administrator’s permissions to launch additional programs in the Local System context, where they can obtain multiple user names, passwords, and password hashes. One of the passwords belongs to a tape backup service account that is a member of the Domain Admins group and is installed on every computer in the environment. The attacker uses this newly found credential to log on to the nearest domain controller and downloads every Active Directory credential and password hash. Using the new credentials and Pass-the-Hash (PtH) tools, the attacker is able to log on to all the other servers in the environment, and can then view and copy data at leisure. |

Create a communications and mitigation plan

Once you have identified the biggest threats, it’s time to communicate that knowledge throughout the organization so that all stakeholders share a cohesive understanding of those threats. This will help with the allocation of resources and the creation of defenses across the organization.

Communication can be done using daily educational screen pushes, email messages, newsletters, and the like. The end goal of a communications plan is that every employee (from senior management and IT teams to end users and business partners) can correctly identify the biggest security threats to the company and knows how they would participate in remediating them.

There is no “one size fits all.” A communications plan needs to include the right level of data for each stakeholder about the most likely threats (and implemented defenses), and also include ways for everyone to provide updates and feedback to the communications plan and defensive mitigations. Each stakeholder needs to be addressed in the language and metrics they are accustomed to receiving; at the same time, it’s crucial that key critical threats be communicated so everyone is on the same page.

In particular, senior management must be briefed on the most likely critical threats in the environment, as they ultimately decide how much risk the company can afford and what resources to allocate to defend the system. End users, too, need to know the most likely critical threats, as well as how to recognize and prevent them.

Microsoft Security Intelligence Reports are one of the most comprehensive looks at the biggest, most likely threats and how to prevent those threats. Download each report when it is released, and share those lessons with coworkers and employees when relevant to the company’s own experience.

For example, if a company recognized unpatched software as its biggest threat, this finding would be communicated to senior management and to every employee and team. IT security would create a project task force to address why so much unpatched software exists in the enterprise, and develop a mitigation plan that would start with removing software where it is not needed and set up a plan for timely patching of any software that remains. The patch management team would be given the needed authority and rewarded for patching the most exploited programs first. The plan would also provide for reworking applications that depend on the patched software so that security updates do not break them, and put in place defenses specific to the software, such as blocking exploits coming from the Internet. The IT security team would disseminate the remediation plan, as well as progress made, throughout the company.

Define and collect metrics

Once you have ranked your biggest threats by risk, you need to define metrics to measure the success or failure of the current and proposed defenses against specific threats.

- Many companies measure overall software patch status, or even just the status of Microsoft software, but each metric should align to a particular threat or set of threats. Be as specific as you can. For example, the biggest threats usually aren’t a general “unpatched software” or “social engineering”, but specific unpatched programs and email phishing with malicious attachments or links.

- Of course, this specificity means your metrics will almost certainly change over time. The programs and methods that attackers focus on change, and so should your metrics, as previous threats disappear and new ones appear.

- Companies work hard to move their logon authentication mechanisms to two-factor authentication, or at least to longer and more complex passwords—a laudable goal. But it’s important to ask if the most recent critical exploits would have been prevented by longer and more complex passwords or two-factor authentication. In most companies, the answer would be no. So, for example, if unpatched Java is your biggest problem, you would need to measure the percentage of patched Java in the enterprise.

- Similarly, while simple malware infections might not initially seem to be high risk, if persistent attackers use malware to gain their initial foothold, allowing them to quickly compromise the entire domain, then antimalware defenses might need to be moved up.

- It's also helpful to consider which metrics provide the most value to your company. For example, it’s not as important to know how many malware programs your antimalware program blocked in a particular time period as it is to know how many false negatives it had—that is, where the program did not deliver an alert after scanning malware that it should have detected. Blocked malware is like dropped packets on a firewall—each block measures a successful remediation of a problem.

- Metrics should indicate the success or failure of defenses against a specific threat. Use the metrics and information coming back from implementers to help guide future data-driven computer security defense plans. Malware and hackers never stop evolving, so neither will the defense plan.

- No defense system can immediately detect and remove 100 percent of malware. There will always be some malware that is not initially detected and, because of that false negative, is allowed to execute for a certain amount of time. Ultimately, the biggest risk from malware comes from the time between initial execution and detection. But how do you measure that?

Within Microsoft, every Microsoft Windows asset runs Microsoft AppLocker in audit-only mode. This means we record every previously unexpected executable to the local event log. When we find and remove malware, we can compare the malware detection and removal date and time in the antimalware log to the first execution time recorded in the AppLocker events.

This approach is currently in test mode. The eventual idea is to create a metric called Mean Time to Malware Detection, which ultimately correlates to our risk from undetected malware. The smaller that number, the better our detection and the lower our risk. If the metric trends up, we can look to our antimalware detection team for answers.
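A minimal sketch of how such a metric could be computed, assuming the AppLocker audit events and antimalware detections have already been exported into simple records keyed by host and file hash. The record layout, host names, hashes, and timestamps are hypothetical, and this is not Microsoft's internal tooling.

```python
from datetime import datetime, timedelta
from statistics import mean

# Hypothetical export: (host, file hash) -> first execution time
# taken from AppLocker audit-only events.
first_execution = {
    ("PC-01", "hashA"): datetime(2015, 3, 1, 9, 0),
    ("PC-02", "hashB"): datetime(2015, 3, 2, 14, 30),
}

# Hypothetical export: (host, file hash) -> time the antimalware
# product detected and removed the file.
detections = {
    ("PC-01", "hashA"): datetime(2015, 3, 1, 15, 0),  # ran for 6 hours
    ("PC-02", "hashB"): datetime(2015, 3, 3, 8, 30),  # ran for 18 hours
    ("PC-03", "hashC"): datetime(2015, 3, 4, 10, 0),  # no execution record, so skipped
}


def mean_time_to_detection(first_execution, detections):
    """Average gap between first execution and detection, or None if no pairs match."""
    gaps = [
        (detected - first_execution[key]).total_seconds()
        for key, detected in detections.items()
        if key in first_execution and detected >= first_execution[key]
    ]
    return timedelta(seconds=mean(gaps)) if gaps else None


print(mean_time_to_detection(first_execution, detections))
```

Detections with no matching execution record are skipped here on the assumption that the malware was caught before it ran; tracking the value per week or month would show whether the metric is trending up or down.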

Learning from current and prior incidents is critical to understanding what threats have been seen within an environment and in helping to create better monitoring and metrics to detect those exploits faster. Getting help desk and incident response teams to better document and capture this kind of data will dramatically improve your ability to prioritize the best metrics about the most important threats.

Define and select defenses ranked by risk

After you make a list of risk-ranked threats, you can create and select appropriate defenses.

Make sure you implement defenses that will directly and immediately reduce the most critical and most likely threats. For example, in addressing the attack scenario of the spear phishing email that ultimately leads to PtH attacks, customers may conclude that they need stronger authentication (often smart cards) and expensive intrusion detection systems.

Smart cards and multifactor authentication solutions are good for strengthening authentication, but they rarely stop PtH attacks. Once a PtH attacker has your password hash, about the only thing they can’t do is perform a logon that requires your physical smart card. They can still log on remotely to other computers that accept the stolen credentials, map drives, and copy and steal data. And most of the time, intrusion detection systems have a hard time differentiating between malicious behavior by a PtH attacker and what the original holder of the credential might do.

Proposed defenses should be proven to lower the risk they are being proposed to reduce. |

When a defense is proposed, ask the proposer to walk you through how their device or solution would actually stop the attacker in the proposed scenario. Don't take their word for it. Ask the proposer to show details to prove that the defense will work.

The typical company Microsoft advises has dozens of IT security projects and initiatives planned each year. A significant percentage of those projects never get done, and many of those that are done are done suboptimally or do not directly reduce the threat they were intended to remediate. For most companies, it would be far more useful for IT security to focus on a few projects that will directly reduce the biggest threats the fastest.

Give precedence to defenses that stop initial compromises. This is also where you should make sure to consider how the exploit was initially successful against your environment. Stopping the initial compromise is more important than trying to stop a single malware family or malicious hacker, or trying to stop what hackers do once they have compromised your environment.

For example, for attackers to dump password hashes in a Windows environment, they must be running in a local Administrator or Domain Admins security context. Once they have that level of elevated privilege, they can do anything the OS allows: disable all your defenses, create a backdoor user account, or even modify the OS to do things it would never have allowed (in which case the OS is no longer the vendor’s or the user’s). Said another way, you can put down every single PtH attack and still not stop your attacker from successfully owning your environment. But if you stop your attacker from getting Domain Admins or Enterprise Admins privileges, you’ve stopped many attacks.

|

Internally, Microsoft has created a Threat Mitigation Matrix that maps possible threats against current mitigation capabilities. It not only helps identify the gaps, but is also a good process for understanding when new capabilities are needed, and whether a new tool under consideration would cover a gap or is simply overlapping. Microsoft has also developed an internal Threat Monitoring Matrix, which maps threats against the monitoring tools most likely to alert or document a related incident. In this case, every log and tool that can generate an event is mapped against the different types of threats that may impact the environment. Like the mitigation matrix, the monitoring matrix is crucial for identifying gaps and weaknesses. |
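A matrix of this kind can be modeled as a mapping from each threat to the set of controls that address it. Microsoft's internal matrices are not published, so the structure, threat names, and control names below are hypothetical and serve only to show how gaps and overlaps fall out of the mapping.

```python
# Hypothetical mitigation matrix: threat -> set of controls that mitigate it.
mitigation_matrix = {
    "Unpatched Java exploited via web": {"patch management", "web proxy filtering"},
    "Spear phishing with malicious links": {"email filtering", "web proxy filtering"},
    "Password hash dumping": set(),
}


def find_gaps(matrix):
    """Threats that no current control mitigates."""
    return sorted(threat for threat, controls in matrix.items() if not controls)


def assess_tool(matrix, tool_threats):
    """Split the threats a proposed tool addresses into gaps filled and overlaps."""
    gaps = set(find_gaps(matrix))
    fills = sorted(gaps & set(tool_threats))
    overlaps = sorted((set(tool_threats) & set(matrix)) - gaps)
    return fills, overlaps


print("Gaps:", find_gaps(mitigation_matrix))

fills, overlaps = assess_tool(
    mitigation_matrix,
    {"Password hash dumping", "Spear phishing with malicious links"},
)
print("Proposed tool fills:", fills)
print("Proposed tool overlaps:", overlaps)
```

The same shape works for a monitoring matrix by mapping each threat to the logs and tools that would record or alert on it.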

Related reading

- Schneier, Bruce. Beyond Fear: Thinking Sensibly About Security in an Uncertain World, Copernicus Books, 2003

- Boose, Shelly. Key Metrics for Risk-Based Security Management, The State of Security, July 2013

- Grimes, Roger A. 5 reasons why hackers own your organization, InfoWorld, September 2014

- Jacobs, Jay and Rudis, Bob. Data-Driven Security: Analysis, Visualization and Dashboards, Wiley, 2014

- Microsoft Security Intelligence Reports

- Pereira, Marcelo. Human and tech flaws caused data hemorrhage from Dept of Energy. Let’s learn from their mistakes in 2014, January 2014

- Platt, Mosi K. Making Your Security Metrics Work for You, Pivot Point Security, August 2012

- Symantec. Why Take a Metrics and Data-Driven Approach to Security?, Confident Insights Newsletter, December 2012

- Young, Lisa. Tips for Using Metrics to Build a Business-driven Threat Intelligence Capability, ISACA, August 2014