WinRT C#: Developing lexer for Syntax Highlighting

This article explains the process of developing lexer for lexical analysis of source code. Using tokens generated by lexer, one can be able to syntax highlight source code. In this article, I'm developing lexer for Python Programming Language. However with little change in Regular Expression, other programming languages can also be scanned. Throughout the article it will be considered that you have knowledge of Regular Expressions.

Overview

Lexical analysis is a process that converts sequence of characters or source code into meaning-full character strings i.e Token. Lexical analysis is performed by Compilers and Syntax Editors. Lexical analysis is also known as Scanner, Tokenizer or Lexer. Working of lexer is quite simple can be implemented using Regular Expression.

Implementation

Lexers are fairly easy to write correctly and very easy to test. For an instance consider we are interested in finding alphanumeric identifiers. A simple regex pattern i.e [a-ZA-Z_][a-ZA-Z_0-9]* is able to do that so. Implementation of a simple lexer contains a very simple loop that iterates over whole strings:

01.IEnumerator<Token> Tokenize(string script)

02.{

03. int i = 0;

04. int length = script.Length;

05.

06. while (i < length)

07. {

08. // Lexer Logic here

09. }

10.}

To improve performance of this loop, there must be less code under the covers. On each iteration of loop we are going to match string against some rules and on match we will increment i by length of match. Before continuing let's first implement some important types.

Token Type

Token Type is an enumeration containing all the possible tokens types. All the tokens generated by lexer must be of any type from enumeration. Let's define some general Token Types.

01.public enum TokenType

02.{

03. Comment,

04. Number,

05. String,

06. Operator,

07. Delimiter,

08.

09. WhiteSpace,

10. Identifier,

11.

12. Unknown

13.}

Token

Token is a sequence meaningful characters. Each token has a specific TokenType associated with it. Instead of copying captured string value to token, best practice is to store start index of captured string in source string and length of captured string. Let's implement Token.

01.public class Token

02.{

03. public Token(int startIndex, int length, TokenType type)

04. {

05. StartIndex = startIndex;

06. Length = length;

07. Type = type;

08. }

09.

10. public TokenType Type { get; private set; }

11.

12. public int StartIndex { get; private set; }

13.

14. public int Length { get; private set; }

15.}

Lexical Rule

Lexical rule contains pattern to search in source string. Each lexical rule contains some additional information about search pattern. In our case we are going to store only TokenType for each lexical rule. Search pattern is defined in regular language consisting of regular expressions. Let's implement Lexical rule.

1.public class LexicalRule

2.{

3. public TokenType Type { get; set; }

4.

5. public Regex RegExpression { get; set; }

6.}

While definig lexical rules keep in mind that

- Lexial rules must be mutually exclusive i.e it can capture all the characters in the string. You can think off adding a LexicalRule at the end with pattern

^.this will match any character and prevents infinite loop of tokenizer. - Lexical rules pattern must not capture string of length zero. If there is such pattern that matches string of length zero then again an infinte loop in tokenizer.

- Lexical rules much capture string from start. For this simple add

^before pattern that will tell regular expression engine to match from start only.

Defining Lexical Rules

Lexical rules is the key part of a lexer. Changing these rules can changes the lexer targeted programming language. Let's define rules for general token types defined earlier.

WhiteSpace

1.LexicalRule _WhiteSpace = new LexicalRule 2.{ 3. Type = TokenType.WhiteSpace, 4. RegExpression = new Regex("^\\s") 5.};Single Character Operator

1.LexicalRule _SingleOperator = new LexicalRule 2.{ 3. Type = TokenType.Operator, 4. RegExpression = new Regex("^[\\+\\-\\*/%&|\\^~<>!]") 5.}Double Character Operator

1.LexicalRule _DoubleOperator = new LexicalRule 2.{ 3. Type = TokenType.Operator, 4. RegExpression = new Regex("^((==)|(!=)|(<=)|(>=)|(<>)|(<<)|(>>)|(//)|(\\*\\*))") 5.};Single Character Delimiter

1.LexicalRule _SingleDelimiter = new LexicalRule 2.{ 3. Type = TokenType.Delimiter, 4. RegExpression = new Regex("^[\\(\\)\\[\\]\\{\\}@,:`=;\\.]") 5.};Double Character Delimiter

1.LexicalRule _DoubleDelimiter = new LexicalRule 2.{ 3. Type = TokenType.Delimiter, 4. RegExpression = new Regex("^((\\+=)|(\\-=)|(\\*=)|(%=)|(/=)|(&=)|(\\|=)|(\\^=))") 5.};Identifier

1.LexicalRule _Identifier = new LexicalRule 2.{ 3. Type = TokenType.Identifier, 4. RegExpression = new Regex("^[_A-Za-z][_A-Za-z0-9]*") 5.};String Marker

1.LexicalRule _StringMarker = new LexicalRule 2.{ 3. Type = TokenType.String, 4. RegExpression = new Regex("^(@\"(?:[^\"]|\"\")*\"|\"(?:\\.|[^\\\"])*(\"|\\b))", RegexOptions.IgnoreCase) 5.};Comment

1.LexicalRule _Comment = new LexicalRule 2.{ 3. Type = TokenType.Comment, 4. RegExpression = new Regex("^(#.*)") 5.}Any Other Character

1.LexicalRule _Any = new LexicalRule 2.{ 3. Type = TokenType.Unknown, 4. RegExpression = new Regex("^.") 5.};

Tokenizing String

Final part of a lexer is to tokenize the source string according to the grammar rules or lexical analysis rules. This consists of a very simple loop that iterate over whole string and during each iteration string is matched with each lexical rule. Let's implement it

01.public IEnumerable<Token> Tokenize(string script)

02.{

03. if (string.IsNullOrEmpty(script))

04. throw new ArgumentNullException("script");

05.

06. int i = 0;

07. int length = script.Length;

08.

09. Match match;

10. var builder = new StringBuilder(script);

11.

12. string str = script;

13.

14. while (i < length)

15. {

16. foreach (var rule in Grammer.Rules)

17. {

18. match = rule.RegExpression.Match(str);

19.

20. if (match.Success)

21. {

22. if (match.Length == 0)

23. {

24. throw new Exception(string.Format("Regex matche length is zero. This can lead to infinite loop. Please modify your regex {0} for {1} so that it can't matche character of zero length", rule.RegExpression, rule.Type));

25. }

26.

27. yield return new Token(i, match.Length, rule.Type);

28. i += match.Length;

29.

30. builder.Remove(0, match.Length);

31. break;

32. }

33. }

34.

35. str = builder.ToString();

36. }

37.}

Syntax Highlighting

Tokens generated by tokenizer can be used for syntax highlighting and. All we have to do is to create an dictionary having key of type TokenType and value of type Color. Consider below dictionary

1.Dictionary<TokenType, Brush> ColorDict = new Dictionary<TokenType, Brush>()

2.{

3. { TokenType.Comment, new SolidColorBrush(Color.FromArgb(255, 221, 0, 0)) },

4. { TokenType.String, new SolidColorBrush(Color.FromArgb(255, 0, 171, 0)) },

5. { TokenType.Builtins, new SolidColorBrush(Color.FromArgb(255, 144, 0, 144)) },

6. { TokenType.Keyword, new SolidColorBrush(Color.FromArgb(255, 255, 119, 0)) },

7.};

I'm going to use RichTextBlock to view syntax highlighted script. For any source string call the tokenizer and append each token in RichTextBlock with it's color. Let's implement this

01.StringBuilder builder = new StringBuilder();

02.

03.var textView = new RichTextBlock();

04.var p = new Paragraph();

05.textView.Blocks.Add(p);

06.

07.Brush brush;

08.foreach (var token in lexer.Tokenize(value))

09.{

10. if (ColorDict.TryGetValue(token.Type, out brush))

11. {

12. if (builder.Length > 0)

13. {

14. p.Inlines.Add(new Run { Text = builder.ToString() });

15. builder.Clear();

16. }

17.

18. p.Inlines.Add(new Run

19. {

20. Text = value.Substring(token.StartIndex, token.Length),

21. Foreground = brush

22. });

23. }

24. else

25. builder.Append(value.Substring(token.StartIndex, token.Length));

26.}

27.

28.if (builder.Length > 0)

29.{

30. p.Inlines.Add(new Run { Text = builder.ToString() });

31. builder.Clear();

32.}

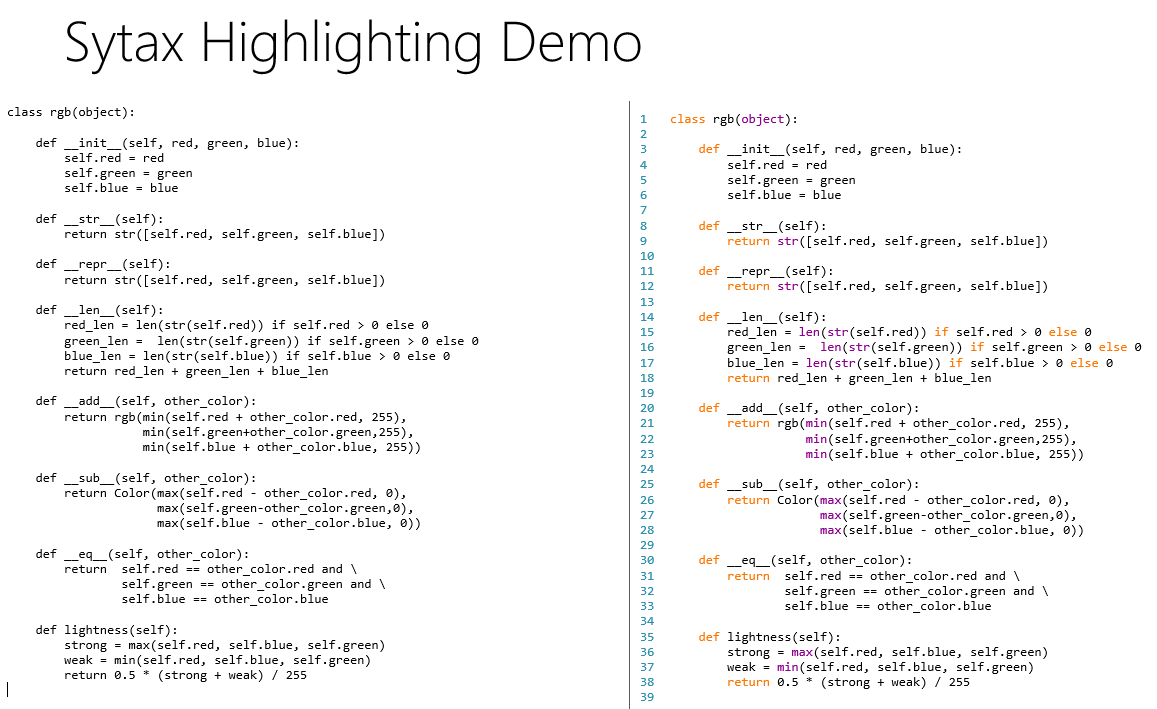

Below is the snapshot of demo syntax highlighter for python programming language.

Source Code

Download complete source code from here. Source code contains:

- Lexer

- Lexical Rules or Grammar for Python Programming Language

- Demo Windows Console project

- SyntaxViewer for syntax highlighting of source code Python Programming Language in Windows Store and Phone apps

- Demo SyntaxViewer project for Windows Store & Windows Phone Apps

If you have any question feel free to comment below