BizTalk Server: How to implement Concurrent FIFO Processing

Introduction

Sequential first-in-first-out (FIFO) message processing is a common requirement in industries such as finance, insurance and healthcare. In most cases there is also a business process involved, which calls for an orchestration to implement it. However, sequential processing forces BizTalk Server to process messages in a single-threaded mode, which limits its multi-threading potential. This article describes a practical approach to overcoming this limitation and building an efficient solution.

Problem

A BizTalk Server integration solution is often at the "heart" of an organization’s automation strategy, so it is vital that messages are processed without performance bottlenecks. Sequential message processing, however, constrains BizTalk Server’s standard capabilities and degrades its normal performance. This calls for a better solution and raises some questions. What alternatives exist? Is concurrent processing possible while preserving message sequencing? Is it feasible?

Yes, a pragmatic solution is feasible in certain scenarios. This article explores a solution suited to scenarios where the transactions in a batch can be logically grouped into sub-batches and each sub-batch can be processed in parallel.

The Scenario

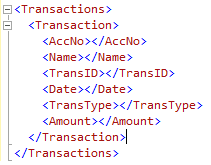

Let us take typical banking transactions as the demonstration scenario and assume that the financial transactions (Table 1) are received by the bank in a single batch as XML (Figure 1) at the end of each day. The transactions are ordered by the Date and TransID of each transaction.

Table 1 - Sample banking transactions

Figure 1 - Batch XML Structure

The batch contains six transactions in total (in the real world there could be thousands) belonging to three unique account holders. All of these transactions must be processed in chronological order of transaction date to keep the accounts correct.

Here, rather than processing the entire batch in sequence, we can treat each individual account holder’s transactions as a logical sub-batch and process the sub-batches in parallel, while still preserving the sequence of each account holder’s transactions.

So what will the solution look like?

Solution

Figure 2 - Concurrent FIFO Processing Solution

A custom pipeline component is used at the receive port to split the batch into individual transactions. It identifies each unique account holder’s transactions, groups them together (a logical sub-batch) and assigns a sequence number to each transaction within the group, in the chronological order in which it was received. It then inserts the records into a custom-built database. The first transaction of each group (here, each account holder) is inserted with Status=“Ready” and the other transactions with Status=“Waiting” (as in Table 2).
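The grouping and sequencing step can be illustrated with a minimal Python sketch. This is not the pipeline component from the article (a BizTalk pipeline component would be written in .NET); it only mirrors the logic described above. The function name `stage_transactions` and the field names `trans_id`, `account`, `seq` and `status` are assumptions made for illustration, and the batch is assumed to arrive already in chronological order.

```python
from collections import defaultdict


def stage_transactions(batch):
    """Assign a per-account sequence number and an initial status.

    `batch` is a list of dicts that is already in chronological order.
    The keys used here (account, seq, status) are hypothetical names.
    """
    last_seq = defaultdict(int)  # highest sequence number issued per account
    rows = []
    for txn in batch:
        last_seq[txn["account"]] += 1
        seq = last_seq[txn["account"]]
        # Only the first transaction of each account may start immediately.
        status = "Ready" if seq == 1 else "Waiting"
        rows.append({**txn, "seq": seq, "status": status})
    return rows


if __name__ == "__main__":
    sample = [
        {"trans_id": 1, "account": "A"},
        {"trans_id": 2, "account": "B"},
        {"trans_id": 3, "account": "A"},
    ]
    for row in stage_transactions(sample):
        print(row)
```

Keeping one counter per account means a single pass over the batch is enough, and the sequence numbers inherit the batch's own ordering.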

Table 2 - Sample transactions stored in custom-built database

Figure 3 - Pipeline Component Code Snippet

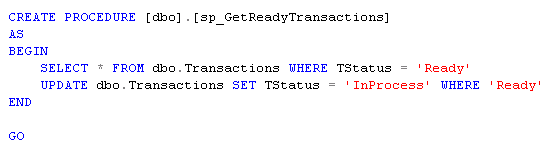

The orchestration that processes the financial transaction is bound to a Wcf-Sql receive port, which polls the custom-built database at regular intervals by invoking the stored procedure sp_GetReadyTransactions to fetch records with Status=“Ready”. For each message published by the Wcf-Sql receive port, a new orchestration instance is started, which results in concurrent processing of multiple account holders’ transactions.

Figure 4 - Stored Procedure to get 'Ready' transactions

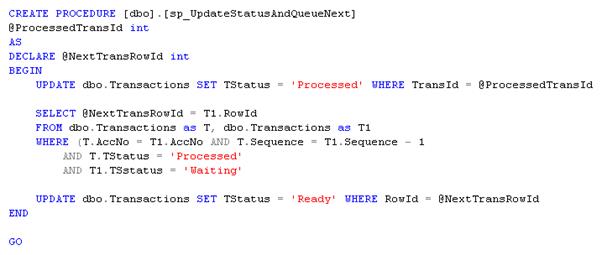

On completing its processing, each orchestration instance calls the stored procedure sp_UpdateStatusAndQueueNext, which updates the associated record’s Status to “Processed” and, if that account holder has a next “Waiting” transaction, updates that transaction’s Status to “Ready”. Records newly updated to Status=“Ready” are then picked up for processing by the Wcf-Sql receive port during the next database poll.
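The poll-and-promote cycle can be simulated with a short Python sketch that uses an in-memory SQLite database. It is not the article's stored procedures; the two functions only imitate what sp_GetReadyTransactions and sp_UpdateStatusAndQueueNext are described as doing. The table name `Transactions`, the columns `Account` and `Seq`, and the interim “Processing” status (used here so that a row is not returned by two consecutive polls) are assumptions, not details taken from the original solution.

```python
import sqlite3


def create_store():
    """Create an in-memory stand-in for the custom-built database."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE Transactions ("
        " TransID INTEGER PRIMARY KEY, Account TEXT, Seq INTEGER, Status TEXT)"
    )
    return db


def get_ready_transactions(db):
    """Return 'Ready' rows and mark them 'Processing' (assumed interim status)."""
    rows = db.execute(
        "SELECT TransID, Account, Seq FROM Transactions"
        " WHERE Status = 'Ready' ORDER BY TransID"
    ).fetchall()
    db.executemany(
        "UPDATE Transactions SET Status = 'Processing' WHERE TransID = ?",
        [(row[0],) for row in rows],
    )
    db.commit()
    return rows


def update_status_and_queue_next(db, trans_id):
    """Mark one row 'Processed' and promote the same account's next 'Waiting' row."""
    account, seq = db.execute(
        "SELECT Account, Seq FROM Transactions WHERE TransID = ?", (trans_id,)
    ).fetchone()
    db.execute(
        "UPDATE Transactions SET Status = 'Processed' WHERE TransID = ?",
        (trans_id,),
    )
    # If no next row exists for this account, this update simply matches nothing.
    db.execute(
        "UPDATE Transactions SET Status = 'Ready'"
        " WHERE Account = ? AND Seq = ? AND Status = 'Waiting'",
        (account, seq + 1),
    )
    db.commit()


if __name__ == "__main__":
    db = create_store()
    db.executemany(
        "INSERT INTO Transactions VALUES (?, ?, ?, ?)",
        [(1, "A", 1, "Ready"), (2, "B", 1, "Ready"), (3, "A", 2, "Waiting")],
    )
    print(get_ready_transactions(db))  # first poll: one row per account
    update_status_and_queue_next(db, 1)
    print(get_ready_transactions(db))  # next poll: account A's second row
```

Because only one row per account is ever “Ready” or in flight, different accounts proceed in parallel while each account's own transactions stay in order.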

Figure 5 - Stored Procedure to Update Status and Queue Next Transaction

Add-on Features

- Control the number of concurrent orchestration instances that can be activated by limiting the number of records in “Ready” state at any given time.

- Enhance the SQL artifacts of the solution to introduce an auto-retry mechanism.

- Because the received financial transactions are stored in the custom-built database, the custom pipeline component could suppress publication of the message into BizTalk Server’s MessageBoxDB.
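The first add-on above, capping concurrency by limiting the number of “Ready” records, could be sketched in Python as follows. This is one possible design, not the article's implementation: it assumes a promotion step that runs before each poll and that rows being worked on carry a hypothetical “Processing” status. The function name `promote_waiting`, the parameter `max_active` and the field names are illustrative.

```python
def promote_waiting(rows, max_active):
    """Promote 'Waiting' rows to 'Ready' without exceeding max_active in-flight rows.

    A row is eligible only if it is the lowest-sequence 'Waiting' row of an
    account that currently has no 'Ready' or 'Processing' row.
    """
    active_states = {"Ready", "Processing"}
    busy = {r["account"] for r in rows if r["status"] in active_states}
    active = sum(1 for r in rows if r["status"] in active_states)
    promoted = []
    # Visiting rows by ascending seq reaches each account's earliest row first.
    for row in sorted(rows, key=lambda r: r["seq"]):
        if active >= max_active:
            break
        if row["status"] == "Waiting" and row["account"] not in busy:
            row["status"] = "Ready"
            busy.add(row["account"])  # at most one in-flight row per account
            active += 1
            promoted.append(row)
    return promoted
```

With `max_active` set to 2 and three accounts waiting, only two rows become “Ready”; the third account is promoted once one of the in-flight rows has been marked “Processed” and the function runs again.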

Benefits

The concurrent-sequential solution delivers:

- Higher performance with efficient utilization of BizTalk Server infrastructure

- Significant reduction in the time taken to process the entire batch

- Failures localized to the sub-batch level, so the rest of the batch can continue processing

- Capabilities to reprocess failed transactions

Conclusion

We saw how implementing classic sequential processing can limit the performance of BizTalk Server, and why a viable alternative is needed to build a better-performing solution.

The answer is to implement a solution that is both concurrent and sequential. We illustrated the principle with a simple scenario, showing how a concurrent-sequential solution can deliver better efficiency and performance.

Of course, there are other scenarios and possibilities. Keep your own requirements in mind and tailor the solution to your scenario.

Disclaimer: The views and opinions expressed here are my own and not my company's.

See Also

Another important place to find a large number of BizTalk-related articles is the TechNet Wiki. The best entry point is BizTalk Server Resources on the TechNet Wiki.