SQL Server: Managing Database Backups Across all the Instances without Maintenance Plan

Introduction

As a DBA we are responsible for managing backups across all the SQL server instances which include production, data warehouse, development and QA with more than 200 SQL server instances in dedicated and shared database environments with more than 500 databases with different recovery models and DRP requirements.

There are many 3rd party tools available including SQL server native utility, maintenance plans to create the backup jobs. Maintenance plans are very helpful to create and schedule the backups, but they have very limited features where you can’t customize the backups plan as per your requirement which includes, copy the backups to secondary location, deletes the backups in secondary location, take the backups based on recovery model, keeping a track of which database been has a backup and monitor the status of all the backup jobs across all the instances in a single dashboard.

Addressing the below problems first involves deciding the right approach to choose maintenance plans or customized SSIS package to control and monitor all the database backups.

Missing Database Backups

Causes:

- When the new database created in a shared database instance then you may miss to include the backup in your backup job.

- If the disk is full then all the backups included in the maintenance plan will fail. It is manual time consuming task to trace which backup failed with what reason unless you have an automated solution to alter on missing database backups.

- Maintenance Plan has a control flow item “maintenance Cleanup Task” to delete the old backup files based on age of the backup, but it creates the problem when it deletes the full database backups based on n no.of days leaving all the dependent intermediate differential and transaction logs which are useless.

Transaction log sizes (not backups) are increasing and causing more disk space

Causes:

- Database is in full/bulk logged recovery model without a schedule transaction log backup job.

- On multi-database environments, if database recovery model got changed from simple to full/bulk-logged and later if you didn’t change the t-log backup maintenance plan to include this database

Monitor and control the backup disk space

Problems:

- Maintaining disk space to take the backups locally is one of the challenging tasks in multi database instances unless it has tape backups.

- Though you move the backup files to secondary location as a best practice and to free the local disk space, maintenance plan doesn't have the control flow item to copy the backups to secondary storage. So you may create another job to copy the backup files to the secondary storage, and execute this job at the maintenance plan. Remember: you should not modify the job created by the maintenance plan to add this step as the job will be dropped and recreated when you change the schedule at the maintenance plan.

Maintaining maintenance plans

- If you have to take the backup for more than 10 databases with terabytes in size then how many maintenance plans you create

- If you create one maintenance plan for all 10 databases and while executing if it fails at the middle, then you fix the issue and rerun the job which ends up with having redundant backups, you may manually update the maintenance plan to take the failed database backups, and revert the changes once its completed, but it’s a manual time consuming task.

- So, if you create 1 maintenance plan for each database then you have to maintain 10 Jobs. Fine, but if you include differential backups too then it will be 20 Jobs. During maintenance if few of the jobs are failed then you have to manually go and run each failed job one at a time.

Finally, maintenance plans are good to quickly create the regular maintenance jobs which include the backup tasks, but on multi-database environments where the server has more than 10 databases, it’s difficult to monitor, control and maintain the backup jobs.

Below framework/solution addresses all the problems listed above. You can modify the same solution as per your requirement to control multiple instances with multiple databases. and you can configure and check whether the backup jobs are configured as per your DRP requirements.

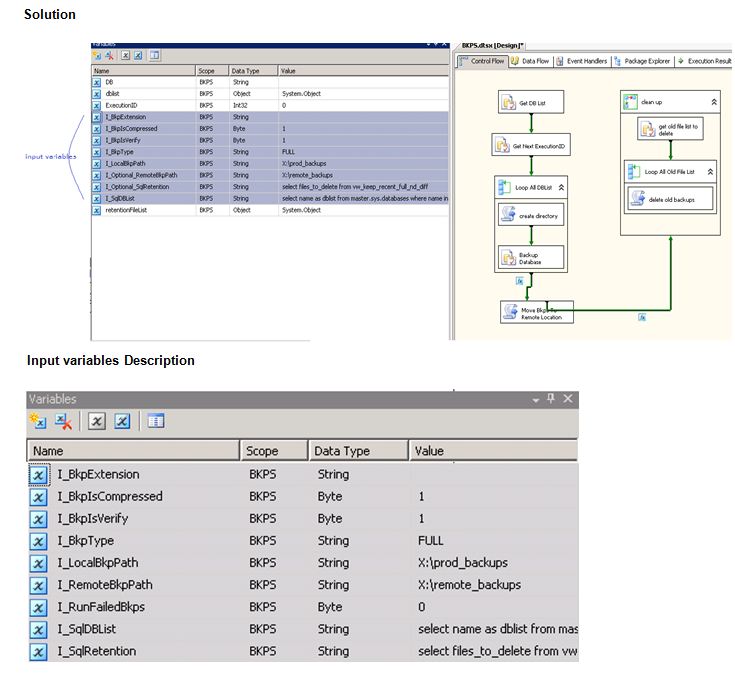

SSIS Solution

Input variables Description

Variable Name |

Description |

I_BkpExtension |

Backup extension. Don’t enter “.” |

I_BkpIsCompressed |

Enter 1 to compress the backups |

I_BkpIsVerify |

Enter 1 to verify the backup |

I_BkpType |

Enter the backup type Full /Diff/Log |

I_LocalBkpPath |

Enter local database backup path. If you want to take the backups directly to a remote location then enter the remote location name |

I_Optional_RemoteBkpPath |

This is optional. Enter remote location to move the local backups to this location. |

I_Optional_SqlRetention |

This is Optional. Enter the select query to include the list of the backup files to delete.

Eg: below query produce the list of old backups except recent,full +recent differential + all log backups after recent diff backup. select files_to_delete from vw_keep_recent_full_nd_diff |

I_SqlDBList |

Enter the select query to list the databases to take the backup.

Eg: below value includes the backups X,Y,Z select name as dblist from master.sys.databases where name in ('X','Y','Z') You can filter the database based on your own criteria. For e.g.: To take the log backups of all databases which are in full recovery model , set the variable value with “select name as dblist from sys.databases where recovery_model_desc='full' “

|

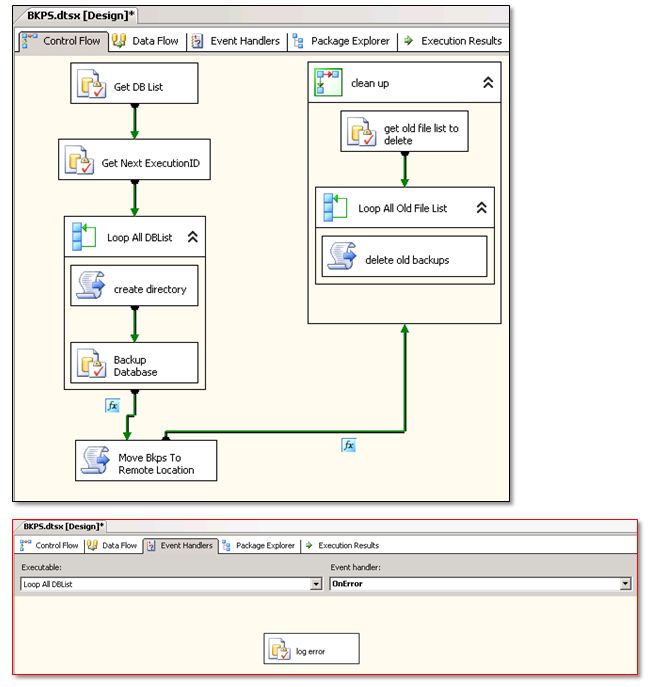

SSIS Flow Steps Description

Control Flow |

Description |

Get DB List |

This retrieves the results from the select query in variable I_SqlDBList and sets the output to another local variable dblist |

Get Next ExecutionID |

This retrieves the next ExectutionID value from BKP_file_locations using below statement to set the Execution number during each execution of the job. The purpose of using ExecutionID column is to know which database backup failed during an execution.

SELECT ISNULL(max(ExecutionID),0)+1 as ExecutionID FROM BKP_file_locations

|

Loop All DBLIst |

Loops through all the databases listed in dblist variable |

Create directory |

Creates subdirectories for each database in backup location |

Backup Database |

Executes the stored procedure [BKP_DBS] to take the backup |

Move Bkps To Remote Location |

If the optional input variable I_Optional_RemoteBkpPath sets to a remote location path then it moves the backups from the local path to remote path |

Cleanup |

< Container > |

get old file list to delete |

If the optional input variable I_Optional_SqlRetention sets to a SQL query to produce the list of the old backup files to delete then it Sets the local variable retentionFileList with the list of the files resulted from SQL query in I_Optional_SqlRetention |

Loop All Old File List |

Loops through all the files to delete |

Delete old backups |

Deletes the old backups one at a time in a loop and set the status of the delete operation in tracking table ( Bkp_file_locations) |

Log Error |

This is an event handler for “for loop container” to log the errors in tracking table. |

Database Objects

- BKP_DBS (procedure) : to take the backups with input parameters to include the dbname,backup_type,location

- Bkp_file_locations (Table) : it’s a tracking table , to store the status of the backup executions and location of the backup files

- BKP_INS_BKP_STATS(procedure) : to insert the status of the backup operation in tracking table(Bkp_file_locations)

- vw_keep_recent_full_nd_diff(view) : this view contains the all the old files to delete from the remote location. You can change this view as per your retention plan.

Steps to configure the job on your lab server

Download the files BKPS.dtsx and sqlobjects.sql

Save the BKPS.dtsx as an external file

Create all the objects listed in sqlobjects.sql in msdb database

Note: you should review and modify the view vw_keep_recent_full_nd_diff to delete the old backups , I have provided this view for simulation purpose only .

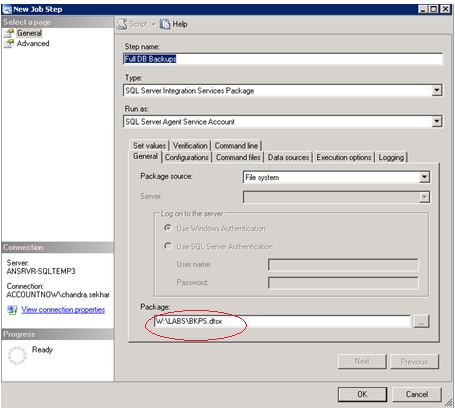

Create and Configure SQL Server agent Job

Execute the attached “CreateBkpJob.sql” script on msdb database. This creates a job with a name “BKP full system Databases”.

If you already has a job with the same name then modify the first line in the script with a new name for the job

Go to SQL server agent , right click on “BKP full system Databases” job , open the first step and modify the location of the SSIS package

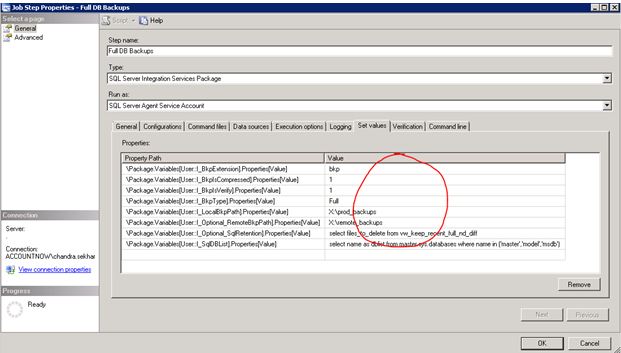

Click on [Set value] tab on same screen and modify the values for all variables as below example. Refer the section “variable description” in this article for the description of these variables

Execute the job, after successful completion of the job , you will see the records in msdb.dbo. bkp_file_locations table

Execute the job several times , and check the status column in the same table. It deletes all the old backup files and keeps only the recent one. If you don’t want to delete the old backups then don’t pass any value to the variable I_Optional_SqlRetention

Next: To create multiple jobs , you can script out the existing job and change the variable values

More on deleting old backups:

Attached download files has a view “vw_keep_recent_full_nd_diff” , this view refers the tracking table “bkp_file_locations” and produce the list of the remote old backup files to delete except the recent full ,recent differentials and all log files after the recent differential backup.