Using an HDInsight Cluster with Alternate Storage Accounts and Metastores

UNDER CONSTRUCTION

Overview

You can treat an HDInsight cluster as a resource that can access data and metadata beyond the storage accounts and metastore specified when you created the cluster. Multiple WebHCat jobs can be submitted to the cluster and each job can carry with it a description of its data and metadata. This works for Hive, MapReduce (MR), and MapReduce streaming. It currently works for Pig with storage accounts but not for metadata.

Common across Hive, Pig, MR, and MR streaming is that you can pass in a description of a new storage account (it's name and plain text key) in the arguments to a WebHCat job, and the job can access the data in the account. Any number of accounts can be specified with a job

The default behavior of HDInsight is to use the storage accounts defined in %HADOOP_HOME%/conf/core-site.xml and the metastore database defined in %HIVE_HOME%/conf/hive-site.xml. The method described below allows you to override the built-in storage accounts and metastore. Of course, users who can submit jobs to your cluster can access the storage accounts and metastore defined with the cluster.

You can edit your core-site.xml and hive-site.xml but this is not recommended. Your cluster head node may be re-imaged or migrated at any time, and any changes to those files will be lost.

Hive

You can submit a Hive job through WebHCat and specify a per-job metastore database and per-job storage accounts using a technique similar to the following example, using the HDInsight PowerShell interface:

$subscriptionName = "<subscriptionname>" $clusterName = "<cluster name>" Select-AzureSubscription -SubscriptionName $subscriptionName Use-AzureHDInsightCluster $clusterName $querystring = "show tables; select name, salary from emp;" [HashTable] $defines $defines = @{} $defines.Add("hive.metastore.uris", "") $defines.Add("javax.jdo.option.ConnectionURL", "jdbc:sqlserver://<name>.database.windows.net;database=<dbname>; encrypt=true;trustServerCertificate=true;create=false") $defines.Add("fs.azure.account.key.<name1>.blob.core.windows.net", "<clear text key1>") $defines.Add("fs.azure.account.key.<name2>.blob.core.windows.net", "<clear text key2>") $defines.Add("javax.jdo.option.ConnectionUserName", "<user>@<dbserver>") $defines.Add("javax.jdo.option.ConnectionPassword", "<password>") Invoke-Hive -Defines $defines -Query $queryString |

Important: there is a bug in WebHCat so that if your password contains an exclamation point (!) character, this will fail, because the exclamation point is not properly passed by WebHCat to the Hive command line when it is started up. It's recommended that you use passwords containing only upper and lower case letters, and numbers. Passwords of this form have been successfully tested with this method.

Metastore database initialization: You must initialize an Azure SQL DB database with the metadata tables to do this. You can do that quickest by creating a new database with collation SQL_Latin1_General_CP1_CI_AS and then running a copy of the file %HIVE_HOME%/HiveMetaStore.sql from a production cluster on the Azure SQL DB database you want to use for metadata. Alternatively, you can create an Azure SQL DB database and then create a cluster, passing it this database. That will initialize the database. Then you can drop the cluster, but use the database for other purposes. This is slow, but it will work and can be automated.

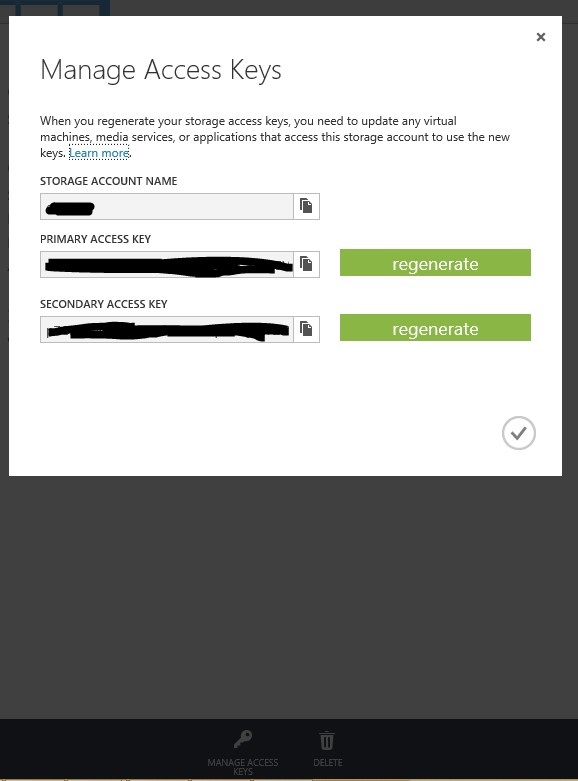

Storage account keys: Use the clear text key for your storage account with this method. You can get this from the AzureDB web portal by clicking the MANAGE ACCESS KEYS button at the bottom of the screen and then copying the PRIMARY ACCESS KEY. The picture below shows where to find the PRIMARY ACCESS KEY.

How it works: This method causes a local metastore to be started for the Hive job. This is caused by setting hive.metastore.uris="". It works whether or not the cluster’s metastore service is running. The local metastore runs inside the Hive client program so it is automatically cleaned up when the Hive client terminates.

MapReduce

It's possible to pass new storage account definitions to HDInsight as part of a WebHCat MR job submission. To do so, pass one or more storage account names and plain text keys as a define argument to the job. For example, with MR, you can do the following using the HDInsight PowerShell interface:

[HashTable] $arguments $arguments = @{ "fs.azure.account.key.<accountname>.blob.core.windows.net" = "<key>" } # Define the MapReduce job $wordCountJobDefinition = New-AzureHDInsightMapReduceJobDefinition -JarFile "wasb:///example/jars/hadoop-examples.jar" -ClassName "wordcount" -Arguments "wasbs://<containername>@<accountname>.blob.core.windows.net/data/mydata.dat", "wasbs://<containername>@<accountname>.blob.core.windows.net/output" -Defines $arguments # Submit the job Select-AzureSubscription $subscriptionName $wordCountJob = Start-AzureHDInsightJob -Cluster $clusterName -JobDefinition $wordCountJobDefinition | Wait-AzureHDInsightJob -WaitTimeoutInSeconds 3600 # Get the job output Get-AzureHDInsightJobOutput -Cluster $clusterName -JobId $wordCountJob.JobId -StandardError |

MapReduce Streaming

The following example shows how to prepare and submit an MR streaming job, passing in storage account keys as job arguments:

# Prepare storage keys as job arguments [HashTable] $arguments $arguments = @{ "fs.azure.account.key.<accountname1>.blob.core.windows.net" = "<key1>"; "fs.azure.account.key.<accountname2>.blob.core.windows.net" = "<key2>" } # Define the MapReduce job $wordCountJobDefinition = New-AzureHDInsightStreamingMapReduceJobDefinition -Files "/example/apps/wc.exe", "/example/apps/cat.exe" -InputPath "wasbs://<containername>@<accountname1>.blob.core.windows.net/data/mrdata/input" -OutputPath "wasbs://<containername>@<accountname2>.blob.core.windows.net/data/mrdata/output" -Mapper "cat.exe" -Reducer "wc.exe" -Defines $arguments

# Submit the job $wordCountJob = Start-AzureHDInsightJob -Cluster $clusterName -JobDefinition $wordCountJobDefinition | Wait-AzureHDInsightJob -WaitTimeoutInSeconds 3600

# Get the job output Get-AzureHDInsightJobOutput -Cluster $clusterName -JobId $wordCountJob.JobId -StandardError |

Pig

With Pig, you can specify additional storage accounts similar to how it's done with MR, as in the following example.

$subscriptionName = "<subscriptionname>" $clusterName = "<clustername>" Select-AzureSubscription -SubscriptionName $subscriptionName Use-AzureHDInsightCluster $clusterName $querystring = "emp = load 'wasbs://<containername>@<accountname>.blob.core.windows.net/data/emp_0.dat';" + "dump emp;" [String[]] $arguments $arguments = @( '-Dfs.azure.account.key.<accountname>.blob.core.windows.net=<plain text key>') $pigJobDefinition = New-AzureHDInsightPigJobDefinition -Query $queryString -Arguments $arguments $pigJob = Start-AzureHDInsightJob -Cluster $clusterName -JobDefinition $pigJobDefinition Wait-AzureHDInsightJob -Job $pigJob -WaitTimeoutInSeconds 3600 Get-AzureHDInsightJobOutput -Cluster $clusterName -JobId $pigJob.JobId -StandardOutput |

Best Practices

Keep your storage accounts and metastore database together as a unit in your application. It may, for example, not be possible to drop an external table from a metastore unless the storage account for the table is accessible to Hive when you run the DROP TABLE command to remove the table.

Caution: Concurrently updating metadata in the same metastore database from multiple different jobs that each set hive.metastore.uris to the empty string, and thus use a local metastore, has been observed to cause metastore database corruption (HIVE-6918). Consider serializing operations that update metadata, such as altering of table partitions, if multiple jobs will access the same metastore database using this approach.

See Also

Another important place to find an extensive amount of Cortana Intelligence Suite related articles is the TechNet Wiki itself. The best entry point is Cortana Intelligence Suite Resources on the TechNet Wiki.