Hybrid Cloud Infrastructure Design Considerations

Published: June 26, 2013

Version: 1.1

Abstract: The Hybrid Cloud Infrastructure Design Considerations guide provides the enterprise architect and designer with a collection of critical design considerations that need to be addressed before beginning the design decisions process that will drive a hybrid cloud computing infrastructure implementation. This article can be used together with the Hybrid Cloud Solution for Enterprise IT reference implementation guidance set to create a core hybrid cloud infrastructure.

To provide feedback on this article, send e-mail to SolutionsFeedback@Microsoft.com. When the contents of this article are updated, the version is incremented and changes are entered into the change log. The online version is the current version.

1.0 Introduction

Most enterprise information technology (IT) organizations operate data centers with limited IT staff, data center space, hardware, and budgets. To avoid adding more of these resources, or to more effectively use the resources they already have, many organizations now use external IT services to augment their internal capabilities and services. Examples of such services are Microsoft Office 365 and Microsoft Dynamics CRM Online. Services that are provided by external providers typically exhibit the five essential characteristics of cloud computing (on-demand self-service, broad network access, resource pooling, rapid elasticity, and measured service) that are defined in The NIST Definition of Cloud Computing.

In the remainder of this document, the term “cloud services” refers to services that exhibit the essential characteristics of cloud computing defined by the United States National Institute of Standards and Technology (NIST). Services that do not exhibit all of these characteristics are referred to simply as “services”; such services often exhibit few, if any, of them. Another term used throughout this document is “technical capabilities.” Technical capabilities are the functionality provided by hardware or software; when they are used together in specific configurations, they provide a service, or even a cloud service.

For example, to provide a messaging service in your environment, you’d use, at a minimum, email server application, network, server, name resolution, storage, authentication, authorization, and directory technical capabilities. To provide that same messaging service as a cloud service in your environment, you’d add capabilities such as a self-service portal and, probably, an orchestration capability (to execute the tasks that support the essential cloud characteristic of self-service).
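The relationship between technical capabilities, services, and cloud services can be made concrete with a small model. The following Python sketch is illustrative only: the `Essential` enumeration, the `Service` class, and the rule that a cloud service must exhibit all five NIST characteristics are this sketch's reading of the terminology above, not part of any product. It also assumes, for the sake of the example, that adding a self-service portal and orchestration is what allows the messaging service to exhibit all five characteristics.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum, auto


class Essential(Enum):
    """The five NIST essential characteristics of cloud computing."""
    ON_DEMAND_SELF_SERVICE = auto()
    BROAD_NETWORK_ACCESS = auto()
    RESOURCE_POOLING = auto()
    RAPID_ELASTICITY = auto()
    MEASURED_SERVICE = auto()


@dataclass(frozen=True)
class Service:
    """Hypothetical model: a service is a named set of technical capabilities."""
    name: str
    capabilities: frozenset[str]
    # Characteristics the designer records the service as exhibiting
    # (an assumption, not something derived from the capabilities).
    characteristics: frozenset[Essential] = frozenset()

    def is_cloud_service(self) -> bool:
        # A "cloud service" exhibits all five characteristics;
        # anything else is simply a "service".
        return self.characteristics == frozenset(Essential)


# Minimum technical capabilities for the messaging example in the text.
MESSAGING_CAPABILITIES = frozenset({
    "email server application", "network", "servers", "name resolution",
    "storage", "authentication", "authorization", "directory",
})

messaging = Service("messaging", MESSAGING_CAPABILITIES)
messaging_cloud = Service(
    "messaging (cloud)",
    MESSAGING_CAPABILITIES | {"self-service portal", "orchestration"},
    frozenset(Essential),  # assumed: the added capabilities complete all five
)

print(messaging.is_cloud_service())        # False
print(messaging_cloud.is_cloud_service())  # True
print(sorted(messaging_cloud.capabilities - messaging.capabilities))
# ['orchestration', 'self-service portal']
```

The cloud version is modeled as a superset of the plain service's capabilities, which reflects the point above that a cloud service is built by adding capabilities to an existing service rather than by replacing it.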

Rather than having individual people consume cloud services from external providers independently, organizations typically have a department within the IT organization establish a relationship with an external provider at the organizational level. This department consumes the service at the organizational level, integrates it with some of its own internal technical capabilities and/or services, and then provides the integrated hybrid service to consumers within the organization. These consumers are often unaware of whether the service is owned and managed by their own IT organization or by an external provider, and they don’t care who owns it, as long as the service meets their requirements.

An important consideration when dealing with a hybrid cloud infrastructure is that while the in-house IT department will be seen as a provider of cloud services to the corporate consumer of the hybrid cloud solution, it is also true that the IT organization itself is a consumer of cloud services. That means that there are multiple levels of consumers. The corporate consumer might be considered a second-level consumer of the public cloud services, while the IT organization might be considered a first-level consumer of the service. This has important implications when thinking about the architecture of the solution. This issue will be covered later in this document.

This document details the design considerations and configuration options for integrating Windows Azure Infrastructure Services (virtual machines (or “compute”), network, and storage cloud services) with the infrastructure capabilities and/or services that currently exist within typical organizations. This discussion will be driven by requirements and capabilities. Microsoft technologies are mentioned within the context of the requirements and capabilities and not vice versa. It is our expectation that this approach will resonate better with architects and designers who are interested in what problems must be solved and what approaches are available for solving these problems. Only then is the technology discussion relevant.

1.1 Audience

The primary audience for this document is the enterprise architect or designer who is interested in understanding the issues that need to be considered before engaging in a hybrid cloud project, and the options available to meet the requirements arising from the key infrastructure issues. Others who might be interested in this document include IT implementers who want to understand the design considerations behind the hybrid cloud infrastructure they are tasked to build.

1.2 Document Purpose

The purpose of this document is two-fold. The first purpose is to provide the enterprise architect or designer with a collection of issues to consider when building a hybrid cloud infrastructure, along with the questions that need to be answered for each issue. The second purpose is to provide the enterprise architect or designer with a collection of options that can be evaluated and chosen based on the answers to those questions. While the questions and options can be used with any public cloud service provider's solution, examples of available options will focus on Windows Azure.

In addition, this document includes:

- The relevant design requirements and environmental constraints that must be gathered before integrating Windows Azure Infrastructure Services into an environment.

- Conceptual design considerations for integrating infrastructure cloud services into an existing environment, regardless of who is the external provider of the cloud services.

- Physical design considerations to evaluate when integrating Windows Azure Infrastructure Services into an existing environment.

This document was conceived and written on the premise that enterprise IT does not want to replicate its current datacenter in the cloud. Instead, it is assumed that enterprise IT would like to base a new solution on new architectural principles specific to a hybrid cloud environment. This document focuses on the hybrid cloud infrastructure because core infrastructure issues need to be addressed before even considering creating a single virtual machine for production. Issues revolving around security, availability, performance, and scalability need to be considered in the areas of networking, storage, compute, and identity before embarking on a production environment. We recognize that there is a tendency to want to stand up applications as soon as the public cloud infrastructure service account is created, but we encourage you to stem that urge and read this document so that you can avoid unexpected complications that could put your hybrid cloud project at risk.

Note that the existing environment can be a private cloud or a traditional data center. The goal is to enable you to integrate your current environment with a public cloud provider of infrastructure services (an Infrastructure as a Service [IaaS] provider).

While this document does explain design considerations and the relevant Microsoft technology and configuration options for integrating Windows Azure Infrastructure Services with the existing infrastructure of technical capabilities and/or services in an environment, it does not provide any example designs for doing so. A future document set will address a specific design example. You can find more information about this on the Cloud and Datacenter Solutions Hub at http://technet.microsoft.com/en-US/cloud/dn142895.

If you’re also interested in guidance that includes lab-tested designs that integrate infrastructure cloud services into existing environments, it is available separately. For more information, see http://technet.microsoft.com/en-US/cloud/dn142895.

2.0 Hybrid Cloud Problem Definition

The following problems or challenges typically drive the need to integrate infrastructure cloud services from external providers into existing environments:

- Existing hardware, software, or staff resources cannot meet the demand for new technical capabilities and/or services within the environment.

- Periodic demand “spikes” require acquisition of hardware and software resources that sit idle during normal, non-spike usage periods.

- On-premises cloud services are usually not as cost effective as consuming the services from an external provider. While private cloud solutions make sense for maximizing flexibility and efficiency on premises, and provide a path to integrating with or migrating to public cloud services, we should not forget that extreme economies of scale can be realized only through public cloud service offerings. The Economics of the Cloud whitepaper from Microsoft estimated a tenfold reduction in cost when fully utilizing public cloud.

Organizations with a large application portfolio will need to determine hybrid cloud infrastructure requirements before starting new applications or moving existing applications into a cloud environment. Different applications will have different demands in the areas of networking, storage, compute, identity, security, availability, and performance. You will need to determine whether the public cloud infrastructure service provider you choose can deliver on the requirements you define in each of these areas. In addition, you will need to consider regulatory issues specific to your organization's geo-political alignment.
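Evaluating a provider against these areas amounts to a gap analysis: for each area, compare what you require with what the provider offers. The following minimal Python sketch assumes that requirements and provider capabilities can be written down as simple labeled sets per area; the `find_gaps` function and all of the example requirement strings are hypothetical and do not describe any particular provider's offering.

```python
from __future__ import annotations

# Requirement areas named in the text, including regulatory issues.
AREAS = frozenset({"networking", "storage", "compute", "identity",
                   "security", "availability", "performance", "regulatory"})


def find_gaps(required: dict[str, set[str]],
              offered: dict[str, set[str]]) -> dict[str, set[str]]:
    """Return, per area, the requirements the provider cannot meet."""
    unknown = set(required) - AREAS
    if unknown:
        raise ValueError(f"unrecognized requirement areas: {sorted(unknown)}")
    gaps: dict[str, set[str]] = {}
    for area, needs in required.items():
        missing = needs - offered.get(area, set())
        if missing:
            gaps[area] = missing
    return gaps


# Hypothetical requirements and provider capabilities, for illustration only.
required = {
    "networking": {"site-to-site VPN to the corporate network"},
    "identity": {"authenticate with on-premises directory accounts"},
    "regulatory": {"data stored only in an approved geography"},
}
offered = {
    "networking": {"site-to-site VPN to the corporate network"},
    "identity": {"authenticate with on-premises directory accounts"},
}

print(find_gaps(required, offered))
# {'regulatory': {'data stored only in an approved geography'}}
```

An empty result means no gaps were found for the areas you listed. Any non-empty entry is either a requirement to revisit with your consumers or a reason to evaluate another provider, which feeds the iterative requirements process described in the Solution Requirements section.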

3.0 Envisioning the Hybrid Cloud Solution

After clearly defining the problem you’re trying to solve, you can begin to define a solution to the problem that satisfies your consumer’s requirements and fits the constraints of the environment in which you’ll implement your solution.

3.1 Solution Definition

To solve the problems previously identified, many organizations are beginning to integrate infrastructure cloud services from external providers into their environments. In many organizations today, a department within the organization owns and manages network, compute (virtual machine), and storage technical capabilities. The people in this department may provide these technical capabilities for use by people in other departments within the organization, and/or, with additional technical capabilities, provide these capabilities as services, or even cloud services within their environment.

The design considerations in this document are for a solution that enables an organization to:

- Set up an organization-level account and billing with an external provider of cloud infrastructure services, so that its consumers don’t do so at an individual level.

- Allow its consumers to provision new virtual machines with the external provider that have capabilities similar to the capabilities of virtual machines that are provided on premises.

- Allow its consumers to move existing applications that run on the organization’s on-premises network into a public cloud infrastructure as a service offering.

- Allow consumers of applications in the organization to resolve names and authenticate to resources that are running on the external provider’s infrastructure cloud services, just as they do with resources that are running on premises.

- Meet core security, data access control, business continuity, disaster recovery, availability, and scalability requirements.

3.2 Solution Requirements

Before integrating infrastructure cloud services from an external provider with existing infrastructure technical capabilities and/or services to solve the problems that were previously listed, you must first define a number of requirements for doing so, as well as the constraints for integrating the services. Some of the requirements and constraints are defined by the consumers of the capabilities, while others are defined by your existing environment, in terms of existing technical capabilities, services, policies, and processes.

Determining the requirements, constraints, and design for integrating the services is an iterative process. Initial requirements, coupled with the constraints of your environment, may drive an initial design that can’t meet all of the initial requirements, necessitating changes to the initial requirements and the subsequent design. Multiple iterations through requirements definition and solution design are necessary before finalizing the requirements and the design. Therefore, do not expect your first pass through this document to be your last; you’ll find that decisions you make early on can rule out options that you would prefer to select later.

The answers to the questions in this section provide a comprehensive list of requirements for integrating infrastructure cloud services from an external provider with the existing infrastructure technical capabilities and/or services in your environment.

3.2.1 Service Delivery Requirements

Before integrating cloud infrastructure services from an external provider with existing infrastructure technical capabilities and/or services in your environment, you’ll need to work with the consumer(s) of these cloud services in your environment to answer the questions in the sections that follow. The questions are aligned to the Service Delivery processes that are defined in the Cloud Services Foundation Reference Model (CSFRM), which is described in the Reference Model section of this document. The answers you initially get from your consumer(s) become the initial Service Delivery requirements for your design.

However, after you better understand the constraints of your environment and the products and technologies that you will ultimately use to extend your existing infrastructure technical capabilities to an external provider, you will likely find that not all of the initial requirements can be met. As a result, you’ll need to work with your consumer to adjust the initial requirements and continue iterating until you have a final design that satisfies the requirements and the constraints of your environment.

The outcome of this process is a clear definition of the functionality that will be provided, the service level metrics it will adhere to, and the cost at which the functionality will be provided. The service design applies the outcomes of the following questions.

The following table contains questions that you’ll need to address in these areas.

| Service delivery requirements | Questions to ask |
|---|---|
| Demand and capacity management | |
| Availability and continuity management | |
| Information security management | |
| Regulatory and compliance management | |
| Financial management | |

3.2.2 Service Operations Requirements

You have a variety of operational processes that are applied to the delivery of all services and technical capabilities in your environment. As a result, you need to answer the questions in the following sections to determine how the hybrid cloud infrastructure you’re designing will apply to and comply with your operational processes. The questions are aligned to the service operations processes defined in the CSFRM. The answers to these questions become the service operations requirements for the design of your hybrid cloud infrastructure. Questions you need to ask to address these areas are included in the following table.

| Service operations requirements | Questions to ask |
|---|---|
| Request fulfillment | |
| Service asset and configuration management | |
| Change management | |
| Release and deployment management | |
| Access management | |
| Systems administration | |
| Knowledge management | |
| Incident and problem management | |

3.2.3 Management and Support Technical Capability Requirements

Every organization uses a variety of technical capabilities to manage and support services in their environment. As the provider of this service, you need to work with the people in your organization who provide these technical capabilities to determine the answers to the questions in this section. The questions are aligned to the Management and Support Technical Capabilities that are defined in the CSFRM. The answers to these questions become the Management and Support Technical Capability Requirements and constraints for the design of your hybrid cloud infrastructure.

While the introduction of this service may require unique changes to the existing capabilities in your environment, it’s assumed that because such changes start to de-standardize the existing capabilities, they should be avoided whenever possible.

When thinking about management and support technical capabilities, you should ask the questions in the following table.

| Management and support technical capability | Questions to ask |
|---|---|
| Service reporting | |
| Service management | |
| Service monitoring | |
| Configuration management | |
| Fabric management | |
| Deployment and provisioning | |
| Data protection | |
| Network support | |
| Billing | |
| Self-service | |
| Authentication | |
| Authorization | |
| Directory | |
| Orchestration | |

3.2.4 Infrastructure Services Capabilities Requirements

Every organization uses a variety of infrastructure technical capabilities, or infrastructure services, or some combination of the two to host IT services. As the provider of this service, you need to work with the people in your organization who provide these technical capabilities to determine the answers to the questions in this section. The questions are aligned to the Infrastructure Technical Capabilities that are defined in the CSFRM. The answers to these questions become the Infrastructure Technical Capability Requirements and constraints for the design of your hybrid cloud infrastructure.

While the introduction of this service may require unique changes to the existing capabilities in your environment, it’s assumed that because such changes start to de-standardize the existing capabilities, they should be avoided whenever possible.

When considering infrastructure capability requirements, you should start by asking the questions in the following table.

| Infrastructure services requirements | Questions to ask |
|---|---|
| Network | |
| Virtual machine | |
| Storage | |

3.2.5 Infrastructure Technical Capability Requirements

Every organization uses a variety of infrastructure services, or infrastructure technical capabilities, or some combination of the two to host IT services. As the provider of this service, you need to determine how best to use the existing infrastructure services in your environment. The infrastructure services that your environment uses may be provided by your own organization, by external organizations, or some combination of the two. If your environment uses existing internal or external infrastructure (and in almost all cases it will), then your organization’s technical capabilities will be driven by that infrastructure, which supports the infrastructure capabilities that are mentioned in the infrastructure component section.

One of the core tenets of cloud computing is that the infrastructure should be completely transparent to the user. So the users of the cloud service should never know (nor should they care) what the infrastructure services are that support the cloud infrastructure.

The questions in this section are aligned to the Infrastructure component in the CSFRM. The answers to these questions become the Infrastructure Requirements and constraints for the design of your hybrid cloud infrastructure. Note that the CSFRM does not assume that the infrastructure for your environment is provided by your own organization. The infrastructure might be provided by your organization, or it might be provided by an external organization. However, in a hybrid cloud infrastructure, infrastructure is provided by both the company’s IT organization and the public cloud infrastructure provider.

While the introduction of public cloud infrastructure may require unique changes to the existing services in your environment, it’s assumed that because such changes start to de-standardize the existing services, they should be avoided whenever possible.

The following table includes questions you should ask about infrastructure requirements.

| Infrastructure requirements | Questions to ask |
|---|---|
| Network | |
| Compute | |
| Virtualization | |
| Storage | |

3.2.6 Platform Requirements

Some organizations provide platform services that are consumed by application developers and the software services that they develop for the organization. As the provider of this service, you need to determine whether your organization currently provides its own platform services, or uses platform services from external providers. If you do use external providers, you need to know how their service will use the platform services. If your environment has its own existing platform services or uses external services, then your organization’s self-service technical capability will include a service catalog, which includes the list of available services in the environment and the service-level metrics they adhere to.

The questions in this section are aligned to the Platform Services that are defined in the CSFRM. The answers to these questions become the Platform Services Requirements and constraints for the design of your hybrid cloud infrastructure. Note that the CSFRM does not assume that the platform services for your environment are provided by your own organization. The platform services might be provided by your organization, or they might be provided by an external organization.

While the introduction of this service may require unique changes to the existing services in your environment, it’s assumed that because such changes start to de-standardize the existing services, they should be avoided whenever possible.

The following table includes questions you should ask about platform service requirements.

| Platform service requirements | Questions to ask |
|---|---|
| Structured data | |
| Unstructured data | |
| Application server | |
| Middleware server | |
| Service bus | |

4.0 Conceptual Design Considerations

After determining the requirements and constraints for integrating cloud infrastructure services from a public cloud infrastructure provider into your environment, you can begin to design your solution. Before creating a physical design, it’s helpful to first define a conceptual model (commonly referred to as a “reference model”), and some principles that will work together as a foundation for further design.

4.1 Reference Model

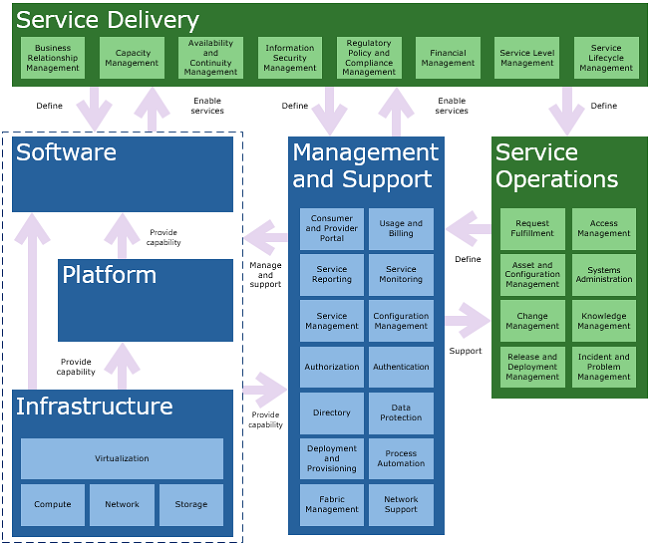

A reference model is a vendor-agnostic depiction of the high level components of a solution. A reference model can provide common terminology when evaluating different vendors’ product capabilities. A reference model also helps to illustrate the relationship of the problem domain it was created for to other problem domains within your environment. As a starting point, we can use the previously mentioned Cloud Services Foundation Reference Model (CSFRM).

We won’t include a detailed explanation of the CSFRM in this document, but if you’re interested in understanding it further, you’re encouraged to read the Microsoft Cloud Services Foundation Reference Model document. It will be available as part of the Microsoft Cloud Services Foundation Reference Architecture guidance set. To stay abreast of the work in this area, please see http://aka.ms/Q6voj9

Although it is from Microsoft, this reference model is vendor-agnostic. It can serve as a foundation for hosting cloud services and can be extended, as appropriate, by anyone. If you decide to use it in your environment, you’re encouraged to adjust it appropriately for your own use. Figure 1 illustrates the CSFRM.

Figure 1: Microsoft Cloud Services Foundation Reference Model

Recall from the Solution Definition section of this document that the solution to the problems defined in the Problem Definition section is to host virtual machines with an external provider so that consumers within the organization can provision new virtual machines in a manner similar to how they provision virtual machines that are hosted on premises today.

The solution also requires that the virtual machines that are hosted by an external provider have capabilities that are similar to the capabilities of the on-premises virtual machines. As mentioned previously, the components, or boxes, in the reference model either change the way existing technical capabilities and/or services are provided in an environment, or introduce new services into an environment.

The Physical Design Considerations section of this document will discuss the design considerations for all of the black-bordered boxes in Figure 1.

4.2 Hybrid Cloud Architectural Principles

After you’ve defined a reference model, you can establish some principles for integrating infrastructure cloud services from an external provider. Principles serve as “guidelines” for physical designs to adhere to. You can use the principles that follow as a starting point for defining your own. They are a combination of principles from the Cloud Services Foundation Reference Architecture (CSFRA) and principles unique to integrating infrastructure cloud services from an external provider.

The Microsoft Private Cloud Reference Architecture (PCRA) provides a number of vendor-agnostic principles, patterns, and concepts to consider before designing a private cloud. Although they were defined with private clouds in mind, they are in fact applicable to any cloud-based solution. You are encouraged to read through the document, Private Cloud Principles, Concepts and Patterns, in full, as the information in it contains valuable insight for almost any type of cloud infrastructure planning, including the hybrid cloud infrastructure that is discussed in this document.

As mentioned previously, designing a cloud infrastructure may be different from how you’ve historically designed infrastructure. In the past, you often purchased and managed individual servers with specific hardware specifications to meet the needs of specific workloads. Because these workloads and servers were unique, automation was often difficult, if for no other reason than the sheer volume of variables within the environment. You may have also had different service level requirements for the different workloads you were planning infrastructure for, often causing you to plan for redundancy in every hardware component.

When designing a cloud infrastructure for a mixture of workload types with standardized cost structures and service levels, you need to consider a different type of design process. Consider the following differences between how you planned for and designed unique, independent infrastructures for specific workloads in the past, and how you might plan for and design a highly standardized infrastructure that supports a mixture of workloads for the future.

A hybrid cloud infrastructure introduces new variables, because even if you currently host a private cloud infrastructure on premises, you are not responsible for enabling the essential cloud characteristics in the public cloud infrastructure service provider’s side of the solution. And if you don’t have a private cloud on premises, you can still have a hybrid cloud infrastructure. In that case, you’re not at all responsible for providing any of the essential characteristics of cloud computing, because the only cloud you’re working with is the one on the public cloud infrastructure side.

The following table compares several design aspects of a cloud-based solution with how they are typically handled in a traditional data center environment.

| Design aspect | Non-cloud infrastructure | Cloud infrastructure |

|---|---|---|

| Hardware acquisition | Purchase individual servers and storage with unique requirements to support unique workload requirements. | Private Cloud: Purchase a collection (in a blade chassis or a rack) of servers, storage, and network connectivity devices pre-configured to act as one large single unit with standardized hardware specifications for supporting multiple types of workloads. These are referred to as scale units. Adding capacity to the data center by purchasing scale units, rather than individual servers, lowers the setup and configuration time and costs when acquiring new hardware, although it needs to be balanced with capacity needs, acquisition lead time, and the cost of the hardware.

Public Cloud: No hardware acquisition costs other than possible gateway devices that are required to connect the corporate network to the cloud infrastructure service provider’s network. |

| Hardware management | Manage individual servers and storage resources, or potentially aggregations of hardware that collectively support an IT service. | Private Cloud: Manage an infrastructure fabric. To illustrate this simplistically, think about taking all of the servers, storage, and networking that support your cloud infrastructure and managing them like one computer. While most planning considerations for fabric management are not addressed in this guide, it does include considerations for homogenization of fabric hardware as a key enabler for managing it like a fabric.

Public Cloud: No need to manage new in-house servers or storage devices—host servers and storage infrastructure are managed by the public cloud infrastructure service provider. |

| Hardware utilization | Acquire and manage separate hardware for every application and/or business unit in the organization. | Private Cloud: Consolidate hardware resources into resource pools to support multiple applications and/or business units as part of a general-purpose cloud infrastructure.

Public Cloud: Set up virtual machines and virtual networks for specific applications and business units. No hardware acquisition required. |

| Infrastructure availability and resiliency | Purchase infrastructure with redundant components at many or all layers. In a non-cloud infrastructure, this was typically the default approach, as workloads were usually tightly coupled with the hardware they ran on, and having redundant components at many layers was generally the only way to meet service level guarantees. | Private Cloud: With a fabric that is designed to run a mixture of workloads that can move dynamically from physical server to physical server, and a clear separation between consumer and provider responsibilities, the fabric can be designed to be resilient, and doesn’t require redundant components at as many layers, which can decrease the cost of your infrastructure. This is referred to as designing for resiliency over redundancy. To illustrate, if a workload running in a virtual machine can be migrated from one physical server to another with little or no downtime, how necessary is it to have redundant NICs and/or redundant storage adapters in every server, as well as redundant switch ports to support them? To design for resiliency, you’ll first need to determine what the upgrade domain (portion of the fabric that will be upgraded at the same time) and physical fault domain (portion of the fabric that is most likely to fail at the same time) are for your environment. This will help you determine the reserve capacity necessary for you to meet the service levels you define for your cloud infrastructure.

Public Cloud: Infrastructure resiliency is built into the public cloud infrastructure service provider’s offering. You don’t need to purchase additional equipment or add redundancy. |
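The reserve-capacity step described in the table above can be sketched as a small calculation. This is a minimal sketch, not a sizing method from this guide: it assumes, hypothetically, that the fabric must stay within its service levels while one upgrade domain is offline for patching and one physical fault domain fails at the same time. The host counts are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class FabricReserve:
    reserve_hosts: int
    usable_hosts: int
    usable_fraction: float


def plan_reserve(total_hosts: int, upgrade_domain_hosts: int, fault_domain_hosts: int) -> FabricReserve:
    """Size the reserve capacity for a fabric designed for resiliency over redundancy.

    Hypothetical sizing rule: one upgrade domain may be offline for maintenance
    while one physical fault domain fails, so both are held in reserve.
    """
    if min(total_hosts, upgrade_domain_hosts, fault_domain_hosts) < 0:
        raise ValueError("host counts must be non-negative")
    reserve = upgrade_domain_hosts + fault_domain_hosts
    if reserve >= total_hosts:
        raise ValueError("reserve would consume the entire fabric")
    usable = total_hosts - reserve
    return FabricReserve(reserve, usable, usable / total_hosts)


# Hypothetical fabric: 32 hosts, 4-host upgrade domain, 8-host fault domain (one rack).
print(plan_reserve(32, 4, 8))
```

Under these assumptions, 12 of the 32 hosts are held in reserve, leaving 62.5 percent of the fabric available for workloads.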

A hybrid cloud infrastructure shares all of the principles of a private cloud infrastructure. Principles provide general rules and guidelines to support the evolution of a cloud infrastructure. They are enduring, seldom amended, and inform and support the way a cloud fulfills its mission and goals. They should also be compelling and aspirational in some respects because there needs to be a connection with business drivers for change. These principles are often interdependent, and together they form the basis on which a cloud infrastructure is planned, designed, and created.

After you’ve defined a reference model, you can then define principles for integrating infrastructure cloud services from a public provider with your on-premises services and technical capabilities. Principles serve as guidelines that physical designs should adhere to, and they are often aspirational, because fully achieving them takes time and effort. The Microsoft Cloud Services Foundation Reference Architecture - Principles, Concepts, and Patterns article lists several principles that can be used as a starting point when defining principles for both private and hybrid cloud services. While all of the Microsoft Cloud Services Foundation Reference Architecture principles are relevant to designing hybrid cloud services, the principles listed below are the most relevant, and they are applied specifically to hybrid cloud services:

4.2.1 Perception of Infinite Capacity

Statement:

From the consumer’s perspective, a cloud service should provide capacity on demand, only limited by the amount of capacity the consumer is willing to pay for.

Rationale:

The rationale for each principle in this section is the same as the rationale given for it in the Microsoft Cloud Services Foundation Reference Architecture - Principles, Concepts, and Patterns article, so the rationales are not restated here.

Implications:

Combining capacity from a public cloud with your existing private cloud capacity can typically help you achieve this principle more quickly, easily, and cost-effectively than adding capacity to your private cloud alone. One reason is that you no longer need to manage the hardware acquisition process or absorb its delays; that process is now the public provider's responsibility.

4.2.2 Perception of Continuous Service Availability

Statement:

From the consumer’s perspective, a cloud service should be available on demand from anywhere, on any device, and at any time.

Implications:

Designing for availability and continuity often requires some amount of resources that normally sit unused and are consumed only when failures occur. Using on-demand resources from a public provider in your availability and continuity designs can typically help you achieve this principle more cost-effectively than using private cloud resources alone. For example, if your organization doesn't currently have its own physical disaster recovery site and is evaluating whether to build one, consider the real-estate, server, and software costs that such a site would require, and compare them against the cost of using a public provider for disaster recovery. In most cases, the savings from using a public provider for disaster recovery can be significant.
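The comparison suggested above becomes simple arithmetic once each option is annualized. A minimal sketch follows. Every figure is a placeholder, not a real price, and the cost categories (real estate, servers amortized over their service life, software, standby charges, and compute billed only during failover tests or real failovers) are assumptions about how you might model each option.

```python
def annual_dr_site_cost(real_estate: float, server_capex: float,
                        server_life_years: int, software: float) -> float:
    """Annualized cost of building and running a dedicated DR site."""
    # Spread the server purchase across its expected service life.
    return real_estate + server_capex / server_life_years + software


def annual_cloud_dr_cost(standby_monthly: float, compute_hours: float,
                         hourly_compute: float) -> float:
    """Annual cost of DR at a public provider.

    Standby charges (for example, replicated storage) accrue every month;
    compute is billed only for the hours that failover tests or real failovers run.
    """
    return standby_monthly * 12 + compute_hours * hourly_compute


# Placeholder figures for illustration only; substitute your own quotes.
site = annual_dr_site_cost(real_estate=120_000, server_capex=300_000,
                           server_life_years=3, software=50_000)
cloud = annual_cloud_dr_cost(standby_monthly=4_000, compute_hours=200,
                             hourly_compute=25)
print(f"DR site: {site:,.0f}  public provider: {cloud:,.0f}  difference: {site - cloud:,.0f}")
```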

4.2.3 Optimization of Resource Usage

Statement:

The cloud should automatically make efficient and effective use of infrastructure resources.

Implications:

Some service components have requirements, such as specific security or regulatory requirements, that allow them to be hosted only within a private cloud. Other service components have requirements that allow them to be hosted on a public cloud. Individual service components may support several different services within an organization, and each component may be hosted on either a private or a public cloud. According to Microsoft's The Economics of the Cloud whitepaper, hosting service components on a private cloud can cost up to 10 times more than hosting them with a public cloud provider. As a result, augmenting private cloud resources with public cloud resources can help organizations reserve their private cloud capacity for the components that must run there, and so use that capacity more efficiently.
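The placement reasoning above can be sketched as a simple assignment rule. This is a minimal sketch under a hypothetical policy (restricted components must run on the private cloud; all others go to the public provider); the component names and vCPU figures are invented for illustration, and a real placement decision would weigh more factors than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    vcpus: int
    private_only: bool  # True when security or regulatory requirements apply


def place(components: list[Component], private_vcpus_free: int) -> dict[str, str]:
    """Assign each service component to the 'private' or 'public' cloud.

    Hypothetical policy: restricted components must run on the private cloud;
    all others go to the public provider, leaving private capacity for the
    components that can run nowhere else.
    """
    placement: dict[str, str] = {}
    for component in components:
        if not component.private_only:
            placement[component.name] = "public"
            continue
        if component.vcpus > private_vcpus_free:
            raise RuntimeError(f"not enough private capacity for {component.name}")
        private_vcpus_free -= component.vcpus
        placement[component.name] = "private"
    return placement


components = [
    Component("hr-database", vcpus=8, private_only=True),
    Component("web-frontend", vcpus=4, private_only=False),
    Component("reporting", vcpus=16, private_only=True),
]
print(place(components, private_vcpus_free=32))
```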

4.2.4 Incentivize Desired Behavior

Statement:

Enterprise IT service providers must ensure that their consumers understand the cost of the IT resources that they consume so that the organization can optimize its resources and minimize its costs.

Implications:

While this principle is important in private cloud scenarios, it's often difficult to adhere to if the IT organization doesn't fully understand its actual costs to provide services, or if consumers of private cloud services are only shown their consumption rather than actually charged for it. When you use public cloud resources, however, consumption is measured by the public provider, its costs are clear, and the consumer is billed on a regular basis. As a result, the actual cost of consuming public cloud services may be more tangible and measurable to an organization than the cost of consuming private cloud services, and these clear costs may make it easier to incentivize desired behavior from internal consumers.

4.2.5 Create a Seamless User Experience

Statement:

Within an organization, consumers should not need to know which provider delivers a cloud service, and should have similar experiences with all services provided to them.

Implications:

Many organizations have spent several years integrating and standardizing their systems to provide seamless experiences for their users, and don't want to return to multiple authentication mechanisms and inconsistent user interfaces when they integrate public cloud resources with their private cloud resources. The many user interfaces and authentication mechanisms used across different applications have already made this principle difficult to achieve, and the user interfaces and authentication mechanisms used by multiple public cloud service providers can make it more difficult still. It's important to define clear requirements against which to evaluate public cloud providers. These requirements may include specific authentication mechanisms, user interfaces, and other requirements that public providers must meet before you incorporate their services into your hybrid service designs.

4.3 Hybrid Cloud Architectural Patterns

Patterns are specific, reusable ideas that have been proven solutions to commonly occurring problems. The Microsoft Cloud Services Foundation Reference Architecture - Principles, Concepts, and Patterns article lists and defines the patterns below. In this article, the definitions are not repeated, but considerations for applying the patterns specifically to hybrid infrastructure and service design are discussed for each pattern.

4.3.1 Resource Pooling

Problem: When dedicated infrastructure resources are used to support each service independently, their capacity is typically underutilized. This leads to higher costs for both the provider and the consumer.

Solution: When designing hybrid cloud services, you may have pools of resources on premises and may treat the resources at a public provider as a separate pool. Further, you may divide public provider resources into separate resource partition pools for reasons such as service class, systems management, or capacity management, just as you might for your on-premises resources. For example, an organization may define two separate service class partition resource pools: one within its private cloud, which might host medium and high business impact information, and one in its public cloud, which might host only low business impact information.
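The two-pool example above amounts to a lookup from a workload's business impact classification to a service class partition. A minimal sketch, assuming hypothetical pool names and the low/medium/high classification used in the example:

```python
from enum import Enum


class BusinessImpact(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical pool names for the two service class partitions in the example.
POOL_FOR_IMPACT = {
    BusinessImpact.LOW: "public-lbi-pool",
    BusinessImpact.MEDIUM: "private-mbi-hbi-pool",
    BusinessImpact.HIGH: "private-mbi-hbi-pool",
}


def select_pool(classification: str) -> str:
    """Return the resource pool for a workload's business impact classification."""
    # BusinessImpact("low") looks the member up by value; unknown labels raise ValueError.
    return POOL_FOR_IMPACT[BusinessImpact(classification)]


print(select_pool("low"), select_pool("high"))
```

Keeping the mapping in one table makes it easy to add further partitions (for example, by systems management or capacity management) without changing the selection logic.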

4.3.2 Scale Unit

Problem: Purchasing individual servers, storage arrays, network switches, and other cloud infrastructure resources requires procurement, installation, and configuration overhead for each individual resource.

Solution: When designing physical infrastructure, application of this pattern usually encompasses purchasing pre-configured collections of several physical servers and storage. While a public provider's scale unit definition strategy is essentially irrelevant to its consumers, you may still choose to define units of scale for the resources you utilize with a public cloud provider. With a public provider, since you typically pay for every resource consumed, and you have no wait time for new capacity like you do when adding capacity to your private cloud, you may decide that your compute scale unit, for example, is an individual virtual machine. As you near capacity thresholds, you can simply add and remove individual virtual machines, as necessary.

4.3.3 Capacity Plan

Problem: Eventually every cloud infrastructure runs out of physical capacity. This can cause performance degradation of services, the inability to introduce new services, or both.

Solution: The capacity plan in a hybrid solution design incorporates all the same elements as a capacity plan for an on-premises-only solution design. Service designers, however, will likely find it much less effort to add or remove capacity on demand when using resources from a public provider, if for no other reason than that doing so doesn't require them to order new hardware and wait for it to arrive. Meeting spikes in capacity demand will also often prove more cost-effective with public provider resources than with dedicated on-premises resources alone, because when the spike is over, you no longer pay for the extra capacity that was required to meet it. Some public providers also offer auto-scaling capabilities, where their systems automatically scale service component tiers based on user-defined thresholds.
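A threshold-based scaling rule of the kind described above can be sketched as follows, treating an individual virtual machine as the compute scale unit, as suggested in the Scale Unit pattern. The CPU thresholds and instance limits are hypothetical user-defined values; this is not any provider's auto-scaling API.

```python
def desired_instances(current: int, avg_cpu_percent: float, *,
                      scale_out_at: float = 75.0, scale_in_at: float = 30.0,
                      min_instances: int = 2, max_instances: int = 10) -> int:
    """Return the new instance count for one service tier.

    Adds or removes one virtual machine at a time (the scale unit) and keeps
    the result within the configured minimum and maximum.
    """
    if not scale_in_at < scale_out_at:
        raise ValueError("scale-in threshold must be below scale-out threshold")
    if avg_cpu_percent > scale_out_at:
        target = current + 1
    elif avg_cpu_percent < scale_in_at:
        target = current - 1
    else:
        target = current
    return max(min_instances, min(max_instances, target))


print(desired_instances(3, 90.0), desired_instances(3, 10.0), desired_instances(3, 50.0))
```

The gap between the two thresholds keeps the tier from oscillating when load hovers near a single trigger value.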

4.3.4 Health Model

Problem: If any component used to provide a service fails, it can cause performance degradation or unavailability of services.

Solution: Initially, you might think that defining health models for hybrid services will be more difficult than defining health models for services whose components are all hosted on your private cloud. Part of the reason for this may be a fear of the unknown. You understand your private cloud systems and can troubleshoot them in depth if necessary. When using a public provider, however, you have little understanding of the underlying hardware configuration and no ability to troubleshoot it. While this might initially be concerning, as your confidence in a public provider grows you'll likely find that defining health models for service components hosted on a public cloud is even easier than for components hosted on your private cloud, because all of the hardware configuration and troubleshooting responsibility now belongs to the public provider, not to you. As a result, your health models will have significantly fewer failure or degradation conditions, which also means fewer conditions that your systems must monitor for and remediate. Some public cloud providers offer service level agreements (SLAs) that include an availability level that they commit to meet each month. As long as your provider meets its SLA, you no longer need to be concerned with how it meets the SLA, only that it did. While this is true when consuming infrastructure as a service (IaaS) functionality from a public provider, it's even more true when consuming platform as a service (PaaS) capabilities.
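Checking whether a provider met a monthly availability commitment reduces to a downtime budget. A minimal sketch, assuming a 30-day month by default; the 99.95 percent figure is an example target only, not a specific provider's commitment.

```python
MINUTES_PER_DAY = 24 * 60


def monthly_availability(downtime_minutes: float, days_in_month: int = 30) -> float:
    """Percentage of the month during which the service was available."""
    total = days_in_month * MINUTES_PER_DAY
    return 100.0 * (total - downtime_minutes) / total


def allowed_downtime_minutes(sla_percent: float, days_in_month: int = 30) -> float:
    """Downtime budget implied by a monthly availability commitment."""
    return days_in_month * MINUTES_PER_DAY * (100.0 - sla_percent) / 100.0


def sla_met(downtime_minutes: float, sla_percent: float, days_in_month: int = 30) -> bool:
    """True when measured availability meets or exceeds the committed level."""
    return monthly_availability(downtime_minutes, days_in_month) >= sla_percent


# 99.95% is an example target only, not a specific provider's commitment.
print(f"{allowed_downtime_minutes(99.95):.1f} minutes allowed; "
      f"met with 20 minutes down: {sla_met(20, 99.95)}")
```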

4.3.5 Application

Problem: Not all applications are optimized for cloud infrastructures and may not be able to be hosted on cloud infrastructures.

Solution: Not all public cloud service providers support the same application patterns. For example, if you have an application that relies upon Microsoft Windows Server Failover Clustering as its high-availability mechanism, this application can be thought of as using the stateful application pattern. This application could be deployed with some public service providers, but not with others. Among other reasons, Windows Server Failover Clustering requires some form of shared storage, a capability that few public service providers currently support. It's important to understand which application patterns are used within the organization. It's also important to identify which application patterns a public provider supports. It's only possible to migrate applications that were designed with patterns supported by the public service provider.
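Comparing your application inventory against a provider's supported patterns is essentially a set difference. A minimal sketch, assuming a hypothetical inventory; the pattern names are illustrative labels, not a standard taxonomy, and the provider's supported set would come from your own evaluation.

```python
def missing_patterns(apps: dict[str, set[str]], provider_supported: set[str]) -> dict[str, set[str]]:
    """Map each application to the patterns it needs that the provider lacks.

    An empty set means every pattern the application relies on is supported.
    """
    return {app: needed - provider_supported for app, needed in apps.items()}


# Hypothetical inventory; pattern names are illustrative labels only.
apps = {
    "intranet-web": {"stateless-web-farm"},
    "finance-sql-cluster": {"stateful", "failover-cluster-shared-storage"},
}
provider = {"stateless-web-farm", "stateful"}

for app, gaps in missing_patterns(apps, provider).items():
    print(app, "can migrate" if not gaps else f"blocked by {sorted(gaps)}")
```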

4.3.6 Cost Model

Problem: Consumers tend to use more resources than they really need if there's no cost to them for doing so.

Solution: While a public provider will charge your organization based on consumption, you must decide what costs you'll show or charge your internal consumers for the resources. You will likely show or charge a higher cost to your internal consumers than you were charged by the public provider. This is largely due to the fact that you will probably integrate the public cloud provider's functionality with your private cloud functionality, and that integration most likely has a cost. For example, you probably currently show or charge your internal consumers when they use a virtual machine on your private cloud. You may provide some type of single sign-on capability to your internal consumers, and offer that capability with the virtual machines that are hosted on your private cloud. As a result, some portion of the cost that you show or charge internal consumers for that virtual machine is the cost to provide the single sign-on capability. A similar cost should be added to the virtual machines that are hosted with a public provider, if you also offer the same single sign-on capability for them. You may add further costs to support additional capabilities such as monitoring, backup, or other capabilities for public cloud virtual machines too.
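The internal rate described above is the provider's charge plus the per-VM cost of the capabilities you integrate. A minimal sketch, assuming a simple additive model and placeholder amounts; your cost model might instead allocate integration costs by usage or apply a percentage markup.

```python
from dataclasses import dataclass, field


@dataclass
class PublicVmRate:
    provider_monthly: float  # amount the public provider bills for the VM
    # Monthly per-VM cost of capabilities you integrate (SSO, monitoring, backup, ...).
    integration_monthly: dict[str, float] = field(default_factory=dict)

    def internal_monthly(self) -> float:
        """Rate to show or charge internal consumers for this VM."""
        return self.provider_monthly + sum(self.integration_monthly.values())


# Placeholder amounts for illustration only.
vm = PublicVmRate(90.0, {"single sign-on": 6.0, "monitoring": 4.0, "backup": 10.0})
print(vm.internal_monthly())
```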

5.0 Physical Design Considerations

With an understanding of the requirements detailed in the Envisioning the Hybrid Cloud Solution section of this document, and the reference model and principles, you can select appropriate products and technologies to implement the hybrid cloud infrastructure design. The following table lists the hardware vendor-agnostic and Microsoft products, technologies, and services that can be used to implement various entities from the reference model that is defined in this document.

| Reference model entity | Product/technology/external service |
|---|---|
| Network (support and services) | |
| Authentication (support and services) | |
| Directory (support and services) | |
| Compute (support and services) | |
| Storage (support and services) | |
| Network infrastructure | |
| Compute infrastructure | |
| Storage infrastructure | |

After selecting the products, technologies, and services to implement the hybrid cloud infrastructure, you can continue designing the hybrid cloud infrastructure solution. The sections that follow outline a logical design process for the service, but, as mentioned in the Envisioning the Hybrid Cloud Solution section of this document, the design and requirements definition process is iterative until it’s complete. As a result, decisions you make in later sections of this document may require you to re-evaluate decisions you made in earlier sections.

The primary sub-sections of this section address the “functional” design for the service and align to entities in the reference model. Lower-level sub-sections then address specific design considerations, which may range from functional to service-level considerations.

The remainder of the document addresses design considerations and the products, technologies, and services listed in the preceding table. In cases where multiple Microsoft products, technologies, and services can be used to address different design considerations, the trade-offs between them are discussed. In addition to Microsoft products, technologies, and services, relevant vendor-agnostic hardware technologies are also discussed.

5.1 Overview

The physical design of the hybrid cloud infrastructure brings together the answers to the questions that were presented earlier in the document and the technology capabilities and options that are made available to you. The physical design that is discussed in this document uses a Microsoft–based, on-premises infrastructure and a Windows Azure Infrastructure Services–based public cloud infrastructure component. With that said, the design options and considerations can be applied to any on-premises and public cloud infrastructure provider combination.

When considering the hybrid cloud infrastructure from the physical perspective, the primary issues that you need to address include:

- Public cloud infrastructure service account acquisition and billing considerations

- Public cloud infrastructure service provider authentication and authorization considerations

- Network design considerations

- Storage design considerations

- Compute design considerations

- Application authentication and authorization considerations

- Management and support design considerations

We will discuss each of these topics in detail and will discuss the advantages and disadvantages of each of the options. In many cases, you will find that there is a single option. When this is true, we will discuss capabilities and possible limitations and how you can work with or around the limitations.

5.2 Service Account Acquisition and Billing Considerations for Public Cloud Infrastructure

When designing a hybrid cloud infrastructure, the first issue you need to address is how to obtain and provision accounts with the public cloud infrastructure service provider. In addition, if the public cloud infrastructure service provider supports multiple payment options, you will need to determine which payment option best fits your needs now, and whether, in the future, you might want to reconsider the payment options that you’ve selected.

For example, Windows Azure offers several payment plans:

- Pay as you go—no up-front time commitment and you can cancel at any time

- 6-months—pay monthly for six months

- 6-months—pay for six months up front

- 12-months—pay monthly for twelve months

- 12-months—pay for twelve months up front

Pay as you go is the most expensive option; discounts are offered for each of the other four plans. You can also choose to pay for the service by credit card or to have your organization invoiced.

For more information on Windows Azure pricing plans, see Windows Azure Purchase Options.

You also need to consider whether the person who owns the account (and is therefore responsible for paying for the service) should also have administrative control over the services that run on the public side of your hybrid cloud infrastructure. In most cases, payment duties and administrative duties will be separate, so determine whether your cloud service provider supports this type of role-based access control.

For example, Windows Azure has the notions of accounts and subscriptions. A Windows Azure subscription has two aspects:

- The Windows Azure account, through which resource usage is reported and services are billed.

- The subscription itself, which governs access to and use of the Windows Azure services that are subscribed to. The subscription holder manages services (for example, Windows Azure, SQL Azure, Storage) through the Windows Azure Platform Management Portal.

A single Windows Azure account can host multiple subscriptions, which can be used by multiple teams responsible for the hybrid cloud infrastructure if you need additional partitioning of your services.

It’s important to be aware that using a single subscription for multiple projects can be challenging from an organizational and billing perspective. The Windows Azure management portal provides no method of viewing only the resources used by a single project, and there is no way to automatically break out billing on a per-project basis. While you can somewhat alleviate organizational issues by giving similar names to all services and resources that are associated with a project (for example, HRHostedSvc, HRDatabase, HRStorage), this does not help with billing.
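Consistent name prefixes at least make it easy to inventory a project's resources programmatically. A minimal sketch, assuming a hypothetical list of resource names (for example, one you maintain or export yourself) and the prefix convention from the example above. As noted above, this helps with organization only; it does not break out billing.

```python
from collections import defaultdict


def group_by_project(resource_names: list[str], prefixes: list[str]) -> dict[str, list[str]]:
    """Group resource names by project prefix; names with no known prefix are 'unassigned'."""
    groups: defaultdict[str, list[str]] = defaultdict(list)
    # Check longer prefixes first so a more specific prefix wins over a shorter one.
    ordered = sorted(prefixes, key=len, reverse=True)
    for name in resource_names:
        project = next((p for p in ordered if name.startswith(p)), "unassigned")
        groups[project].append(name)
    return dict(groups)


names = ["HRHostedSvc", "HRDatabase", "HRStorage", "FinWebVM", "TestVM"]
print(group_by_project(names, ["HR", "Fin"]))
```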

Due to the challenges with granularity of access, organization of resources, and project billing, you may want to create multiple subscriptions and associate each subscription with a different project. Another reason to create multiple subscriptions is to separate the development and production environments. A development subscription can allow administrative access by developers while the production subscription allows administrative access only to operations personnel.

Separate subscriptions provide greater clarity in billing, greater organizational clarity when managing resources, and greater control over who has administrative access to a project. However, this approach can be more costly than using a single subscription for all of your projects. You should carefully weigh your requirements against the cost of multiple subscriptions.

For more information on Windows Azure accounts and subscriptions, see What is an Azure Subscription.

For more information on account acquisition and subscriptions, see Provisioning Windows Azure for Web Applications.

You need to determine how the public cloud service provider partitions the services for which you will be billed. For example:

- Does the public cloud infrastructure service provider surface all cloud infrastructure services as part of a single offering?

- Does the public cloud infrastructure service provider require that you purchase each infrastructure service separately?

- Does the public cloud infrastructure provider provide their entire range of cloud infrastructure services as a single entity, but also make available some value-added services that you can purchase separately?

Note that in all three of these cases, the public cloud service provider would bill based on usage, because measured service is an essential characteristic of cloud computing.

For example, Windows Azure Infrastructure Services is a collection of unique service offerings within the entire portfolio of Azure service offerings. Specifically, Azure Infrastructure Services includes Azure Virtual Machines and Azure Virtual Networks. In addition, Azure Virtual Networks takes advantage of some of the PaaS components of the system to enable the site-to-site and point-to-site VPN gateway. However, when you obtain an Azure account and set up a subscription, all of the Windows Azure services are available to you with the exception of some additional value-added services that you can purchase separately.

5.3 Network Design Considerations

In most cases, a hybrid cloud infrastructure requires you to extend your corporate network to the cloud infrastructure service provider’s network so that communications are possible between the on-premises and off-premises components. There are several primary issues that you need to consider when designing the networking component to support the hybrid cloud infrastructure. These include:

- On-premises physical network design

- Inbound connectivity to the public infrastructure service network

- Load balancing inbound connections to public infrastructure service virtual machines

- Name resolution for the public infrastructure service network

This section expands on each of these issues.

5.3.1 On-Premises Physical Network Design

You need to consider the following issues when deciding what changes you might need to make to the current physical network:

- How will you connect the on-premises network to the public infrastructure services network?

- What path should the on-premises users take to access resources in the public cloud infrastructure provider’s network?

- What network access controls will you use to control access between on-premises and off-premises resources?

5.3.1.1 Network Connection Between On-Premises and Off-Premises Resources

There are typically three options available to you to connect on-premises and off-premises resources:

- Site-to-site VPN connection

- Dedicated WAN link

- Point-to-site connection

Site-to-Site VPN

A site-to-site VPN connection enables you to connect entire networks together. Each side of the connection hosts at least one VPN gateway, which essentially acts as a router between the on-premises and off-premises networks. The routing infrastructure on the corporate network is configured to use the IP address of the local VPN gateway to reach the network ID(s) on the public cloud provider’s network that host the virtual machines in the hybrid cloud solution.
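The routing behavior described above can be sketched as a longest-prefix-match lookup on the corporate side. This is a minimal sketch: the routing table, the provider network ID (172.16.0.0/16), and the next-hop addresses are all hypothetical, and real routers perform this lookup in their forwarding tables rather than in application code.

```python
import ipaddress

# Hypothetical corporate routing table. The network ID hosted by the public
# provider (172.16.0.0/16 here) points at the on-premises VPN gateway.
ROUTES = {
    ipaddress.ip_network("10.0.0.0/8"): "10.1.0.1",        # corporate core router
    ipaddress.ip_network("172.16.0.0/16"): "10.1.0.254",   # local VPN gateway
    ipaddress.ip_network("0.0.0.0/0"): "10.1.0.2",         # Internet edge
}


def next_hop(destination: str) -> str:
    """Return the next hop for a destination using longest-prefix match."""
    address = ipaddress.ip_address(destination)
    matches = [network for network in ROUTES if address in network]
    best = max(matches, key=lambda network: network.prefixlen)
    return ROUTES[best]


print(next_hop("172.16.3.4"))
```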

For more information about site-to-site VPNs, see What is VPN?

Windows Azure Virtual Networks

Windows Azure enables you to create a virtual network within the Windows Azure infrastructure and place virtual machines into it. When virtual machines are placed into an Azure Virtual Network, Windows Azure assigns their IP addresses automatically, so all virtual machines must be configured as DHCP clients. However, even though the virtual machines are configured as DHCP clients, they keep their IP addressing information for the lifetime of the virtual machine.

Note:

The only time when a virtual machine will not keep an IP address for the life of the virtual machine on an Azure Virtual Network is when a virtual machine might need to be moved as a consequence of “service healing.” If a virtual machine is created in the Windows Azure portal, and it then experiences service healing, that virtual machine is assigned a new IP address. You can avoid this by creating the virtual machine by using PowerShell instead of creating it in the Windows Azure portal. For more information on service healing, please see Troubleshooting Deployment Problems Using the Deployment Properties.

Virtual machines on the same Azure Virtual Network will be able to communicate with one another only if those virtual machines are part of the same cloud service. If the virtual machines are on the same virtual network and are not part of the same cloud service, those virtual machines will not be able to communicate with one another directly over the Azure Virtual Network connection.

You can use an IPsec site-to-site VPN connection to connect your corporate network to one or more Azure Virtual Networks. Windows Azure supports several VPN gateway devices that you can put on your corporate network to connect your corporate network to an Azure Virtual Network. The on-premises gateway device must have a public address and must not be placed behind a NAT device.

For more information on which VPN gateway devices are supported, see About VPN Devices for Virtual Network.

Note:

While you can connect your on-premises network to multiple Azure Virtual Networks, you cannot connect a single Azure Virtual Network to multiple on-premises points of presence.

A single Azure Virtual Network can be assigned IP addresses in multiple network IDs. You can obtain a summarized block of addresses that represents the number of addresses you anticipate you will need and then you can subnet that block. However, connections between the IP subnets are not routed, and therefore there are no router ACLs that you can apply between the IP subnets.

Even so, you should still consider whether you want multiple subnets. One reason to use multiple subnets is accounting: virtual machines that fill certain roles within your hybrid cloud infrastructure are placed on specific subnets that are assigned to those roles. And although there are no router ACLs between subnets, you can use Network ACLs to control traffic between virtual machines in an Azure Virtual Network. For more information on Network ACLs in Azure Virtual Networks, see setting an Endpoint ACL on a Windows Azure VM.
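Carving a summarized address block into per-role subnets, as described above, can be sketched with Python's standard `ipaddress` module. The block (10.20.0.0/20), the /24 subnet size, and the role names are hypothetical; choose values that fit your own addressing plan before you create any virtual machines.

```python
import ipaddress


def carve_subnets(block: str, new_prefix: int, roles: list[str]) -> dict:
    """Allocate one subnet of the given prefix length per role from a summarized block."""
    subnets = ipaddress.ip_network(block).subnets(new_prefix=new_prefix)
    allocation = {}
    for role in roles:
        try:
            allocation[role] = next(subnets)
        except StopIteration:
            raise ValueError(
                f"{block} has too few /{new_prefix} subnets for {len(roles)} roles"
            ) from None
    return allocation


for role, subnet in carve_subnets("10.20.0.0/20", 24, ["front-end", "application", "data"]).items():
    print(role, subnet)
```

A /20 block yields sixteen /24 subnets, so this plan leaves room for additional roles later.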

You should also consider using multiple Azure Virtual Networks to support your hybrid cloud infrastructure. While different Azure Virtual Networks can’t communicate directly with each other over the Azure network fabric, they can communicate by looping back through the on-premises VPN gateway. Keep in mind that this option incurs egress traffic costs, so you need to assess those costs when considering it. The same applies when you host some virtual machines in the Windows Azure PaaS services (which are part of a different cloud service than the virtual machines): the virtual machines in the PaaS services need to loop back through the on-premises VPN gateway to reach the machines in the Azure Infrastructure Services Azure Virtual Networks.

You should decide on the IP addressing scheme, whether to use subnets, and the number of Azure Virtual Networks you will need before creating any virtual machines. After these decisions are made, you should create or move virtual machines onto those virtual networks.

Another important consideration is that Azure site-to-site VPN uses pre-shared keys to support the IPsec connection. Some enterprises may not consider pre-shared keys an enterprise-ready approach for supporting IPsec site-to-site VPN connections, so confer with your security team to determine whether this approach is consistent with corporate security policy. For more information on this issue, see Preshared Key Authentication. Note that your IT organization may consider the security and management issues for pre-shared keys to be a remote access VPN client problem only.

For more information on Azure Virtual Networks and how to configure and manage them, see Windows Azure Virtual Network Overview.

Dedicated WAN Link

A dedicated WAN link is a permanent telco connection that is established directly between the on-premises network and the cloud infrastructure service provider’s network. Unlike the site-to-site VPN, which represents a virtual link layer connection over the Internet, the dedicated WAN link enables you to create a true link layer connection between your corporate network and the service provider’s network.

For more information on dedicated WAN links, see Wide Area Network.

At the time this document was written, Windows Azure did not support dedicated WAN link connections between the on-premises network and Azure Virtual Networks.

Point-to-Site Connections

A point-to-site connection (typically referred to as a remote access VPN client connection) enables you to connect individual devices to the public cloud service provider’s network. For example, suppose you have a hybrid cloud infrastructure administrator working from home from time to time. The administrator could establish a point-to-site connection from his computer in his home to the entire public cloud service provider’s network that hosts the virtual machines for his organization.

For more information on remote access VPN connections, see Remote Access VPN Connections.

Windows Azure supports point-to-site connectivity that uses a Secure Socket Tunneling Protocol (SSTP)–based remote access VPN client connection. This VPN client connection uses the native Windows VPN client. When the connection is established, the VPN client can access any of the virtual machines over the network connection. Administrators can then connect to the virtual machines through any administrative web interfaces hosted on them, or by establishing a Remote Desktop Protocol (RDP) connection to them. This enables hybrid cloud infrastructure administrators to manage the virtual machines at the machine level without opening publicly accessible RDP ports to the virtual machines.

To authenticate VPN clients, certificates must be created and exported. If you have a PKI, you can use an X.509 certificate issued by your certification authority (CA). If you don’t have a PKI, you must generate a self-signed root certificate and client certificates chained to that root certificate. You then install a client certificate, including its private key, on every client computer that requires connectivity.

For more information on point-to-site connections to Windows Azure Virtual Networks, see About Secure Cross-Premises Connectivity.

The following table lists the advantages and disadvantages of each of the approaches that are discussed in this section.

| Connectivity options | Advantages | Disadvantages |
|---|---|---|
| Site-to-site VPN | | |
| Dedicated WAN Link | | |
| Point-to-site connection (remote access VPN client connection) | | |

5.3.2 Inbound Connectivity to the Public Cloud Infrastructure Service Network

Inbound connectivity to the public cloud infrastructure provider’s network is about how users will connect to the services that are hosted by the virtual machines within the provider’s network. Important options to consider include:

- All access to services that are hosted on the public cloud infrastructure provider’s network will be done over the Internet.

- All access to services that are hosted in the public cloud infrastructure provider’s network will be done over the corporate network and any site-to-site VPN or dedicated WAN link connection that connects the corporate network to the public cloud infrastructure service provider’s network.

- Some access to services that are hosted on the public cloud infrastructure provider’s network will be done over the Internet and some will be done from within the corporate network.

All Access to Cloud Hosted Services is Through the Internet

With the first option, all connections to services in the public cloud infrastructure service provider’s network are made over the Internet, whether the client system is inside or outside the corporate network. With this configuration, you need to maintain only a single DNS entry for inbound access to the service, because all client machines access the same IP address. In Windows Azure, this is the VIP that is assigned to the front ends of the service that is hosted in the Azure Infrastructure Services Virtual Network.

All Access to Cloud Hosted Services is Through Site-to-Site VPN or WAN Link

The second option is the opposite of the first: all clients that need to connect to parts of the service that are hosted in the public cloud infrastructure provider’s network must do so from within the corporate network. The service will not be available to users on the Internet “at large,” and client systems must take a path through the corporate network to reach the services.

That doesn’t mean that the client systems must be physically attached to the corporate network (or attached through the corporate wireless network). A client system could be off-site but connected to the corporate network over a remote access VPN client connection or a similar technology, such as Windows DirectAccess. The DNS configuration in this case requires just a single entry, because all access to the resources in the public cloud infrastructure service provider’s network goes to the IP address that is assigned to the virtual machine in that network. In an Azure Virtual Network, this is the DIP that is assigned to the front-end virtual machines of the service.

For more information on DirectAccess in Windows Server 2012, see Remote Access (DirectAccess, Routing and Remote Access) Overview.

Access to Cloud Hosted Services Varies with Client Location

The third option allows clients that are not connected to the corporate network to connect through the Internet to the service that is hosted in the public cloud infrastructure service provider’s network. Clients that are connected to the corporate network access the service through the site-to-site VPN or dedicated WAN link that connects the corporate network to the public cloud infrastructure service provider’s network.

This option requires a DNS record that client systems use when they are not on the corporate network, which in Azure points to the VIP that is used to access the virtual machine. It also requires a record for the same name that clients use when they are connected to the corporate network, which in Azure points to the DIP that is assigned to the virtual machine. Because one name must resolve to different addresses depending on where the client is, this design requires a split DNS infrastructure.
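The following Python sketch models the decision that a split DNS infrastructure makes for the third option: the same service name resolves to the DIP for clients on the corporate network and to the VIP for everyone else. The host name app.contoso.com, the address prefixes, and the VIP and DIP values are hypothetical examples. Choosing the answer by client source address is a simplifying assumption; in many deployments the internal and external answers come from separate DNS servers instead.

```python
import ipaddress

# Hypothetical corporate client address space (on-site subnets and the
# remote access VPN address pool).
CORPORATE_PREFIXES = [
    ipaddress.ip_network("10.0.0.0/16"),
    ipaddress.ip_network("172.16.0.0/20"),
]

# Hypothetical split-DNS data for one service name. The external view answers
# with the VIP of the cloud service; the internal view answers with the DIP of
# the front-end virtual machine, reached over the site-to-site VPN or WAN link.
SPLIT_ZONE = {
    "app.contoso.com": {
        "external": "203.0.113.10",
        "internal": "10.20.1.4",
    },
}


def is_corporate(client_ip: str) -> bool:
    """True if the client address falls inside a corporate prefix."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in CORPORATE_PREFIXES)


def resolve(name: str, client_ip: str) -> str:
    """Return the address this split DNS model hands to the client."""
    view = "internal" if is_corporate(client_ip) else "external"
    return SPLIT_ZONE[name][view]


if __name__ == "__main__":
    print(resolve("app.contoso.com", "10.0.5.9"))      # corporate client: DIP
    print(resolve("app.contoso.com", "198.51.100.7"))  # Internet client: VIP
```

A client whose address falls in a corporate prefix, including a remote access VPN pool, receives the DIP and reaches the service over the site-to-site VPN or WAN link; any other client receives the VIP.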

For more information on a split DNS infrastructure, see You Need A Split DNS!

The following table describes some of the advantages and disadvantages of the three options for inbound connectivity.

| Inbound connectivity option | Advantages | Disadvantages |
|---|---|---|
| All inbound access is done over the Internet. | | |
| All inbound access is done through the corporate network. | | |
| Some inbound access is over the Internet and some is over the corporate network. | | |

5.3.3 Load Balancing of Inbound Connections to Virtual Machines of a Public Infrastructure Service

Services that you place in the public cloud infrastructure service provider’s network may need to be load balanced to support the performance and availability characteristics that you require for a hybrid application running on a hybrid cloud infrastructure. There are several ways you can enable load balancing of connections to services that are hosted on the public cloud infrastructure service provider’s network. These include:

- Use a load balancing mechanism that is provided by the public cloud infrastructure service provider that’s integrated with the service provider’s fabric management system.

- Use some form of network load balancing that is enabled by the operating systems, or use an add-on product that runs on the virtual machines themselves.

- Use an external network load balancer to perform load balancing of the incoming connections to the service components that are hosted in the public cloud infrastructure provider’s network.

Load Balancing Mechanism Provided by the Public Cloud Infrastructure Service Provider

The first option requires that the service provider has a built-in load balancing capability that is included with its service offering. In Windows Azure, external communication with virtual machines can occur through endpoints. These endpoints are used for various purposes, such as load-balanced traffic or direct virtual machine connectivity, like RDP or SSH.

Windows Azure provides round-robin load balancing of network traffic to publicly defined ports of a cloud service that is represented by these endpoints. For virtual machines, you can set up load balancing by creating new virtual machines, connecting them under a cloud service, and then adding load-balanced endpoints to the virtual machines.
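To make the round-robin behavior concrete, the following Python sketch models one load-balanced endpoint: clients connect to a public port on the cloud service VIP, and each new connection is handed to the next virtual machine in rotation. The class name, ports, and DIPs are hypothetical. This is a toy model of the behavior described above, not a reproduction of how the Windows Azure load balancer is implemented.

```python
from itertools import cycle
from typing import Iterator, List, Tuple


class RoundRobinEndpoint:
    """Toy model of a load-balanced endpoint: one public port on the cloud
    service VIP, with new connections handed to member VMs in rotation."""

    def __init__(self, public_port: int, private_port: int, vm_dips: List[str]) -> None:
        if not vm_dips:
            raise ValueError("a load-balanced endpoint needs at least one VM")
        self.public_port = public_port    # port clients connect to on the VIP
        self.private_port = private_port  # port the service listens on in each VM
        self._rotation: Iterator[str] = cycle(vm_dips)

    def route(self) -> Tuple[str, int]:
        """Pick the (DIP, private port) that receives the next new connection."""
        return next(self._rotation), self.private_port


if __name__ == "__main__":
    web = RoundRobinEndpoint(public_port=80, private_port=8080,
                             vm_dips=["10.20.1.4", "10.20.1.5", "10.20.1.6"])
    for _ in range(4):
        print(web.route())
```

With three virtual machines behind the endpoint, the fourth connection wraps around to the first virtual machine.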

For more information on load balancing for virtual machines in Windows Azure, see Load Balancing Virtual Machines.

Load Balancing Enabled on the Virtual Machines

The second option requires that the virtual machines in the public cloud infrastructure service provider’s network run a software-based load-balancing system, either one built into the operating system or an add-on product.

For example, Windows Server 2012 includes the Network Load Balancing feature, which can be installed on any virtual machine that runs that operating system. Other load-balancing applications can also be installed on virtual machines. The service provider must be able to support these guest-based load-balancing techniques, because they often change the characteristics of the MAC address that is exposed to the network. At the time this document was written, Azure Virtual Networks did not support this type of load balancing.
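To see why guest-based load balancing depends on provider support, consider the MAC addresses that Windows Network Load Balancing uses. As we understand NLB’s documented behavior, the cluster MAC address is derived from the cluster IP address: 02-BF followed by the four octets of the IP address in unicast mode, and 03-BF followed by the same octets in multicast mode. In unicast mode every host in the cluster presents this same MAC address, which a provider’s virtual network may reject if it expects each virtual machine to use only the MAC address the fabric assigned to it. The sketch below computes these values; the function name and the example cluster IP are hypothetical.

```python
import ipaddress

# Leading octets that (per our reading of NLB's behavior) precede the
# cluster IP octets in the cluster MAC address for each operating mode.
_NLB_PREFIX = {"unicast": (0x02, 0xBF), "multicast": (0x03, 0xBF)}


def nlb_cluster_mac(cluster_ip: str, mode: str = "unicast") -> str:
    """Return the cluster MAC address NLB hosts present for cluster_ip."""
    if mode not in _NLB_PREFIX:
        raise ValueError(f"unsupported NLB mode: {mode!r}")
    ip_octets = tuple(ipaddress.IPv4Address(cluster_ip).packed)
    return "-".join(f"{b:02X}" for b in _NLB_PREFIX[mode] + ip_octets)


if __name__ == "__main__":
    print(nlb_cluster_mac("10.20.1.100"))               # 02-BF-0A-14-01-64
    print(nlb_cluster_mac("10.20.1.100", "multicast"))  # 03-BF-0A-14-01-64
```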

For more information about Windows Server Network Load Balancing, see Network Load Balancing Overview.

Use an External Network Load Balancer

The third option is relatively specialized, because it requires that you control the path between the clients of the service that is hosted in the public cloud infrastructure service provider’s network and the destination virtual machines. Client traffic must pass through the dedicated hardware load balancer so that the load balancer can distribute the connections among the virtual machines.

This option is unlikely to be available on the public cloud infrastructure service provider’s side, because public providers generally do not allow you to place your own equipment on their network. It can work if you host an application in the service provider’s network that is accessible only to clients on the corporate network. Because you control the path that internal clients use to reach the service, you can easily put a load balancer in that path.

For more information on external load balancers, see Load Balancing (computing).

The following table describes the advantages and disadvantages of each of these three approaches.

| Load-balancing mechanism | Advantages | Disadvantages |
|---|---|---|
| Public cloud infrastructure service provider load balancing solution | | |
| OS-based or add-on load-balancing solution | | |
| External load-balancing solution | | |

5.3.4 Name Resolution for the Public Infrastructure Service Network

Name resolution is a critical activity for any application in a hybrid cloud infrastructure. Applications that span on-premises components and those in the public cloud infrastructure provider’s network must be able to resolve names on both sides in order for all tiers of the application to work easily with one another.

There are several options for name resolution in a hybrid cloud infrastructure:

- Name resolution support that is provided by the public cloud infrastructure service provider

- Name resolution support that is based on your on-premises DNS infrastructure

- Name resolution support that is based on external DNS infrastructure

Name Resolution Services Provided by the Public Cloud Infrastructure Service Provider

The public cloud infrastructure service provider may provide some type of DNS services as part of its service offering, and the nature of those services will vary. For example, Azure Virtual Networks provide basic DNS services for name resolution of virtual machines that are part of the same cloud service. Be aware that this is not the same as virtual machines that are on the same Azure Virtual Network: if two virtual machines are on the same Azure Virtual Network but are not part of the same cloud service, they cannot resolve each other’s names by using the Azure Virtual Network DNS service.
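Because this scoping rule is easy to get wrong, the following Python sketch models it: a lookup through the provider-supplied DNS succeeds only when the target virtual machine is in the same cloud service as the virtual machine that is asking, regardless of whether the two share a virtual network. The class, function, virtual machine names, and addresses are hypothetical; the sketch models the rule as described in this section rather than calling any Windows Azure API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class VirtualMachine:
    name: str
    cloud_service: str
    virtual_network: str  # deliberately ignored by the lookup below
    dip: str


def provider_dns_lookup(asker: VirtualMachine, name: str,
                        vms: List[VirtualMachine]) -> Optional[str]:
    """Model of provider-supplied DNS: a name resolves only if the target VM
    is in the asker's cloud service. Sharing a virtual network is not enough."""
    for vm in vms:
        if vm.name == name and vm.cloud_service == asker.cloud_service:
            return vm.dip
    return None


if __name__ == "__main__":
    web1 = VirtualMachine("web1", "frontend-svc", "corp-vnet", "10.20.1.4")
    web2 = VirtualMachine("web2", "frontend-svc", "corp-vnet", "10.20.1.5")
    sql1 = VirtualMachine("sql1", "backend-svc", "corp-vnet", "10.20.2.4")
    fleet = [web1, web2, sql1]
    print(provider_dns_lookup(web1, "web2", fleet))  # 10.20.1.5
    print(provider_dns_lookup(web1, "sql1", fleet))  # None: different cloud service
```

Here web1 and sql1 share corp-vnet, yet web1 cannot resolve sql1, which is the gap that on-premises or other corporate DNS infrastructure is typically used to close.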

For more information on Azure Virtual Network DNS services, see Windows Azure Name Resolution.

Name Resolution Services Based on On-Premises DNS Infrastructure

The second option is the one you’ll typically use in a hybrid cloud infrastructure where applications span on-premises networks and cloud infrastructure service provider’s networks. You can configure the virtual machines in the service provider’s network to use DNS servers that are located on premises, or you can create virtual machines in the public cloud infrastructure service provider’s network that host corporate DNS services and are part of the corporate DNS replication topology. Either way, name resolution for both on-premises and cloud-based resources is available to all machines that support the hybrid application.

Name Resolution Services External to Cloud and On-Premises Systems

The third option is less typical, as it would be used when there is no direct link, such as a site-to-site VPN or dedicated WAN link, between the corporate network and the public cloud infrastructure services network. However, in this scenario you still want to enable some components of the hybrid application to live in the public cloud and yet keep some components on premises. Communications between the public cloud infrastructure service provider’s components and those on premises can be done over the Internet. If on-premises components need to initiate connections to the off-premises components, they must use Internet host name resolution to reach those components. Likewise, if components in the public cloud infrastructure service provider’s network need to initiate connections to those that are located on premises, they would need to do so over the Internet by using a public IP address that can forward the connections to the components on the on-premises network. This means that you would need to publish the on-premises components to the Internet, although you could create access controls that limit the incoming connections to only those virtual machines that are located in the public cloud infrastructure services network.
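The access controls mentioned above amount to an allow list on the published on-premises endpoint. The following Python sketch admits a connection only if its source address belongs to the cloud-hosted components. It assumes that outbound traffic from those virtual machines leaves the provider’s network from known, stable public addresses (for example, the VIP of their cloud service); verify how your provider translates outbound traffic before relying on this. The address ranges and the function name are hypothetical.

```python
import ipaddress
from typing import Iterable

# Hypothetical public source addresses of the cloud-hosted components.
# Assumption: outbound connections from those VMs always use these addresses.
CLOUD_SOURCES = [
    ipaddress.ip_network("203.0.113.10/32"),
    ipaddress.ip_network("203.0.113.16/29"),
]


def admit(source_ip: str, allowed: Iterable = CLOUD_SOURCES) -> bool:
    """Return True if a connection from source_ip may reach the published
    on-premises component; any other Internet source is refused."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in net for net in allowed)


if __name__ == "__main__":
    for src in ["203.0.113.10", "203.0.113.20", "198.51.100.7"]:
        print(src, "admitted" if admit(src) else "refused")
```

In practice the same rule would live in the firewall or publishing device in front of the on-premises component rather than in application code.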

The following table describes some advantages and disadvantages of each of these approaches.

| Name resolution approach | Advantages | Disadvantages |
|---|---|---|
| Public cloud infrastructure service provider supplies DNS. | | |