Walkthrough of Azure Sync Service Sample

Overview

This document walks you through the Azure Sync Service sample, available on Code Gallery. For instructions on how to build, run, and deploy the sample, see the Installation Guide contained in the download. This walkthrough will make a lot more sense to you if you download the sample first and look at it as you read.

The Azure Sync Service sample shows you how to extend the reach of your data to anyone who has an Internet connection. The sample uses Microsoft Sync Framework deployed to an Azure Hosted Service. Because the data a user needs is kept on the user's local computer and synchronized with the server database only when needed, your data reaches even users who have a poor or intermittent connection.

Your Scenario

The typical scenario for this sample is that you have data stored in a SQL Database and want to give remote users access to it without allowing them to connect directly to the database. You create a middle tier that runs as an Azure Hosted Service: client applications connect to your hosted service, and the service connects to your SQL Database, providing a layer of security and business logic between the client and the server. Client applications can create a synchronization relationship with your server database, take their data offline, and synchronize changes back to the server database when a connection is available. In this way, client applications operate seamlessly even when the Internet connection is unreliable.

Why Deploy to Azure Hosted Service?

Deploying Sync Framework in a middle tier component as an Azure Hosted Service gives the following benefits:

- Security: No need to allow users direct access to your SQL Database. You control the middle tier, so you control exactly what flows in and out of your database.

- Business rules: The middle tier allows you to modify the data that flows between the server database and the client, enforcing any rules that are peculiar to your business.

- Ease of connection: The hosted service in this sample uses Windows Communication Foundation (WCF) to communicate between the client and the middle tier component, allowing a flexible and standard way of communicating commands and data.

How the Sample Works

The sample is a 3-tier application that contains four major components:

- SQL Database: the database running in the server tier.

- SyncServiceWorkerRole: an Azure worker role that runs in the middle tier. This component handles the majority of synchronization operations and communicates with the web role component by using a job queue and blob storage.

- WCFSyncServiceWebRole: an Azure web role that runs in the middle tier combined with a proxy provider that runs in the client application. This component uses WCF to communicate between the proxy provider on the client and the web role on Azure and communicates with the worker role component by using a job queue and blob storage.

- ClientUI: a Windows application that you use to establish a synchronization relationship between a SQL Server Compact database and the SQL Database. This component uses WCF to communicate with the web role component.

In a nutshell, you use the ClientUI application to identify a SQL Server Compact database that you'll synchronize with your SQL Database. When you start synchronization, ClientUI uses a proxy provider to make WCF calls to the WCFSyncServiceWebRole component, which retrieves items to synchronize from the SQL Database to the SQL Server Compact database.

The WCFSyncServiceWebRole component puts jobs in a queue in an Azure Storage Account. The SyncServiceWorkerRole component pulls jobs off the queue and takes action, such as enumerating the changes to be synchronized. It puts item data and metadata in blob storage in an Azure Storage Account and marks the status of the blob as complete when it is done with its task.

Meanwhile, the proxy provider polls the web role to check the status of the blob. When WCFSyncServiceWebRole finds that the status of the blob is complete, it pulls items out of blob storage and uses WCF to send them to the proxy provider in ClientUI, where they are applied to the SQL Server Compact database. Alternatively, the process can be done in the reverse direction, with the client and server roles reversed.
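The queue-and-blob handoff described above can be sketched without any Azure dependency. The following is a minimal in-memory simulation, not code from the sample: a Queue<string> stands in for the job queue, a dictionary of dictionaries stands in for blob containers and their metadata, and the names FlowSketch, WebRoleSubmit, WorkerRoleProcessNext, and ClientPoll are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// In-memory stand-ins for the Azure job queue and blob storage (assumptions for illustration).
public static class FlowSketch
{
    static readonly Queue<string> JobQueue = new Queue<string>();
    static readonly Dictionary<string, Dictionary<string, string>> Containers =
        new Dictionary<string, Dictionary<string, string>>();

    // Web role: record the job type in the container metadata, then enqueue the job ID.
    public static void WebRoleSubmit(string jobId, string jobType)
    {
        Containers[jobId] = new Dictionary<string, string>
        {
            { "jobtype", jobType },
            { "status", "InQueue" }
        };
        JobQueue.Enqueue(jobId);
    }

    // Worker role: take one job off the queue, act on its type, and mark it complete.
    // Returns false when the queue is empty.
    public static bool WorkerRoleProcessNext()
    {
        if (JobQueue.Count == 0) return false;
        string jobId = JobQueue.Dequeue();
        Dictionary<string, string> container = Containers[jobId];
        container["result"] = container["jobtype"] == "upload"
            ? "changes applied to server"
            : "changes enumerated for client";
        container["status"] = "Complete";
        return true;
    }

    // Client proxy: ask the web role for the current job status.
    public static string ClientPoll(string jobId)
    {
        return Containers[jobId]["status"];
    }

    public static void Main()
    {
        WebRoleSubmit("job-1", "download");
        Console.WriteLine(ClientPoll("job-1"));   // status before the worker runs
        WorkerRoleProcessNext();
        Console.WriteLine(ClientPoll("job-1"));   // status after the worker runs
    }
}
```

The point of the design is that the web role and worker role never call each other directly: the only things they share are the job ID in the queue and the container that the ID names.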

What the Components Do

Let's take a closer look at each component and at some key parts of the code in order to understand how this sample is put together.

WindowsAzureSyncService and Configuration

This is the Azure Cloud Service project that Visual Studio uses to configure and package the web and worker roles for deployment to Azure. This project contains the two roles for the deployment: WCFSyncServiceWebRole and SyncServiceWorkerRole. You can configure settings for these two roles by double-clicking them in the Solution Explorer. Changes made to the configuration settings in the UI are reflected in the ServiceConfiguration.cscfg file.

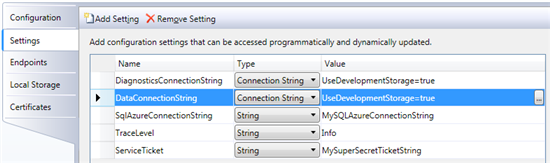

The configuration settings for WCFSyncServiceWebRole look like this in Visual Studio 2008:

DiagnosticsConnectionString and DataConnectionString define the connection to an Azure Storage Account for diagnostics and data. SqlAzureConnectionString defines the connection to the SQL Database server database. TraceLevel defines the level of tracing output. ServiceTicket provides minimal security for the sample: it is a string chosen by you that is exchanged between the client and the hosted service before a synchronization session can be started on the hosted service.

To deploy these roles to Azure, you first create a deployment package for the service by right-clicking on the WindowsAzureSyncService project in the Solution Explorer and selecting Publish…. This creates .cscfg and .cspkg files that you deploy to Azure.

Other important configuration for the roles is contained in the SyncServiceWorkerRole and WCFSyncServiceWebRole projects: the Sync Framework assemblies and synchronization.assembly.manifest files in the synchronization.assembly folders, the webapp.manifest files, and the CreateActivationContext method in CommonUtil.cs of the CommonUtil project. For more information on what these are used for, see How to: Deploy Sync Framework to Windows Azure in the Sync Framework documentation.

SyncServiceWorkerRole

This component runs in an Azure Hosted Service as a worker role. Its purpose is to pull jobs off a job queue and take action based on the type of job it finds in the queue. A job in the queue is simply the ID of a blob stored in blob storage, and the blob defines its associated job type. The locations of the queue and blob storage are defined by DataConnectionString in the configuration file for the worker role. The worker role operates independently of the web role, so multiple web role instances can be serviced by the same worker role.

The worker role can operate in either batched or unbatched mode. The mode is determined by the client and is indicated in the blob. Batched mode means that synchronization data is broken up and sent in batches of a specified maximum size. To simplify this walkthrough, batching is not discussed.

This component contains one class, SyncServiceWorkerRole, defined in WorkerRole.cs.

The following code sample from the Run method shows how the worker role pulls a job message off of the queue, uses the job ID contained in the message to open the associated blob, and examines the blob metadata to take action based on the job type. A new thread is started for each new job.

    if ((job = jobQueue.GetMessage(TimeSpan.FromMinutes(20))) != null)
    {
        CommonUtil.SyncTrace(TraceCategory.Verbose,
            "Job:{0} pulled off queue by worker role", job.AsString);
        CloudBlobContainer blobContainer = CommonUtil.GetBlobContainer(job.AsString);

        // Choose the thread entry point from the job type stored in the blob metadata.
        Thread workerThread;
        if (blobContainer.Metadata["jobtype"] == "upload")
        {
            workerThread = new Thread(new ParameterizedThreadStart(UploadSync));
        }
        else
        {
            workerThread = new Thread(new ParameterizedThreadStart(DownloadSync));
        }

        lock (_jobCounterGate)
        {
            _currentJobs++;
        }
        workerThread.Start(job);
    }

Depending on the job type, the new thread starts in either the DownloadSync or UploadSync method.

DownloadSync method

DownloadSync first takes the job off of the queue so that no other threads are started for it:

    CloudQueueMessage job = (CloudQueueMessage)jobRef;
    jobId = job.AsString;
    jobQueue = CommonUtil.GetJobQueue();
    jobQueue.DeleteMessage(job);

After taking care of some other initialization, DownloadSync gets the SQL Database connection string out of the configuration file, creates and initializes a SqlSyncProvider object to represent it for synchronization, and begins a session:

    conn = new System.Data.SqlClient.SqlConnection(
        RoleEnvironment.GetConfigurationSettingValue("SqlAzureConnectionString"));
    provider = new SqlSyncProvider(blobContainer.Metadata["scope"], conn);
    Directory.CreateDirectory(localBatchingFolder);
    provider.BatchingDirectory = localBatchingFolder;
    provider.MemoryDataCacheSize = CommonUtil.MaxServiceMemoryCacheSizeInKb;
    provider.BeginSession(Microsoft.Synchronization.SyncProviderPosition.Remote, null);

DownloadSync then retrieves the client's SyncKnowledge from blob storage, where WCFSyncServiceWebRole placed it, and uses it to get a ChangeBatch object that contains the changes from the SQL Database that are not contained in the client database. A BinaryFormatter object is used to serialize the ChangeBatch object and its associated change data to a MemoryStream, which is used to upload the change information to blob storage. The change data will be retrieved later by WCFSyncServiceWebRole and sent to the client application.

    SyncKnowledge knowledge = SyncKnowledge.Deserialize(provider.IdFormats,
        blobContainer.GetBlobReference("destinationKnowledge").DownloadByteArray());
    uint batchSize = Convert.ToUInt32(blobContainer.Metadata["batchsize"]);
    BinaryFormatter bf = new BinaryFormatter();
    object changeData = null;
    ChangeBatch cb = provider.GetChangeBatch(batchSize, knowledge, out changeData);
    blobContainer.Metadata.Add("isbatched",
        ((DbSyncContext)changeData).IsDataBatched.ToString());
    blobContainer.Metadata.Add("batchesready", "false");

    CloudBlob changeBatchblob = blobContainer.GetBlobReference("changebatch");
    using (MemoryStream ms = new MemoryStream())
    {
        bf.Serialize(ms, cb);
        changeBatchblob.UploadByteArray(ms.ToArray());
    }

    CloudBlob changeDataBlob = blobContainer.GetBlobReference("changedata");
    using (MemoryStream ms = new MemoryStream())
    {
        bf.Serialize(ms, changeData);
        changeDataBlob.UploadByteArray(ms.ToArray());
    }

DownloadSync then performs some cleanup and exits the thread.

UploadSync method

UploadSync is very similar, though it takes change data sent from the client and applies the changes to the SQL Database. To do this, it pulls change data out of blob storage:

    CloudBlob blob = blobContainer.GetBlobReference("changedata");
    DbSyncContext changeData;
    using (MemoryStream ms = new MemoryStream(blob.DownloadByteArray()))
    {
        BinaryFormatter bf = new BinaryFormatter();
        changeData = (DbSyncContext)bf.Deserialize(ms);
    }

It then creates a SqlSyncProvider object to handle synchronization:

    conn = new System.Data.SqlClient.SqlConnection(
        RoleEnvironment.GetConfigurationSettingValue("SqlAzureConnectionString"));
    provider = new SqlSyncProvider(blobContainer.Metadata["scope"], conn);

Finally, it uses the SqlSyncProvider object to process the change batch:

    ChangeBatch cb = new ChangeBatch(provider.IdFormats, new SyncKnowledge(),
        new ForgottenKnowledge());
    cb.SetLastBatch();
    provider.ProcessChangeBatch(ConflictResolutionPolicy.ApplicationDefined, cb,
        changeData, new SyncCallbacks(), syncStats);

Important: Be careful not to leave the worker role running in your Azure account. Because it polls once per second for jobs stored in the queue, it can unintentionally add compute hours to your Azure account if left running for long periods of time. In a production environment, you may want to implement a backoff mechanism so that the polling loop polls less frequently during slow periods.
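One possible shape for such a backoff mechanism is sketched here. It is an illustration only and is not part of the sample: the PollingBackoff class, its parameters, and the 1-second and 30-second bounds are assumptions. The delay doubles after every poll that finds no job, up to a ceiling, and drops back to the minimum as soon as a job is found.

```csharp
using System;

// Hypothetical helper: computes how long a polling loop should sleep before its next poll.
public sealed class PollingBackoff
{
    private readonly TimeSpan _min;
    private readonly TimeSpan _max;
    private TimeSpan _current;

    public PollingBackoff(TimeSpan min, TimeSpan max)
    {
        if (min <= TimeSpan.Zero || max < min)
            throw new ArgumentException("Require 0 < min <= max.");
        _min = min;
        _max = max;
        _current = min;
    }

    // Call after each poll. Returns the delay to wait before the next poll.
    public TimeSpan Next(bool jobFound)
    {
        if (jobFound)
        {
            // Busy: return to the fastest polling rate.
            _current = _min;
        }
        else
        {
            // Idle: double the delay, capped at the maximum (written to avoid overflow).
            _current = _current.Ticks > _max.Ticks / 2
                ? _max
                : TimeSpan.FromTicks(_current.Ticks * 2);
        }
        return _current;
    }
}

public static class BackoffDemo
{
    public static void Main()
    {
        PollingBackoff backoff = new PollingBackoff(
            TimeSpan.FromSeconds(1), TimeSpan.FromSeconds(30));
        bool[] polls = { false, false, false, true, false };
        foreach (bool found in polls)
        {
            Console.WriteLine("jobFound={0} -> wait {1}s",
                found, backoff.Next(found).TotalSeconds);
        }
    }
}
```

In a worker role, the value returned by Next would replace the fixed one-second sleep between calls to the job queue. Note that backing off reduces storage transactions and idle work but does not by itself stop a deployed role from accruing compute hours.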

WCFSyncServiceWebRole and RelationalProviderProxy

Most of the WCFSyncServiceWebRole components run in an Azure Hosted Service web role. Their primary function is to use WCF to communicate synchronization commands and data to the client application, and to use the job queue and blob storage to communicate synchronization tasks to the worker role. The RelationalProviderProxy class runs on the client computer and fields calls made by Sync Framework in the client application. It translates these calls into WCF commands and sends them to the hosted service components. Like the worker role, the web role can operate in batched or unbatched mode. To simplify this walkthrough, batching is not discussed.

The ISyncServiceContract interface that is contained in ISyncServiceContract.cs defines the WCF service contract for the web role. This interface defines the data types and commands that the client can use to communicate with the hosted service through WCF.

The AzureSyncService class implements the ISyncServiceContract interface and runs in the hosted service. When a WCF command is sent by the client, this class does the work of processing the command and returning any required data.

Important: The GetScopeDescription and BeginSession methods control access by checking a ticket string sent by the client against the ServiceTicket setting of the web role configuration. This provides a minimal level of security to help prevent unauthorized access. Because the ticket string is sent in clear text, this is not an appropriate level of security for a production environment. See Security Tips for ways to modify the sample to make it more secure.

The RelationalProviderProxy class implements KnowledgeSyncProvider and runs in the client application as the remote provider synchronized in a session. RelationalProviderProxy is tightly coupled with AzureSyncService because Sync Framework makes synchronization calls to RelationalProviderProxy and RelationalProviderProxy passes these calls on to AzureSyncService.

RelationalProviderProxy.BeginSession and AzureSyncService.BeginSession methods

The RelationalProviderProxy.BeginSession method is called by Sync Framework to start a synchronization session. It does little more than pass the call on to AzureSyncService.BeginSession.

The first thing AzureSyncService.BeginSession does is generate a job ID for the session. The job ID is passed through the job queue to the worker role and is used to identify which blobs in blob storage are associated with this session:

    SessionIDManager sm = new SessionIDManager();
    string jobId = sm.CreateSessionID(System.Web.HttpContext.Current);

AzureSyncService.BeginSession then creates a blob container and adds the scope name and an initial status to its metadata:

    CloudBlobContainer blobContainer = CommonUtil.CreateBlobContainer(jobId);
    blobContainer.Metadata.Add("scope", scopeName);
    blobContainer.Metadata.Add("status",
        Enum.Format(typeof(SyncJobStatus), SyncJobStatus.Created, "g"));
    blobContainer.SetMetadata();
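The status value is stored as a string because blob metadata holds only strings. The following sketch shows what the Enum.Format call with the "g" format produces and how the stored string could be turned back into the enumeration. The SyncJobStatus declaration here is a stand-in that lists only the members named in this walkthrough, and the StatusMetadataSketch class and its methods are hypothetical.

```csharp
using System;

// Stand-in for the sample's enumeration; only members named in the walkthrough are listed.
public enum SyncJobStatus { Created, InQueue, Complete }

public static class StatusMetadataSketch
{
    // Same formatting call the listing uses: the "g" format yields the member name.
    public static string ToMetadata(SyncJobStatus status)
    {
        return Enum.Format(typeof(SyncJobStatus), status, "g");
    }

    // Reverse direction: parse the stored string back into the enumeration.
    public static SyncJobStatus FromMetadata(string value)
    {
        return (SyncJobStatus)Enum.Parse(typeof(SyncJobStatus), value);
    }

    public static void Main()
    {
        string stored = ToMetadata(SyncJobStatus.InQueue);
        Console.WriteLine(stored);                                          // prints "InQueue"
        Console.WriteLine(FromMetadata(stored) == SyncJobStatus.InQueue);   // prints "True"
    }
}
```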

RelationalProviderProxy.GetChangeBatch, AzureSyncService.PrepareBatches, and AzureSyncService.GetBatchInfo methods

These methods are used for a download synchronization to retrieve changes from the SQL Database and apply them to the SQL Server Compact database.

RelationalProviderProxy.GetChangeBatch is called by Sync Framework and starts by calling AzureSyncService.PrepareBatches:

    proxy.PrepareBatches(this._jobId, batchSize, destinationKnowledge);

AzureSyncService.PrepareBatches updates the metadata of the blob container for this job to indicate that it is for download synchronization, updates the blob that contains the client knowledge, and puts a message in the job queue:

    CloudBlobContainer blobContainer = CommonUtil.GetBlobContainer(jobId);
    CloudQueue jobQueue = CommonUtil.GetJobQueue();
    blobContainer.Metadata.Add("jobtype", "download");
    blobContainer.Metadata.Add("batchsize", batchSize.ToString());
    blobContainer.SetMetadata();

    CloudBlob blob = blobContainer.GetBlobReference("destinationKnowledge");
    blob.UploadByteArray(destinationKnowledge.Serialize());

    jobQueue.AddMessage(new CloudQueueMessage(jobId));
    blobContainer.Metadata["status"] =
        Enum.Format(typeof(SyncJobStatus), SyncJobStatus.InQueue, "g");
    blobContainer.SetMetadata();

The worker role will then take the message off the queue, find the blob container that is marked for download, fill the blob container with any changes to download, and mark the job as complete.

Meanwhile, RelationalProviderProxy.GetChangeBatch polls the web role until the job status is complete, at which point it calls the GetBatchInfo method:

    PollServiceForCompletion();

    if (_syncJobStatus == SyncJobStatus.Complete)
    {
        _changeParams = proxy.GetBatchInfo(_jobId);
    }
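The body of PollServiceForCompletion is not shown in this walkthrough. As a rough idea of what client-side polling of this kind involves, the sketch below repeatedly invokes a status check until it reports completion or a timeout elapses. Everything in it is an assumption made for illustration: the PollingSketch class, the PollUntilComplete method, and the delegate that stands in for the WCF status call.

```csharp
using System;
using System.Diagnostics;
using System.Threading;

public static class PollingSketch
{
    // Calls isComplete every interval until it returns true or the timeout would be exceeded.
    // Returns true if completion was observed, false if the timeout was reached first.
    public static bool PollUntilComplete(Func<bool> isComplete, TimeSpan interval, TimeSpan timeout)
    {
        Stopwatch clock = Stopwatch.StartNew();
        while (true)
        {
            if (isComplete()) return true;
            if (clock.Elapsed + interval > timeout) return false;
            Thread.Sleep(interval);
        }
    }

    public static void Main()
    {
        int calls = 0;
        // Stand-in for a WCF status call: reports completion on the third poll.
        bool done = PollUntilComplete(() => ++calls >= 3,
            TimeSpan.FromMilliseconds(10), TimeSpan.FromSeconds(5));
        Console.WriteLine("completed={0} after {1} polls", done, calls);
    }
}
```

A timeout matters here because the client depends on the worker role to finish the job. Without one, a stalled worker would leave the client polling indefinitely.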

AzureSyncService.GetBatchInfo retrieves the change batch and change data from the blob container identified by the job ID and packages it in a GetChangesParameters object, which is then returned to the client. If batching is not being used, the simplified code looks like this:

    CloudBlobContainer blobContainer = CommonUtil.GetBlobContainer(jobId);
    if (blobContainer.Metadata["batchesready"] == "true")
    {
        BinaryFormatter bf = new BinaryFormatter();
        GetChangesParameters returnValue = new GetChangesParameters();
        if (DoesBlobExist(blobContainer, "changebatch") &&
            DoesBlobExist(blobContainer, "changedata"))
        {
            CloudBlob changeBatchBlob = blobContainer.GetBlobReference("changebatch");
            using (MemoryStream ms = new MemoryStream(
                changeBatchBlob.DownloadByteArray()))
            {
                returnValue.ChangeBatch = (ChangeBatch)(bf.Deserialize(ms));
            }

            CloudBlob changeDataBlob = blobContainer.GetBlobReference("changedata");
            using (MemoryStream ms = new MemoryStream(
                changeDataBlob.DownloadByteArray()))
            {
                returnValue.DataRetriever = bf.Deserialize(ms);
            }
        }
        return returnValue;
    }

If batching is not being used, RelationalProviderProxy.GetChangeBatch simply returns the ChangeBatch and change data retriever objects to Sync Framework:

    _changeParams.ChangeBatch.SetLastBatch();
    ((DbSyncContext)_changeParams.DataRetriever).IsLastBatch = true;
    changeDataRetriever = _changeParams.DataRetriever;
    return _changeParams.ChangeBatch;

RelationalProviderProxy.ProcessChangeBatch and AzureSyncService.ApplyChanges methods

These methods are used for an upload synchronization to apply changes from the SQL Server Compact database to the SQL Database.

RelationalProviderProxy.ProcessChangeBatch is called by Sync Framework to process changes. When batching is not used, this method calls AzureSyncService.ApplyChanges, polls for completion, and displays statistics:

    DbSyncContext context = changeDataRetriever as DbSyncContext;
    if (sourceChanges.IsLastBatch)
    {
        this.proxy.ApplyChanges(this._jobId, changeDataRetriever);
        SyncJobState jobState = PollServiceForCompletion();

        if (jobState.Statistics != null)
        {
            sessionStatistics.ChangesApplied = jobState.Statistics.ChangesApplied;
            sessionStatistics.ChangesFailed = jobState.Statistics.ChangesFailed;
        }
    }

AzureSyncService.ApplyChanges writes the change data to the blob identified by the job ID, marks it for upload, and puts a message in the job queue:

    CloudBlobContainer blobContainer = CommonUtil.GetBlobContainer(jobId);
    CloudQueue jobQueue = CommonUtil.GetJobQueue();

    CloudBlob blob = blobContainer.GetBlobReference("changedata");
    using (MemoryStream ms = new MemoryStream())
    {
        BinaryFormatter bf = new BinaryFormatter();
        bf.Serialize(ms, changeData);
        blob.UploadByteArray(ms.ToArray());
    }

    blobContainer.Metadata.Add("jobtype", "upload");
    blobContainer.Metadata["jobtype"] = "upload";
    blobContainer.SetMetadata();

    jobQueue.AddMessage(new CloudQueueMessage(jobId));
    blobContainer.Metadata["status"] =
        Enum.Format(typeof(SyncJobStatus), SyncJobStatus.InQueue, "g");
    blobContainer.SetMetadata();

The worker role will then pull the message off the job queue, find the associated blob, apply the changes to the SQL Database, and mark the job as complete.
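The ApplyChanges listing calls Metadata.Add for "jobtype" and then assigns the same key through the indexer. A likely reason, assuming the container's Metadata property is a System.Collections.Specialized.NameValueCollection (an assumption about the storage client library, not something this walkthrough states), is that Add appends a value when the key already exists, whereas the indexer replaces it. The sketch below demonstrates that difference with a plain NameValueCollection. The MetadataSketch class and its method are hypothetical.

```csharp
using System;
using System.Collections.Specialized;

public static class MetadataSketch
{
    // Writes "upload" under a key that already holds "download" and returns the stored result.
    public static string JobTypeAfterSecondWrite(bool useIndexer)
    {
        NameValueCollection metadata = new NameValueCollection();
        metadata.Add("jobtype", "download");      // value left over from an earlier job

        if (useIndexer)
        {
            metadata["jobtype"] = "upload";       // replaces every value stored under the key
        }
        else
        {
            metadata.Add("jobtype", "upload");    // appends a second value under the same key
        }
        return metadata["jobtype"];
    }

    public static void Main()
    {
        Console.WriteLine(JobTypeAfterSecondWrite(false));   // prints "download,upload"
        Console.WriteLine(JobTypeAfterSecondWrite(true));    // prints "upload"
    }
}
```

Under that assumption, a container that was previously used for a download job would end up with a combined value after Add alone, and the worker role's comparison against "upload" would fail. Assigning through the indexer guarantees a single value.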

RelationalProviderProxy.EndSession and AzureSyncService.EndSession methods

After synchronization completes, Sync Framework calls RelationalProviderProxy.EndSession, which passes the call along to AzureSyncService.EndSession.

AzureSyncService.EndSession deletes the blob container for the job. This implementation relies on the client to instigate cleanup. In a production environment, it would be more robust for the service to periodically clean up old jobs, particularly to take care of the case when the client application loses its connection to the server and cannot call EndSession.

    CloudBlobContainer blobContainer = CommonUtil.GetBlobContainer(jobId);
    if (blobContainer != null)
    {
        blobContainer.Delete();
    }
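The service-side cleanup suggested above comes down to finding job containers that have been inactive for too long. The sketch below shows only that selection step, using an in-memory dictionary in place of blob storage. The JobCleanupSketch class, the FindStaleJobs method, the shape of its input, and the one-hour threshold are all assumptions for illustration; a real implementation would list the containers in the storage account and delete the ones selected.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class JobCleanupSketch
{
    // Returns the IDs of jobs whose last activity is older than maxAge.
    // lastActivityUtc maps a job ID to the time its container was last modified (assumed input).
    public static List<string> FindStaleJobs(
        IDictionary<string, DateTime> lastActivityUtc, DateTime nowUtc, TimeSpan maxAge)
    {
        return lastActivityUtc
            .Where(entry => nowUtc - entry.Value > maxAge)
            .Select(entry => entry.Key)
            .OrderBy(id => id, StringComparer.Ordinal)
            .ToList();
    }

    public static void Main()
    {
        DateTime now = new DateTime(2024, 1, 1, 12, 0, 0, DateTimeKind.Utc);
        var jobs = new Dictionary<string, DateTime>
        {
            { "job-a", now.AddHours(-5) },    // abandoned session
            { "job-b", now.AddMinutes(-3) }   // session still in progress
        };

        foreach (string id in FindStaleJobs(jobs, now, TimeSpan.FromHours(1)))
        {
            Console.WriteLine("delete container for " + id);
        }
    }
}
```

The age threshold should comfortably exceed the longest expected synchronization session so that an active job is never deleted while the client is still polling for it.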

ClientUI

Instructions for operating the user interface of the client application can be found in the Installation Guide included with the sample download.

When the main form of the application is displayed, the SynchronizationHelper.ConfigureRelationalProviderProxy method is called. This method provisions the SQL Database, if necessary, and creates a RelationalProviderProxy object to represent the database for synchronization:

    DbSyncScopeDescription scopeDesc = new DbSyncScopeDescription(SyncUtils.ScopeName);
    SqlSyncScopeProvisioning serverConfig = new SqlSyncScopeProvisioning(conn);

    // Determine if this scope already exists on the server and if not go ahead and
    // provision. Note that provisioning of the server is often a design time scenario
    // and not something that would be exposed into a client side app as it requires
    // DDL permissions on the server. However, it is demonstrated here for purposes
    // of completeness.
    if (!serverConfig.ScopeExists(SyncUtils.ScopeName))
    {
        scopeDesc.Tables.Add(SqlSyncDescriptionBuilder.GetDescriptionForTable(
            "orders", conn));
        scopeDesc.Tables.Add(SqlSyncDescriptionBuilder.GetDescriptionForTable(
            "order_details", conn));
        serverConfig.PopulateFromScopeDescription(scopeDesc);
        serverConfig.SetCreateTableDefault(DbSyncCreationOption.Skip);
        serverConfig.Apply();
    }

    RelationalProviderProxy provider = new RelationalProviderProxy(
        SyncUtils.ScopeName, hostName, ConfigurationManager.AppSettings["ticket"]);

When you create a SQL Server Compact database by clicking the Add CE Client button in the main form of the application and filling out the New CE Creation Wizard dialog, the SynchronizationHelper.ConfigureCESyncProvider method is called. This method creates a SqlCeSyncProvider object to represent the database for synchronization, sets the object's scope name and database connection, and registers some event handlers:

    SqlCeSyncProvider provider = new SqlCeSyncProvider();

    // Set the scope name.
    provider.ScopeName = SyncUtils.ScopeName;

    // Set the connection.
    provider.Connection = sqlCeConnection;

    // Register event handlers.
    // 1. Register the BeginSnapshotInitialization event handler. Called when a CE peer
    //    pointing to an uninitialized snapshot database is about to begin initialization.
    provider.BeginSnapshotInitialization += provider_BeginSnapshotInitialization;

    // 2. Register the EndSnapshotInitialization event handler. Called when a CE peer
    //    pointing to an uninitialized snapshot database has been initialized for the
    //    given scope.
    provider.EndSnapshotInitialization += provider_EndSnapshotInitialization;

    // 3. Register the BatchSpooled and BatchApplied events. These are fired when a
    //    provider is either enumerating or applying changes in batches.
    provider.BatchApplied += provider_BatchApplied;
    provider.BatchSpooled += provider_BatchSpooled;

    // That's it. We are done configuring the CE provider.
    return provider;

Make sure you paste the URL of the AzureSyncService.svc service into the Azure Service URL text box and select the proper databases to synchronize in the Source Provider and Destination Provider drop down controls.

When you click the Synchronize button, the SynchronizationHelper.SynchronizeProviders method is called. This method creates a SyncOrchestrator object and configures it to upload changes from the source provider to the destination provider that you specified in the form. Before synchronization is started, the SQL Server Compact database is provisioned, if necessary. SyncOrchestrator.Synchronize is called to synchronize the databases, and statistics are displayed after synchronization completes:

    SyncOrchestrator orchestrator = new SyncOrchestrator();
    orchestrator.LocalProvider = localProvider;
    orchestrator.RemoteProvider = remoteProvider;
    orchestrator.Direction = SyncDirectionOrder.Upload;

    progressForm = new ProgressForm();
    progressForm.Show();

    RelationalProviderProxy azureProviderProxy;

    // Check to see if any provider is a SqlCe provider and if it needs schema.
    if (localProvider is RelationalProviderProxy)
    {
        azureProviderProxy = localProvider as RelationalProviderProxy;
        CheckIfProviderNeedsSchema(remoteProvider as SqlCeSyncProvider,
            azureProviderProxy);
    }
    else
    {
        azureProviderProxy = remoteProvider as RelationalProviderProxy;
        CheckIfProviderNeedsSchema(localProvider as SqlCeSyncProvider,
            azureProviderProxy);
    }

    SyncOperationStatistics stats = null;
    try
    {
        stats = orchestrator.Synchronize();
    }
    catch (Exception)
    {
        if (azureProviderProxy.JobId != null)
        {
            DialogResult result = MessageBox.Show(
                "Sync was interrupted. Attempt to retry?", "Retry Sync",
                MessageBoxButtons.YesNo);
            if (result == DialogResult.Yes)
            {
                progressForm.Close();
                stats = this.SynchronizeProviders(localProvider, remoteProvider);
            }
            else
            {
                azureProviderProxy.JobId = null;
            }
        }
        else
        {
            // Rethrow without resetting the stack trace.
            throw;
        }
    }
    progressForm.ShowStatistics(stats);

Troubleshooting

Tracing and Diagnostics

This sample uses the DiagnosticMonitor class to collect diagnostic and tracing data for the web and worker roles. This class is configured in the OnStart method of both roles:

    DiagnosticMonitorConfiguration diagConfig =
        DiagnosticMonitor.GetDefaultInitialConfiguration();
    diagConfig.Logs.ScheduledTransferPeriod = TimeSpan.FromMinutes(
        CommonUtil.TraceTransferIntervalInMinutes);
    AppDomain.CurrentDomain.UnhandledException +=
        new UnhandledExceptionEventHandler(AppDomain_UnhandledException);
    DiagnosticMonitor.Start("DiagnosticsConnectionString", diagConfig);

The sample also uses the System.Diagnostics.Trace class to write its own traces to the log. These trace commands are wrapped in the CommonUtil.SyncTrace method and are sprinkled in appropriate places throughout the sample, such as this one from AzureSyncService.BeginSession:

    CommonUtil.SyncTrace(TraceCategory.Info,
        "BeginSession - sync session job:{0} started for scope:{1}", jobId, scopeName);

When the web and worker roles run in the Azure Simulation Environment, diagnostic data is written to the output window of Visual Studio.

When the roles are deployed to Azure, diagnostic data is transferred to the specified storage account every 2 minutes. For more information about diagnostics, see Implementing Azure Diagnostics.

You can control the amount of tracing output and the storage account by setting the TraceLevel and DiagnosticsConnectionString values in the Settings tab of the role properties sheet in Visual Studio.

Security Tips

The sample code in this project is provided to illustrate a concept. In order to make the illustration as clear as possible, the sample does not use the safest coding practices. Therefore, the code should not be used in applications or websites without appropriate modifications.

The following list highlights parts of the sample that must be modified to help ensure the security of your data:

- After you configure the sample to use your Azure Storage Account and SQL Database, the ServiceConfiguration.cscfg file contains connection information for your storage account and database, including account keys and passwords. To restrict access to this data, encrypt the configuration file or store it in a restricted folder.

- During a synchronization session, items in the process of being synchronized are stored in blob storage in your storage account. When a synchronization session completes normally, these are cleaned up automatically. However, when the session ends unexpectedly, such as when the connection between the client application and Azure is broken, these temporary items are not cleaned up. To protect sensitive information stored in these items, the blob storage must be periodically cleaned up.

- This sample uses HTTP together with a simple ServiceTicket string sent in clear text to connect to the web role defined by the WCFSyncServiceWebRole project. Because customer information is exchanged between the web role and the client application, this level of security is not appropriate for a production environment. It is recommended to secure the channel by using HTTPS. This is achieved by setting Input endpoints to use HTTPS on the Endpoints tab of the WCFSyncServiceWebRole property sheet. When you deploy to Azure, configure your hosted service to use HTTPS as well. To use an HTTPS endpoint, you'll need to associate it with a certificate. For instructions, see Configuring an HTTPS Endpoint in Azure. You can also use WCF Authentication to provide even more security. For more information, see Windows Communication Foundation Authentication Service Overview.

- The HasUploadedBatchFile, UploadBatchFile, and DownloadBatchFile methods in the AzureSyncService class take a file name as an input parameter, which makes them susceptible to name canonicalization attacks. This mechanism should not be used in a production environment without doing proper security analysis. The following two MSDN whitepapers provide more information on guidelines for securing web services: Design Guidelines for Secure Web Applications and Architecture and Design Review for Security.
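As a starting point for the file name issue in the last item, the following sketch shows one common defense against name canonicalization attacks: accept only a bare file name, then resolve it against a trusted folder and confirm that the resolved path is still inside that folder. It is not part of the sample and is not a substitute for a proper security analysis. The BatchFileNameSketch class, both method names, and the "batches" folder are hypothetical.

```csharp
using System;
using System.IO;

public static class BatchFileNameSketch
{
    // Accepts only a bare file name: no directory parts, no rooted paths, no invalid characters.
    public static bool IsSafeFileName(string fileName)
    {
        if (string.IsNullOrEmpty(fileName)) return false;
        if (fileName == "." || fileName == "..") return false;
        if (fileName.IndexOfAny(Path.GetInvalidFileNameChars()) >= 0) return false;
        // Reject both separators explicitly so the check does not depend on the host OS.
        if (fileName.IndexOf('/') >= 0 || fileName.IndexOf('\\') >= 0) return false;
        return true;
    }

    // Combines a trusted base folder with a validated name and verifies the result stays inside it.
    public static string ResolveInside(string baseFolder, string fileName)
    {
        if (!IsSafeFileName(fileName))
            throw new ArgumentException("Unsafe file name.", "fileName");

        string root = Path.GetFullPath(baseFolder);
        string separator = Path.DirectorySeparatorChar.ToString();
        string prefix = root.EndsWith(separator, StringComparison.Ordinal)
            ? root
            : root + separator;

        string full = Path.GetFullPath(Path.Combine(root, fileName));
        if (!full.StartsWith(prefix, StringComparison.Ordinal))
            throw new ArgumentException("Path escapes the base folder.", "fileName");
        return full;
    }

    public static void Main()
    {
        Console.WriteLine(IsSafeFileName("batch1.dat"));        // prints "True"
        Console.WriteLine(IsSafeFileName("..\\web.config"));    // prints "False"
        Console.WriteLine(ResolveInside("batches", "batch1.dat"));
    }
}
```

Validating the name and then re-checking the canonical path are deliberately redundant: the second check still holds if the first one misses an encoding or platform quirk.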

See Also

- [[Microsoft Sync Framework]]

- [[Windows Azure SQL Database Overview]]

- [[SQL Data Sync Overview]]