Some Observations on Dynamic VHD Performance

With Windows Server 2008 R2, Microsoft made significant improvements to Hyper-V and the file system that improve the performance of dynamically expanding VHDs. Details are available in the Microsoft white paper “Virtual Hard Disk Performance”. While that white paper covers much more than dynamic VHDs, this blog post is limited to that narrower topic.

If the performance is now good enough for your purposes, by all means use dynamically expanding VHDs. However, some basic issues remain, and they stem from the VHD file format itself. This post highlights one shortcoming that is likely to remain for a while: a single I/O from the guest file system may often result in multiple I/Os in the Hyper-V parent partition, or in significantly more bytes of I/O there.

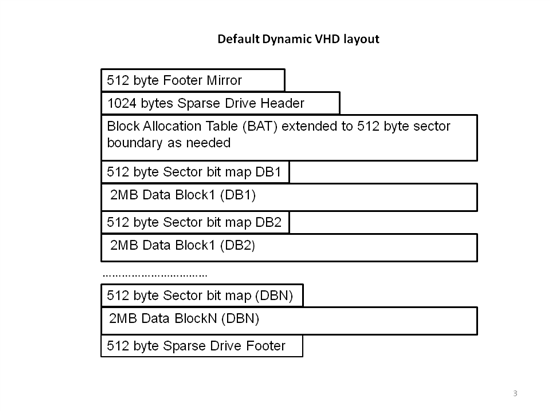

Based on the Microsoft VHD file format specification, the figure above shows the layout of a default dynamic VHD. Note the word “default”: in theory, the specification allows other data block sizes, but the default is 2MB.

The 2MB data blocks marked DB1, DB2, etc. are what contain the NTFS clusters as seen by the guest VM's NTFS file system. The guest file system is unaware of the other structures, including the 512-byte sector bitmaps.
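To make the layout concrete, here is a minimal Python sketch that translates a guest byte offset into an offset within the VHD file. It assumes the Block Allocation Table (BAT) has already been read from the file and converted to integers; per the VHD specification, each BAT entry is the absolute sector offset of a block (which begins with its sector bitmap), and 0xFFFFFFFF marks a block that has not been allocated. The function names and the sample BAT values are hypothetical, chosen only for illustration.

```python
from typing import Optional, Sequence

SECTOR_SIZE = 512
BLOCK_SIZE = 2 * 1024 * 1024      # default dynamic VHD data block size
UNUSED_BAT_ENTRY = 0xFFFFFFFF     # BAT value for a block not yet allocated


def bitmap_size(block_size: int = BLOCK_SIZE) -> int:
    """Bytes taken by the sector bitmap that precedes each data block:
    one bit per 512-byte sector, padded up to a whole 512-byte sector."""
    bits = block_size // SECTOR_SIZE
    bitmap_bytes = (bits + 7) // 8
    return ((bitmap_bytes + SECTOR_SIZE - 1) // SECTOR_SIZE) * SECTOR_SIZE


def guest_to_file_offset(guest_offset: int, bat: Sequence[int],
                         block_size: int = BLOCK_SIZE) -> Optional[int]:
    """Translate a guest byte offset to a byte offset in the VHD file.

    Hypothetical helper: `bat` holds already-decoded BAT entries (sector
    offsets of each block's bitmap). Returns None for an unallocated block.
    """
    block_index, offset_in_block = divmod(guest_offset, block_size)
    entry = bat[block_index]
    if entry == UNUSED_BAT_ENTRY:
        return None
    block_start = entry * SECTOR_SIZE          # sector bitmap starts here
    return block_start + bitmap_size(block_size) + offset_in_block
```

With a hypothetical BAT of `[4, 4101, 0xFFFFFFFF]`, guest offset 0 maps to file offset 2560 (the bitmap at 2048 plus 512 bytes), which is not a multiple of 4096, and any offset in the third block returns `None`. Whether a real file's data is aligned depends entirely on where its blocks were placed.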

Some quick observations on the dynamic VHD file layout:

· Ideally, the 2MB data blocks would be aligned on a 4KB boundary to optimize disk I/O. However, at best only every 8th data block will be aligned. The culprit is the 512-byte sector bitmap that sits between consecutive 2MB data blocks: each block occupies 2MB + 512 bytes in the file, so each successive data block starts another 512 bytes away from 4KB alignment, and alignment recurs only every 4096 / 512 = 8 blocks. Even this assumes that the metadata at the beginning of the file places the first 2MB block on an aligned boundary, which may not always be true.

· The file format can turn a single I/O operation from the guest into multiple I/O operations in the parent. For example, suppose NTFS in the child VM issues a 4KB write. Because of misalignment, the write straddles two 4KB units in the parent, so it can turn into two 4KB reads, a memory copy, and two 4KB writes.

· Even when the number of I/O operations is optimized (say, a single 8KB read and a single 8KB write in the preceding example), more data crosses the PCI bus: a single 4KB write, which would have remained a 4KB write with a fixed VHD, becomes an 8KB read plus an 8KB write, so the bytes written double and an equally large read is added. As VM density grows, PCI bus I/O demand will become the bottleneck sooner or later.

· Add to this the overhead of updating the sector bitmap, which sits at a different file offset from the data being written.

· All of these observations apply to updating existing data within an already allocated data block.

· The Microsoft white paper “Virtual Hard Disk Performance” reports some remarkable performance metrics. However, were these measured with a single VHD and a single Iometer engine, or with multiple VHDs and/or multiple Iometer engines? It appears to the author that Microsoft's efforts to optimize dynamic VHD performance have focused mainly on the case where new data blocks must be allocated in response to a write: the VHD is expanded more aggressively, which avoids repeated expansion of the VHD. This is simply an informed opinion, and not necessarily a correct one. The author further believes (you need to form your own opinion) that when there is spare I/O capacity, dynamic VHDs will perform almost as well as fixed-size VHDs in speed and latency, but that when tens of VMs are all writing to dynamic VHDs, the increased I/O overhead will take a larger toll.
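The alignment point in the first observation can be checked with a short calculation: 2MB is a multiple of 4096, so the 512-byte bitmap in front of each block is what shifts successive data blocks relative to a 4KB boundary. The Python sketch below assumes blocks were allocated back to back in index order (the format does not guarantee this) and lists which data blocks start on a 4KB boundary for a given offset of the first data block; the function names are illustrative.

```python
SECTOR_BITMAP = 512                # bitmap bytes in front of each data block
BLOCK_SIZE = 2 * 1024 * 1024       # default data block size
ALIGNMENT = 4096                   # 4KB boundary


def data_block_offsets(first_data_offset: int, count: int) -> list:
    """File offsets where the data of the first `count` blocks begins,
    assuming blocks are laid out contiguously, each after its bitmap."""
    stride = SECTOR_BITMAP + BLOCK_SIZE    # 2MB of data plus 512 bytes of bitmap
    return [first_data_offset + k * stride for k in range(count)]


def aligned_blocks(first_data_offset: int, count: int) -> list:
    """Indices of the data blocks whose data starts on an ALIGNMENT boundary."""
    offsets = data_block_offsets(first_data_offset, count)
    return [k for k, off in enumerate(offsets) if off % ALIGNMENT == 0]
```

Under the best-case assumption that the first data block is aligned, `aligned_blocks(4096, 24)` returns `[0, 8, 16]`; if the first data block instead starts at offset 2560, it returns `[3, 11, 19]`. Either way, only one block in eight is aligned.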
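The read-modify-write effect described in the second and third observations can be modeled in a few lines. This Python sketch assumes, as the 4KB example does, that the parent must read and write whole 4KB units aligned on 4KB boundaries. The unit size, the `WritePlan` type, and the choice to coalesce into one read and one write spanning all touched units are modeling assumptions, not a description of how the Hyper-V storage stack is actually implemented.

```python
from typing import NamedTuple

UNIT = 4096  # assumed granularity at which the parent reads and writes


class WritePlan(NamedTuple):
    reads: int         # separate unit-sized reads (no coalescing)
    writes: int        # separate unit-sized writes (no coalescing)
    read_bytes: int    # bytes read if the touched span is read in one I/O
    write_bytes: int   # bytes written if the touched span is written in one I/O


def rmw_plan(file_offset: int, length: int, unit: int = UNIT) -> WritePlan:
    """Parent-side I/O for one guest write landing at `file_offset`."""
    first = file_offset // unit
    last = (file_offset + length - 1) // unit
    units = range(first, last + 1)
    end = file_offset + length
    # A unit must be read first unless the write covers it completely.
    partial = [u for u in units if file_offset > u * unit or end < (u + 1) * unit]
    span = len(units) * unit
    return WritePlan(
        reads=len(partial),
        writes=len(units),
        read_bytes=span if partial else 0,
        write_bytes=span,
    )
```

`rmw_plan(4096, 4096)`, an aligned 4KB write as with a fixed VHD, needs no reads and a single 4KB write. `rmw_plan(4608, 4096)`, the same write shifted by one 512-byte bitmap, needs two reads and two writes, or an 8KB read plus an 8KB write when coalesced.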
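The sector bitmap adds its own I/O. In the VHD format, each bit of a block's bitmap records whether the corresponding 512-byte sector holds data, and the bitmap sits at the start of the block rather than next to the data being written. The Python sketch below sets the bits for a write and reports which bitmap bytes changed; in this model, a non-empty result means an extra write of the bitmap sector. The MSB-first bit order within each byte and the function name are assumptions made for illustration.

```python
SECTOR_SIZE = 512


def mark_sectors(bitmap: bytearray, offset_in_block: int, length: int) -> list:
    """Set the bitmap bits for the sectors a write covers and return the
    indices of bitmap bytes that changed.

    Assumption: sector 0 maps to the most significant bit of byte 0.
    """
    first = offset_in_block // SECTOR_SIZE
    last = (offset_in_block + length - 1) // SECTOR_SIZE
    changed = set()
    for sector in range(first, last + 1):
        byte_index, bit = divmod(sector, 8)
        mask = 0x80 >> bit                 # MSB-first (assumed) bit order
        if not bitmap[byte_index] & mask:  # bit not yet set: bitmap is dirtied
            bitmap[byte_index] |= mask
            changed.add(byte_index)
    return sorted(changed)
```

On a fresh 512-byte bitmap, `mark_sectors(bitmap, 4096, 4096)` returns `[1]` the first time and `[]` the second: in this model, the bitmap must be rewritten only when a write covers sectors whose bits are not yet set.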

In conclusion, given the nature of the dynamic VHD file format, the toll of increased I/O, in number of bytes and/or number of operations, remains. This toll may be acceptable for some people and less acceptable for others. Further, it will not be perceptible on a system that has spare I/O capacity.