Windows Azure SQL Database Connection Management

Note

The information in this article is not up-to-date, and the article will be retired soon. For latest information about throttling, worker thread governance, and resource limits in Windows Azure SQL Database, see the Resource Management in Windows Azure SQL Database topic on MSDN.

**Important Note: ** To troubleshoot common connectivity issues with SQL Azure database, check the recently published **Troubleshoot connection problems to Azure SQL Database **guide walk through at http://support.microsoft.com/kb/2980233/en-us

Windows Azure SQL Database provides a large-scale multi-tenant database service on shared resources. In order to provide a good experience to all SQL Database customers, your connection to the service may be closed due to several reasons, like throttling. This article introduces SQL Database and its network topology. Then, it lists the reasons for connection losses and provides guidelines and best coding practices on how to manage the connection life cycle in SQL Database.

Last Reviewed: 8/19/2011

Note

If you wish to contribute to this page, use the Edit tab at the top (sign-in required). If you wish to provide feedback for this documentation please either send e-mail to azuredocs@microsoft.com or use the Comment field at the bottom of this page (sign-in required).

Note: If you are already familiar with this information and are looking for example code for connection retry, please see [[articles: Retry Logic for Transient Failures in Windows Azure SQL Database]].

Introduction

Windows Azure SQL Database is a cloud-based relational database service that is built on SQL Server technologies and runs in Microsoft data centers on hardware that is owned, hosted, and maintained by Microsoft.

SQL Database is built on a scalable platform involving numerous physical servers, and manages all the connections routing between your application and the physical servers where your data resides. Each SQL Database server can be associated with one or more databases. A SQL Database server is a logical group of databases.

SQL Database provides a large-scale multi-tenant database service on shared resources. To enable load balancing and high availability, databases associated with your SQL Database server may reside on separate physical computers in a Microsoft data center. Each physical computer can service many databases. When there is enough capacity, all the sessions can take full advantage of the available resources in SQL Database. However, if resources run low at peak times, SQL Database terminates the sessions using excessive resources to provide a stable system and prevents sessions from monopolizing all the resources till resources become available.

In addition to this, SQL Database employs a built-in load-balancing technology to ensure the optimal usage of the physical servers and services in the data centers. Load balancing in SQL Database is achieved by moving the databases within the physical machines and services based on periodic audit of system usage.

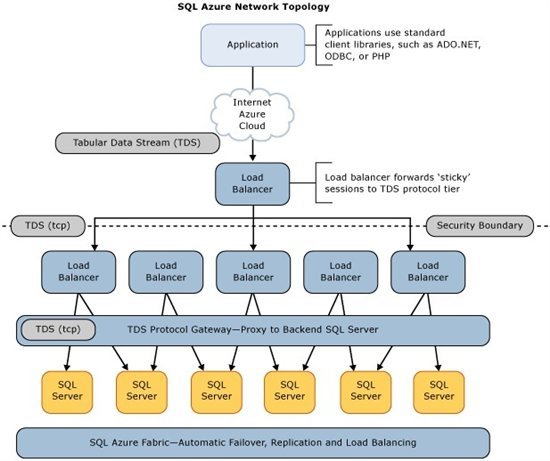

The following diagram illustrates the overall SQL Database network topology.

As seen in the diagram, SQL Database provides the same tabular data stream (TDS) interface as SQL Server. Therefore, you can use familiar tools and libraries to build client applications for data that is in the cloud.

The load balancers ensure the optimal usage of the physical servers and services in the data centers.

The TDS Gateway functions as a gateway between your application and the underlying platform, where your data resides. It performs the functions of data center isolation, provisioning, billing and metering, and connection routing.

The underlying platform consists of many instances of SQL Server, each of which is managed by the SQL Database fabric. The SQL Database fabric is a distributed computing system composed of tightly integrated networks, servers, and storage. It enables automatic failover, load balancing, and automatic replication between physical servers.

Causes of Connection Termination

Terminology

Throttling

Throttling is a mechanism used by SQL Database to prevent machines from becoming overloaded and unresponsive. Throttling works by monitoring resource consumption metrics for each machine in a cluster. When a metric exceeds a threshold, work is slowed down or aborted until the metric falls back below the threshold.

Soft throttling

Soft throttling is the first stage of throttling. It is only applied to the database consuming the most resources on the box. Soft throttling happens when a physical machine seems to be on the way to being overloaded, unless its workload is reduced. If soft throttling is successful, no other action is required to maintain the health of the cluster.

Hard throttling

Hard throttling is the second and final stage of throttling. It affects every database on the hosting machine. Hard throttling happens when a machine is critically impacted due to overload. It terminates existing operations and prevents new ones until the metric returns below expected threshold.

Connection Termination Thresholds

Connection-loss is not uncommon when databases encounter resource shortages. A unique feature of SQL Database is its ability to monitor and rebalance active and online user databases in the Microsoft data centers automatically. To achieve this, SQL Database continuously gathers and analyzes database usage statistics and will terminate connections when necessary.

The following list describes the reasons and thresholds that trigger connection-loss. All connection terminations happen asynchronously from the event causing it.

Throttling Limits

Worker Threads

As we notified earlier, Worker thread throttling mechanism has changed now and soft throttling on worker threads are replaced by worker thread governance.

With the roll-out of this new worker thread governance mechanism on all datacenters, users may see requests failing due to either one of two reasons – throttling on worker threads (40501) or worker thread governance (new error codes : 10928, 10929; see table below). During this time, it is recommended that the retry logic in your application is suitably modified to handle both throttling error code (40501) and governance error codes (10928, 10929) for worker threads.

Please go through information below and modify your applications as required.

Please note that 40501 errors seen due to hard throttling on worker threads and due to throttling on other resources will continue to be seen as before. Please ensure your error catching logic continues to handle these 40501s as before.

Old mechanism : Worker thread throttling |

Current mechanism : Worker thread governance |

|

Description |

When soft throttling limit for worker threads on a machine is exceeded, the database with the highest requests per second is throttled. Existing connections to that database are terminated if new requests are made on those connections, and new connections to the database are denied, until number of workers drops below soft throttling limit. The soft throttling limit per back-end machine currently is 305 worker threads. |

Every database will have a maximum worker thread concurrency limit. *Please note this limit is only a maximum cap and there is no guarantee that a database will get threads up to this limit, if the system is too busy.* Requests can be denied for existing connections in following cases: 1. If the maximum worker thread concurrency limit for the database is reached, user will receive error code 10928. 2. If the system is too busy, it is possible that even fewer workers are available for the database and user will receive error code 10929. This is expected to be a rare occurrence. |

Error returned |

40501 : The service is currently busy. Retry the request after 10 seconds. Incident ID: <ID>. Code: <code>. |

10928 : Resource ID: %d. The %s limit for the database is %d and has been reached. See http://go.microsoft.com/fwlink/?LinkId=267637 for assistance.

10929 : Resource ID: %d. The %s minimum guarantee is %d, maximum limit is %d and the current usage for the database is %d. However, the server is currently too busy to support requests greater than %d for this database. See http://go.microsoft.com/fwlink/?LinkId=267637 for assistance. Otherwise, please try again later.

Resource ID in both error messages indicates the resource for which limit has been reached. For worker threads, Resource ID = 1. |

Recommendations |

Back-off and retry request after 10 seconds; See best practices |

10928 : Check dm_exec_requests to view which user requests are currently executing 10929 : Back-off and retry request after 10 seconds; See best practices |

- Note :

- The hard throttling on worker thread mechanism is not being changed and will continue to return a 40501 error to user applications, as before

- In certain specific scenarios like in the case of usage of federated DB feature it is possible to hit the worker thread cap error (10928) at the time of login to a database, as this operation would utilize a worker thread underneath Connection.Open call. This may put the application above the worker thread cap threshold. Application should build in logic to handle this error appropriately (as described above) to handle such cases.

Sessions

**

Current mechanism

**Each database has a limit on the number of connections that can be made to it, specified by number of sessions that can be established. When session limit for a database is reached, new connections to the database are denied and user will receive error code 10928. Existing sessions are not terminated.

Error returned

**

10928** : Resource ID: %d. The %s limit for the database is %d and has been reached. See http://go.microsoft.com/fwlink/?LinkId=267637 *for assistance.

Resource ID in error message indicates the resource for which limit has been reached. For sessions, Resource ID = 2.

*Recommendations

**

**Check dm_exec_sessions to view which sessions are currently established.

Database Size

**

Current mechanism

**When the database space allotted to user db is full, the user gets a db full error. Non-Select DML (Insert, Update, Merge that inserts or updates) are denied.

Error returned

*40544 : The database has reached its size quota. Partition or delete data, drop indexes, or consult the documentation for possible resolutions. Incident ID: <ID>. Code: <code>.

*Limit

**

**150 GB (or less for DBs with smaller quotas)

Type of requests throttled

**

**Inserts, Updates …

Physical Database Space

Current mechanism

**

**When total database size on a machine exceeds 90% of total space available on machine, all databases become read-only. Load balancer ensures the situation is resolved by balancing databases across machines.

Error returned

**

***40545: The service is experiencing a problem that is currently under investigation. Incident ID: <ID>. Code: <code>.

*Limit

**

**90% of available space on machine

Type of requests throttled

**

**Inserts, Updates (Merge)

Long Transaction Limits

Log Bytes Used

**

Current mechanism

**SQL Database supports transactions generating log of up to 2 GB in size. Example operations that can consume log space in this volume:

insert, update, delete, merge, create index

Error returned

**

***40552 : The session has been terminated because of excessive transaction log space usage. Try modifying fewer rows in a single transaction.

*Limit

**

**Logspace per transaction : 2 GB

Type of requests throttled

**

**DDL (Create, Drop, …)

DML (Insert, Update, Delete, Merge, Select)

Recommendations

**

**To avoid getting throttled for row operations, reduce the size of data in your transaction, for example by reducing the number of rows or splitting the operation into multiple transactions. To avoid getting throttled for table/index operations that require a single transaction, ensure that the following formula is adhered to:

number of rows affected in table * (avg size of field being updated in bytes + 80) < 2 GB

(In case of index rebuild, avg size of field being updated should be substituted by avg index size)

Transaction Log Length

**

Current mechanism

**Uncommitted transactions can block the truncation of log files. To prevent this, the distance from the oldest active transaction log sequence number (LSN) to the tail of the log (current LSN) cannot exceed 20% of the size of the log file. When violated, the offending transaction is terminated and rolled back so that the log can be truncated.

Error returned

**

***40552 : The session has been terminated because of excessive transaction log space usage. Try modifying fewer rows in a single transaction.

*Limit

**

**Transaction span limit : 20% of total log space ( = 100 GB)

Type of requests throttled

**

**DDL (Create, Drop, …)

DML (Insert, Update, Delete, Merge, Select)

Transaction Lock Count

**

Current mechanism

**Sessions consuming greater than one million locks are terminated.

Error returned

**

***40550 : The session has been terminated because it has acquired too many locks. Try reading or modifying fewer rows in a single transaction.

*Limit

**

**1 million locks

Recommendations

**

**Following DMVs can be used to monitor transactions :

sys.dm_tran_active_transactions

sys.dm_tran_database_transactions

sys.dm_tran_locks

sys.dm_tran_session_transactions

Blocking System Tasks

**

Current mechanism

**Transactions request locks on resources like rows, pages, or tables, on which the transaction is dependent and then free the locks when they no longer have a dependency on the locked resources. Due to these locks, some transactions might block resources required by system sessions. If a transaction locks a resource required by an underlying system operation for more than 20 seconds, it is terminated. In addition, any transaction that runs for more than 24 hours is terminated.

Error returned

*40549 : Session is terminated because you have a long-running transaction. Try shortening your transaction.

*Limit

**

**20 seconds when holding a lock required by system operation

24 hours in general

Temp Db Usage

**

Current mechanism**

When a session uses more than 5 GB of tempdb space, the session is terminated.

Error returned

**

***40551 : The session has been terminated because of excessive TEMPDB usage. Try modifying your query to reduce the temporary table space usage.

*Limit

**

**5 GB of tempDB space (which is equivalent to 655360 pages)

Recommendations

**

**Modify queries to reduce the temporary table space usage, drop temporary objects after they are no longer needed, truncate tables or remove unused tables.

MemoryUse

**

Current mechanism

**When there are sessions waiting on memory for 20 seconds or more, sessions consuming greater than 16 MB for more than 20 seconds are terminated in the descending order of time the resource has been held, so that the oldest session is terminated first. Termination of sessions stops as soon as the required memory becomes available.

Error returned

**

***40553 : The session has been terminated because of excessive memory usage. Try modifying your query to process fewer rows.

*Limit

**

**More than 16 MB for more than 20 seconds

Too Many Requests

**

Current mechanism

**If number of concurrent requests made to a database exceed 400, all transactions that have been running for 1 minute or more are terminated.

Error returned

**

***40549 : Session is terminated because you have a long-running transaction. Try shortening your transaction.

*Limit

**

**400 concurrent requests per partition

Other causes of connection termination

Idle connections

**

Current mechanism

**Connections to SQL Database that are idle for 30 minutes or longer will be terminated.

Error returned

**

**Since there is no active request, SQL Database does not return any error.

Limit

**

**30 minutes

Denial of Service

**

Current mechanism

**When there are a high number of login failures from a particular source internet protocol (IP) address, SQL Database will block the connections from that IP address for a period of time. The connection is terminated and no error is returned.

Error returned

None

Recommendations

**

**You can configure SQL Database Firewall to protect against DoS attacks by allowing access to only the client IP addresses that are defined by the firewall rules.

Network Issues

**

Current mechanism

**Connections might be disconnected due to various network problems. Hosting application code in Windows Azure is beneficial to the performance of application because it minimizes the network latency associated with application's data requests to SQL Database. For example, if you create a Web-based user interface in Windows Azure for your database application that is hosted in SQL Database, the client code and data is hosted in the same physical data center, so network latency is minimal between the corresponding servers. Network traffic between the client code and data is much faster.

Error returned

**

**If a network problem causes connection-loss, SQL Database cannot return an error message because the session has already been terminated. However, if the same connection is reused, like with connection pooling, the following error message is returned:

10053: A transport-level error has occurred when receiving results from the server. (Provider: TCP Provider, Error: 0 - An established connection was aborted by the software in your host machine).

Failover Issues

**

Current mechanism

**SQL Database is flexible to cope with any variations in usage and load. The service replicates multiple redundant copies of data to multiple physical servers to maintain data availability and business continuity. In case of a hardware failure, SQL Database provides automatic failover to optimize availability for your application. Currently, some failover actions may result in an abrupt termination of a session.

Error returned

**

**Client application receives generic errors, such as:

*10053: A transport-level error has occurred when receiving results from the server. (Provider: TCP Provider, error: 0 - An established connection was aborted by the software in your host machine)

*or

*40197: The service has encountered an error processing your request. Please try again. Error code <code>.

*Recommendations

**

**When an abrupt termination due to failover occurs, reconnecting to your SQL Database server automatically connects you to a healthy copy of your database.

Limits Table

Resource |

Max. value per transaction/session |

Max. value per machine |

Soft throttling limit |

Hard throttling limit |

Worker Threads |

NA |

1000 |

180 | 900 |

Database Size |

150 GB per partition |

NA |

None |

100% (150 GB or upper limit for customer) |

Physical Database Space |

NA |

Total space on machine |

None |

90% |

Log Bytes Used |

2 GB per transaction |

500 GB |

NA |

NA |

Transaction Log Length |

20% of total log space (= 100 GB) |

500 GB |

NA |

NA |

Lock Count |

1 million per transaction |

NA |

NA |

NA |

Blocking System Tasks |

20 seconds |

NA |

NA |

NA |

Temp DB Space |

5 GB |

NA |

None |

5 GB |

Memory |

NA |

NA |

None |

16 MB for 20 seconds |

Too Many Requests |

400 concurrent requests per partition |

NA |

NA |

NA |

Connection-loss Errors

The following table lists the SQL Database-specific transient errors that might occur when your connection to the service is closed.

Error |

Description (message text) |

40197 |

The service has encountered an error processing your request. Please try again. Error code %d. Note: You will receive this error, when the service is down due to software or hardware upgrades, hardware failures, or any other failover problems. Reconnecting to your SQL Database server will automatically connect you to a healthy copy of your database. Note: You may see error codes 40143 and 40166 embedded within the message of error 40197. The error codes 40143 and 40166 provide additional information about the kind of failover that occurred. Do not modify your application to catch error codes 40143 and 40166. Your application should catch 40197 and try reconnecting to SQL Database until the resources are available and your connection is established again. |

40501 |

The service is currently busy. Retry the request after 10 seconds. Code: %d. For more information on how to decode the returned codes, see Windows Azure SQL Database Throttling and Reason Codes. Note: When error code 40501 is returned, retry to connect to SQL Database in intervals of 10 seconds until the resources are available and your connection is established again. |

40544 |

The database has reached its size quota. Partition or delete data, drop indexes, or consult the documentation for possible resolutions. |

40549 |

Session is terminated because you have a long-running transaction. Try shortening your transaction. |

40550 |

The session has been terminated because it has acquired too many locks. Try reading or modifying fewer rows in a single transaction. |

40551 |

The session has been terminated because of excessive TEMPDB usage. Try modifying your query to reduce the temporary table space usage. |

40552 |

The session has been terminated because of excessive transaction log space usage. Try modifying fewer rows in a single transaction. |

40553 |

The session has been terminated because of excessive memory usage. Try modifying your query to process fewer rows. |

40613 |

Database '%.*ls' on server '%.*ls' is not currently available. Please retry the connection later. If the problem persists, contact customer support, and provide them the session tracing ID of '%.*ls' |

In addition, the following table lists some additional transient errors that might occur when using SQL Database:

Error |

Description (message text) |

20 |

The instance of SQL Server you attempted to connect to does not support encryption. |

64 |

A connection was successfully established with the server, but then an error occurred during the login process. (provider: TCP Provider, error: 0 - The specified network name is no longer available.) |

233 |

The client was unable to establish a connection because of an error during connection initialization process before login. Possible causes include the following: the client tried to connect to an unsupported version of SQL Server; the server was too busy to accept new connections; or there was a resource limitation (insufficient memory or maximum allowed connections) on the server. (provider: TCP Provider, error: 0 - An existing connection was forcibly closed by the remote host.) |

10053 |

A transport-level error has occurred when receiving results from the server. An established connection was aborted by the software in your host machine. |

10054 |

A transport-level error has occurred when sending the request to the server. (provider: TCP Provider, error: 0 - An existing connection was forcibly closed by the remote host.) |

10060 |

A network-related or instance-specific error occurred while establishing a connection to SQL Server. The server was not found or was not accessible. Verify that the instance name is correct and that SQL Server is configured to allow remote connections. (provider: TCP Provider, error: 0 - A connection attempt failed because the connected party did not properly respond after a period of time, or established connection failed because connected host has failed to respond.) |

For a complete list of errors that can occur only when using SQL Database, see Error Messages (Windows Azure SQL Database).

Troubleshooting Connection-loss Errors

- Check the status of the SQL Database service: To check the status of the SQL Database service, use the Azure Health Status page. You can also click the Service Dashboard button on the Windows Azure Support page to get the status. The status page contains current service status information and historical service data.

- Trace connections: When the client application connects to SQL Database, CONTEXT_INFO (Transact-SQL) is set with a unique session specific GUID value automatically. You may retrieve this GUID value and use it in your application to trace the connectivity problems when necessary. For example code, see Development Considerations in SQL Database.

- Check DMV: SQL Database enables a subset of DMVs to diagnose the performance problems, which might be caused by blocked or long-running queries, resource bottlenecks, poor query plans, and so on. For a list of supported and unsupported DMVs in SQL Database, see System Views (Windows Azure SQL Database). The Monitoring Windows Azure SQL Database Using Dynamic Management Views topic also provides examples on how to detect common performance problems by using the DMV in SQL Database.

- Stress test: To reduce connection-losses from excessive resource usage, you can stress test your database application by slowly increasing the test workload until the desired level of concurrency is reached. When stress testing triggers a connection-loss, identify the resource limits by analyzing the returned reason codes and tune your application appropriately. For more information on reason codes, see Reason Codes in the SQL Database Database documentation.

- Contact customer support: If you think that your connection is terminated when it should not be, contact the Microsoft Customer Support. Please be prepared to provide the following information when contacting the Microsoft Customer Support:

- Your subscription ID(s), your server name, and the error message,

- The time your connection was terminated, and

- Your session ID, which is returned by the CONTEXT_INFO (Transact-SQL) function. For more information, see Trace Connections section above.

For additional troubleshooting articles, see:

- [[articles: Troubleshooting and optimizing queries with Windows Azure SQL Database]]

- Troubleshooting Performance Problems in SQL Server 2008

- Performance (Database Engine)

- Troubleshoot connection problems to Azure SQL Database

General Best Practices to Prevent Connection-Losses

To prevent the connection-losses, you must manage the application resources properly in your SQL Database applications. The established connections are the connections that are returning data or the open connections in the connection pool, or the connections being cached in the client side variables. To provide a seamless user experience when a connection is closed, re-establish the connection and then re-execute the failed commands or the query.

The following list provides a list of recommended coding practices when connecting to SQL Database. These recommended coding practices are not significantly different than the coding practices that apply to on-premise SQL Server.

Minimize network latency

- When creating a SQL Database server, choose the Microsoft data center location that is closest to you (or users) to avoid extra bandwidth costs and achieve better performance.

- (use windows azure)

Reduce network usage

- Implement an application-level caching to avoid rendering large data in every database call and also to minimize round trips between your application and SQL Database.

Manage network connection

- Keep the connection open as short as possible.

- Set connection timeout to 30 seconds in connection string. If your application cannot establish a connection to SQL Database longer than 30 seconds and if you want to report a failure, contact Microsoft Customer Support.

- Use connection pooling. The connection pooling increases code efficiency by reducing the number of times that new connections must be opened and closing the idle connections automatically. SQL Database terminates connections that have been idle for 30 minutes or longer. Implementing connection pooling in your application is beneficial as the connection pooler automatically removes a connection from the pool after it has been idle for a long time. Consider designing your application in a way that connections are opened late and closed early. For more information, see SQL Server Connection Pooling.

- Perform all database operations in transactions within a TRY...CATCH block including all exceptions. If an error prevents the successful completion of a transaction, SQL Database automatically rolls back the transaction and frees all resources held by the transaction. If an exception occurs, retry the connection.

Tune T-SQL

The following example returns information about the top five queries ranked by average CPU time. This example aggregates the queries according to their query hash, so that logically equivalent queries are grouped by their cumulative resource consumption.

-- Find top 5 queries SELECT TOP 5 query_stats.query_hash AS "Query Hash", SUM(query_stats.total_worker_time) / SUM(query_stats.execution_count) AS "Avg CPU Time", MIN(query_stats.statement_text) AS "Statement Text" FROM (SELECT QS.*, SUBSTRING(ST.text, (QS.statement_start_offset/2) + 1, ((CASE statement_end_offset WHEN -1 THEN DATALENGTH(st.text) ELSE QS.statement_end_offset END - QS.statement_start_offset)/2) + 1) AS statement_text FROM sys.dm_exec_query_stats AS QS CROSS APPLY sys.dm_exec_sql_text(QS.sql_handle) as ST) as query_stats GROUP BY query_stats.query_hash ORDER BY 2 DESC; GOQuery parameterization limits compiles: Declare the type and length of each parameter as if not specified, a parameter size might cause a cache bloat.

// if the length is not specified, a cache bloat happens. cmd.CommandText = "SELECT c1 FROM dbo.tbl WHERE c2 = @1"; cmd.Parameters.Add("@1", SqlDbType.NVarChar).Value = "1"; cmd.Parameters.Add("@1", SqlDbType.NVarChar).Value = "22"; (@1 nvarchar(1)) SELECT c1 FROM dbo.tbl WHERE c2 = @1 (@1 nvarchar(2)) SELECT c1 FROM dbo.tbl WHERE c2 = @1 // if the length is specified, there won’t be any cache bloat. cmd.CommandText = "SELECT c1 FROM dbo.tbl WHERE c2 = @1"; cmd.Parameters.Add("@1", SqlDbType.NVarChar, 128).Value = "1"; (@1 nvarchar(128)) SELECT c1 FROM dbo.tbl WHERE c2 = @1Transactions and Query Batching:

- Push query / business / application logic to SQL Database.

- Use stored procedures and batching.

- Limit the number of round-trips to the server. For example, batch 10 statements in one round-trip instead of using ten round-trips.

Implement re-try logic in your application. The SQL Database TDS Gateway retries connections for about 30 seconds before reporting a failure. If you expect a high volume of application traffic, build re-try logic in your application. If a connection fails, do the followings:

Handle idleness and transient disconnections.

Re-try to connect to SQL Database in intervals of 10 seconds until the resources are available and your connection is established again. Depending on your application, database, and network workload, increase the delay time as necessary.

Change your workload if a connection is terminated again.

If a connection is terminated again, look at the error code, find out the real problem, and then try to change your workload. You can implement a queue or a delay mechanism in your client application to reduce your workload.

One other solution could be to re-design your application and database to remove resource bottlenecks. Ensure that your application do not overload tempdb through excessive DDL or DML operations. In addition, ensure that transactions do not block any resource. When appropriate, consider partitioning your database into multiple databases. For more information on partitioning, see [[articles: Scaling out with Windows Azure SQL Database]].

Implement the following recommended coding practices in your application:

- Execute transactions in a continuous loop.

- Catch transient connection termination errors.

- Pause for a few seconds and then reconnect.

Windows Server AppFabric Customer Advisory Team developed a helper library to show the best practices for handling transient conditions in SQL Database client applications. For more information, see The Transient Fault Handling Application Block in the MSDN library.

Migration Best Practices to Prevent Connection-Losses

To move data into and out of SQL Database, you can use SQL Server Integration Services (SSIS), the bulk copy utility (bcp.exe), Microsoft Sync Framework 2.1 (or later), or custom Transact-SQL scripts. For more information on SQL Database data migration, see [[Overview of Options for Migrating Data and Schema to Windows Azure SQL Database]].

When uploading data by using custom Transact-SQL scripts (INSERT statements), try to break your upload into multiple transactions to avoid connection-losses. This will ensure to give breaks at some intervals and therefore allows you to control the upload process. If there is any unexpected connection-loss, you can resume importing data starting after the last successful batch. Note that uploading data by using Transact-SQL scripts is recommended only for small data uploads.

To transfer data to SQL Database, you can use one of the following tools:

- Bulk copy utility (bcp.exe): The bcp utility bulk copies data between SQL Database (or SQL Server) and a data file in a user-specified format. The bcp utility can be used to import large numbers of new rows into SQL Database tables or to export data out of tables into data files.

- SQL Server Integration Services (SSIS): Integration Services can extract and transform data from a wide variety of sources such as XML data files, flat files, and relational data sources, and then load the data into one or more destinations.

- Microsoft Sync Framework 2.1: Provides synchronization capabilities between on-premise and SQL Database servers, as well as between two or more SQL Databases in the same or different data centers.

When using the bcp.exe utility, all the rows in the data file are imported as one batch by default. Importing a large data file as a single batch can be problematic and might cause connection-losses. To distribute the rows among multiple batches, specify a batch_size that is smaller than the number of rows in the data file. The -b batch_size option allows you to specify the number of rows per batch of imported data. Each batch is imported and logged as a separate transaction that imports the whole batch before being committed. If the transaction for any batch fails, only insertions from the current batch are rolled back. Therefore, batches already imported by committed transactions are unaffected by a later failure. In addition, when uploading very large data, consider dividing your data into multiple sections and upload each section by executing multiple bcp commands simultaneously. To specify the number of bytes per network packet, use –a packet_size option. Increased packet size can enhance performance of bulk-copy operations. To avoid splitting data physically, you can use –F and –L options to specify the first and last rows for the upload.

When using SQL Server Integration Services (SSIS) to upload very large data, consider dividing your data into multiple files on the file system and upload each file by executing multiple streams simultaneously. For an example analysis of SSIS and BCP, see Loading Data to Windows Azure SQL Database blog post at SQL CAT Blogs.

Important: Before uploading large data to SQL Database:

- Perform pilot testing and determine the best bcp –b batch_size and –a packet_size values for your application.

- Consider reducing the number of indexes on the server side.

- If possible, disable the indexes and database constraints on server side. After upload is done, you can enable these indexes and database constraints.

For more information about migration options for SQL Database, see Migrating Databases to Windows Azure SQL Database.

See Also

- [[articles: Retry Logic for Transient Failures in Windows Azure SQL Database]]

- [[articles: Windows Azure SQL Database Connectivity Troubleshooting Guide]]

- [[Windows Azure SQL Database TechNet Wiki Articles Index]]

This article was originally written by Raja Krishnasamy, Selcin Turkarslan, and reviewed by Abirami Iyer, Cihan Biyikoglu, George Varghese, Tony Petrossian, Wayne Berry.