Azure Hadoop: Mahout Classification Sample

Overview

This tutorial illustrates how to use Apache Mahout in Hadoop on Azure to do classification.

Classification techniques attempt to answer the question of whether, or to what degree, an object belongs to a given type or category, or whether it has a given attribute.

The sample used in this tutorial is derived from the classification sample on [Mahout's website](https://cwiki.apache.org/confluence/display/MAHOUT/Twenty+Newsgroups).
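To make the idea of classification concrete, here is a minimal sketch of a word-count-based naive Bayes text classifier in plain Python. It is only an illustration of the general technique and is not Mahout's implementation; the function names `train` and `classify` and the tiny training set are hypothetical and chosen for this example only.

```python
import math
from collections import Counter, defaultdict


def train(labeled_docs):
    """Count words per category from (category, text) pairs."""
    word_counts = defaultdict(Counter)   # category -> word -> count
    doc_counts = Counter()               # category -> number of documents
    vocabulary = set()
    for category, text in labeled_docs:
        words = text.lower().split()
        word_counts[category].update(words)
        doc_counts[category] += 1
        vocabulary.update(words)
    return word_counts, doc_counts, vocabulary


def classify(text, model):
    """Return the category with the highest log-probability score."""
    word_counts, doc_counts, vocabulary = model
    total_docs = sum(doc_counts.values())
    best_category, best_score = None, -math.inf
    for category in doc_counts:
        # Log prior: share of training documents in this category.
        score = math.log(doc_counts[category] / total_docs)
        total_words = sum(word_counts[category].values())
        for word in text.lower().split():
            # Add-one (Laplace) smoothing so unseen words do not zero the score.
            count = word_counts[category][word] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        if score > best_score:
            best_category, best_score = category, score
    return best_category


# Hypothetical toy training data, invented for this sketch.
training = [
    ("sports", "the team won the hockey game"),
    ("sports", "a great baseball season for the team"),
    ("space", "the shuttle reached orbit around the moon"),
    ("space", "nasa launched a new satellite into orbit"),
]
model = train(training)
print(classify("hockey team season", model))
print(classify("satellite orbit launch", model))
```

Each category receives a score built from how often the words of the new text appeared in that category's training documents, and the highest-scoring category is returned. A real run over a large corpus works on far more data and is distributed across the cluster, but the question being answered is the same.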

Goals

In this tutorial, you learn two things:

How to use Remote Desktop in Hadoop on Azure to access the head node of the Hadoop cluster.

How to run a Mahout classification analysis from Hadoop on Azure.

Key Technologies

Setup and Configuration

You must have an account to access Hadoop on Azure and have created a cluster to work through this tutorial. To obtain an account and create a Hadoop cluster, follow the instructions outlined in the Getting started with Microsoft Hadoop on Azure section of the Introduction to Hadoop on Azure topic.

Tutorial

This tutorial consists of the following segment:

How to do classification analysis by using Mahout on Hadoop on Azure

From your Account page, scroll down to the Remote Desktop icon in the Your cluster section and click on the icon to open the head node in your cluster.

Select Open when prompted to open the .rdp file.

Select Connect in the Remote Desktop Connection window.

Enter your credentials for the Hadoop cluster (not your Hadoop on Azure account) into the Windows Security window and select OK.

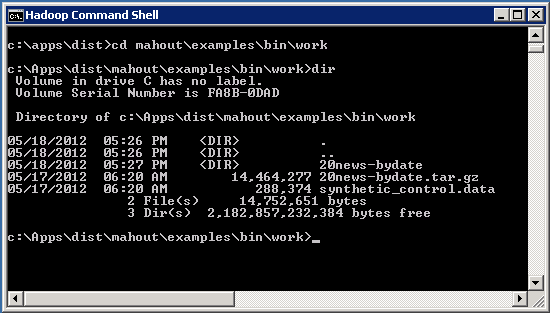

Double-click the Hadoop Command Shell icon in the upper-left corner of the desktop to open it. Change to the c:\apps\dist\mahout\examples\bin\work\ directory. The synthetic_control.data file contains the data that you analyze. To check that the synthetic_control.data file is present, run the dir command.

To launch the Mahout classification analysis on this data, go to the c:\apps\dist\mahout\examples\bin folder and run the following command: build-20news-bayes.cmd

Then watch the execution status that is reported as the job progresses.

Once the job has completed, verify that the results are in the HDFS output directories by using the following command:

hadoop fs -lsr output

To download the output of the analysis to the local file system on the head node of the cluster for further processing and visualization, use the following command:

hadoop fs -get output c:\Apps\dist\mahout\examples

Summary

In this tutorial, you have seen how to run a Mahout classification analysis from Hadoop on Azure by using Remote Desktop.