This article presents a WPF C# example of the Blackboard design pattern, available on TechNet Gallery.

http://gallery.technet.microsoft.com/Blackboard-Design-Pattern-13a35a7e

It walks through the concepts of the design pattern and highlights points of interest for C# and WPF developers.

Introduction

The Blackboard pattern is a design pattern, used in software engineering, to coordinate separate, disparate systems that need to work together or in sequence, continually cycling for updates and actions.

The blackboard consists of a number of stores or "global variables". This is similar to a repository of messages, which can be accessed by separate autonomous processes. A "Controller" monitors the properties on the blackboard and can decide which "Knowledge Sources" to prioritize.

Transputers to Grid Computers

As a student, my passion for computers earned me college sponsorship funding for my final year, followed by two years of golden handcuffs from Marconi. As a result, the first five years of my career threw me in at the deep end of military radar and civil aviation. At the time, I did not appreciate that the racks I worked on were among the world's most advanced multi-processors. For me, this was the earliest example of grid computing: physical parallel processing chips, each analysing its own part of the spectrum in four dimensions, the fourth being small grains of time.

The digital evolution through virtual machines, VMSS, Service Fabric, SF Mesh, containers, Kubernetes and AKS has always been a special interest of mine. My earliest examples of grid computing (from the radar of the 1980s) filled a lorry or a large room. It was a space packed full of multi-processors: hundreds or thousands of parallel-connected processors, originally called transputers.

Real-time Processing of Radar Data

The challenge of making [potentially life and death] decisions in real time was two-fold:

1) Process the analogue radio data into digital patterns, as finely grained and quickly as possible

2) Analyse the pattern data to identify objects as fast and accurately as possible

When you consider the number of combinations of signal frequencies and granularities, the volume of radar data is effectively unbounded. We used RTL2, a specialized language optimised for real-time work. It allowed us to embed M68000 "machine code inserts" into the code: snippets of manually crafted raw machine code.

When you are processing in near-real time, every instruction and every processor cycle counts. So we wrote the critical parts in raw machine code, rather than letting a compiler translate higher-level code into general-purpose instruction sequences. An adept machine coder could write custom routines that ran much faster than the machine code generated by the compilers we had.

Speed of analysis came from incremental cycles of processing the emerging data, in a similar way to the brain. This was a constant cycling process of elimination and weighted consensus over increasingly finer-grained (better resolution) images. It took hundreds of transputers working together for each "comb" through a slice of time. Then, on consecutive passes, further combs (slices of the time window) added more granular detail, filling in the gaps to increase the resolution.

As the images emerged with increasing clarity, they would be re-analysed and scored. When patterns emerged, they could be checked against known patterns until a small set of possibilities remained. Then other processes could test the data further, until only one possibility remained or a consensus between multiple actors (knowledge bases) was reached. Finally, the result would be acted upon. The action would be an alert or an indicator on an output console, showing a plane on its flight path, collision warnings, threat warnings, etc.

This of course sounds very similar to the Blackboard pattern, so I decided to share this project with you to demonstrate some of the concepts.

http://gallery.technet.microsoft.com/Blackboard-Design-Pattern-13a35a7e

The Concepts

In this example, the controller runs in a loop, iterating over all the Knowledge Sources that are active, and executing each.

The Blackboard Pattern defines the controller as the decision maker, choosing which "Knowledge Source" to execute and in what order. In this project, that is represented simply by a "Priority" enum which orders the Knowledge Sources. For example, the "WarMachine" knowledge source is top priority and is executed first, so any known threats are acted upon before any further decisions are made.
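A priority-ordered pass of this kind can be sketched roughly as follows. This is a minimal, console-only illustration and not the project's actual Controller: the interface is cut down to the three members the loop needs, and `SketchController`, `RunOnePass`, `LoggingSource` and the enum values are assumed names chosen for the example.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Assumed priority values; a lower number runs earlier in each pass.
public enum KnowledgeSourcePriority { High = 0, Medium = 1, Low = 2 }

// Cut-down knowledge source contract, for illustration only.
public interface IKnowledgeSource
{
    bool IsEnabled { get; }
    KnowledgeSourcePriority Priority { get; }
    void ExecuteAction();
}

// Hypothetical controller: one pass runs every enabled source in priority order.
public class SketchController
{
    private readonly List<IKnowledgeSource> sources = new List<IKnowledgeSource>();

    public void Add(IKnowledgeSource source) => sources.Add(source);

    public void RunOnePass()
    {
        // OrderBy is a stable sort, so sources of equal priority keep insertion order.
        // ToList takes a snapshot before any source is executed.
        var runnable = sources.Where(s => s.IsEnabled).OrderBy(s => s.Priority).ToList();
        foreach (var source in runnable)
            source.ExecuteAction();
    }
}

// Test double that records its name each time it is executed.
public class LoggingSource : IKnowledgeSource
{
    private readonly string name;
    private readonly List<string> log;

    public LoggingSource(string name, KnowledgeSourcePriority priority, bool enabled, List<string> log)
    {
        this.name = name;
        this.log = log;
        Priority = priority;
        IsEnabled = enabled;
    }

    public bool IsEnabled { get; }
    public KnowledgeSourcePriority Priority { get; }
    public void ExecuteAction() => log.Add(name);
}
```

Calling `RunOnePass` repeatedly (for example from a timer) gives the turn-based cycle described here; sources whose `IsEnabled` is false are simply skipped for that pass.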

The Blackboard Pattern states that each Knowledge Source is responsible for declaring whether it is available for the current state of the problem being analysed. This could in fact be an autonomous process, as the Knowledge Sources could monitor the Blackboard directly. But in this example there are multiple problems (detected objects) being analysed, so each Knowledge Source has an "IsEnabled" property, which is set if there are any valid objects on the Blackboard.

The Blackboard Pattern declares that a controller iterates over the Knowledge Sources, each taking its turn at the problem. As this is a multi-problem Blackboard, I decided to stick as closely as possible to the original concept and let each active Knowledge Source iterate over all the problems/objects that meet its execution criteria.

Some descriptions of the Blackboard Pattern include a callback delegate method, which the controller would then act upon to select the next best problem solver (Knowledge Source). Alternatively, each of these modules could work autonomously, reporting back any changes that are needed. However, this would be a change to the controller concept of the Blackboard Pattern, so I stuck to a flat turn-based system. The only exception is the Radar module. In this example, that is more of an "input" for the Blackboard than an "actor" on the data.

The Project

This document describes the project available for download at TechNet Gallery:

http://gallery.technet.microsoft.com/Blackboard-Design-Pattern-13a35a7e

MainWindow

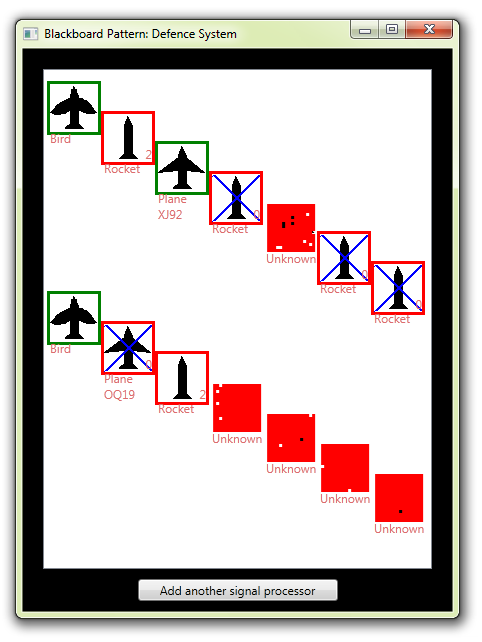

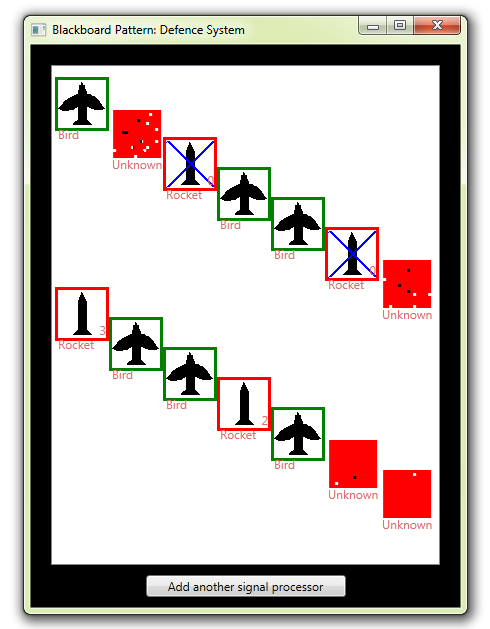

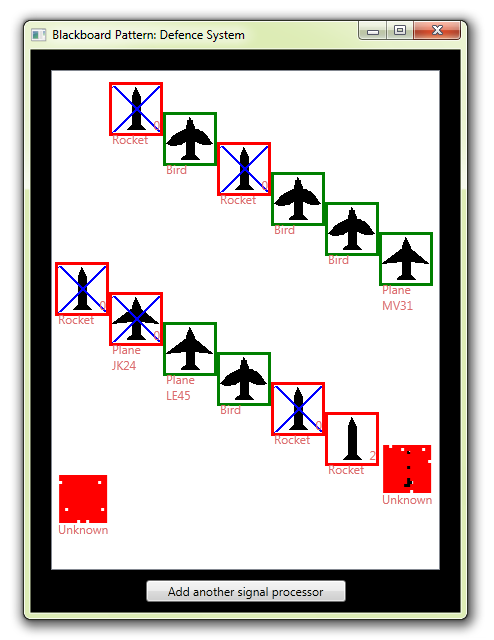

The project only has one user interface, which presents the Blackboard data as a visual representation of the objects being analysed.

The ListBox

In MainWindow.xaml is a simple ListBox. However, its ItemsPanel has been replaced with a Canvas acting as the items host, so that items can be positioned by X and Y, rather than just stacked in a list.

<ListBox ItemsSource="{Binding blackboard.CurrentObjects}" ItemsPanel="{DynamicResource ItemsPanelTemplate1}" ItemContainerStyle="{DynamicResource ItemContainerStyle}" ItemTemplate="{DynamicResource ItemTemplate}" Margin="20,20,20,10" Foreground="#FFDE6C6C" >

<ListBox.Resources>

<ItemsPanelTemplate x:Key="ItemsPanelTemplate1">

<Canvas IsItemsHost="True"/>

</ItemsPanelTemplate>

The Item Container

The ItemContainer is used for positioning (Canvas.Left & Canvas.Top), NOT the ItemTemplate itself.

<Style x:Key="ItemContainerStyle" TargetType="{x:Type ListBoxItem}">

<Setter Property="Background" Value="Transparent"/>

<Setter Property="HorizontalContentAlignment" Value="{Binding HorizontalContentAlignment, RelativeSource={RelativeSource AncestorType={x:Type ItemsControl}}}"/>

<Setter Property="VerticalContentAlignment" Value="{Binding VerticalContentAlignment, RelativeSource={RelativeSource AncestorType={x:Type ItemsControl}}}"/>

<Setter Property="Canvas.Left" Value="{Binding X}"/>

<Setter Property="Canvas.Top" Value="{Binding Y}"/>

<Setter Property="Template">

<Setter.Value>

<ControlTemplate TargetType="{x:Type ListBoxItem}">

<Border x:Name="Bd" BorderBrush="{TemplateBinding BorderBrush}" BorderThickness="{TemplateBinding BorderThickness}" Background="{TemplateBinding Background}" Padding="{TemplateBinding Padding}" SnapsToDevicePixels="true">

<ContentPresenter HorizontalAlignment="{TemplateBinding HorizontalContentAlignment}" SnapsToDevicePixels="{TemplateBinding SnapsToDevicePixels}" VerticalAlignment="{TemplateBinding VerticalContentAlignment}"/>

</Border>

</ControlTemplate>

</Setter.Value>

</Setter>

</Style>

The Item

The ItemTemplate defines the object: just an Image and a couple of TextBlocks, all wrapped in a colour-changing Border (green for safe, red for threat).

There is also a countdown TextBlock for DistanceFromDestruction, which counts down until the War Machine (planes, missiles) reaches any targets identified as threats.

<DataTemplate x:Key="ItemTemplate">

<Border>

<Border.Style>

<Style TargetType="{x:Type Border}">

<Style.Triggers>

<DataTrigger Binding="{Binding IsThreat}" Value="true">

<Setter Property="Background" Value="Red"/>

</DataTrigger>

<DataTrigger Binding="{Binding IsThreat}" Value="false">

<Setter Property="Background" Value="Green"/>

</DataTrigger>

</Style.Triggers>

</Style>

</Border.Style>

<Grid Margin="3">

<Image Height="48" Source="{Binding Image}" />

<StackPanel Margin="0,0,0,-30" VerticalAlignment="Bottom" >

<TextBlock Text="{Binding Type}"/>

<TextBlock Text="{Binding Name}"/>

</StackPanel>

<TextBlock HorizontalAlignment="Right" TextWrapping="Wrap" Text="{Binding DistanceFromDestruction}" VerticalAlignment="Bottom" Width="Auto" Visibility="{Binding IsThreat, Converter={StaticResource BooleanToVisibilityConverter}}"/>

</Grid>

</Border>

</DataTemplate>

Code Behind / ViewModel

The code behind simply instantiates the Controller and Blackboard components, and sets itself as DataContext (for any bindings) - the code-behind thereby acting a bit like a lazy MVVM ViewModel implementation.

public Blackboard blackboard { get; set; }

Controller controller;

public MainWindow()

{

InitializeComponent();

DataContext = this;

blackboard = new Blackboard();

controller = new Controller(blackboard);

}

As mentioned above, the signal processing part of the application is the most time-consuming, and when you run the application, you will notice some objects reach the top of the screen before they are identified. One good reason to use this pattern is its extensibility, as you can bolt extra modules on with ease, even while it is running. To demonstrate this, a button calls a method on the controller to add extra Signal Processors, which improves the speed at which objects are identified.

private void Button_Click(object sender, System.Windows.RoutedEventArgs e)

{

controller.AddSignalProcessor();

}

IObject, BirdObject, PlaneObject, RocketObject, ObjectBase

As all objects that are captured by the radar can have the same set of properties (name, x, y), there is a common interface called IObject:

public interface IObject

{

ObjectType Type { get; set; }

string Name { get; set; }

WriteableBitmap Image { get; set; }

bool? IsThreat { get; set; }

ProcessingStage Stage { get; set; }

int X { get; set; }

int Y { get; set; }

IObject Clone();

}

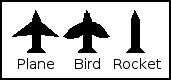

Bird, Plane & RocketObject represent the three object types we can detect, but to save time, they all derive from ObjectBase and I define them in the Radar module:

AllObjects = new List<IObject>

{

new BirdObject(ObjectType.Bird, "", new WriteableBitmap(new System.Windows.Media.Imaging.BitmapImage(new Uri(@"pack://application:,,,/Media/Bird.bmp", UriKind.Absolute))), false, false),

new PlaneObject(ObjectType.Plane, "", new WriteableBitmap(new System.Windows.Media.Imaging.BitmapImage(new Uri(@"pack://application:,,,/Media/Plane.bmp", UriKind.Absolute))), false, false),

new RocketObject(ObjectType.Rocket, "", new WriteableBitmap(new System.Windows.Media.Imaging.BitmapImage(new Uri(@"pack://application:,,,/Media/Rocket.bmp", UriKind.Absolute))), false, false),

};

This is a little lazy I admit, as each object could encapsulate its own data, but this small project is not design best practice, just design pattern concept ;)

So we have different objects and their known images, which have similarities, but as a whole are recognisably different.

Incoming Object

Once an object is "detected" by the Radar module, a new IncomingObject is added to the Blackboard's CurrentObjects collection. The actual object is hidden in a private property of the IncomingObject, which the Knowledge Sources will interrogate, to simulate the process of detection, analysis, identification and potentially its destruction!

public IncomingObject(IObject obj)

: base(ObjectType.Unknown, null, null, true, null)

{

actualObject = obj;

ProcessedPixels = new bool[16, 16];

//Paint the image as all red to start with

Image = new WriteableBitmap(48, 48, 72, 72, PixelFormats.Bgr32, null);

int[] ary = new int[(48 * 48)];

for (var x = 0; x < 48; x++)

for (var y = 0; y < 48; y++)

ary[48 * y + x] = 255 * 256 * 256;

Image.WritePixels(new Int32Rect(0, 0, 48, 48), ary, 4 * 48, 0);

}

As shown, the "known image" begins as red, as it has not been processed at all yet.
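The value `255 * 256 * 256` is red because a Bgr32 pixel, read as a 32-bit integer, holds blue in the lowest byte, then green, then red, with the top byte unused. The helper below is only an illustration of that arithmetic; `PixelPacking` and its method names are assumptions and are not part of the project.

```csharp
using System;

public static class PixelPacking
{
    // Packs colour channels into the integer layout of a Bgr32 pixel:
    // bits 0-7 blue, bits 8-15 green, bits 16-23 red, bits 24-31 unused.
    public static int PackBgr32(byte red, byte green, byte blue)
        => (red << 16) | (green << 8) | blue;

    // Channel extractors for the same layout.
    public static byte Red(int pixel) => (byte)((pixel >> 16) & 0xFF);
    public static byte Green(int pixel) => (byte)((pixel >> 8) & 0xFF);
    public static byte Blue(int pixel) => (byte)(pixel & 0xFF);
}
```

So `PackBgr32(255, 0, 0)` gives the same number as `255 * 256 * 256` (0x00FF0000), which is the "not processed yet" colour the constructor paints into every pixel.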

Knowledge Sources

Knowledge Sources represent the brains of the system, each specializing in a different stage or part of the problem. In our example, this is presented as follows:

- Signal Processing - The biggest job of number crunching the radar data (e.g. spectrogram analysis) into digital data

- Image Recognition - Comparing the partly processed image with known signatures

- Plane Identification - "Hand-shaking" with onboard computers, requesting voice contact, checking flight paths - basically deciding if friend or foe

- War Machine - if a Rocket or hostile Plane, the military take over and decide how best to deal with the target (Missile defense, scramble rapid response fighters)

They could be completely separate systems, located in separate places, communicating through secure services. However, all they would need to co-operate in the system is a shared/common interface, as outlined as an essential part of the Blackboard design pattern.

public interface IKnowledgeSource

{

bool IsEnabled { get; }

void Configure(Blackboard board);

void ExecuteAction();

KnowledgeSourceType KSType { get; }

KnowledgeSourcePriority Priority { get; }

void Stop();

}

The first three members here are as per the Blackboard design pattern.

- IsEnabled is set if there are any problems that this Knowledge Source can execute upon.

- Configure sets up any specific parameters or data that is offered by the controller to help solve the problem. In my multi-problem example it is not used, as each Knowledge Source interrogates the objects directly, from the Blackboard.

- ExecuteAction is the method that makes the Knowledge Source "do its business" on the problem(s).

Signal Processor

This is the first Knowledge Source to "act upon the problem", in that it takes the raw radar data from the "Incoming Objects", as presented on the Blackboard in the CurrentObjects collection, which the radar is reporting to. As explained, this is by far the most processor-intensive part of the system.

To represent this, I have added a level of granularity into the signal processor, allowing it to process only one small "block" of pixels from a random square of the image on each pass. This means that if the random selection is unlucky, it can take many attempts before the signal processor returns enough to identify the object. This is demonstrated in the ProcessAnotherBit method.

public override bool IsEnabled

{

get

{

for (var ix = 0; ix < blackboard.CurrentObjects.Count(); ix++)

if (blackboard.CurrentObjects[ix].Stage < ProcessingStage.Analysed)

return true;

return false;

}

}

public override void ExecuteAction()

{

for (var ix = 0; ix < blackboard.CurrentObjects.Count(); ix++)

if (blackboard.CurrentObjects[ix].Stage < ProcessingStage.Analysed)

ProcessAnotherBit(blackboard.CurrentObjects[ix]);

}

void ProcessAnotherBit(IObject obj)

{

int GRANULARITY = 16;

int blockWidth = obj.Image.PixelWidth / GRANULARITY;

Copying Between WriteableBitmaps

ProcessAnotherBit acts upon the image, which in WPF terms is a WriteableBitmap.

The method copies the known/shown image (to be worked upon) into an array of bytes, representing the image pixels.

int stride = obj.Image.PixelWidth * obj.Image.Format.BitsPerPixel / 8;

// The stride is already in bytes, so the buffer size is simply stride x height

int byteSize = stride * obj.Image.PixelHeight;

var ary = new byte[byteSize];

obj.Image.CopyPixels(ary, stride, 0);

And a similar array for the hidden (to be analyzed) image:

var unk = obj as IncomingObject;

var aryOrig = new byte[byteSize];

unk.GetActualObject().Image.CopyPixels(aryOrig, stride, 0);

It then chooses a random square from "aryOrig" and copies the pixels into the known/shown byte array "ary":

for (var iy = 0; iy < blockWidth; iy++)

{

for (var ix = 0; ix < blockWidth; ix++)

for (var b = 0; b < 4; b++)

{

ary[curix] = aryOrig[curix];

curix++;

}

curix = curix + stride - (blockWidth * 4);

}

And finally returns the updated array to the actual WriteableBitmap:

obj.Image.WritePixels(new Int32Rect(0, 0, obj.Image.PixelWidth, obj.Image.PixelHeight), ary, stride, 0);
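The block copy can be illustrated without WPF by working on plain byte arrays. This is a sketch under stated assumptions rather than the project's code: it assumes 4 bytes per pixel (Bgr32), and `BlockCopier`, `Stride`, `CopyBlock`, `blockX` and `blockY` are hypothetical names, since the article does not show how the starting index `curix` is chosen.

```csharp
using System;

public static class BlockCopier
{
    public const int BytesPerPixel = 4; // Bgr32

    // Number of bytes in one row of pixels.
    public static int Stride(int pixelWidth) => pixelWidth * BytesPerPixel;

    // Copies one blockWidth x blockWidth square of pixels from source to target.
    // blockX and blockY are block coordinates in the grid, not pixel coordinates.
    public static void CopyBlock(byte[] source, byte[] target, int pixelWidth,
                                 int blockWidth, int blockX, int blockY)
    {
        int stride = Stride(pixelWidth);
        int rowBytes = blockWidth * BytesPerPixel;

        // Byte offset of the block's top-left pixel.
        int start = (blockY * blockWidth * stride) + (blockX * rowBytes);

        // Copy the block one pixel row at a time; each row begins one stride further on.
        for (int row = 0; row < blockWidth; row++)
        {
            int offset = start + (row * stride);
            Array.Copy(source, offset, target, offset, rowBytes);
        }
    }
}
```

With the 48 x 48 images and GRANULARITY of 16 used here, blockWidth is 3, the stride is 192 bytes and the whole buffer is 9,216 bytes. A random square could then be picked with two calls to `new Random().Next(16)` for `blockX` and `blockY`.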

Image Recognition

This Knowledge Source is responsible for trying to figure out what the pixelated image is. This is done on every "pass" of the data, as the image takes form. Every time a block of pixels is added to the known image, it is compared against known images to see if it now matches only ONE of the images. Although not shown in this simple example, it could also trigger if all remaining images are hostile, allowing the War Machine to get involved before the image is even fully analysed.

Comparing Pixels

This is an ugly piece of byte crunching code not worth documenting, except for the actual ARGB comparison:

for (var ix = 0; ix < blockWidth; ix++)

{

var argb1 = (ary[curix + 1] * 256 * 256) + (ary[curix + 2] * 256) + ary[curix + 3];

var argb2 = (aryKnown[curix + 1] * 256 * 256) + (aryKnown[curix + 2] * 256) + aryKnown[curix + 3];

if (argb1 != 255 * 256 * 256 && argb1 != argb2)

{

nomatch = true;

break;

}

curix += 4;

}

As you can see, it is iterating through the array of bytes, pixel by pixel (4 bytes at a time). This code specifically checks the RGB values for a match.
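The rule behind that comparison is that a pixel which is still the "unprocessed" red never rules a candidate out; only processed pixels have to agree. A simplified sketch of the same idea follows, working on packed integer pixels instead of raw bytes. `PartialMatch`, `StillMatches` and `Remaining` are hypothetical names and not the project's API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class PartialMatch
{
    // Marker for a pixel that has not been processed yet (pure red in Bgr32).
    public const int Unprocessed = 255 * 256 * 256;

    // True when every processed pixel in 'partial' equals the same pixel in 'known'.
    public static bool StillMatches(int[] partial, int[] known)
    {
        if (partial.Length != known.Length) return false;

        for (int i = 0; i < partial.Length; i++)
        {
            // Unprocessed pixels are wildcards; processed ones must agree exactly.
            if (partial[i] != Unprocessed && partial[i] != known[i])
                return false;
        }
        return true;
    }

    // Returns the names of the candidates the partial image has not yet ruled out.
    public static List<string> Remaining(int[] partial, IDictionary<string, int[]> candidates)
        => candidates.Where(c => StillMatches(partial, c.Value)).Select(c => c.Key).ToList();
}
```

One consequence of using a colour as the marker, in this sketch at least, is that a known image containing genuinely pure red pixels cannot be told apart from unprocessed data at those positions.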

If the detection code finds only one match remains, then the data is retrieved for the hidden object, representing the "found you" moment, when you pull the relevant data from lookup databases, and potentially representing many other Knowledge Sources which may have been watching the Blackboard for such updates.

if (matches.Count() == 1)

{

obj.Type = matches[0].Type;

obj.Name = matches[0].Name;

obj.IsThreat = matches[0].IsThreat;

obj.Image = new WriteableBitmap(matches[0].Image); //Create new image instance

if (obj.Type != ObjectType.Plane)

obj.Stage = ProcessingStage.Identified;

else

obj.Stage = ProcessingStage.Analysed;

}

Plane Identification

This Knowledge Source is just an example of another analyzer layer that could process the data further. We have BirdObjects (safe) and RocketObjects (hostile), but we also have PlaneObjects, which could be either, depending on further analysis. In this module's ExecuteAction method, it emulates the process of further analysis, whether that be initiating a hand-shake protocol with onboard computer identification systems, a manual contact attempt from air traffic controllers, or checking the resulting data against published flight paths.

This is a good example where a callback method would be used, as further analysis could take "human time", which is of course, the slowest of all.

In this simple example, this is represented by further extraction of data from the hidden actual IObject, within the IncomingObject:

for (var ix = 0; ix < blackboard.CurrentObjects.Count(); ix++)

{

var obj = blackboard.CurrentObjects[ix];

if (obj.Stage == ProcessingStage.Analysed && obj.Type == ObjectType.Plane)

{

var unk = obj as IncomingObject;

var actual = unk.GetActualObject();

obj.Name = actual.Name;

obj.IsThreat = actual.IsThreat;

obj.Stage = ProcessingStage.Identified;

}

}

The War Machine!

This final Knowledge Source represents one possible "solution" to the problem :)

To add extra "peril" to this example, it takes three seconds (passes) for the military to "respond and react" to an object that is identified and labelled as hostile.

With each pass of the War Machine, each 'military response' gets closer to its target. This represents the time it takes for Missile Defence Systems to respond, or for SAMs or "rapid response" type fighter jets to scramble and intercept the target.
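The article does not show how `MoveHitsTarget` is implemented, so the following is only a guess at the idea: a counter that starts at three and drops by one on every pass. `ResponseCountdown` is a hypothetical stand-in for the relevant part of IncomingObject, and the starting value of 3 is taken from the "three passes" described above.

```csharp
using System;

// Hypothetical stand-in for the part of IncomingObject that tracks the response.
public class ResponseCountdown
{
    // Passes remaining before the response reaches the target.
    public int DistanceFromDestruction { get; private set; }

    public ResponseCountdown(int passes = 3)
    {
        DistanceFromDestruction = passes;
    }

    // Moves the response one step closer and returns true once it has arrived.
    public bool MoveHitsTarget()
    {
        if (DistanceFromDestruction > 0)
            DistanceFromDestruction--;

        return DistanceFromDestruction == 0;
    }
}
```

In this sketch the method keeps returning true after arrival, so the caller is expected to stop calling it once the target has been dealt with, much as the War Machine skips objects whose Stage is Actioned.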

Drawing on a WriteableBitmap

The "final blow" is represented by a little piece of WriteableBitmap manipulation, to draw a cross over the image:

public override void ExecuteAction()

{

for (var ix = 0; ix < blackboard.CurrentObjects.Count(); ix++)

{

var obj = blackboard.CurrentObjects[ix] as IncomingObject;

if (obj.IsThreat != null && obj.IsThreat.Value && (obj.Stage != ProcessingStage.Actioned))

{

if (obj.MoveHitsTarget())

DestroyTarget(obj);

}

}

}

private void DestroyTarget(IncomingObject obj)

{

int stride = obj.Image.PixelWidth * obj.Image.Format.BitsPerPixel / 8;

// The stride is already in bytes, so the buffer size is simply stride x height

int byteSize = stride * obj.Image.PixelHeight;

var ary = new byte[byteSize];

obj.Image.CopyPixels(ary, stride, 0);

DrawCross(stride, ary);

obj.Image.WritePixels(new Int32Rect(0, 0, obj.Image.PixelWidth, obj.Image.PixelHeight), ary, stride, 0);

obj.Stage = ProcessingStage.Actioned;

}

private static void DrawCross(int stride, byte[] ary)

{

for (var y = 1; y < 47; y++)

{

var line1Pos = (y * stride) + (y * 4);

var line2Pos = (y * stride) + (stride - 4) - (y * 4);

for (var a = -1; a < 2; a++)

{

ary[line1Pos + 4 + (a * 4)] = ary[line2Pos + 4 + (a * 4)] = 255;

ary[line1Pos + 5 + (a * 4)] = ary[line2Pos + 5 + (a * 4)] = 0;

ary[line1Pos + 6 + (a * 4)] = ary[line2Pos + 6 + (a * 4)] = 0;

ary[line1Pos + 7 + (a * 4)] = ary[line2Pos + 7 + (a * 4)] = 0;

}

}

}

Blackboard Extensibility

Given how long the Signal Processor can take to process the radar data, plus the extra delay in hitting the targets, some objects may not get processed in time.

This is of course unacceptable in such a system, so it would need to expand and grow with demand. As our controller is simply iterating over a list of known Knowledge Sources, it is a simple matter of adding to that collection. This is demonstrated with the "Add another signal processor" button. Each click adds extra processing power to each loop of the controller, and after just a few clicks, you will see the images getting identified within just a few iterations.

This represents one of the main selling points of this design pattern.

Extra Knowledge Sources will also bring their own priority attributes, allowing them to slip into the collection at whatever priority is required.

This is also why the Controller is needed, to centralize the decision process and mediate between multiple Knowledge Sources.
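One way a source could "slip in" at its own priority while the controller is running is to keep the collection ordered on insert and let the controller iterate over a snapshot. This is a sketch of that idea only; `PrioritisedSources`, `Add` and `Snapshot` are hypothetical names, the integer priority (lower runs first) is an assumption, and the project itself may handle this differently.

```csharp
using System;
using System.Collections.Generic;

public class PrioritisedSources<T>
{
    private readonly List<(int Priority, T Source)> items = new List<(int Priority, T Source)>();
    private readonly object gate = new object();

    // Inserts the source after existing entries of the same or a more urgent priority
    // (a lower number means more urgent).
    public void Add(int priority, T source)
    {
        lock (gate)
        {
            int index = items.FindIndex(item => item.Priority > priority);
            if (index < 0)
                items.Add((priority, source));
            else
                items.Insert(index, (priority, source));
        }
    }

    // Returns a copy, so a running loop is not disturbed when another thread adds a source.
    public List<T> Snapshot()
    {
        lock (gate)
        {
            return items.ConvertAll(item => item.Source);
        }
    }
}
```

A button handler could then call `Add` at any time, and the next controller pass would pick the new source up from a fresh `Snapshot`.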

Download and Try!

This project is available for download and further study in the TechNet Gallery:

http://gallery.technet.microsoft.com/Blackboard-Design-Pattern-13a35a7e
