UNISA Chatter – Operating System Concepts: Part 8 … Memory

See UNISA – Summary of 2010 Posts for a list of related UNISA posts. In this post we briefly investigate memory management.


What is memory and why bother?

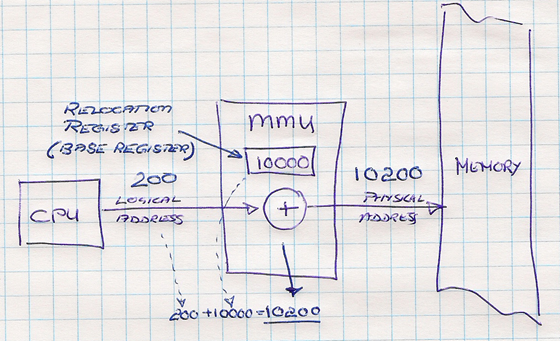

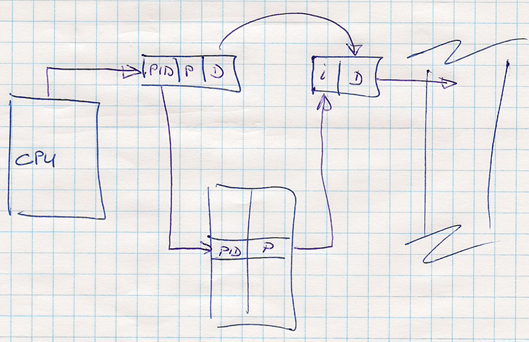

Memory is a potentially huge array of bytes used to store data and instructions, which the central processing unit (CPU) accesses by address. A physical address identifies a unique location in the physical memory hardware, whereas a logical address refers to a location in an abstract address space. A hardware device known as the memory-management unit (MMU) performs the magic of converting logical addresses to physical addresses, which means that a logical address is generated by the CPU and the corresponding physical address is produced by the MMU.

… logical address to physical address
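To make the conversion concrete, here is a minimal Python sketch of the simplest MMU scheme, dynamic relocation with a relocation (base) register and a limit register. The class name `SimpleMMU`, the `AddressingError` exception and the register values are hypothetical; modern MMUs usually translate through page tables instead, as discussed under Paging.

```python
class AddressingError(Exception):
    """Raised when a logical address falls outside the process's address space."""


class SimpleMMU:
    """Hypothetical MMU using a relocation (base) register and a limit register."""

    def __init__(self, relocation: int, limit: int) -> None:
        self.relocation = relocation  # physical address where the process starts
        self.limit = limit            # size of the process's logical address space

    def translate(self, logical: int) -> int:
        # The CPU generates the logical address; it must lie in [0, limit).
        if not 0 <= logical < self.limit:
            raise AddressingError(f"logical address {logical} is out of range")
        # The MMU produces the physical address by adding the relocation register.
        return logical + self.relocation


mmu = SimpleMMU(relocation=14000, limit=3000)
print(mmu.translate(346))  # 14346
```

The process only ever sees addresses 0 to 2999; where it actually lives in physical memory is decided by the relocation register, which the operating system can change when the process is moved.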


- Firstly, why do we need memory?

- In UNISA Chatter – Operating System Concepts: Part 1 … Introduction we introduced some of the computer system puzzles, and memory sits at the fast end of the storage hierarchy. There is definitely a need for speed, which creates demand for memory in terms of both size and speed. Accessing main memory, for example, can take many CPU clock cycles, which would introduce intolerable bottlenecks; as a result, we place fast memory known as cache between the CPU and main memory.

- Secondly, why do we need a differentiation between logical and physical addresses?

- When we start considering multi-process, multi-user, multi-processor and multi-tasking operating systems we quickly realise that we have more than one entity competing for memory.

- Binding of program instructions and data to memory addresses is typically done at compile time, at load time or at execution time. If we have one memory space and every program has a fixed home, we could use compile-time binding to generate absolute addresses. However, with the advent of dynamic link libraries, swapping and paging, a mixture of load-time and execution-time binding has entrenched itself.

- Protection of the memory space, sharing of the memory space and potentially offering more memory space than is physically available call for features such as swapping, paging and segmentation, which we will briefly explore.

Swapping

One way to avoid running out of memory is to swap processes out to a backing store (roll out) and back into memory (roll in) based on utilization, priority or some other criteria. While this option was used in operating systems such as Windows 3.1, it has a huge context-switching latency, because the whole process image has to be transferred to and from the backing store.
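A back-of-the-envelope calculation shows why swapping is so slow. The sketch below assumes that swap time is dominated by transferring the whole process image to and from the backing store; the function name `swap_time_seconds` and the process size and transfer rate in the example are made-up figures, not measurements.

```python
def swap_time_seconds(process_mb: float, transfer_mb_per_s: float,
                      latency_s: float = 0.0) -> float:
    """Rough cost of rolling one process out and a same-sized process back in.

    Assumes each transfer costs size / transfer rate plus a fixed latency,
    and ignores everything else (scheduling, partial swaps, caching).
    """
    one_way = process_mb / transfer_mb_per_s + latency_s
    return 2 * one_way  # roll out + roll in


# Hypothetical figures: a 100 MB process and a backing store sustaining 50 MB/s.
print(swap_time_seconds(100, 50))  # 4.0
```

Even before any disk latency is added, several seconds of transfer per swap dwarfs the cost of an ordinary context switch.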

Contiguous Memory Allocation

With contiguous memory allocation we divide memory into fixed- or variable-sized partitions, where each partition is owned by one process. With variable-sized partitions, memory becomes fragmented as processes come and go, leaving holes of unused memory lying around. To choose which hole a new process goes into, there are three basic strategies:

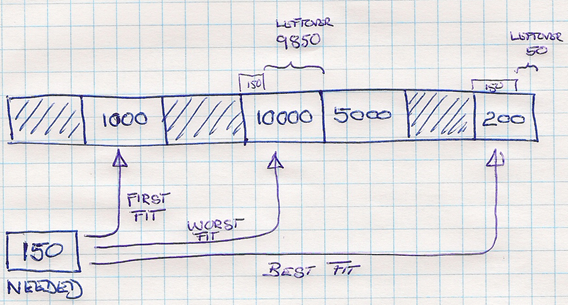

… first, best and worst fit strategies.


- First fit

- Allocate the first hole that is big enough.

- Best fit

- Allocate the smallest hole that is big enough.

- We must either order the list of holes by size or search the entire list to find a suitable hole.

- Worst fit

- Allocate the largest hole.

- We must either order the list of holes by size or search the entire list to find a suitable hole.

- This strategy has an advantage over best fit, as the leftover hole (hole size – partition size) is larger and therefore more likely to be re-usable.
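The three strategies can be sketched in a few lines of Python. This assumes a hypothetical free list that records only hole sizes in address order; a real allocator would also track hole start addresses and merge adjacent holes when memory is freed.

```python
from typing import List, Optional


def choose_hole(holes: List[int], request: int, strategy: str) -> Optional[int]:
    """Return the index of the hole chosen for `request`, or None if none fits.

    `holes` is a hypothetical free list: hole sizes in address order.
    """
    if strategy == "first":
        for i, size in enumerate(holes):
            if size >= request:
                return i  # stop at the first hole that is big enough
        return None
    # Best and worst fit must examine every hole (the list is not sorted by size).
    fits = [i for i, size in enumerate(holes) if size >= request]
    if not fits:
        return None
    if strategy == "best":
        return min(fits, key=lambda i: holes[i])  # smallest hole that is big enough
    if strategy == "worst":
        return max(fits, key=lambda i: holes[i])  # largest hole
    raise ValueError(f"unknown strategy: {strategy}")


holes = [100, 500, 200, 300, 600]  # hypothetical hole sizes in KB
for strategy in ("first", "best", "worst"):
    i = choose_hole(holes, 212, strategy)
    print(strategy, i, holes[i] - 212)  # chosen hole index and leftover hole size
# first 1 288
# best 3 88
# worst 4 388
```

For the same 212 KB request, worst fit leaves the largest leftover hole (388 KB) and best fit the smallest (88 KB), which is the trade-off described in the list.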

Paging

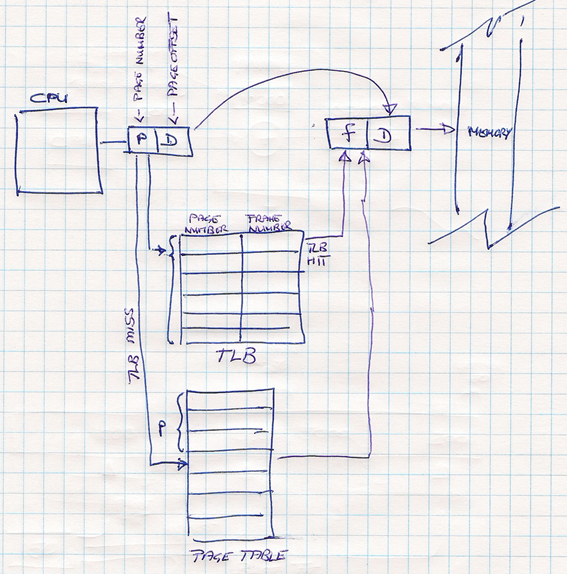

Paging is a memory-management scheme that allows the physical address space of a process to be non-contiguous, which avoids external fragmentation and the need for expensive defragmentation (compaction). With paging, the logical address is broken up into two parts, namely the page number (p), used as an index into a page table, and the page offset (d). The page table maps each page number to the frame that holds the page in physical memory. Large page tables cannot be kept in fast registers and live in main memory instead, so the hardware adds a translation look-aside buffer (TLB), a small, high-speed associative memory that caches recently used page-table entries.
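As a worked example of the page number and offset split, here is a Python sketch assuming 4 KB pages, so the low 12 bits of a logical address form the offset d and the remaining bits form the page number p. The page-table contents are hypothetical and represented as a plain dictionary from page number to frame number.

```python
from typing import Dict, Tuple

PAGE_SIZE = 4096                          # assumed page size (2**12 bytes)
OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # 12 bits of page offset


def split(logical: int) -> Tuple[int, int]:
    """Split a logical address into (page number p, page offset d)."""
    return logical >> OFFSET_BITS, logical & (PAGE_SIZE - 1)


def translate(logical: int, page_table: Dict[int, int]) -> int:
    p, d = split(logical)
    if p not in page_table:
        raise ValueError(f"invalid page {p}")
    f = page_table[p]          # page table maps page p to frame f
    return f * PAGE_SIZE + d   # frame base address plus the unchanged offset


page_table = {0: 5, 1: 6, 2: 1, 3: 2}      # hypothetical page -> frame mapping
print(split(0x1234))                        # (1, 564)
print(hex(translate(0x1234, page_table)))   # 0x6234
```

Only the page number is replaced during translation; the offset passes through untouched, which is why a page and a frame must be the same size.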


… paging, with the TLB and page table.


As shown in the illustration above, if the page number (p) is found in the TLB, the frame number (f) is immediately available and used to access memory. If the page number is not found in the TLB, we encounter what is known as a TLB miss and have to consult the page table in memory, which also yields the frame number but costs an additional memory access and considerably more CPU cycles.
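The TLB lookup path can be sketched as follows. This is a simplification under stated assumptions: a tiny fully associative TLB with least-recently-used replacement (real TLBs vary in size, associativity and replacement policy), a hypothetical page table, and a commonly used textbook model of effective access time that ignores page faults. The 20 ns TLB and 100 ns memory timings are illustrative only.

```python
from collections import OrderedDict
from typing import Dict


class TLB:
    """Tiny fully associative TLB with LRU replacement (a deliberate simplification)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.entries: "OrderedDict[int, int]" = OrderedDict()  # page p -> frame f
        self.hits = 0
        self.misses = 0

    def lookup(self, page: int, page_table: Dict[int, int]) -> int:
        if page in self.entries:              # TLB hit: frame is available at once
            self.hits += 1
            self.entries.move_to_end(page)    # mark as most recently used
            return self.entries[page]
        self.misses += 1                      # TLB miss: consult the page table in memory
        frame = page_table[page]
        self.entries[page] = frame            # cache the translation for next time
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return frame


def effective_access_time(hit_ratio: float, tlb_ns: float, mem_ns: float) -> float:
    """Hit: TLB lookup + one memory access. Miss: TLB lookup + page-table access + memory access."""
    return hit_ratio * (tlb_ns + mem_ns) + (1 - hit_ratio) * (tlb_ns + 2 * mem_ns)


page_table = {0: 5, 1: 6, 2: 1, 3: 2}  # hypothetical page -> frame mapping
tlb = TLB(capacity=2)
for p in [0, 1, 0, 2, 0, 1]:
    tlb.lookup(p, page_table)
print(tlb.hits, tlb.misses)                           # 2 4
print(round(effective_access_time(0.8, 20, 100), 6))  # 140.0
```

With an 80% hit ratio the average access costs 140 ns, compared with 120 ns for a guaranteed hit, so every improvement in hit ratio directly trims the paging overhead.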

Variations on the page-table structure include:

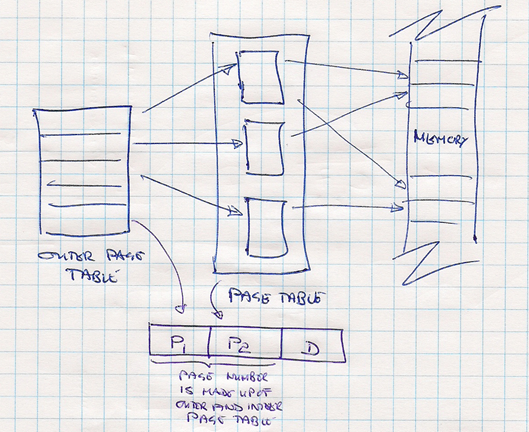

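- The two-level page-table scheme, which pages the page table itself: an outer page table points to the pages of the page table, so only the parts of the page table that are actually in use need to be allocated, reducing the memory footprint of the page table.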

… two-level page-table scheme.

- Hashed page table scheme, in which the virtual page number is hashed into a table whose entries are linked lists of the elements that hash to the same location.

- Inverted page table scheme, which has one entry for each real page (frame) of memory, holding the virtual page number stored in that frame together with information about the owner process.

… inverted page table scheme.
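The three page-table layouts can be sketched in Python as follows. The sketch assumes a 32-bit logical address with 4 KB pages, split 10/10/12 into an outer index p1, an inner index p2 and an offset d for the two-level scheme; the table contents, bucket count and process IDs are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

OFFSET_BITS = 12  # assumed 4 KB pages
INDEX_BITS = 10   # assumed 10-bit p1 and p2 fields in a 32-bit address
INDEX_MASK = (1 << INDEX_BITS) - 1


def split_two_level(logical: int) -> Tuple[int, int, int]:
    """Split a 32-bit logical address into (p1, p2, d)."""
    d = logical & ((1 << OFFSET_BITS) - 1)                 # offset within the page
    p2 = (logical >> OFFSET_BITS) & INDEX_MASK             # index into a page of the page table
    p1 = (logical >> (OFFSET_BITS + INDEX_BITS)) & INDEX_MASK  # index into the outer table
    return p1, p2, d


def translate_two_level(logical: int, outer: Dict[int, Dict[int, int]]) -> int:
    p1, p2, d = split_two_level(logical)
    inner = outer.get(p1)  # None: that page of the page table was never allocated
    if inner is None or p2 not in inner:
        raise ValueError("invalid address")
    return (inner[p2] << OFFSET_BITS) | d


class HashedPageTable:
    """Each bucket chains (virtual page number, frame) pairs that hash to the same slot."""

    def __init__(self, buckets: int = 16) -> None:
        self.buckets: List[List[Tuple[int, int]]] = [[] for _ in range(buckets)]

    def _chain(self, vpn: int) -> List[Tuple[int, int]]:
        return self.buckets[hash(vpn) % len(self.buckets)]

    def insert(self, vpn: int, frame: int) -> None:
        self._chain(vpn).append((vpn, frame))

    def lookup(self, vpn: int) -> Optional[int]:
        for entry_vpn, frame in self._chain(vpn):  # walk the chain comparing page numbers
            if entry_vpn == vpn:
                return frame
        return None


def translate_inverted(inverted: List[Tuple[int, int]], pid: int, vpn: int, offset: int) -> int:
    """`inverted[frame]` holds the (pid, virtual page number) stored in that frame."""
    for frame, entry in enumerate(inverted):  # search the table; the index is the frame
        if entry == (pid, vpn):
            return (frame << OFFSET_BITS) | offset
    raise ValueError("page not resident")


addr = (3 << 22) | (7 << 12) | 0x0AB
print(split_two_level(addr))                         # (3, 7, 171)
print(hex(translate_two_level(addr, {3: {7: 42}})))  # 0x2a0ab
hpt = HashedPageTable(buckets=4)
hpt.insert(1, 10)
hpt.insert(5, 20)  # 1 and 5 land in the same bucket and are chained
print(hpt.lookup(5), hpt.lookup(9))                  # 20 None
print(translate_inverted([(1, 0), (2, 0), (1, 3)], pid=1, vpn=3, offset=0x10))  # 8208
```

Note how the inverted table has to be searched for the (process, page) pair; real implementations usually combine it with a hash table to keep that search short.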


Another interesting feature of paging is that multiple page-table entries can point to the same page frame in memory, which allows processes to share re-entrant code and data. Alternatively, processes could share a page by using the same page number for it, or by redirecting all references through registers that map to the page number.
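Sharing through page tables can be sketched like this. The layout is hypothetical: two processes run the same three pages of read-only, re-entrant code, mapped to the same frames, while each process has its own private data page in a different frame. The names `make_page_table` and `physical` are illustrative only.

```python
from typing import Dict, List

PAGE_SIZE = 4096  # assumed page size

# Hypothetical layout: three pages of shared, read-only (re-entrant) code in frames 3, 4 and 6.
SHARED_CODE_FRAMES: List[int] = [3, 4, 6]


def make_page_table(private_data_frame: int) -> Dict[int, int]:
    """Pages 0-2 map to the shared code frames; page 3 is this process's private data."""
    table = {page: frame for page, frame in enumerate(SHARED_CODE_FRAMES)}
    table[len(SHARED_CODE_FRAMES)] = private_data_frame
    return table


def physical(table: Dict[int, int], logical: int) -> int:
    page, offset = divmod(logical, PAGE_SIZE)
    return table[page] * PAGE_SIZE + offset


p1 = make_page_table(private_data_frame=1)
p2 = make_page_table(private_data_frame=7)
print(physical(p1, 100) == physical(p2, 100))                    # True: same code frame
print(physical(p1, 3 * PAGE_SIZE), physical(p2, 3 * PAGE_SIZE))  # 4096 28672: private data
```

Only one copy of the code occupies physical memory, which works precisely because re-entrant code never modifies itself.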

Segmentation

Segmentation is a memory-management scheme that supports a more natural view of memory. Compilers typically divide things like code, global variables, the heap and the stack into different areas, and segmentation lets each of these areas become a content-specific segment of its own.
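Under segmentation, a logical address is commonly expressed as a (segment number, offset) pair, and a segment table records a base and a limit for each segment. The Python sketch below assumes a hypothetical four-segment table matching the areas named above; an offset beyond a segment's limit is rejected, where real hardware would trap to the operating system.

```python
from typing import List, Tuple

# Hypothetical segment table: (base, limit) per segment number.
SEGMENT_TABLE: List[Tuple[int, int]] = [
    (1400, 1000),  # segment 0: code
    (6300, 400),   # segment 1: global variables
    (4300, 400),   # segment 2: heap
    (3200, 1100),  # segment 3: stack
]


def translate_segment(segment: int, offset: int) -> int:
    """Translate a (segment number, offset) logical address to a physical address."""
    base, limit = SEGMENT_TABLE[segment]
    if not 0 <= offset < limit:
        # Real hardware would trap to the operating system here.
        raise ValueError(f"offset {offset} is beyond the limit of segment {segment}")
    return base + offset


print(translate_segment(2, 53))   # 4353
print(translate_segment(3, 852))  # 4052
```

Unlike pages, segments have different lengths, so each one needs its own limit check.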

Some operating systems and hardware support both segmentation and segmentation combined with paging, as is the case with the Intel Pentium processor.

Next up will be virtual memory management. See you next time :)

Comments

- Anonymous

November 08, 2013

Thanks ...really well put