UNISA Chatter – Operating System Concepts: Part 7 … Deadlocks

See UNISA – Summary of 2010 Posts for a list of related UNISA posts. this post we briefly investigates scheduling, which is important when we want to operate in a multi-process and multi-thread backyard.

In the previous post we spoke about teenagers fighting for the pizza, the tomato sauce … what happens when the one teenager grabs the pizza and the other the tomato  sauce? Probably the same as when two 747 cargo planes are lining up on either end of the runway, open throttle and rush down the runway from opposite directions, two trucks crossing a bridge from opposite sides on a single lane bridge or a climber coming down the rope, while another is busy climbing up on the same rope … we will have a clash, a conflict, a Mexican standoff … more commonly referred to as a “deadlock”.

sauce? Probably the same as when two 747 cargo planes are lining up on either end of the runway, open throttle and rush down the runway from opposite directions, two trucks crossing a bridge from opposite sides on a single lane bridge or a climber coming down the rope, while another is busy climbing up on the same rope … we will have a clash, a conflict, a Mexican standoff … more commonly referred to as a “deadlock”.

What is a deadlock?

A deadlock is a situation in which two or more processes are waiting indefinitely for an event that can only be initiated by one of the waiting processes. Operating systems deal with deadlocks in three major ways:

- Prevent deadlocks, ensuring that a system never enters a situation where a deadlock can occur.

- Detects and recovers from deadlocks.

- Put the processor in the sand and pretend that deadlocks will never occur … Windows and Unix use this option :|

To enter a deadlock, four conditions must exist. What are they?

Mutual exclusion … a resource is held in a non-shareable state. If process A is using the resource and any other process that requires the resource is placed into a wait state.

Hold and wait … a process must be holding on to resources and request other resources that are held by another process.

No pre-emption … processes and held resources cannot be pre-empted, relying on processes to voluntarily release their held resources.

Circular wait … a set of processes must exist such that the one process A is waiting on a resource held by resource B and vice versa, creating a circular dependency and wait situation.

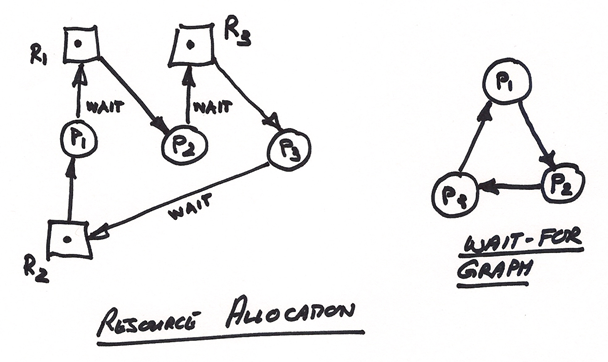

The following diagrams show a typical circular wait, where P1 is waiting for a resource (R1) held by P2, P2 is waiting for a resource (R3) held by P3 and P3 is waiting on a resource (R2) held by P1 … we have the bone-in-cheek-wait-forever scenario:

Avoiding deadlocks

To avoid deadlocks we can pursue a strategy of a “safe state”, which means that we essentially ensure that allocate resources only to a point where we still have a safe sequence, meaning that each process can obtain needed resources, performs its tasks and return its reserved resources. If this is not the case, we may enter an unsafe state, which is not necessarily the message of gloom and doom, but raises the possibility of a deadlock.

For example, assume we have processes 1, 2 and 3, competing on a system with 10 resources allocated as follows:

| Max | Current | Need | |

| P1 | 9 | 4 | 5 |

| P2 | 4 | 2 | 2 |

| P3 | 8 | 2 | 6 |

Max = Current (Allocation) + Need (Waiting)

We have three processes that will potentially ask for 21 resources, 11 more than we have. At this stage only 8 resources are allocated.

- If P1 where to complete we would release four current and a possible 9 resources, allowing P3 to complete even when asking for another 6 needed resources. It could be …

- If P2 completes we would release two current and a possible 4 resources, which would not allow either P1 or P2 to complete if they scream for their needed resources.

In short, we do not know and we cannot guarantee completing of the three processes, thus ending up in an unsafe mode.

Is it work to implement deadlock avoidance strategies? It depends … oh really, the classic IT answer … yes, it depends on how frequently deadlocks are expected and are occurring. Is the cost of the avoidance system, the overhead in terms of processing and other taxation worth more than dealing with deadlocks as they occur? Are we dealing with an operating system powering the nuclear arsenal program or passenger planes? If yes, we probably have examples where deadlock avoidance makes a lot of sense.

To avoid deadlocks we have to ensure that at least one of the four deadlock conditions mentioned in the beginning are not possible. For example, one strategy is to add the functionality to the operating system to be able to pre-empt a process, releasing the resources it holds to other waiting processes. Sounds easy, however, it means that we have to either pre-empt and kill a process, or be in a position to pre-empt the process, safe state and return it to a known state at a later stage. The latter is not possible with all resources, for example register resources allow this strategy (i.e. context switching), while a disk drive or printer would probably be a bad resource candidate for an easy and cost effective rollback.

Lets look at an example and see what is involved to determine if we are in a safe state and whether new resource requests should be honoured.

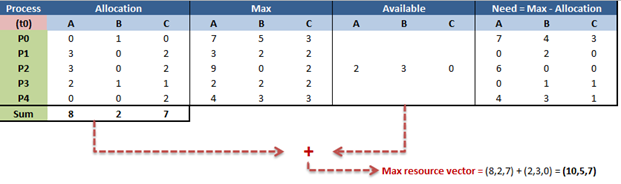

Example at snapshot in time (t0):

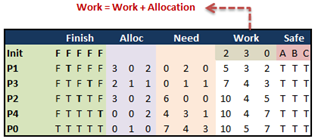

Using the Bankers Safety Algorithm we determine if we are in a safe state:

- Initialize

- Work = Available = (2,3,0)

- Finish = (false,false,false,false)

- Processes (starting from those needing the least)

- We end with a Finish array of (TRUE, TRUE, TRUE, TRUE, TRUE), which means that:

- One of the possible safe sequence is given by <P1, P3, P2, P4, P0>

- We are in a safe state.

- We end with a Finish array of (TRUE, TRUE, TRUE, TRUE, TRUE), which means that:

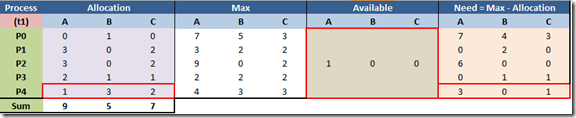

Example at snapshot (t1):

- P4 requests 1x A and 3x B resources.

- Request = (1,3,0) < Available = (2,3,0) … we can proceed

- Available = Available - Request = (1,0,0)

- Allocation = Allocation + Request = (0,0,2) + (1,3,0) = (1,3,2)

- Need = Need – Request = (4,3,1) - (1,3,0) = (3,0,1)

- Snapshot (t1) now looks like:

- Findings

- There is no process (P) such that Need < Available, i.e. < (1,0,0).

- Request cannot be granted safely.

- If we grant, we would be in an unsafe state.

Early Deadlock Detection

To detect a deadlock we need an algorithm that continuously, or on a predefined interval, examines the system state to determine if a deadlock has occurred, and as we will obviously detect deadlocks we also need a strategy to recover from detected deadlocks. The more we process the detection algorithm the more pr0-active we will be, however, we will also add considerable overhead to the system in terms of computation time.

To detect a deadlock we need an algorithm that continuously, or on a predefined interval, examines the system state to determine if a deadlock has occurred, and as we will obviously detect deadlocks we also need a strategy to recover from detected deadlocks. The more we process the detection algorithm the more pr0-active we will be, however, we will also add considerable overhead to the system in terms of computation time.

To recover from deadlocks we need a strategy based on methods such as aborting all deadlocked processes, aborting selected processes until the deadlock cycle is resolved and/or using resource pre-emption. This is a topic in itself and we will therefore postpone the exploration of which process(es) will be the victim, how pre-empted processes can roll back and avoiding starving processes … in other words waiting forever or beyond a reasonable period of time.

See you for the next UNISA chat soon.