UNISA Chatter – Operating System Concepts: Part 6 … Synchronization

See UNISA – Summary of 2010 Posts for a list of related UNISA posts. this post we briefly investigates scheduling, which is important when we want to operate in a multi-process and multi-thread backyard.

See UNISA – Summary of 2010 Posts for a list of related UNISA posts. this post we briefly investigates scheduling, which is important when we want to operate in a multi-process and multi-thread backyard.

If you have hungry boys with a black hole as a stomach, like we have at home, you will understand that “synchronization” is a very important concept at the dinner table, when there are two pizza boxes amongst three of more humanoids, one tomato sauce bottle for the table or the one and only Coca Cola bottle. Everyone seems to grab for the same thing at the same time, resulting in conflict, deadlocks and generally unhappy faces. So how does this relate to operating system concepts?

Synchronization challenges

In recent blog posts we discussed multi-processing and multi-threading, which means that similar to teenagers scrambling for the pizza, we have processes and threads scrambling for shared resources such as memory, shared data and devices.

Hera are two typical code challenges when dealing with concurrent logic…

Synchronization Support

Synchronization Support

Operating systems provide different locking mechanisms, including critical sections, hardware based synchronization, semaphores, mutexes and transactions. Let’s have a ‘quick’ look at these mechanisms.

Critical Sections

Critical sections are designed to support mutual exclusion to enforce that only one process can enter a critical section at any time, that the selection on which of a number of waiting processes can enter a critical section is not postponed indefinitely, and to enforce a bound, or limit, on the number of times a other processes are allowed to enter a critical section before a process that requester to enter the critical section is granted access.

The typical logic for critical sections is as follows:

…

{

--> Critical Entry Section

--> Critical Section

--> Critical Exit Section

}

…

The implementation of critical sections is operating system specific and you must ensure that you verify how it is implemented. In Windows, using Win32 for example, a critical section is implemented as follows and it is important to note that with Win32 a critical section is a thread synchronization mechanism, not a process synchronization mechanism. In other words, a critical section in Win32 only applies to a specific process. A ample code snippet is as follows:

static CRITICAL_SECTION cs;

InitializeCriticalSection(&cs);

void f()

{

EnterCriticalSection(&cs); /* thread-safe processing! */

… critical section …

LeaveCriticalSection(&cs);

}

Hardware

Critical sections can also be implemented by hardware instructions to implement atomic instructions, such as swapping, adding or subtracting values.

Semaphores

A semaphore can be of type binary (true or false) or of type counting, which implements a counter for a set of available resources, typically used to prevent race conditions.

In Win32 we typically create a semaphore as follows …

HANDLE hSemaphore = CreateSemaphore( NULL, 10, 10, NULL);

… then we wait for an available Semaphore.

DWORD dwWaitResult = WaitForSingleObject( hSemaphore, 0);

… then we release the Semaphore …

ReleaseSemaphore( hSemaphore, 1, NULL) )

Mutexes

In Windows a mutex is a synchronization object whose state is set to signaled when it is not owned by any thread, and nonsignaled when it is owned. It ensures that only one thread can own a mutex and can be used to protect resources such as shared memory. The implementation code is similar to the semaphore …

1. We create a mutex …

HANDLE hMutex = CreateMutex( NULL, FALSE, NULL);

2. … then we wait for a mutex …

DWORD dwWaitResult = WaitForSingleObject( hMutex, INFINITE);

3. … then we release the Mutex

ReleaseMutex ( hMutex );

Transactions

Transactions are a heavier duty synchronization weapon and implements a program unit that must be executed atomically, in other words everything succeeds or fails. A typical example is a transfer of funds from account A to account B, where we debit account A and credit account B. If the debit succeeds and the credit fails, you would be expecting that the debit is reversed … rolled back, especially if the money is coming off your account :)

Transaction are typically associated with a transaction write0-ahead log, which keeps track of what has been done. When the system crashed, the transaction log is used during the recovery to decide what is needed to restore the machine to the state it was before the crash, using undo and redo operations. Checkpoints are used to indicate in a log what has been committed to disk and need not be considered during a subsequent recovery. The more frequent we “checkpoint” the quicker the recovery, but the greater the impact on the runtime system.

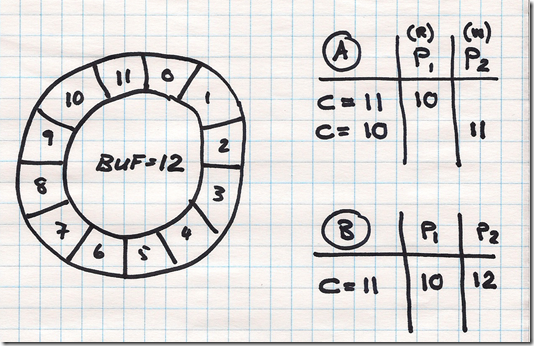

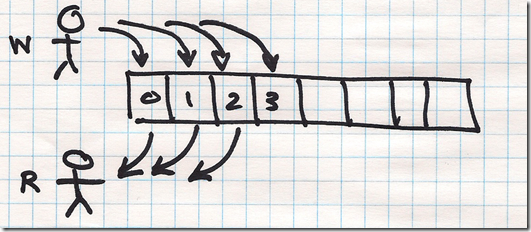

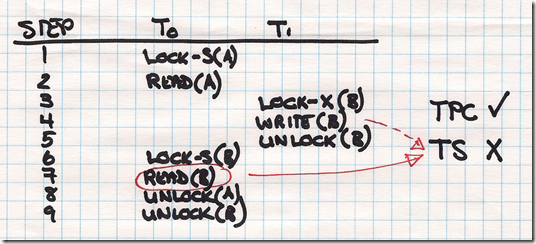

Transactions use a locking protocol using shared and exclusive locking. With the former allows us to read, but not write to locked data, while the latter allows us to write and read locked data. Another protocol uses timestamps, with write and read timestamps. In essence a write timestamp denotes the largest timestamp of any transaction that had a successful write and will fail any subsequent read of a transaction with an earlier timestamp.

In the next post in this series, we will be looking at the world of deadlocks … which will make the gibberish in this post more meaningful.

Comments

- Anonymous

December 04, 2011

What are the different locking mechanisms used by windows 7?