UNISA Chatter – Operating System Concepts: Part 4 … Threads

See UNISA – Summary of 2010 Posts for a list of related UNISA posts. In this post we briefly cover the world of threads within an operating system.

Threading brings back memories of excitement, but also of gloomy nights when we developed, debugged and then maintained multi-threaded services on the CTOS operating system platform and later on Windows. While multi-threading can be fun, debugging a multi-threaded program can be challenging and needs a pinch of caution before we create a maintenance monster.

In these posts I will not delve into whether multi-threading or multi-process programming is better, more efficient or the right way to go … you decide what works best for you and use that :) This specific topic has resulted in many, many discussions and is beyond the scope of these posts.

Overview

Threads belong to a process and each thread represents a flow of control, or execution path, within that process. A single-threaded process has one execution path, whereas a multi-threaded process has several different execution paths.
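To make this a little more concrete, here is a minimal sketch, assuming Python's standard `threading` module (the names `run_threads` and `worker` are made up for illustration). It starts a few threads inside one process: each thread is its own execution path, yet they all report the same process id and write into the same list.

```python
import os
import threading

def run_threads(count=3):
    """Start `count` threads in this process and collect what each one sees."""
    seen = []                  # lives in the process, visible to every thread
    lock = threading.Lock()    # protects the shared list

    def worker(name):
        # Each call runs on its own thread, i.e. its own execution path.
        with lock:
            seen.append((name, os.getpid()))

    threads = [
        threading.Thread(target=worker, args=(f"thread-{i}",))
        for i in range(count)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()               # wait until every execution path has finished
    return seen

for name, pid in sorted(run_threads()):
    print(name, "ran in process", pid)
```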

Why should we consider multi-threading?

- Better responsiveness to users

- Resource sharing within the process

- Economy … for those that are convinced that creating a thread is more efficient than creating a process

- Scalability

- … and to make maximum use of the new hardware platforms, such as multiple cores.

Some examples where multi-threading can add value include web servers, calculation applications that can process multiple calculations in parallel, and interactive user interfaces, such as Word, which can potentially allow you to type text while it checks syntax and grammar and prints your documents in the background.
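The word processor case can be sketched roughly as follows. This is a toy illustration only, assuming Python's `threading` module; `grammar_check` and `edit_document` are hypothetical names, and the "grammar check" merely looks for doubled words after an artificial delay.

```python
import threading
import time

def grammar_check(text, findings):
    """Hypothetical slow background job: flag doubled words."""
    time.sleep(0.1)                      # stand-in for slow work
    words = text.lower().split()
    for first, second in zip(words, words[1:]):
        if first == second:
            findings.append(first)

def edit_document(text):
    """Run the check in the background while the 'user interface' keeps going."""
    findings = []
    checker = threading.Thread(target=grammar_check, args=(text, findings))
    checker.start()                      # background work begins
    keystrokes = 0
    while checker.is_alive():            # the main thread is not blocked
        keystrokes += 1                  # pretend to handle a keystroke
        time.sleep(0.01)
    checker.join()
    return keystrokes, findings

keystrokes, findings = edit_document("we cover the the world of threads")
print("handled", keystrokes, "keystrokes; doubled words found:", findings)
```

The main thread keeps counting keystrokes for as long as the checker is busy, which is the responsiveness benefit from the list above in miniature.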

User | Kernel threading models

From an operating system perspective we have a number of thread models, made up of user threads, which are associated with a process and managed without kernel intervention or knowledge, and kernel threads which are supported and managed by the operating system. The models come into play as a relationship must exist between user and kernel threads.

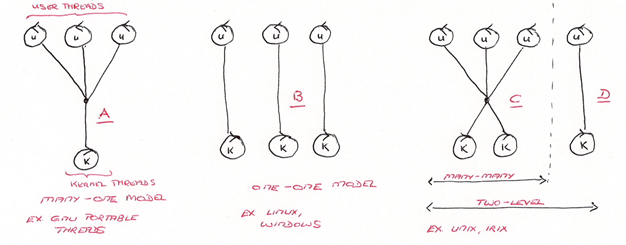

Some of the common models, shown in the illustration, are described below.

The many-one model maps many user threads to one kernel thread, managed by a thread library in user mode. If any thread makes a blocking system call in this model, all other threads are blocked.
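To see why one blocking call stalls everything in this model, here is a toy sketch, not how any real thread library is written. It assumes Python generators as stand-ins for user threads and a hypothetical round-robin scheduler, `run_many_to_one`, with everything running on a single kernel thread.

```python
import threading
import time

def user_thread(name, steps, log):
    """A toy user-level thread: does one step, then yields to the scheduler."""
    for step in range(steps):
        log.append((name, step, threading.get_ident()))
        yield                            # voluntary switch, no kernel involved

def blocking_user_thread(name, seconds, log):
    """A toy user-level thread that makes a blocking call."""
    time.sleep(seconds)                  # blocks the one kernel thread underneath
    log.append((name, 0, threading.get_ident()))
    yield

def run_many_to_one(tasks):
    """User-mode round-robin scheduler: all tasks share the calling kernel thread."""
    ready = list(tasks)
    while ready:
        task = ready.pop(0)
        try:
            next(task)
            ready.append(task)           # still has work, back of the queue
        except StopIteration:
            pass                         # task finished

log = []
start = time.monotonic()
run_many_to_one([
    user_thread("A", 2, log),
    blocking_user_thread("B", 0.2, log),
    user_thread("C", 2, log),
])
elapsed = time.monotonic() - start
print([name for name, _, _ in log], f"took {elapsed:.2f}s")
```

Thread C has nothing to wait for, yet it cannot run until B's sleep is over, because the scheduler itself is stuck on the same kernel thread.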

The one-one model maps one user thread to one kernel thread, providing better concurrency and support for multiple blocking system calls. The challenge of this model is the high number of kernel threads, which can be taxing on the overall operating system.
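As a rough illustration of the one-one behaviour, the sketch below assumes CPython 3.8 or later, where each `threading.Thread` is backed by a native operating system thread and `threading.get_native_id()` is available. The function name `run_one_to_one` is made up for this example.

```python
import threading
import time

def run_one_to_one(sleep_seconds=0.2):
    """Run one blocking thread next to two quick ones and record finish order."""
    finished = []                        # (name, kernel thread id) in finish order
    lock = threading.Lock()
    barrier = threading.Barrier(3)       # keep all three threads alive together

    def worker(name, seconds):
        barrier.wait()
        time.sleep(seconds)              # blocking call: only this thread waits
        with lock:
            finished.append((name, threading.get_native_id()))

    threads = [
        threading.Thread(target=worker, args=("blocker", sleep_seconds)),
        threading.Thread(target=worker, args=("quick-1", 0)),
        threading.Thread(target=worker, args=("quick-2", 0)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return finished

finished = run_one_to_one()
print("finish order:", [name for name, _ in finished])
print("distinct kernel threads:", len({tid for _, tid in finished}))
```

The two quick threads finish while the blocker is still asleep, and each thread reports its own kernel thread id.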

The many-many model maps, or rather multiplexes, many user mode threads onto a smaller or potentially equal number of kernel threads. The tax imposed by kernel threads is lower, and the advantage of supporting multiple blocking system calls is maintained.

The last model shown on the right is a hybrid, based on the many-many, but allowing user threads to directly hook to kernel threads. We therefore have a hybrid model of many-many and one-one, which is also referred to as the two-level model.

Scheduler

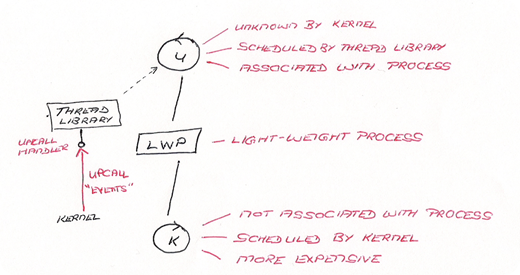

As with processes, we need communication between threads, especially between kernel and user mode threads. The illustration shows the lightweight process (LWP), on which a user thread can be scheduled to execute. The LWP appears as a virtual processor to the thread library and allows the kernel to inform the application about certain events through a mechanism known as an “upcall”. The thread library has handlers which process these events through magic which is, again, beyond the scope of this summary.

When you want to avoid excessive scheduling and complex multi-threading logic in your application, it is a good idea to investigate and make use of the thread pools supplied by application programming interfaces, such as Win32. The advantages are manifold: you avoid having to write the scheduling and especially the cleanup logic yourself, you get much better response times as the threads are already waiting when you need them and, more importantly, you share a fixed number of worker threads instead of creating more and more concurrent threads, which can potentially exhaust system resources.
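The same idea can be sketched with Python's `concurrent.futures.ThreadPoolExecutor`, used here as a stand-in for the Win32 thread pool mentioned above rather than as an equivalent of it. The names `handle_request` and `serve` are hypothetical.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

def handle_request(request_id):
    """Hypothetical unit of work; reports which pool thread served it."""
    return request_id, threading.current_thread().name

def serve(requests, workers=3):
    # The pool creates at most `workers` threads and reuses them for every request.
    with ThreadPoolExecutor(max_workers=workers, thread_name_prefix="pool") as pool:
        # Leaving the `with` block shuts the pool down and joins its threads.
        return list(pool.map(handle_request, requests))

served = serve(range(10))
for request_id, thread_name in served:
    print("request", request_id, "served by", thread_name)
print("threads used:", len({name for _, name in served}))
```

Ten requests are handled, but never by more than three threads, and there is no thread creation or cleanup code in the application itself.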

Challenges

The main challenges we find with multi-threading are synchronisation within the process and debugging a multi-threaded process, especially if we have threads going out of control.
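As a small taste of the synchronisation challenge, here is a minimal sketch, again assuming Python's `threading` module, with a hypothetical `Counter` class. Several threads update one shared value, and a lock makes sure the read-modify-write steps of different threads never interleave.

```python
import threading

class Counter:
    """A shared counter whose updates are serialised by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Only one thread at a time may read, add and write back.
        with self._lock:
            self.value += 1

def count_in_parallel(thread_count=4, increments=10_000):
    counter = Counter()

    def worker():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=worker) for _ in range(thread_count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value

print("final count:", count_in_parallel())
```

Without the lock, two threads could read the same old value and one of the updates would be lost. That kind of bug only shows up now and then, which is exactly what makes multi-threaded debugging such a challenge.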

Next on the menu is a species that lives in the same environment as Processes … “Scheduling”.

Acronyms Used

| I/O – Input / Output | LPC – Local Procedure Call | PCB – Process Control Block | RPC – Remote Procedure Call | WCF – Windows Communication Foundation |