ALM Rangers Ruck – Proposed innovations to the v1.2 guidance. Thoughts?

We introduced the concept of “loose Scrum” (also known as a maul or ruck in Rugby) in 2011 to streamline the ALM Rangers projects and continuously adjust and improve to maximise respect for people, optimise development flow and embrace the philosophy of kaizen (https://en.wikipedia.org/wiki/Kaizen).

Revised 2014-02-11.

remembering our constraints

Before we cover our observations and proposed innovations it is important that you peruse ALM Guidance: Visual Studio ALM Rangers — Reflections on Virtual Teams and Things you probably [did not] know about the Visual Studio ALM Rangers to understand our world, challenges and constraints. One of the self-inflicted (intentional) constraints is our 24x7 dog-fooding of VS Online and using features out-of-the-box.

We are in the process of wrapping up our last in-flight projects and preparing new teams to take off on new adventures. As part of kaizen we have made observations and are discussing possible innovations herein.

so, where’s the fire … what are my observations?

proposed innovations

Many of the proposed innovations are based on learnings from the Scaled Agile Framework® (SAFe™), which is an approach we are investigating as part of our Agile and Ruck environments.

#1 - normali[s|z]ed estimations

Simplify estimation effort, improve estimation accuracy and ensure estimates are done and supported by team.

See How do you normalise your velocity estimation for more information.

- Estimate assuming full-time availability of resources.

- Team estimates stories using story points, based on a developer/contributor and tester/reviewer pair per story.

- Team finds the smallest story that can be developed and validated in a full day, relating it to one story point.

- Team estimates the remaining stories, using the 1 story point story as a reference.

- Split up big stories into smaller stories that are less than or equal to the part-time sprint maximum.

- Visually indicate when a story/task is ready for testing/review. For example, the snippet shows a feature #9462, with a story #9463 that has been developed and tagged as ready for testing.

- A story is only complete when development and testing activities are completed, and the definition of done (DoD) is met.

2014-02-11 … dev + test is not a waterfall sequence. Unit tests and test cases can be created before or in parallel to development efforts.

- The tester/reviewer should be a motivation for the developer/contributor to avoid the last minute submission.
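The splitting rule above can be sketched as a small check. This is only an illustration: the function name `stories_to_split`, the story titles and the sprint maximum of 3 are hypothetical, and the card values are the 1, 2, 3, 5, 8, 13, 21 scale mentioned in the clarifications.

```python
# Story point cards used for estimation (the scale quoted in this post).
STORY_POINT_SCALE = (1, 2, 3, 5, 8, 13, 21)


def stories_to_split(estimates: dict[str, int], sprint_max: int) -> list[str]:
    """Return the titles of stories whose estimate exceeds the sprint maximum.

    `sprint_max` stands in for the "part-time sprint maximum"; its value is an
    assumption that each team would have to agree on for itself.
    """
    for title, points in estimates.items():
        if points not in STORY_POINT_SCALE:
            raise ValueError(f"{title!r}: {points} is not on the story point scale")
    return [title for title, points in estimates.items() if points > sprint_max]


# Hypothetical backlog: only the 5 point story is above an assumed maximum of 3.
backlog = {"Draft guidance": 1, "Build sample": 5, "Review sample": 3}
too_big = stories_to_split(backlog, sprint_max=3)
```

Here `too_big` holds the stories the team would revisit and break down before committing to the sprint.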

Clarification from discussions:

- As a bare minimum we will use the poll feature in Lync, or an online planning poker solution. The requirement is that at least 50% of the team is present and that the PO does no estimating.

… 1,2,3,5,8,13,21

- Story points have no direct correlation with time (hours) and usually we calculate:

~days = days per iteration * (backlog size / velocity)

However, as we have not been able to define an average velocity for Rangers, I propose we use the hybrid story point approach and associate our story point to a day, for example, which of these piles can I develop and test in a day? That’s 1SP and that defines the base of the estimations.

- A story with a SP of 1, 2, 3, 5, 8 or more could be doable in a day as well. It is all relative and based on the smallest pile of stuff the team identified as a SP=1.
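As a rough illustration, the velocity formula and the hybrid "1 SP is about one full day" idea could be sketched as follows. The function names and the `commitment` parameter are hypothetical, and the hybrid conversion treats points as linear in days, which is only an approximation since story points remain relative.

```python
def estimated_days(days_per_iteration: float, backlog_size: float, velocity: float) -> float:
    """Velocity-based estimate: ~days = days per iteration * (backlog size / velocity)."""
    if velocity <= 0:
        raise ValueError("velocity must be positive")
    return days_per_iteration * (backlog_size / velocity)


def hybrid_days(story_points: float, commitment: float = 1.0) -> float:
    """Hybrid estimate: 1 SP is the pile of work a pair can develop and test in a full day.

    `commitment` is an assumed fraction of a working day that a part-time
    contributor can give to the project (1.0 means full-time).
    """
    if not 0 < commitment <= 1:
        raise ValueError("commitment must be in the range (0, 1]")
    return story_points / commitment


# A 40 point backlog at a velocity of 20 points per 10-day iteration.
classic = estimated_days(days_per_iteration=10, backlog_size=40, velocity=20)

# A 3 point story for a contributor who can give an eighth of each working day.
part_time = hybrid_days(story_points=3, commitment=0.125)
```

The first call uses a measured velocity; the second is the fallback for teams that have no stable velocity yet and estimate against the one-day reference story instead.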

#2 - enforce a TRP sprint

Introduce pro-active sprint planning, reduce ambiguity and task management.

Each new project or subsequent release starts with a training-research-planning (TRP) sprint to gather the following objectives:

- Motivation which outlines why we are considering the project

- Vision which describes the objectives of the project

- Smart objectives (specific, measurable, achievable, realistic and time-based)

- Acceptance criteria which define what it takes for the product owner (PO) to sign off the project

- Definition of Done for the TRP sprint

The TRP sprint starts with a kick-off meeting, followed by research, training and finally a sprint planning meeting that plans the first construction sprint with a high level of confidence and subsequent sprints with best-case estimates.

#3 - enforce PSI time box

Ensure that the team has a realistic horizon in sight. Do less, better and ship what we have on demand.

In the context of Ranger solutions each release is time-boxed within one TRP sprint (see above), 1-3 development sprints and a QP sprint (see below). Each project team is divided into one or two feature teams, each with its own sprint plan, but all sharing the same cadence and project vision, objectives and acceptance criteria.
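The time box described above can be expressed as a simple sequence of sprints. This is a sketch only: `release_plan` and the sprint labels are hypothetical names chosen for illustration.

```python
def release_plan(development_sprints: int) -> list[str]:
    """Build the sprint sequence for one release: TRP, 1-3 development sprints, QP."""
    if not 1 <= development_sprints <= 3:
        raise ValueError("a release is time-boxed to 1-3 development sprints")
    dev = [f"DEV-{number}" for number in range(1, development_sprints + 1)]
    return ["TRP", *dev, "QP"]


# A release with two development sprints has four sprints in total.
plan = release_plan(2)
```

Rejecting a fourth development sprint up front mirrors the intent of the time box: a team that needs more time ships what it has and plans another release.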

Each feature team commits time at the end of the sprint to do a sprint retrospective and plan the next sprint. In addition each team delivers a 1-3min video which summarises the sprint deliverable and can be used as reference for new team members or stakeholders. This becomes invaluable evidence for the Ruck-of-Rucks! See https://sdrv.ms/La0W1P for an exceptional example of a sprint demo video.

Clarification from discussions:

- The idea is that we make it easy for a team to deliver evidence on their working solution and allow them to do some well-deserved PR.

- Essentially I see the following standard story for each sprint:

Standard: As a stakeholder I can view a sprint demo video so that I can get a quick overview of deliverables

#4 - enforce a QP sprint

Raise the quality bar across the board.

Scaled Agile Framework® (SAFe™) introduced a hardening-innovation-planning (HIP) sprint at the end of a potentially shippable increment (PSI).

We will use the opportunity to raise the overall quality bar and plan during this last sprint. The team focuses on eliminating any remaining debt, such as copy-editing, validates that all quality bars have been met and encourages the product owner (PO) to sign off. In addition the team uses the time to experiment with innovations for future releases and, if needed, to plan the next release.

#5 - agree on one innovation

Minimise waste of time due to meetings and encourage continuous improvement.

Instead of meeting to discuss what went well, what went not so well and how we can improve, we agree on at least one innovation for the next sprint. This becomes invaluable evidence for the Ruck-of-Rucks!

Clarification from discussions:

- The idea is that we continue to discuss and agree on what went well and badly if possible, but that each team must deliver one innovation for the next sprint.

- Essentially I see the following standard story for each sprint:

Standard: As a team we can define one innovation for the next sprint so that we can improve our project continuously

#6 - revise ruck chart

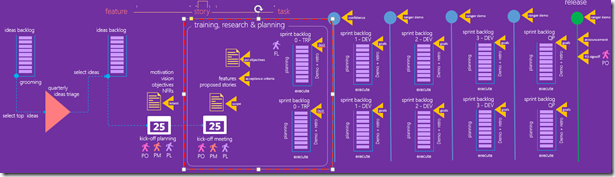

Create a consistent project plan and align with visualizations such as the Scaled Agile Framework.

Retire our v1.2 Ruck Cheat Sheet …

… and introduce a new poster / cheat sheet …

we need your thoughts and consensus

We need your thoughts and candid feedback, so that we can make decisions by consensus and start the dog-fooding of innovations with our next project adventures.

Add your comments below or contact us by email.

Comments

Anonymous

February 03, 2014

Section 1 - I am not following all of the math and logic. In my day job I encourage teams to avoid adding stories with points 13 or greater (this indicates the story is too large or has too many unknowns). I don't even want to see more than a single 8 point story in a sprint, but allowing an 8 recognizes that some tasks are just big and easier to tackle at that size than to break down into smaller stories. If a team fails to deliver on a committed item with 1 to even 8 points it tends not to be that big of a deal from a velocity perspective. The sweet spot for me is around 3 points - I like to see a bunch of 3 point stories in a sprint.

The problem I have with the scaling proposal as I understand it is we seem to be taking the smallest possible number in our story point scale, a 1, as a reference story, which does not allow us to estimate the story that might equate to a 1/2 day story. I would rather see the reference story equate to a 3. I also don't follow how 1 point is a full time dev/rev pair and 3 points is the maximum points the dev/rev pair can achieve in a month. That suggests each team member can commit 24 hours to a project in a month, about 6 hours per week. While I do see some rangers spending 5+ hours per week on work for the team, I also see a measurable number of team members (perhaps even most) spending much less time and often dropping off of the team altogether. I think we should target 2 - 3 hours per week per team member or 8 - 12 hours a month as a team member's capacity. Finally, should we be trying to tie points to hours?

Section 3 - I am lukewarm on the notion of having teams within the team. Normally I would want the entire team focused on the overall success of the sprint. More than one team could promote a focus on feature team deliverables which could lead to a successful feature delivery but an overall failure of the team.

Everything else looks good.

Anonymous

February 03, 2014

@Tim, I agree on the story points and for ALM Rangers I am looking at 3 being the "preferred" maximum and 5 being the "tolerated" maximum, viewed with suspicion. This is primarily due to us working part-time, which makes longer running tasks or "bigger piles of stuff" too risky. No need to worry about the maths, because it will be an evolving adventure ... I just tried to associate the time-less story points with a rough estimate of time within a Rangers context ... then again this will vary with each team.

Assumption 1: 1SP defines a pile of stuff that can be completed in a day.

Assumption 2: We have 260 working days per year, which works out to 21.67 working days per month or roughly 21.67 of the 1SP stories.

Assumption 3: A Ranger can commit 1/8th of a calendar month to Rangers projects, which works out to <1h/day or roughly 2.708 of 1SPs/month.

Now I need to emphasise that 2 or 3 SPs may also be doable in a day, but I am assuming they will take more.

Anonymous

February 03, 2014

I really like this =), can't wait to start using it

Anonymous

February 04, 2014

I think it looks good. The part I see as a potential challenge is the video review. While I like the idea behind it I'm not sure how well this will work in practice with our distributed teams. My initial reaction was that this practice is best suited for co-located teams, but I'd happily be proven wrong! :-)

Anonymous

February 05, 2014

@Tommy, the alternative to the video is to have a sprint review meeting, which includes the demo. While this is ideal, it has proven very challenging to schedule consistently. The idea of the demo video is that it can be done anytime towards the end of the sprint by one or more team members, reviewed by all in the team and dropped in a share for other teams to review. I would be interested to understand why you feel it is not suited for distributed teams?

Anonymous

February 10, 2014

Scheduling meetings can be a pain, but so can creating and editing a video. It is a very difficult thing to do, especially in a distributed collaborative fashion. What worked very well for us was using a wiki of sorts. A couple of screenshots and some commenting. Much more "collaborative" friendly, and frankly quicker than setting up a video IMO.

Anonymous

February 10, 2014

@Niel, when we talk about videos it is not asking for the quality delivered by RobertM :) Setting up a Lync session, running a review session on your own or preferably with a few colleagues from the team and hitting the record button results in an invaluable video.

Anonymous

February 10, 2014

@Osmar,

#1 - Pairing will hopefully improve collaboration and visibility into progress or lack thereof. It is up to the tester to raise an impediment if progress is lacking.

#2 - We are currently looking at using the Lync poll feature and the TFS Agile Poker application for the Rangers. Lync is a light-weight poll solution and should be well suited for Ranger teams which use Lync for collaboration.

#3 - If I compare the effort of producing a 2-3 min video using Camtasia, Lync or any other tool, with the effort of getting the entire distributed team together for a sprint review and demo meeting, I vote for the former. The responsibility of producing the video is with the team and there is always one in the team that knows and enjoys the "fun" of producing a demo video.

Anonymous

February 10, 2014

@Rangers, THANK YOU for the ongoing and candid feedback, which helps us better understand the challenges :) Keep the candid and invaluable feedback rolling in!

Anonymous

February 10, 2014

OK, well you did ask for candid feedback, and the quintessential New Yorker in me will come forth:

#1 Normalized Estimations: Not so familiar with the SAFe abridged estimation methods, but with care I can see this may be feasible. Although, admittedly I look at it as a ratio and proportion approach with some capping, so let me explain: I've been estimating activities while building out a backlog based on story points where everyone throws in a card. (Standard Planning Poker, which has been prevalent with story point estimation for years). I have a couple scrum decks I even use: one with Fibonacci numbers, one with “2 power values” (0,2,4,8,16,32,64), and a few other variations. I like the 2-power values because I can assign bitwise operators directly and figure out all possible combinations during estimation, and group only those - rather than take the highest/lowest estimations. That helps me substitute tasks when normalizing my backlogs for resourcing and such. Anyway, the point here is you could use a regular playing card deck, it really doesn't matter. The end result is an estimation that leaves you with the most accurate effort estimates. (See en.wikipedia.org/.../Software_development_effort_estimation ).

While the Wikipedia article on effort estimation lists only psychological factors, I’d say there are actually multiple factors that influence estimation, i.e. perceptions, knowledge, resource availability, etc., that may produce inaccurate estimates, in addition to the psychological factors. Without enough objective data to go with the subjective plans, effort estimates will always skew larger. So for me, I think understanding HOW the normalized estimations contribute to a more accurate, ALM Rangers project-context is the info I’m missing here.

So that brings me to #2, enforcement of a TRP sprint:

Anonymous

February 10, 2014

#4 & #5: Hardening-innovation-planning (HIP) sprint and the agreement on one innovation: The core principle I see to keep sight of here is to continue the Kaizen lifecycle (Plan, Do, Check and Act accordingly) as well as the Ruck principles at play. I see this as the perfect retrospective area, although nothing has been released during the HIP sprint, which again may have a negative impact on the time-to-market, and a residual effect of increased cost. Do you stage deployment at this point, and move final quality gate checks to this final sprint?

#6 Ruck Chart Updating will likely be a product of an intersecting union across all projects’ HIP innovations (i.e. the lessons learned and project vectoring). What epics can Rangers contribute to the v1.2 guidance as innovations, based on our thoughts here?

A big thank you to all for contemplating my feedback too.

Anonymous

February 11, 2014

... continued. #4/5 – We never really have the time to harden our solution, to learn from our mistakes and to innovate … hence the enforced HIP sprint. The intent is to ask the developers to sit on their hands or engage on another project, while we work on quality validation, reviews, bug resolutions and learning from our mistakes and properly planning the next version (PSI) or deferring to the next triage. It is intended to embrace Kaizen principles and to deliver the quality in our “less but higher quality” mantra. It will be up to our Ruck Masters to take the sprint innovations and learnings to the Ruck of Rucks, revising our suggested innovations and the Ruck Guide accordingly.

What innovations can a Ranger contribute to Ruck vNext? – Anything … this thread of comments will be giving the Ruck Masters a few sleepless nights and the feedback to discuss and revise the Ruck guidance. You can either add more thoughts to this thread, or contact BrianB and me directly. Hope I answered all your questions, if not “shout”.

Anonymous

February 13, 2014

Nice, I really liked the method.

Anonymous

February 13, 2014

@Tommy, good point. The responsibility for defining the sprint pre-requisites and delivering the sprint nuggets lies with the TEAM. They need to own the sprint, be passionate about the project and be motivated to deliver a working solution... if not, we have a "stop the bus" scenario. To ensure the team understands the current and suggested innovations we rely heavily on the Ruck Master (RM) who mentors the team. Not sure if you noticed, but the Ruck Master takes the sprint deliverables to the Ruck-of-Rucks gathering. RMs are therefore encouraged to motivate the TEAM to deliver the sprint deliverables :)

Anonymous

February 18, 2014

The point of improving estimation is to better know if we know what we need to do. Large story points or remaining work tells me you don't have a clear understanding of the work at hand. I like small since to me it means we either know what needs to be done or variance will be less impactful than with large. Getting improvements from retros (still prefer postmortem :-) ) is a challenge. If we can just get one improvement after each sprint (not necessarily from each team) then I would be happy knowing we are trying to get better. The use of video works well with our community since we are global. I would love to hear what other open source projects are doing in regard to Sprint Reviews.