How do we do SharePoint development, including TFS integration?

There’s a lot of information available about development processes, automated builds and continuous integration in SharePoint development using Team Foundation Server (TFS). Unfortunately most of it stays at a relatively high level or covers only concepts, and the details are missing. It’s also a fairly common misconception that setting up, for example, an automated build with TFS is difficult. It’s not.

The following chapters define the practices we use at MCS Finland during development and for setting up automated daily builds from TFS for enterprise projects. We will concentrate on the development process and on setting up the automated build process, but I’ll also give some additional context from the project management point of view.

This content applies to both SharePoint 2007 and SharePoint 2010 development. There are minor changes and improvements in SharePoint 2010 development, which are pointed out where relevant.

In this blog post, we cover the following topics:

- Development process

- Visual Studio solution configuration

- TFS side configurations

- Automated testing environment setup

Development process

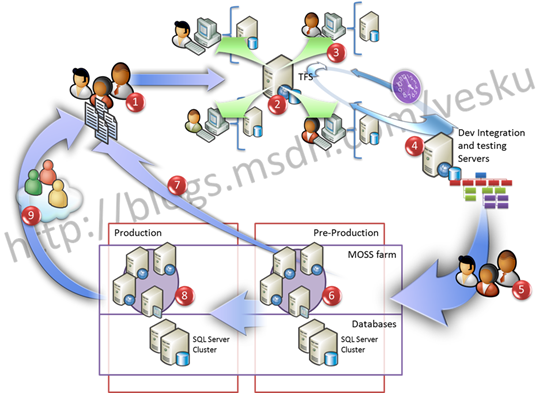

The picture above outlines at a high level the process we use for our enterprise projects. The actual details depend of course on the project size and the project team. The actual process or methodology also depends on the project, but it is always based on an agile approach with numerous iterations. Usually the methodology can be considered an adaptation of Scrum.

| # | Step | Description |
| --- | --- | --- |
| 1 | Requirements and tasks | Project requirements and tasks are collected from the project owners. |
| 2 | Collecting tasks to TFS | All tasks to be accomplished (including documentation) are entered as tasks in Team Foundation Server. Tasks are divided into iterations based on the initial plan of the project. |
| 3 | Virtualized development environments | All development environments are virtualized for easy management. A standardized base image has been created, which can be set up on a server in a matter of minutes. We commonly use a centralized box to host our virtualized environments, but this also depends on the project. Some consultants have 8 GB of memory on their laptops, which makes a laptop running Hyper-V a more appropriate and flexible hosting environment. |
| 4 | Integration and testing servers | Separate testing servers are part of the project setup. They are used to verify a delivery before it is installed in the customer environment. Automated builds are used and, depending on the project, automated testing is done using the process defined later in this blog post. |
| 5 | Evaluation of status | Daily builds are validated and, based on the status and the iteration schedule, new deployments are installed in the customer’s quality assurance environment. |
| 6 | Quality assurance testing | Customers can follow the progress of the project and give instant feedback if something needs to change. The release cycle to the customer environment depends on the project, but is approximately two weeks, to ensure a constant dialog about the functionality being developed. The quality assurance environment is also used as the acceptance testing environment when a production release is planned. |
| 7 | Change requests and feedback | Feedback and change requests from the quality assurance environment are tracked on the team site and entered into TFS, so that developers see the possible changes and new tasks. All incoming change requests are prioritized and scheduled into iterations based on discussions with the project owners. |
| 8 | Production installation | When the first release can be done, the QA environment is used for acceptance testing and the decision to move forward is made. |
| 9 | End user feedback | Since projects quite rarely end with the first production release, feedback and tasks are collected from the end users and processed into change requests and actual development tasks. |

The number of iterations and production releases obviously depends on the project.

Visual Studio Solution configuration

There are a few things to do at the Visual Studio solution level to enable automated builds if you use Visual Studio 2008 for development. By default Visual Studio 2008 does not understand the solution packaging concept, so we need to enable it by modifying the VS project file. By modifying the project settings, we can also improve our development experience.

In our case the standard VS2008 project structure usually looks something like the following. There are a few things to notice in the solution structure.

- Code is divided into multiple projects based on their usage models

  - ApplicationLogic – contains the business logic of the project, including all the actual code that manipulates list data etc. If patterns and practices models are used, services, repositories etc. are placed here as well.

  - Data – the project for the data objects, which are used to transfer information between the different solution layers. For easy manipulation in the UI layer, all data is transferred in data objects rather than as actual SharePoint objects. This also helps in unit testing.

  - FeatureReceivers – this project has the custom feature receiver code. The code is kept separate, since feature receivers have to be deployed to the GAC in every deployment scenario.

  - Resources – this project contains the 12 hive files

  - Solution.Assemblies – project to create the *.Assemblies.wsp solution package

  - Solution.Resources – project to create the *.Resources.wsp solution package

  - Web.UI – project containing all the code for web parts, web controls, http modules and code-behind files for application pages

- As you can see, there are actually two projects that generate solution packages: one for the resources (the files that go to the 12 hive) and one for the actual assemblies. This approach is controversial even in our team, but if the assemblies are separate from the resources, we can more easily and with less risk update just the actual code without touching the XML files on disk.

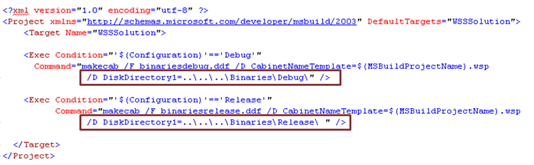

For the projects that output solution packages (wsp files), we’ve configured a new .targets file, which contains separate settings for the Debug and Release configurations. The WSSSolution target has been configured as the default target for these projects by modifying the project file.

Notice that the output folder of the assemblies has been configured as “..\..\..\Binaries\Release\” in the Release configuration and that the wsp package is placed in the same folder by the .targets configuration. This is the same relative path that the TFS automated build process uses to output assemblies before the whole folder is copied to the drop location. Since the wsp package is placed in this folder by default, it’s automatically copied to the drop location without any additional configuration. In other words, we already use the same relative path in the development environments, so we don’t need to change anything as part of the automated build process.
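To see why a shared relative path works, the following Python sketch resolves “..\..\..\Binaries\Release” from a project folder. The folder layout used here (the drive letters, the Contoso root folders and a Sources folder next to Binaries) is a hypothetical example and is not taken from our build server. Only the relative path itself comes from the project settings.

```python
import ntpath  # Windows path rules, usable on any operating system

# Relative output path from the Release configuration of the project.
OUTPUT_PATH = r"..\..\..\Binaries\Release"


def resolve_output(project_dir, output_path=OUTPUT_PATH):
    """Return the absolute folder that a project-relative output path points to."""
    return ntpath.normpath(ntpath.join(project_dir, output_path))


# Hypothetical layout: <root>\Sources\<solution folder>\<project folder>
build_server = resolve_output(r"C:\Build\Contoso\Sources\Contoso\Solution.Assemblies")
developer_box = resolve_output(r"D:\Projects\Contoso\Sources\Contoso\Solution.Assemblies")

print(build_server)   # C:\Build\Contoso\Binaries\Release
print(developer_box)  # D:\Projects\Contoso\Binaries\Release
```

As long as the project sits three levels below the folder that contains Binaries, the same setting points to the right place on a developer machine and on the build server.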

How to debug efficiently in VS2008? – This is quite a common question, since VS2008 has no native way to debug SharePoint customizations efficiently unless you’re using the VS extensions for WSS 3.0. Since the extensions were introduced quite late and did not at first fully support TFS integration, we never got used to utilizing them in our projects. This does not mean, however, that you shouldn't use them.

In our case, customers usually accept deployment of our assemblies to the GAC, so we don’t have to create CAS files for the deployments. This also makes debugging a little easier, since during development we can deploy our assemblies to the bin folder of the IIS application and start debugging without IIS recycles etc.

This means that we’ve set the output folder of the Visual Studio projects to the bin folder of the IIS application and configured the web application to fully trust the assemblies (<trust level="Full" originUrl="" /> in the web.config, which is not good practice if CAS files are used). To avoid unnecessary application pool recycles, we’ve also added the AllowPartiallyTrustedCallers attribute to the assemblies, so that they are loaded into the IIS process memory at runtime.

After these settings are in place, the only thing left to do is to attach to the IIS process (w3wp.exe). To make this as efficient as possible, we use a VS macro and shortcut keys to start the debug process. Basically this means that all we need to do to get the latest version to the development portal is to compile the code and press the shortcut in Visual Studio. After you request the page from your browser, the debugger gets attached automatically and you can start debugging.

What kind of third party tools do we use? – Actually none. Managing the solution manifest and the ddf file is something we do manually as part of the development process. Each person involved in the project is responsible for keeping the files in sync. Generally we aim to create stub versions of each control, web part and feature file in the first iterations of the project. This means that each feature file and template is already included in the package, even though it doesn’t do anything yet. Each web part or control also only writes out the purpose of the particular functionality, for example “This web part will provide the article comment functionality in later Iteration”. This way each iteration just increases the amount of functionality that works as designed in the technical architecture for customization document.

There are a lot of great third party tools available for the build process in VS2008, but we have decided not to use them. In the end manual updates do not take that long, and if the process is automated, there’s no way to control what actually gets packaged from the VS structure. With manual updates, if some features are not yet finalized for the solution package, we simply don’t add them to the manifest and ddf.

How about SharePoint 2010 environment with VS2010? – When you develop SharePoint 2010 projects using Visual Studio 2010, the solution package is by default compiled to the same output directory as the assemblies, so there’s no need for these additional configurations. The Visual Studio solution structure also looks quite different, since in VS2010 you place the code and the 14 hive elements in the same Visual Studio projects.

In SharePoint 2010 development, the common way to divide the files between Visual Studio projects is by functionality, not by file type. This means that, for example, the MySite functionality is compiled into a single solution package and the portal and collaboration customizations into other packages. This model gives you a way to test and version the functionalities independently.

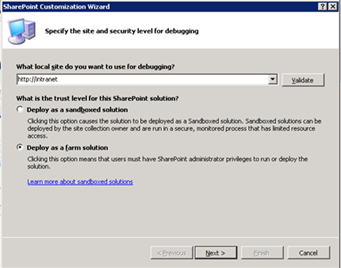

Debugging your customizations is extremely easy, since for all SharePoint customizations it happens simply by starting a debugging session directly from the Visual Studio IDE. As in the 2007 environment, debugging happens on the same computer where you develop your customizations. The target URL for debugging is defined during VS project creation or in the project properties.

How about SharePoint 2010 environment with VS2008? – This is definitely a valid option and quite easy to set up (it’s only a matter of references). If you want to migrate your customizations to SharePoint 2010 as fast as possible, this is most likely the temporary option you’ll use. In this case, you’ll have to modify the VS2008 projects as described above. However, since VS2010 improves developer productivity, we strongly suggest migrating your code to VS2010.

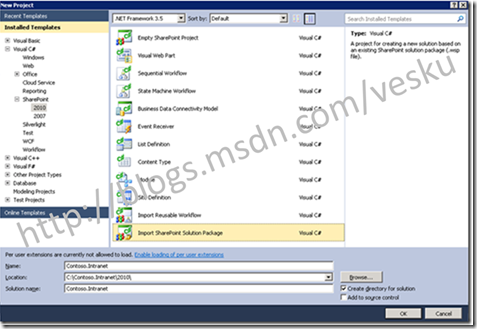

VS2010 supports importing existing solution packages into your Visual Studio solution. This way you can relatively easily migrate your VS2008 package to VS2010. After importing, you’ll need to reconfigure the packaging model, which will require refactoring, since the Visual Studio 2010 solution structure does not exactly mirror the 14 hive.

TFS side configurations

On the TFS side, all we need to do is create the build agent and the build definition for the project. This is a relatively trivial process: we are enabling the automated build using standard TFS side configurations. Since we’ve configured the solution package to be dropped to the same location as the compiled assemblies (dll files in either the Debug or the Release folder), we don’t actually need any additional steps or additional msbuild settings in the build project.

Some things to remember when you set up the build server:

- Ensure that you have the proper assemblies installed and/or available on the build server. The setup depends on the dependencies of your code.

- Ensure that the build service runs under a domain account that has access to the team project, so that the build service can get the latest version from source control

- Set up a share on the build server or on a staging server where the compiled builds are stored, including the solution package

- Remember to set up a proper cleanup process for the automated builds, since each build consumes disk space. This can be controlled using the retention policy of the build definition
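The retention policy does this cleanup for you, so no custom code is needed. Purely to illustrate the idea, here is a minimal Python sketch of a “keep the latest N builds” rule. The function name, the build names and the timestamps are hypothetical, and the sketch does not call any TFS API.

```python
def builds_to_delete(builds, keep=10):
    """Return the names of the builds outside the `keep` most recent ones.

    `builds` is a list of (name, finished_timestamp) tuples.
    """
    if keep < 0:
        raise ValueError("keep must not be negative")
    newest_first = sorted(builds, key=lambda build: build[1], reverse=True)
    return [name for name, _ in newest_first[keep:]]


# Hypothetical build folders with their completion times (in seconds).
builds = [
    ("autowspbuild_1", 100),
    ("autowspbuild_2", 200),
    ("autowspbuild_3", 300),
]
print(builds_to_delete(builds, keep=2))  # ['autowspbuild_1']
```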

Depending on your code and unit testing practices, you can also run unit tests as part of the build. Since some SharePoint dependent code can be difficult to mock, this might be difficult or require third party tools.

Automated testing environment setup

The TFS automated build only verifies that the code itself compiles, but there are still additional tasks to do to ensure that the code can be deployed and that the actual use cases work as expected. Especially for larger projects, we’ve created additional steps that imitate the customer installation of the customizations. This way, after each automated build we have an upgraded version of the portal similar to the one the customer would have. To automate this, we’ve added a few scripts to be executed.

This model also decreases the unproductive time the tester spends setting up the testing environment before the actual functionality can be tested. We can certainly test some of the functionality using automated testing, but for larger projects it has been fairly common to have a dedicated tester.

On one of our centralized Hyper-V servers, we’ve set up a scheduled batch file, which is executed after the automated build process.

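```bat
rem Settings: build drop share, build folder mask, target VM and snapshot to restore
set Folder=\\mcstfs\contosoautobuild
set FileMask=autowspbuild_*
set VMName=contosotest02
set SnapshotName="18.10.2009 latest win updates"
set LatestFile=

rem Folders are listed oldest first, so the last assignment is the latest build
for /f "delims=" %%a in ('dir /ad /b /o:d "%Folder%\%FileMask%"') do set LatestFile=%%a
if "%LatestFile%"=="" goto :eof
echo %LatestFile%

rem Restore the snapshot and start the VM
powershell -command .\startsnapshot.ps1 %VMName% %SnapshotName%

rem ping -n 240 sends one request per second, so this waits about 4 minutes
echo Sleep for about 4 minutes...
ping -n 240 localhost >nul

rem Copy the latest build to the VM and run the upgrade
applybuild %LatestFile% %VMName% contoso\moss_admin pwd
```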

As you can see from the batch file, we first resolve the latest build folder, then restore the snapshot and as the final step install the build on the virtualized environment.

We use PowerShell to restore the testing server to the same state as the production environment. This is done with the following PowerShell script, which restores the snapshot given as a parameter to the script.
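The step that finds the latest build is the part of the batch file that is easiest to get wrong, so here is the same logic as a minimal Python sketch. It is only an illustration and not part of our scripts: the function name is hypothetical, and it assumes that the newest build is the matching folder with the latest modification time, which is what dir /o:d sorts by.

```python
import fnmatch
import os


def latest_build(folder, mask="autowspbuild_*"):
    """Return the name of the most recently modified subfolder matching mask.

    Returns None when no subfolder matches, like the empty LatestFile check.
    """
    names = [
        name for name in os.listdir(folder)
        if fnmatch.fnmatch(name, mask)
        and os.path.isdir(os.path.join(folder, name))
    ]
    if not names:
        return None
    return max(names, key=lambda name: os.path.getmtime(os.path.join(folder, name)))
```

A scheduled script could call latest_build on the drop share and skip the snapshot restore completely when it returns None.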

```powershell
# Parameters: VM name and (part of) the name of the snapshot to restore
$VMname = $args[0]
$SnapshotName = $args[1]

$VMManagementService = Get-WmiObject -Namespace root\virtualization -Class Msvm_VirtualSystemManagementService
$SourceVm = Get-WmiObject -Namespace root\virtualization -Query "Select * From Msvm_ComputerSystem Where ElementName='$VMname'"
$Snapshots = Get-WmiObject -Namespace root\virtualization -Query "Associators Of {$SourceVm} Where AssocClass=Msvm_ElementSettingData ResultClass=Msvm_VirtualSystemSettingData"

# Pick the snapshot whose name matches the given name
#Write $Snapshots.Length
foreach($Snapshot in $Snapshots)
{
    # $Snapshot.ElementName
    if($Snapshot.ElementName -match $SnapshotName)
    {
        #Write $Snapshot.ElementName
        $TheSnapshot = $Snapshot
    }
}

Write "Applying the snapshot..."
$result = $VMManagementService.ApplyVirtualSystemSnapshot($SourceVm, $TheSnapshot)
# ProcessWMIJob is not defined in this listing; presumably a helper that waits for the WMI job to finish
ProcessWMIJob($result)

Write "Starting the VM..."
# Start the VM (2 = Enabled)
$result = $SourceVM.RequestStateChange(2)
```

When this script has been executed, the virtualized environment is back online and the defined snapshot has been restored. The next step is to apply the actual build to the restored VM. For this the following script is used.

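```bat
rem parameters: buildname, vmmachinename, username, password

rem Copy the build from the TFS drop share to the drop share on the testing server
xcopy /y /s \\mcstfs\autobuild\%1 \\%2\drop\%1\

rem Run the project specific upgrade script on the testing server
c:\pstools\psexec.exe \\%2 -u %3 -p %4 -w c:\drop\%1\release c:\windows\system32\cmd.exe /c c:\drop\%1\release\upgrade.bat https://%2 dev V12 V5
```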

As you can see from the script, we copy the solution package from the TFS autobuild server to a share on the testing server (which was just started). After that we execute the upgrade script on the testing server. It contains the project dependent steps to upgrade the existing portal with the new version of the customizations.

By using these relatively simple scripts we now have a clean and updated environment for testing every single day, with all the latest customizations. This model obviously decreases the overall project costs, since we don’t need to spend time setting up the environments manually.

How to further improve the process?

After the testing environment has been set up, we can improve the process even further. Common improvements would be to add web testing and load testing to this scenario. Due to the extensive support for scripting, we can automate these and include them in the daily process. This would mean that after the testing environment has been set up, we start automated web tests for use case validation and performance tests to ensure that new code has not dramatically decreased performance.

Web testing is again a relatively trivial task with Visual Studio, and it provides a quick way to ensure that basic functionality still works as expected.

Performance testing uses the recorded web testing scripts and imitates a larger load. Even though the development testing environment does not use the same hardware as production, we can make estimates and assumptions based on baseline tests.

Baseline testing means that we have run tests in the customer environment and in the testing environment using the same customizations. If a new version decreases the test environment performance by 20%, we can assume that the result would most likely be the same in production.
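As a worked example of this reasoning, the following Python sketch scales a production baseline by the relative change measured in the testing environment. The function name and all the numbers are made up for illustration, and the metric is assumed to be an average response time in seconds, so a higher value means worse performance.

```python
def estimate_production(prod_baseline, test_baseline, test_current):
    """Scale the production baseline by the change seen in the test environment."""
    if test_baseline <= 0:
        raise ValueError("test_baseline must be positive")
    change = test_current / test_baseline  # for example 1.2 means 20% slower
    return prod_baseline * change


# Baselines measured with the same customizations (hypothetical values).
prod_baseline = 2.0  # seconds per page in the customer environment
test_baseline = 3.0  # seconds per page in the testing environment
test_current = 3.6   # new build: 20% slower in the testing environment

estimate = estimate_production(prod_baseline, test_baseline, test_current)
print(round(estimate, 2))  # 2.4
```

This is only a rough estimate, since the hardware differs between the environments, but it is enough to flag a build for a closer look.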

Improvements in SharePoint 2010 for the process

As mentioned earlier in this post, when you develop customizations for SharePoint 2010 with Visual Studio 2010, there’s native support for compiling solution packages to the output folder of the Visual Studio project, so there’s no need for any msbuild script changes.

Another huge improvement is the native support for upgrade and versioning in the feature framework. This helps in upgrading existing portals and managing the versioning of the customizations. Traditionally in SharePoint 2007, this has been done with custom feature receivers, which modify the existing content. In SharePoint 2010, we have much more powerful tools to manage the portal lifecycle and to update existing content. The logging mechanisms have also been dramatically improved to help with troubleshooting customizations if a deployment fails or something unexpected happens.

We’ll release a lot of new information about SharePoint 2010 development in the upcoming months, so stay tuned.

Summary

Setting up a standardized development process might seem like a large task, but once the environments and processes have been defined, it frees a lot of resources for actual, efficient project work. The process we use for our enterprise projects (30-500-xxxx man day projects) is constantly evolving, and it is improved and changed based on experiences from the projects.

The following people have also contributed to our standardized process: Jaakko Haakana, Juhani Lith, Janne Mattila, Tom Wik and Jukka Ylimutka.

Hopefully this was useful.

Comments

Anonymous

March 31, 2015

Vesa, good post. Is there an update to this based on stewardship of the PnP team? I imagine you’re deploying to various Azure VMs during the build process.

Anonymous

March 31, 2015

Hi Dan, this one is indeed for server side code and farm solutions. App model development is completely different, and typically if the apps are targeted to Office 365, we do not use any Azure VMs but rather Azure PaaS services with a few different tenants.

- Dev tenant - Developer specific

- Integration tenant - Team integration

- Production - actual usage

This slightly depends on the app and development details. Technically if an app is isolated and inside of a site collection, you can do all the needed development in isolated developer site collections... but again... it all comes down to the details of the business requirements and the overall setup. We have more details on the PnP side for this one in the queue and would suggest that all questions, feedback and ideas be provided using the PnP Yammer group at aka.ms/OfficeDevPnPYammer