Three Cheers for the Authoring Resource Kit Tools! Part III – The Cookdown Analyzer

For the third and final post in the Authoring Resource Kit Tools series we introduce the Cookdown Analyzer. Before a discussion of the analyzer makes sense, a brief look at the cookdown process itself is in order.

In my previous two posts I discussed the Workflow Analyzer - available here - and the Workflow Simulator - available here. Both of those tools are useful across a broad spectrum of scenarios. The Cookdown Analyzer is specifically focused on workflows that use scripts and target classes with multiple instances. A good example of this would be targeting the logical disk class, where a single system may have multiple disk drives that need to be analyzed. Classes like this are potentially tricky even for non-script monitoring because the way you normally think about targeting a monitor or rule may not be appropriate for a class containing multiple instances. If you don’t target these classes correctly you can end up with monitoring that constantly flips between healthy and unhealthy - causing churn, inaccurate results and overhead. Kevin has a good blog post discussing how to properly target in such scenarios available here. When you add a script into the mix it is very easy to break cookdown. OK, let’s stop here – so what the heck is cookdown?

In OpsMgr, cookdown is a process that specifically applies to scripts targeted at classes with multiple instances. Think of a system with 10 hard disks. If you want to target a script to monitor these disks you would likely end up running one instance of the script per hard disk on the system – or 10 instances of the script executing at once - just to get the job done. Script execution is the most resource-intensive way to run a workflow, so when we have to use scripts we want to ensure that we run as few instances as possible. Cookdown helps optimize scripts in these scenarios. When the workflow is written properly, cookdown allows the script (the data source for the workflow) to execute only one time (not one time per disk, one time total) and supply the resulting data to all 10 workflows. Yes, there will still be one workflow per disk, but only one execution of the script feeds information to all 10 workflows. With 10 disks that is one script run per interval instead of 10, and the savings grow with the number of instances. I’ll avoid further discussion of cookdown itself as it is a topic beyond the scope of this blog post, but if you find yourself writing scripts that target classes with multiple instances, cookdown is something you definitely want to understand and to use!
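To make the idea concrete, here is a rough Python sketch of the sharing behavior described above. It is only a toy model and not how the OpsMgr agent is actually implemented: the function `run_with_cookdown`, the stand-in script `fake_disk_script`, and the parameter names `IntervalSeconds` and `DeviceID` are all made up for illustration. The assumption it encodes is simply that workflows whose data source configuration is identical can share one script execution.

```python
from collections import defaultdict


def run_with_cookdown(workflows, script):
    """Run `script` once per distinct data source configuration.

    workflows: list of (workflow_name, config) pairs, where config is a dict
    of the already-resolved parameters handed to the data source.
    Returns (results_by_workflow, number_of_script_executions).
    """
    # Group workflows by their exact configuration.
    groups = defaultdict(list)
    for name, config in workflows:
        key = tuple(sorted(config.items()))
        groups[key].append(name)

    results = {}
    executions = 0
    for key, names in groups.items():
        output = script(dict(key))  # one execution per distinct configuration
        executions += 1
        for name in names:
            results[name] = output  # every workflow in the group shares it
    return results, executions


def fake_disk_script(config):
    # Hypothetical stand-in for the real monitoring script.
    return {"C:": 4200, "D:": 150}


# Ten workflows (one per disk) with identical configuration.
shared = [(f"FreeSpace-disk{i}", {"IntervalSeconds": 900}) for i in range(10)]

# The same ten workflows, but each one passes its own disk to the script.
per_disk = [
    (f"FreeSpace-disk{i}", {"IntervalSeconds": 900, "DeviceID": f"disk{i}"})
    for i in range(10)
]

_, shared_runs = run_with_cookdown(shared, fake_disk_script)
_, per_disk_runs = run_with_cookdown(per_disk, fake_disk_script)
print(shared_runs, per_disk_runs)
```

In this model the first set of workflows needs a single script execution while the second needs ten, which is the difference cookdown is meant to capture.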

That said, let’s take a look at the cookdown analyzer tool. This tool is available in the authoring console and runs against the management pack as a whole, with the ability to exclude classes with just a few instances. Classes that only have a single instance are excluded automatically. We will look at the logical disk free space monitor from the Windows 2008 management pack and compare it against a customized version created to address some specific monitoring needs.

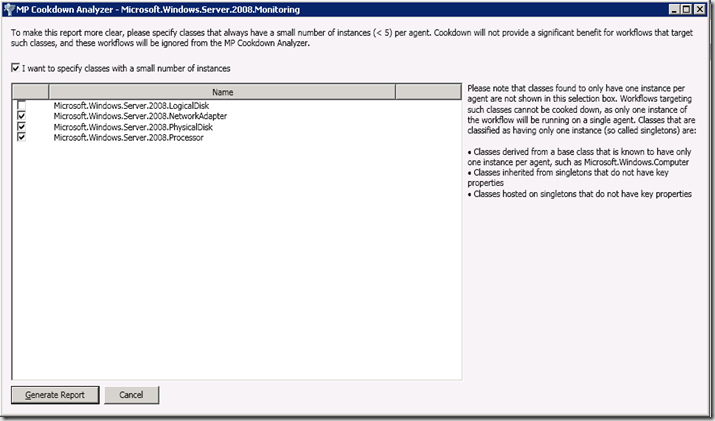

To get started, load up the Windows 2008 monitoring management pack in the authoring console and from the tools menu, select perform cookdown analysis. This will bring up the screen below.

Note the text on the right that discusses single-instance classes being excluded and also the ability to choose which multi-instance classes to include in the analysis. For our sample analysis I’m only interested in the logical disk class so I exclude all the others. To start the analysis select generate report and you will see the report below.

I’m particularly interested in the logical disk free space monitor since that is the one I’ve customized, so I scroll down the report to find that monitor and see the results – shown below.

OK, well and good – the default logical disk free space monitor passes the cookdown analysis without problem (I would hope so, it is one that we built after all!)

But what about the custom monitor that was built to modify logical disk free space monitoring? Before I show the analysis of that monitor – a brief comment. If you need to customize a default rule/monitor/discovery, the guidance is to override the default monitor to disable it and then recreate that monitor in a custom management pack. OK, a bit painful but not that big of a deal... at least for most situations. The logical disk free space monitor is one of the exceptions because this particular monitor cannot be built in the OpsMgr UI – and also cannot be built using the authoring console. Instead, this monitor is built directly in XML – so truly replicating the monitor and all of its moving parts requires XML diving. XML diving actually isn’t that bad – if you want to understand how all of the pieces of XML fit together, take a look at my very detailed (took a LOT of work) blog post available here. OK, on with the analysis.

In the customized example, the author didn’t want to mess with the XML so did the work as best possible in the UI – and that’s cool as long as the end result functions as needed. The result works but fails cookdown analysis as shown. Note that some identifying information has been omitted from the screenshot – but nothing relevant to the analysis.

Why does the customized version fail cookdown while the default does not? We see similar variables being used for each. We do note that different modules are listed as part of the workflow, but that’s not surprising since the custom example was built in the UI rather than directly in XML.

The key problem here is the text in red (you can get more detail by clicking on the red or green text). If you compare the first and second examples you see that the same value of $Target/Property/Type….$ is used in each but the positioning is different. OK Steve – BRILLIANT analysis! So what’s causing this to be red? One of THE KEY requirements to avoid breaking cookdown is to NOT pass any specific instance into the script being executed. Rather, let the script handle discovering the different instances internally as part of its processing. I can hear you asking – isn’t this a second discovery? Kind of, but not really. Doing it this way allows a single run of the script to operate against all instances of interest (in this case, disk drives). So, if we look at the properties of the monitor and, specifically, the parameters being passed to the script, we see that the specific device ID (disk drive) is being passed to the script explicitly – causing cookdown to break.
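The rule above can be illustrated with a small Python sketch. To be clear, this is not the analyzer’s actual logic, and the `$DeviceID$` and `$ComputerName$` placeholders are a simplified, hypothetical stand-in for the real `$Target/...$` substitution syntax. The helper names `resolve` and `cooks_down` are mine. The sketch assumes that the script arguments must resolve to exactly the same values for every instance on the system if the script is going to be shared.

```python
def resolve(template, instance):
    """Replace each $Name$ placeholder with that instance's property value."""
    resolved = template
    for prop, value in instance.items():
        resolved = resolved.replace(f"${prop}$", str(value))
    return resolved


def cooks_down(arg_templates, instances):
    """Return True if every instance resolves to the same argument list."""
    distinct = {
        tuple(resolve(template, inst) for template in arg_templates)
        for inst in instances
    }
    return len(distinct) <= 1


# Two disks on the same (hypothetical) server.
disks = [
    {"DeviceID": "C:", "ComputerName": "srv01"},
    {"DeviceID": "D:", "ComputerName": "srv01"},
]

# Passing the device ID makes the arguments differ per disk.
print(cooks_down(["$ComputerName$", "$DeviceID$"], disks))  # False

# Passing only values shared by every disk keeps them identical.
print(cooks_down(["$ComputerName$"], disks))  # True
```

The first call corresponds to the customized monitor, where the device ID is passed explicitly; the second corresponds to the arguments after the instance-specific value is removed.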

If we simply remove the argument and adjust the script internally to handle the disk drive instances, the problems with cookdown go away. Note that the screenshot below was taken after removing the offending argument – the script was not adjusted.
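What “adjust the script internally” might look like can be sketched in Python as well. This is an assumption-laden illustration, not the script from the monitor discussed here: `collect_all_disks`, `select_for_instance`, `evaluate`, the `FreeMB` field and the threshold value are all hypothetical. The idea is that one script run returns a data item for every disk, and each per-disk workflow then picks out only the item that matches its own device ID.

```python
def collect_all_disks(inventory):
    """Single script run: return one data item per disk on the system.

    `inventory` is a hypothetical mapping of device ID to free space in MB,
    standing in for whatever the real script would query.
    """
    return [{"DeviceID": dev, "FreeMB": free} for dev, free in inventory.items()]


def select_for_instance(items, device_id):
    """Per-workflow filter: keep only the item for this workflow's disk."""
    return [item for item in items if item["DeviceID"] == device_id]


def evaluate(items, device_id, threshold_mb):
    """Decide the health state for one disk from the shared script output."""
    matches = select_for_instance(items, device_id)
    if not matches:
        return "Unknown"
    return "Healthy" if matches[0]["FreeMB"] >= threshold_mb else "Unhealthy"


# One execution of the script feeds every per-disk workflow.
shared_output = collect_all_disks({"C:": 4200, "D:": 150})

for device in ("C:", "D:"):
    print(device, evaluate(shared_output, device, threshold_mb=500))
```

The instance-specific value is still used, but only after the script has run, so it no longer affects how many times the script executes.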

There you have it – the cookdown analyzer is really useful in specific scenarios – and it’s a good idea to run it against your custom MPs – just to be sure.

To wrap up, let me state again - this blog was not intended to be a deep dive into the internals of cookdown. Rather, it is a demonstration of using the cookdown analyzer to find cookdown problems in custom management packs and of using that information to fix them. Left alone, these issues can cause notable overhead and churn in an environment – particularly one with a large instance space.