Creating Better User Experiences with Microsoft Cognitive Services

For decades, computer scientists have been captivated by the potential of artificial intelligence to create human-like experiences with computers. Although we have not reached true human intelligence, we have seen great advances in vision, speech, language understanding and knowledge recall that will produce better human-computer experiences. Several factors made these advances possible:

- Larger data sets give us more data to train our models, which improves precision when we compare a new piece of data (e.g. pictures, text) against our base.

- More powerful computers and the seemingly unlimited computing capacity of the cloud support more computationally expensive data processing.

- Increased focus across industry and academia is resulting in better collaboration and new advances.

This work started with investments in speech recognition and natural language processing (NLP) for a variety of uses. Microsoft demonstrated this technology 15 years ago at CES and has continued to drive these innovations into products ever since.

Having computers mimic human behavior at scale has augmented our lives in useful ways. Automated translation services can convert one language into another almost instantaneously, and Skype Translator has demonstrated doing this in real time. Language understanding in email, for example, can determine from what I wrote that I forgot to add an attachment and recommend that I add it before sending. Email has become easier to manage with spam-detection algorithms that do a good job of determining which emails are spam and which are not. If only there were a way for me to get fewer emails overall. :) Search engines and online stores have long used machine learning to make recommendations that help us make better decisions. Credit card companies have used it to detect potentially fraudulent transactions.

Opportunities for making computers mimic human behavior in product experiences go beyond the examples above. My team and I spend a lot of time meeting with partners (both small and large) and we consistently hear a similar story. The partner has a great opportunity to better serve their customers using a rich set of data that they have, but they don’t have the resources to do so. Today there are a few options available to them:

- Implement their own solution, which is a poor fit when resources and expertise are limited.

- Use a pre-built community library that implements the algorithms but still requires you to understand them and do all of the additional work. Some popular libraries include OpenNLP for text analysis, OpenCV for computer vision and Mahout for machine learning.

- Use tools from large organizations, like the Computational Network Toolkit (CNTK) from Microsoft Research, TensorFlow from Google and the open-sourced modules for Torch from Facebook’s AI Research team.

All of these options work, but they require expertise and resources to get started. To help developers build apps and services that detect, interpret and gain insights in human-like ways without requiring a dedicated team of AI experts, we have now put the pieces together in Microsoft Cognitive Services (it is part of Cortana Intelligence Suite and is in preview). With just a few lines of code, developers can benefit from over 20 years of intelligence research that has been used in Microsoft products. Since it is a service, you don’t have to deploy it, update it, or train the models (although some services give you that option). With Cognitive Services you have access to vision, speech, language, knowledge and search APIs.

Using the Computer Vision APIs

To bring this to life, I will show you how easy it is to get started with Cognitive Services by using the Computer Vision APIs. It is estimated that over 2 trillion photos will be taken this year, and classifying these images can be difficult. There is also the problem that very few people are great photographers, which results in images where the interesting area of focus is hidden by a lot of extra “background.” Creating a proof of concept that captions and crops an image with the Computer Vision API is straightforward.

You can call the APIs directly or use the client library. Since this is a proof of concept, I started from the sample code that is available on GitHub. The sample already supports a lot of scenarios, but I tweaked it to create a new scenario that merges analyzing and cropping an image. After some refactoring to do the image analysis and cropping together, and after updating the XAML to display the results correctly, it was done.
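For readers who want to try the "call the APIs directly" route, here is a minimal Python sketch of what an analysis request might look like. It is not the GitHub sample (which is a XAML app). The endpoint value is a placeholder, and the `/analyze` path, the `visualFeatures=Description` parameter, the `Ocp-Apim-Subscription-Key` header and the `description.captions` response shape are assumptions based on the REST API as documented for the preview, so check the current reference before relying on them. The function names are my own.

```python
import json
import urllib.parse
import urllib.request

# Placeholder (assumption): use the endpoint shown for your own subscription.
ENDPOINT = "https://YOUR-ENDPOINT.example.com/vision/v1.0"


def build_analyze_request(image_url, key, endpoint=ENDPOINT):
    """Build, but do not send, a request asking the service to describe an image."""
    query = urllib.parse.urlencode({"visualFeatures": "Description"})
    body = json.dumps({"url": image_url}).encode("utf-8")
    return urllib.request.Request(
        f"{endpoint}/analyze?{query}",
        data=body,
        headers={
            "Content-Type": "application/json",
            # Assumed header name for the subscription key.
            "Ocp-Apim-Subscription-Key": key,
        },
        method="POST",
    )


def best_caption(analysis):
    """Return the highest-confidence caption text, or None if there are no captions."""
    captions = analysis.get("description", {}).get("captions", [])
    if not captions:
        return None
    return max(captions, key=lambda c: c.get("confidence", 0.0))["text"]
```

To actually send it, pass the request to `urllib.request.urlopen`, decode the response with `json.load`, and hand the resulting dictionary to `best_caption`.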

The analysis of the image returns some great details, but it is also really interesting to see how well the images were cropped. In my code I opted for two layouts, wide and tall. In both cases the most visually interesting parts of the image were selected within the dimensions I provided. In your own applications, you can make better use of images by finding their most interesting parts without requiring human intervention.
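The wide and tall crops can be sketched as two thumbnail requests that differ only in their dimensions. This is an illustrative Python sketch, not the code from my sample: the pixel sizes are made up, and the `/generateThumbnail` path and the `width`, `height` and `smartCropping` parameters are assumptions based on the preview REST documentation.

```python
import urllib.parse

# Hypothetical layout sizes in pixels; pick whatever your UI needs.
LAYOUTS = {"wide": (640, 360), "tall": (360, 640)}


def thumbnail_url(endpoint, width, height, smart_cropping=True):
    """Build the URL for one cropped thumbnail of the given size."""
    query = urllib.parse.urlencode({
        "width": width,
        "height": height,
        # Assumed flag that asks the service to keep the region of interest.
        "smartCropping": "true" if smart_cropping else "false",
    })
    return f"{endpoint}/generateThumbnail?{query}"


def layout_urls(endpoint, layouts=LAYOUTS):
    """Return one thumbnail URL per named layout."""
    return {name: thumbnail_url(endpoint, w, h) for name, (w, h) in layouts.items()}
```

Each URL would then be POSTed with the same subscription-key header and image body as the analysis call, and the response would be the cropped image bytes.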

It was fun testing this out on a variety of images and seeing the richness of the data that was returned. I was able to do this by just registering for the preview, downloading the sample code and making a few tweaks.

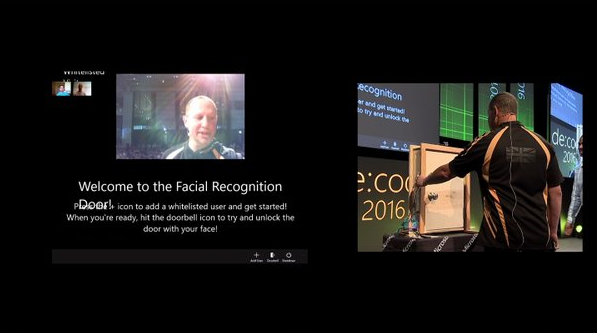

When Cognitive Services are combined with devices and sensors, we can create new experiences with the physical objects we interact with. For example, during my de:code keynote in Tokyo, Japan, we showed the Facial Recognition Door. It started as a Microsoft Garage project, and it uses Cognitive Services to detect faces, determine who is approved to unlock the door, and then unlock the door if the person is authorized. The door runs Windows 10 IoT, so the app on the MinnowBoard MAX can call the Face APIs and do the comparisons there.
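The "who is approved" step can be sketched as a small decision function. This is not the Garage project's code. It assumes the app has already called a Face identification API and received, for each detected face, a list of candidates with a `personId` and a `confidence`. That shape, the allow-list, the function name and the 0.7 threshold are all assumptions for illustration.

```python
def authorized_person(identify_results, approved_ids, min_confidence=0.7):
    """Return the approved personId with the highest confidence, or None.

    identify_results: list of {"faceId": ..., "candidates": [{"personId", "confidence"}]}
    approved_ids: set of personIds allowed to unlock the door (hypothetical allow-list)
    """
    best_id, best_conf = None, min_confidence
    for face in identify_results:
        for candidate in face.get("candidates", []):
            is_approved = candidate["personId"] in approved_ids
            if is_approved and candidate["confidence"] >= best_conf:
                best_id, best_conf = candidate["personId"], candidate["confidence"]
    return best_id
```

The door would unlock only when this returns a person, so an unknown face or a low-confidence match leaves it locked.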

What’s Next

Since Build, interest in Cognitive Services has been growing quickly. When a technology breaks out, it is always important to remember the scenarios that it enables. As a platform company, we can help developers create new experiences by making our technology investments available in an easily consumable way. Look out for more articles on advances in technology and how we can help you create the future that you envision.

Cheers,

Guggs

Comments

- Anonymous

May 28, 2016

Everyone loves what you guys tend to be up to. This sort of clever work and exposure! Keep up the awesome work, guys. I've added you guys to our blogroll.

- Anonymous

June 12, 2016

Where can I get a good trial on how to have a better experience with cognitive user experience?

- Anonymous

October 12, 2016

Hi Thabitha - you can go to https://www.microsoft.com/cognitive-services/en-us/sign-up and sign up for cognitive experiences.