OS & Framework Patching with Docker Containers - a paradigm shift

When we think of containers, we think of the easy packaging of our app along with its dependencies. The Dockerfile FROM instruction defines the base image used, with the app's contents copied or restored into the image.

We now have a generic package format that can be deployed onto multiple hosts. No longer must the host know which version of the stack is used, nor even the version of the operating system. And we can deploy any configuration we need, unique to our container.

Patching workloads

In the VM world, we patch workloads by patching the host VM. The developer hands the app over to operations, along with the list of dependencies that must be applied to the VM, and ops deploys it. Ops then takes ownership, hoping the patching of the OS & Frameworks doesn't negatively impact the running workloads. How often do you think ops does a test run of a patch on a VM workload before deploying it? It's not an easy thing to do, nor an expectation, in the VM world. We basically patch, and hope we don't get a call that something no longer works...

The workflow involves post-deployment management. Projects like Spinnaker and Terraform are making great strides toward automating the VM build process in an immutable infrastructure model. However, are VMs the equivalent of the transition from VHS to DVDs?

Are containers simply a better mousetrap?

In our busy lives, we tend to look for quick fixes. We can allocate 30 seconds of dedicated, open-ended thought before we latch onto an idea and want to run with it. We see a pattern, figure it's a better drop-in replacement and boom, we're off to applying this new technique.

When recordable DVD players became popular, they were mostly a drop-in replacement for VHS tapes. They were a better format with better quality and no need to rewind, but the workflow was generally the same. Did DVDs become a drop-in replacement for the VHS workflow? Do you remember scheduling a DVD recording, which required setting the clock that was often blinking 12:00am from the last power outage, or was off by an hour because someone forgot how to set it after daylight saving time?

At the same time the DVD format was becoming prominent, streaming media became a thing. Although DVDs were a better medium than VHS tapes, you could only watch them if you had the physical media. DVRs and streaming media became the primary adopted solution. Not only were they a better quality format, but they solved the usability problem of integrating with the cable provider's schedule. With OnDemand, Netflix and other video streaming, the entire concept of watching videos changed. I could now watch from my set-top box, my laptop in the bedroom, the hotel, or my phone.

The switch to the DVD format is an example of a better mousetrap that didn't go far enough to solve the larger problem. There were better options that solved a broader set of problems, but adopting them was a paradigm shift.

Base image updates

While you could apply a patch to a running container, this falls into the category of "just because you can, doesn't mean you should". In the container world, we get OS & Framework patches through base image updates. Our base images have stable tags that define a relatively stable version. For instance, the microsoft/aspnetcore image has the tags :1.0, :1.1, and :2.0. The differences between 1.0, 1.1, and 2.0 represent functional and capability changes. Moving between these tags implies there may be a difference in functionality, as well as expanded capabilities. We wouldn't blindly change a tag between these versions and deploy the app into production without some level of validation. Looking at the tags, we see the image was last updated some hours or days ago, even though the 2.0 version initially shipped months prior.
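To make the idea of a stable tag concrete, here is a minimal sketch in Python. It models a registry as an in-memory dictionary, so the `TagStore` class, its `push` method, and the digest strings are all hypothetical and not part of any Docker or registry API. It only illustrates that a tag such as `2.0` can keep its name while the content behind it is replaced by a patched build.

```python
from dataclasses import dataclass, field


@dataclass
class TagStore:
    """Toy in-memory registry: maps 'repo:tag' to the digest it currently points at."""
    tags: dict = field(default_factory=dict)
    history: dict = field(default_factory=dict)

    def push(self, repo: str, tag: str, digest: str) -> bool:
        """Point repo:tag at digest. Returns True if an existing tag moved to new content."""
        ref = f"{repo}:{tag}"
        previous = self.tags.get(ref)
        self.tags[ref] = digest
        self.history.setdefault(ref, []).append(digest)
        return previous is not None and previous != digest


store = TagStore()
# Initial release of the 2.0 image (digest values are made up for illustration).
first_push_moved = store.push("microsoft/aspnetcore", "2.0", "sha256:aaa")
# Later, the same tag is pushed again with OS & Framework patches applied.
patched_push_moved = store.push("microsoft/aspnetcore", "2.0", "sha256:bbb")
```

An app that builds `FROM microsoft/aspnetcore:2.0` would pick up the second digest on its next rebuild without any change to its Dockerfile.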

To maintain a stable base image, the owners of these base images keep them current with the latest OS & Framework patches. They continually monitor the images they build on and update their own images when those change. The aspnetcore image updates based on the dotnet image. The dotnet image updates based on the Linux and Windows base images. The owners of the Windows and Linux base images update their images in turn, testing them against the dependencies they know of before releasing.
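The chain described above can be sketched as a small dependency graph. This is an illustrative Python model only: the image names in `BASE_OF` (including `example/linux-base:stable` and the `contoso/...` apps) are assumptions chosen for the example, and each image is assumed to have a single base. Given one updated image, it lists everything downstream that would need a rebuild, nearest first.

```python
from collections import deque

# Hypothetical mapping: each image -> the base image named in its FROM line.
BASE_OF = {
    "microsoft/dotnet:2.0": "example/linux-base:stable",
    "microsoft/aspnetcore:2.0": "microsoft/dotnet:2.0",
    "contoso/web:latest": "microsoft/aspnetcore:2.0",
    "contoso/worker:latest": "microsoft/dotnet:2.0",
}


def rebuild_order(updated: str, base_of: dict) -> list:
    """Return every image that transitively builds on `updated`, nearest first."""
    # Invert the mapping so we can walk from a base image to its children.
    children = {}
    for image, base in base_of.items():
        children.setdefault(base, []).append(image)

    order, queue = [], deque([updated])
    while queue:
        current = queue.popleft()
        for child in sorted(children.get(current, [])):
            order.append(child)
            queue.append(child)
    return order


affected = rebuild_order("example/linux-base:stable", BASE_OF)
```

A patch to the OS base image ripples through the framework images and ends at the application images, which is why the app owner can receive the patch simply by rebuilding.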

Windows, Linux, Dotnet, Java, Node all provide patches for their published images. The paradigm shift here is providing updated base images with the patches already applied. How can we take advantage of this paradigm shift?

Patching containers in the build pipeline

In the container workflow, we continually build and deploy containers. Once a container is deployed, the presumption is it's never touched. The deployment is immutable. To make a change, we rebuild the container. We can then test the container before it's deployed. The container might be tested individually, or in concert with several other containers. Once there's a level of comfort, the containers are deployed, or scheduled for deployment using an orchestrator. Rebuilding each time and testing before deployment is a change. But it's a change that enables new capabilities, such as pre-validating that the change will function as expected.

Container workflow primitives

To deliver a holistic solution to OS & Framework patching, there are several primitives. One model would provide a closed-loop solution where every scenario is known. A more popular approach involves providing primitives that can be stitched together. If a component needs to be swapped out, for whatever reason, the user isn't blocked.

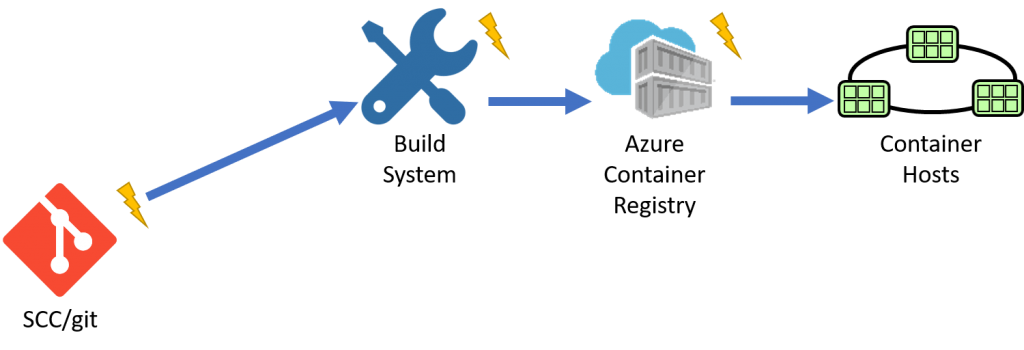

If we follow the source code control (SCC) build workflow, we can see the SCC system notifies the build system. When the build system completes, it pushes to a private registry. When the registry receives updates, it can trigger a deployment, or a release management system can sit in between, deciding when to release.

The primitives here are:

- Source that provides notifications

- Build system that builds an image

- Registry that stores the results

The only thing missing is a source for base images that can provide notifications.
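The three primitives listed above can be sketched as small objects wired together with callbacks. This is a toy Python model under stated assumptions: the class names `SourceControl`, `BuildSystem`, and `ImageRegistry`, and the repo and commit values, are hypothetical and do not correspond to any real CI/CD or registry API. The point is that each piece only knows how to notify the next one, so any piece can be swapped out.

```python
class ImageRegistry:
    """Stores pushed images and notifies subscribers (e.g. a deployment step)."""

    def __init__(self):
        self.images = []
        self.subscribers = []

    def push(self, image):
        self.images.append(image)
        for notify in self.subscribers:
            notify(image)


class BuildSystem:
    """Builds an image for each notification and pushes the result to a registry."""

    def __init__(self, registry):
        self.registry = registry
        self.build_count = 0

    def on_commit(self, repo, commit_id):
        self.build_count += 1
        # A real build would run `docker build`; here we only name the result.
        self.registry.push(f"{repo}:{commit_id}")


class SourceControl:
    """Source that provides notifications when code is committed."""

    def __init__(self):
        self.subscribers = []

    def commit(self, repo, commit_id):
        for notify in self.subscribers:
            notify(repo, commit_id)


deployed = []                       # stand-in for a deployment or release system
registry = ImageRegistry()
registry.subscribers.append(deployed.append)
builder = BuildSystem(registry)
source = SourceControl()
source.subscribers.append(builder.on_commit)

source.commit("contoso/web", "abc123")
```

In this sketch a commit is the only event that can start a build, which is exactly the gap: nothing yet plays the role of `SourceControl` for base images.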

Azure Container Builder

Over the last year, we've been talking with customers, exploring the problem area of how to handle OS & Framework patching. How can we enable a solution that fits within current workflows? Do we really want to enable patching running containers? Or, can we pick up the existing workflows, or the evolving workflows, for containerized builds?

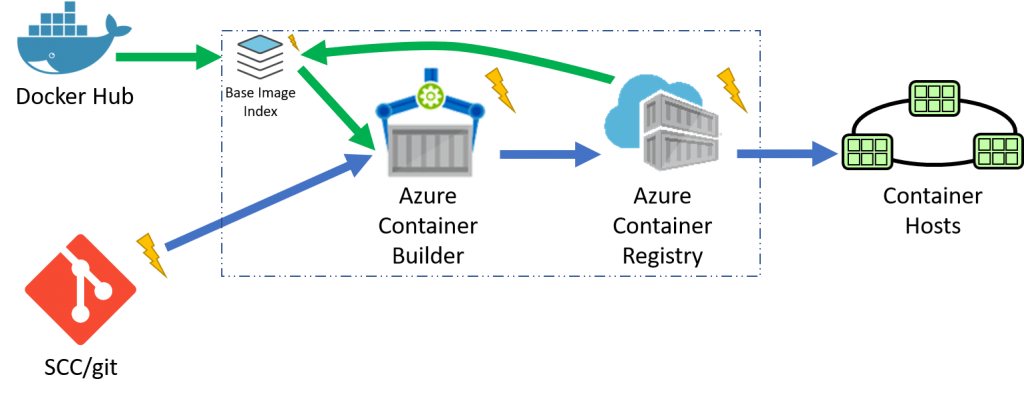

Just as SCC systems provide notifications, the Azure Container Builder will trigger a build based on a base image update. But where will the notifications come from? We need a means to know when base images are updated. The missing piece here is the base image cache. Or, more specifically, an index of updated image tags. With a cache of public Docker Hub images, and any ACR-based images your container builder has access to, we have the primitives to trigger an automated build. When code changes are committed, a container build will be triggered. When an update to the base image specified in the Dockerfile is made, a container build will be triggered.
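As a rough illustration of the base image trigger, the Python sketch below reads the FROM instructions out of a Dockerfile and compares each base image against an index of current digests. It is not how the Azure Container Builder is implemented: `base_images`, `should_rebuild`, the `index` and `built_with` dictionaries, and the digest values are all assumptions made for the example. The parser is deliberately simple, so it does not resolve `ARG` substitutions or distinguish a multi-stage alias from a real image name.

```python
def base_images(dockerfile_text: str) -> list:
    """Return the image references named in FROM instructions, in order."""
    images = []
    for line in dockerfile_text.splitlines():
        parts = line.strip().split()
        if len(parts) >= 2 and parts[0].upper() == "FROM":
            # Skip option flags such as --platform=... that may precede the image.
            args = [p for p in parts[1:] if not p.startswith("--")]
            if args:
                images.append(args[0])
    return images


def should_rebuild(dockerfile_text: str, built_with: dict, index: dict) -> bool:
    """True if any base image now resolves to a different digest than the last build used."""
    return any(
        index.get(image) != built_with.get(image)
        for image in base_images(dockerfile_text)
    )


DOCKERFILE = """\
FROM microsoft/aspnetcore:2.0
WORKDIR /app
COPY . .
ENTRYPOINT ["dotnet", "web.dll"]
"""

# Digest recorded at the last build, and the digest the index reports now (both made up).
built_with = {"microsoft/aspnetcore:2.0": "sha256:aaa"}
index = {"microsoft/aspnetcore:2.0": "sha256:bbb"}

needs_build = should_rebuild(DOCKERFILE, built_with, index)
```

The same check could feed an existing CI/CD system instead of a dedicated builder, since all it produces is a yes/no signal per Dockerfile.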

The additional primitive for base image update notifications is one of the key aspects of the Azure Container Builder. You may choose to use these notifications with your existing CI/CD system. Or, you may choose to use the new Azure Container Builder that can be associated with your Azure Container Registry. All notifications will be available through the Azure Event Grid, providing a common way to communicate asynchronous events across Azure.

Giving it a try...

We're still finalizing the initial public release of the Azure Container Builder. We were going to wait until we had this working in public form. But the more I read posts from our internal Microsoft teams, our field, Microsoft Regional Directors, and customers looking for a solution, the more I realized it's better to get something out for feedback. We've seen the great work the Google Container Builder team has done, and the work AWS CodeBuild has started. Any cloud provider that offers containerized workflows will need private registries to keep their images network-close. We believe containerized builds should be treated the same way.

- What do you think?

- Will this fit your needs for OS & Framework patching?

- How will your ops teams feel about delegating the OS & Framework patching to the development team's workflow?

Steve

Comments

- Anonymous

December 21, 2017

I would like to see the ability to patch a layer of a container image for security patches and the like. This would mimic the current patch strategy many companies use. An "updated" image would still need to be deployed, but Kubernetes makes that easy enough.

The problem with triggering a new build with every patch from a base image is that we have audit and approval workflows around apps that are SOX significant that require work items to start the work and approvals from the various teams along the pipeline. Changing this would require changing the mindset of the auditors and that is a difficult conversation because, auditors...

- Anonymous

February 06, 2018

Hi Rob,

Various compliance requirements are something we are tracking. That said, this is a paradigm shift. Not just for developers, but ops as well. You make a good point that we need to be aware of additional auditors that will need to "buy in" to this paradigm shift. We will have an audit trail to know if an image was updated due to an SCC commit, a base image update, or just a manually triggered build, and by whom. If you know the code your team owns hasn't changed, and that the build/test/deploy (pending) was instead due to a base image update, and you could see the underlying image that caused the update, would that satisfy your auditors? In reality, any patch applied to a VM today could include just about anything. How do your auditors feel about these changes? If you'd like to share your views directly, please reach out and we can schedule a call.

Thanks, Steve
