Troubleshooting an Availability Group Listener in Azure SQL VMs

Last week, I ran into a puzzling problem while creating an Availability Group listener in Azure. We followed "Configure one or more Always On Availability Group Listeners - Resource Manager" and found that the listener did not work as expected. Let me walk you through what we experienced.

For demonstration purposes, this post uses two Azure VMs, SQL1 and SQL2, as the Availability Group replicas, with a listener named AG1.

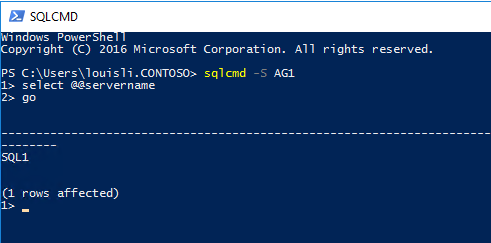

After setting up listener AG1 behind an ILB (Internal Load Balancer) using the steps in the article above, we have an Availability Group configured in Azure. SQL1 is currently the primary replica, and connecting from SQL1 to AG1 succeeds:

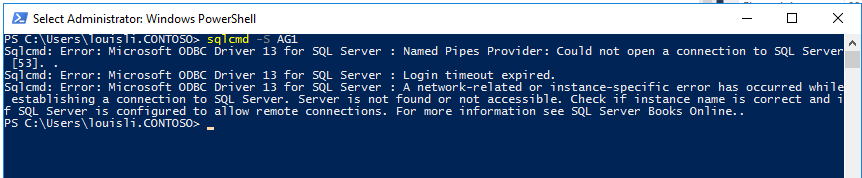

However, after failing over to SQL2 (SQL2 is now the primary), connecting from SQL1 to AG1 fails.

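Interestingly, connecting from SQL2 to AG1 succeeds. Another test showed that while SQL1 is the primary, connecting from SQL2 to AG1 fails as well. In other words, the listener is reachable only from the node that currently hosts the primary replica.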

Further tests showed that direct connections to the SQL Server instances (for example, SQL2 to SQL1 and SQL1 to SQL2) all succeed.

At this point, it appears that the listener is not functioning as expected. Below is what we tried; we are sharing it in the hope that it saves some troubleshooting time for anyone who runs into a similar issue.

Steps:

- Check ILB properties:
  - Front End port (normally the SQL Server instance port, 1433)
  - Back End port (normally also 1433)
  - Back End pool: SQL1 and SQL2
  - Probe port: a port that is not used by any application on either VM. This port number is used later as a cluster parameter.

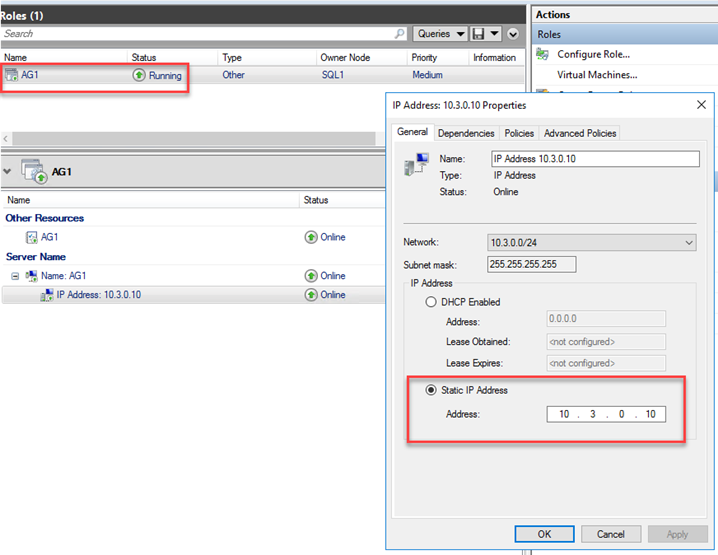

In this screenshot, the Front End IP is 10.3.0.10, which will be the AG listener IP. The Front End port is 1433, the default port for a default SQL Server instance, and so is the Back End port. The health probe port is 59999, which is unused on both VMs.

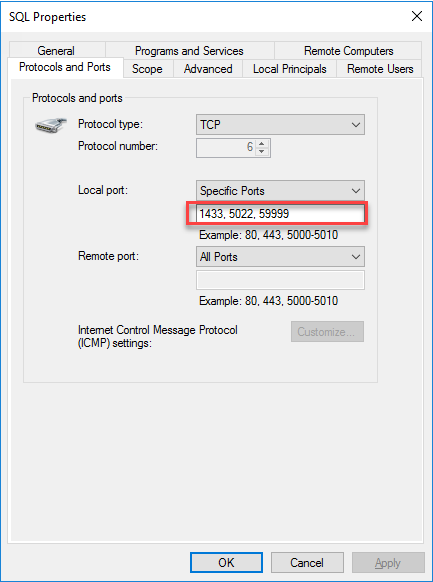

- Verify firewall settings on both VMs.

Three ports need to be open: the SQL Server connection port (1433), the Availability Group endpoint port (5022), and the health probe port (59999). The firewall setup on both VMs should be similar to my configuration.
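If you want a quick scripted check in addition to reviewing the firewall rules, the sketch below tries a plain TCP connection to each of the three ports from the other node. It is a minimal illustration, not a replacement for the firewall review: the function name `check_tcp_ports` and the port labels are hypothetical, and a `False` result cannot tell a firewall drop apart from a port nobody is listening on.

```python
import socket

# Ports used in this walkthrough: SQL Server (1433), AG endpoint (5022),
# ILB health probe (59999). Adjust if your instance or probe uses others.
DEFAULT_PORTS = {"sql": 1433, "ag_endpoint": 5022, "probe": 59999}


def check_tcp_ports(host, ports=None, timeout=2.0):
    """Try a TCP connection to each port on `host`.

    Returns {name: True} when the TCP handshake completes and {name: False}
    when the connection is refused, times out, or the host name cannot be resolved.
    """
    ports = DEFAULT_PORTS if ports is None else ports
    results = {}
    for name, port in ports.items():
        try:
            # create_connection resolves the host and completes the TCP handshake.
            with socket.create_connection((host, port), timeout=timeout):
                results[name] = True
        except OSError:  # covers refused connections, timeouts and DNS errors
            results[name] = False
    return results
```

For example, run `check_tcp_ports("SQL2")` on SQL1 and the reverse on SQL2. Keep in mind that the probe port only answers on the node that owns the listener's IP resource (the primary), so a `False` for `"probe"` against the passive node is expected.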

- Verify cluster parameters:

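Verify that the cluster is healthy, all resources are online, and the IP resource is using the ILB IP.

We also need to confirm that the probe port configured on the cluster (the ProbePort parameter of the listener's IP resource) is the same port as the ILB probe port. We can check this with `Get-ClusterResource | Get-ClusterParameter`.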
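The comparison between the portal and the cluster can be written down as a small consistency check. This is a sketch under assumptions: the `IlbSettings` class and `listener_mismatches` function are hypothetical, and `cluster_params` stands in for the `Address` and `ProbePort` values you would read from `Get-ClusterParameter` for the listener's IP resource (collecting them is not shown).

```python
from dataclasses import dataclass


@dataclass
class IlbSettings:
    """ILB values as checked in the portal (hypothetical container)."""
    frontend_ip: str
    frontend_port: int
    backend_port: int
    probe_port: int


def listener_mismatches(ilb, cluster_params, other_ports=(5022,)):
    """Compare ILB settings with the listener IP resource's cluster parameters.

    `cluster_params` maps parameter names such as "Address" and "ProbePort"
    to their values. Returns a list of problems; an empty list means no mismatch.
    """
    problems = []
    address = cluster_params.get("Address")
    if address != ilb.frontend_ip:
        problems.append(f"cluster Address {address} != ILB front end IP {ilb.frontend_ip}")
    probe = cluster_params.get("ProbePort")
    if probe is None or int(probe) != ilb.probe_port:
        problems.append(f"cluster ProbePort {probe} != ILB probe port {ilb.probe_port}")
    # The probe port must not be a port that SQL Server or the AG endpoint already uses.
    in_use = {ilb.frontend_port, ilb.backend_port, *other_ports}
    if ilb.probe_port in in_use:
        problems.append(f"probe port {ilb.probe_port} collides with a port already in use")
    return problems
```

With the values from this walkthrough, `IlbSettings("10.3.0.10", 1433, 1433, 59999)` and `{"Address": "10.3.0.10", "ProbePort": 59999}` produce an empty list, which matches what we saw: the configuration itself was correct.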

All of the checks above confirm whether the ILB listener has been configured properly. In my case, all of these settings were correct, and yet remote connections to the listener still failed.

Based on the symptoms observed, the SQL Server instances are functioning properly, since we can connect to them directly. Failover also works, and the cluster is healthy. This evidence rules out the VMs and WSFC as the root cause.

- Verify the probe port on the VMs:

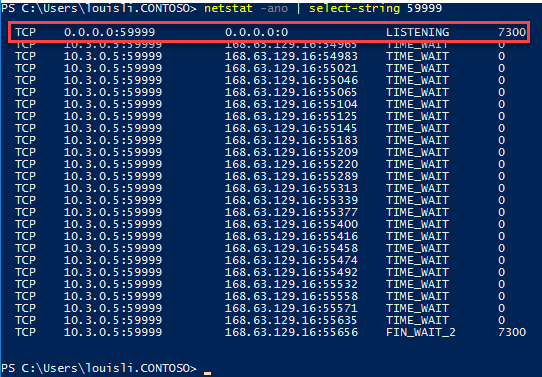

We decided to investigate further on the VM side, starting by verifying that the probe port is being listened on by the active cluster node (the primary replica).

We then confirmed which process is listening on probe port 59999.
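On Windows, both questions can be answered from `netstat -ano`: find the LISTENING entry for port 59999 and note its PID, then look the PID up with `tasklist /FI "PID eq <pid>"`. The sketch below only automates the first part by filtering captured `netstat -ano` text. It assumes the English column layout (Proto, Local Address, Foreign Address, State, PID); the function name `listening_pids` is hypothetical.

```python
def listening_pids(netstat_output, port):
    """Return the PIDs in LISTENING state on the given TCP port.

    Expects text from Windows `netstat -ano` with English state names:
    Proto  Local Address  Foreign Address  State  PID
    """
    pids = set()
    for line in netstat_output.splitlines():
        fields = line.split()
        # Skip headers, UDP rows (no State column) and non-listening TCP rows.
        if len(fields) != 5 or fields[0] != "TCP" or fields[3] != "LISTENING":
            continue
        # The local port follows the last colon, which also handles "[::]:59999".
        local_port = fields[1].rsplit(":", 1)[-1]
        if local_port == str(port):
            pids.add(int(fields[4]))
    return sorted(pids)
```

Run it on the primary and the passive node: in a working setup only the primary should report a listener on the probe port.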

- Monitoring ping messages:

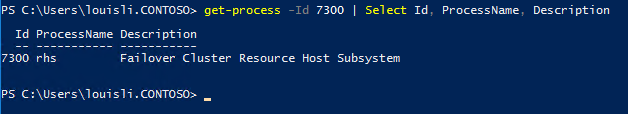

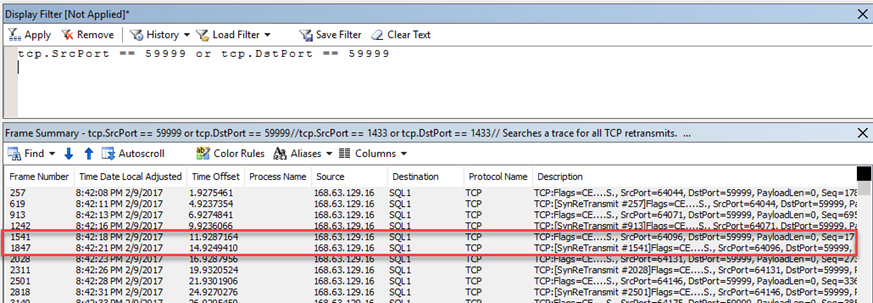

The port appears to be open, but are any ping (health probe) messages arriving from the ILB? To answer this question, we used Microsoft Network Monitor to capture the network traffic on port 59999.

No messages were coming in to or going out from port 59999.

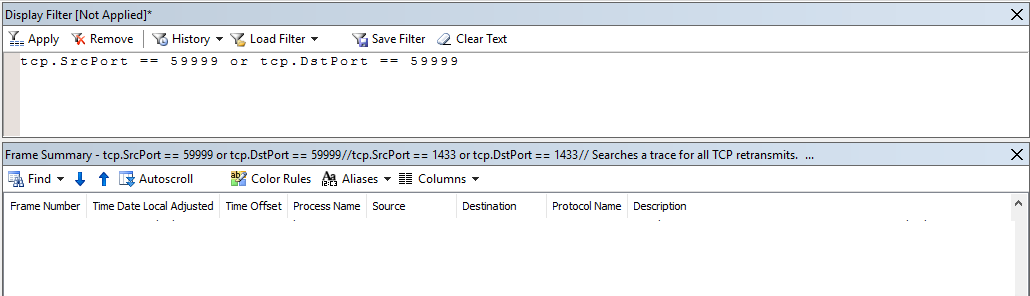

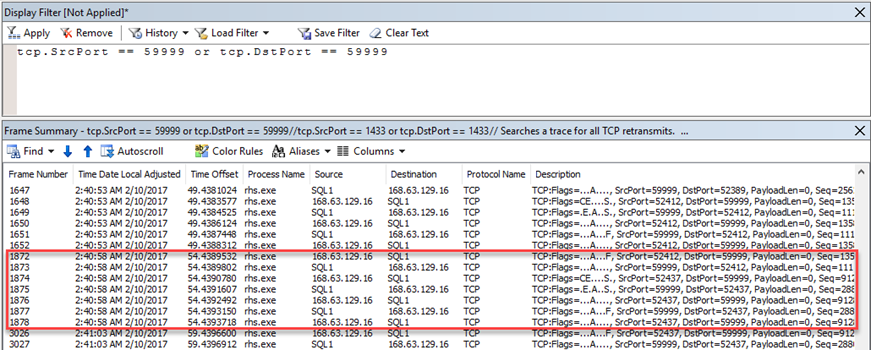

Under normal circumstances, however, ping messages reach the probe port every few seconds (the interval is defined in the ILB health probe).

On the passive node, ping messages also arrive, but the VM does not reply to them.

Since no ping messages were arriving from the ILB, we concluded that traffic between the ILB and the VMs was not getting through. Next, we needed to find out what was blocking it.
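Why does a missing probe break the listener? A deliberately simplified model of the ILB's behavior (not Azure's actual implementation) helps: the ILB sends new connections only to backends that answer its probes. The primary answers and the passive node does not, so normally only the primary is in rotation. If the probes never reach either VM, no backend is considered healthy. The function `healthy_backends` and its `unhealthy_threshold` parameter are illustrative names, loosely modeled on the ILB probe's unhealthy-threshold setting.

```python
def healthy_backends(probe_log, unhealthy_threshold=2):
    """Return the nodes a (simplified) ILB would still send new connections to.

    probe_log maps node name -> list of probe outcomes, oldest first
    (True = the node answered the TCP probe). A node stays in rotation only if
    it answered at least one of its last `unhealthy_threshold` probes; a node
    with no answered probes is never in rotation.
    """
    return sorted(
        node
        for node, outcomes in probe_log.items()
        if any(outcomes[-unhealthy_threshold:])
    )
```

With `{"SQL1": [True, True], "SQL2": [False, False]}` only `"SQL1"` is returned, which is the healthy case. When a firewall or NSG rule drops every probe, both lists stay empty and the function returns `[]`: the ILB has nowhere to forward listener connections from other machines.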

So we went back to the portal to see what we had missed about the ILB. Another review of the ILB configuration confirmed that everything was configured properly, and yet NetMon still showed no ping messages.

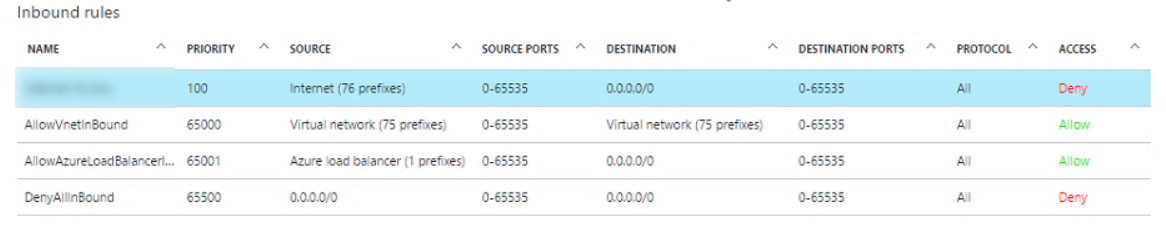

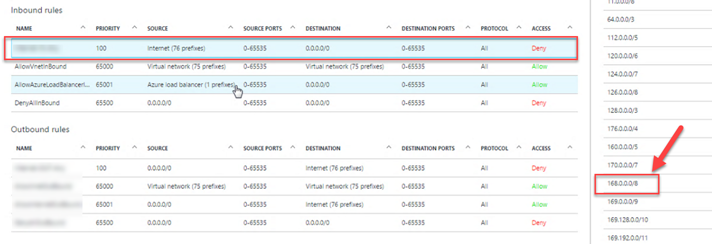

Next, we went back to the VM and looked at its network adapter. It has an option called "Effective security rules", which, as the name suggests, shows the security rules in effect on that network adapter. Besides the 3 default inbound rules, one of which is "AllowAzureLoadBalancerInBound", the list contained another rule, with priority 100, that denies internet connections:

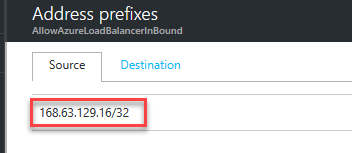

This rule looks suspicious because it denies 76 IP ranges. How do we know it is not blocking the ILB? Luckily, there is an easy way to find out: clicking the third rule, "AllowAzureLoadBalancerInBound", shows the IP address of our load balancer.

Going back to the first rule and cross-checking that address against the 76 prefixes, we found a match: 168.0.0.0/8. Every address starting with 168 is blocked, including the ILB's!
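Cross-checking one address against 76 prefixes by eye is error-prone, so here is a minimal sketch using Python's standard `ipaddress` module. It assumes the address shown under AllowAzureLoadBalancerInBound is 168.63.129.16, the well-known Azure platform address used as the source of health probes; the deny list in the usage example is a placeholder, not the real 76 ranges, and `blocking_prefixes` is a hypothetical name.

```python
import ipaddress

# Assumed source address of Azure load balancer health probes
# (the address listed under AllowAzureLoadBalancerInBound).
PROBE_SOURCE = "168.63.129.16"


def blocking_prefixes(address, deny_prefixes):
    """Return every prefix in `deny_prefixes` that contains `address`."""
    ip = ipaddress.ip_address(address)
    # strict=False tolerates prefixes written with host bits set.
    return [p for p in deny_prefixes if ip in ipaddress.ip_network(p, strict=False)]
```

For example, `blocking_prefixes(PROBE_SOURCE, ["1.0.0.0/8", "14.0.0.0/8", "168.0.0.0/8"])` returns `["168.0.0.0/8"]`, the same match we found manually. Pasting the full prefix list from the deny rule into `deny_prefixes` checks all 76 at once.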

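This ended our investigation. NSG rules are evaluated in priority order (lowest number first), and the default rules have priorities of 65000 and above, so the priority 100 deny rule takes effect before AllowAzureLoadBalancerInBound and blocks the ILB's address. As a result, the ILB cannot ping the SQL VMs and cannot redirect listener connections to the primary replica. Updating the deny rule to exclude 168.0.0.0/8 from the block list allowed the ILB to ping the SQL VMs and resolved the issue.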
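In our case, we simply took 168.0.0.0/8 out of the deny list. If you would rather keep blocking the rest of that range, a narrower alternative (not what we did) is to replace the /8 with the networks that remain after removing only the probe source address. The sketch below computes them with `ipaddress`'s `address_exclude`; `carve_out` is a hypothetical name, and 168.63.129.16 is again assumed to be the probe source.

```python
import ipaddress


def carve_out(deny_prefix, allow_prefix):
    """Split deny_prefix into the networks left after removing allow_prefix.

    Both prefixes must be the same IP version; address_exclude raises
    ValueError if allow_prefix is not inside deny_prefix.
    """
    deny = ipaddress.ip_network(deny_prefix)
    allow = ipaddress.ip_network(allow_prefix)
    return sorted(deny.address_exclude(allow))
```

`carve_out("168.0.0.0/8", "168.63.129.16/32")` yields 24 prefixes that together cover every address in 168.0.0.0/8 except the probe source. That is a much longer list to maintain, which is one reason removing the whole prefix was the simpler fix here.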

I hope this post saves you some time when troubleshooting an AG listener in Azure.
