SQL Diagnostics Project Part 2 – Custom SQL Nexus Imports

Several years ago, I wrote a blog post as to how you can collect your own custom SQL data from a SQL: Server instance (usually one from a customer) using the PSSDiag utility from Codeplex. At the end of the blog post, I mention that I was going to get around to each showing you how you can use SQL Nexus (another really useful tool from Codeplex) to upload the data you collected into a SQL database. Time, as it sometimes does, got away from me, and I wasn't able to get around to writing that post…until today. J So, the goal of today's blog post is to show you how you can take text data from literally any text-based file and use SQL Nexus to upload that data into a database and then write your own reports off that data.

Quick note: PSSDiag is the collection of scripts/utilities that ultimately calls SQL Diag – which then runs the scripts on a target SQL Server machine (your own if you're a DBA or a customer's machine if you're a consultant). So, PSSDiag is the mechanism for getting the scripts to and executing on the customer machine. The tool I reference above from Codeplex, DiagManager, is the tool for creating the PSSDiag. I outline how all of this works in my previous post.

I will begin where left off in the previous post - where we have a folder named output that has a bunch of data that the PSSDiag utility collected for me (again - this typically happens on a customer's SQL instance). Some of these files PSSDiag collected by default and several them are my own custom DMV/Powershell scripts. That is the simple beauty of PSSDiag – it is very easy to write and then collect data from your own custom scripts. However, the data in the text files is very hard to consume in their file format. This is where SQL Nexus comes into play. Most people use SQL Nexus to upload data from a PSSDiag/SQL Diag capture and then run some generic built-in SQL Nexus reports from it. These reports can be useful - but only up to a certain point. I'm usually not too interested in the reports that it generates. I am much more interested in its ability to upload that data that I've collected in my scripts so that I can run queries against it. And this is very easy to do.

This is a sample file collector script from my previous blog post. Note the PRINT statement I've embedded in the file. This tag is there so I can tell SQL Nexus to upload the result set in the file to a new table.

This is what the resulting file looks like when pssdiag outputs it from the customer's SQL Server:

The tag exists in the file – but…so what?

That tag is all I need to tell SQL Nexus to upload that data for me into a SQL table. That's right. Whatever files you've collected – just tell SQL Nexus to look for certain tags and it will create a table based on those tags so long as the result set you wish to import is immediately below the tag. It really doesn't matter how the output is formatted – SQL Nexus usually does an amazing job figuring everything out.

But – how does it know to do that? I'm glad you asked. J

The secret sauce here is a configuration file that SQL Nexus uses named TextRowsets.xml. If you navigate to where you have SQL Nexus installed (you can get it here) you'll see this TextRowsets.xml file. This is where you'll place all the snippets that you have marked in your files for your own custom data collections. You'll want to make yourself familiar with this file.

Here is a snippet from my file. I've highlighted where I have added the snippet for BackupHistory. The other part I've highlighted, cust_BackupHistory, is the name of the table that the result set will be uploaded to. Each rowset XML element relates to a rowset that SQL Nexus will try to import for you. You can add as many as you'd like. I can specify the column names and data types if I wanted to (there are examples for this in the TextRowsets.xml file), but I just let SQL Nexus figure it out for me. It uses general varchar data types – but that is perfectly fine for my purposes.

Once I have added the tag for the table to the XML file for SQL Nexus – my next step is to run through a normal SQL Nexus import. I'm not going to detail how to go through a data import for SQL Nexus – see the tool documentation on the CodePlex site for this. Basically, you point SQL Nexus at an instance of SQL that you plan to upload the data to for analysis (NOT the customer's SQL instance – a local instance for you, preferably on your work machine) and then tell SQL Nexus where the output folder is (the data you have collected from the customer) located from the PSSDiag collection (these two tools are very good friends). It will then loop through all the files in the folder and try to import whatever it can based on the data it has in the TextRowsets.xml file.

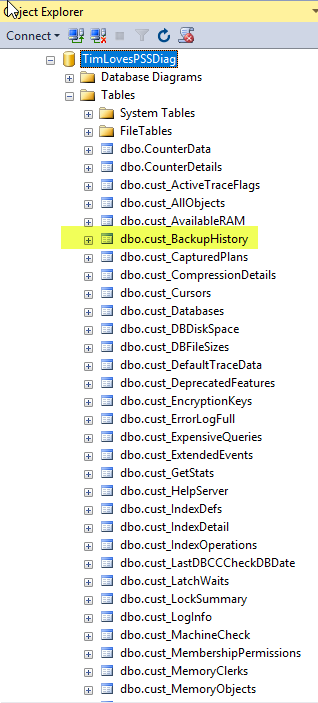

Once the SQL Nexus data import is complete, we can see that a new table named cust_BackupHistory has been created by SQL Nexus. This table contains the results from the file immediately following the –BackupHistory tag. All of the tables prefixed with cust_ are tables that I have written custom scripts for collecting customer data. I will share these again at some point. I'm working on something at the moment to automate this whole process and produce a nice report with findings, etc. Stay tuned there…

Also – very important – SQL Nexus also imports the Performance Monitor .blg files that PSSDiag collects by default. Even if you do not collect your own custom DMV data, the ability to analyze the data from Perfmon is incredibly useful. So much so that I can usually get a good idea as to what may be occurring on a customer machine before I even need to look at output from DMV queries, etc.

Now, once you have the data into database tables - you can run whatever analysis you want.

I hope this was useful!

Tim