Optimizing memory footprint: Compression in .Net 4.5

Performance is about tradeoffs. If you need to do something faster, you can usually do it by using more memory. If you need to reduce the space your data takes up, you can compress it, but you will pay for that in CPU.

When I was working on Azure Service Bus I was asked to reduce the amount of data we were storing in SQL Azure. We found a few options that were specific to our product (such as avoiding writing duplicate data in headers) but also explored compressing each message. Here are some interesting things I found about compression using the built-in .Net classes.

Compression algorithms available in .Net

Out of the box you have two options: DeflateStream and GZipStream. Both were introduced in version 2.0 of the Framework and significantly improved in 4.5. You can see people on the web asking which one is better. GZipStream uses Deflate internally, so apart from some extra framing data (a header and a footer) it is the same algorithm.

Note that if your payloads are very small you might be tempted to use Deflate to avoid that framing overhead. In my experience the header and footer are actually very nice to have. With some bit manipulation you can embed custom information in the GZip header. Also, by using GZipStream you get a well-formed GZip stream that you can inspect and decompress with any tool that supports GZip.
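To make the framing overhead concrete, here is a minimal sketch that compresses the same payload with both classes so the sizes can be compared. The class and method names (`FramingOverhead`, `DeflateBytes`, `GZipBytes`) are made up for illustration; only `DeflateStream`, `GZipStream` and `CompressionLevel` come from the Framework.

```csharp
using System.IO;
using System.IO.Compression;

// Hypothetical helper, not part of the original code base.
public static class FramingOverhead
{
    // Raw Deflate: compressed data only, no header or footer.
    public static byte[] DeflateBytes(byte[] payload)
    {
        using (var output = new MemoryStream())
        {
            using (var deflate = new DeflateStream(output, CompressionLevel.Fastest, leaveOpen: true))
            {
                deflate.Write(payload, 0, payload.Length);
            }
            // The compressor must be disposed before reading, so that all data is flushed.
            return output.ToArray();
        }
    }

    // GZip: the same Deflate data wrapped in a GZip header and footer.
    public static byte[] GZipBytes(byte[] payload)
    {
        using (var output = new MemoryStream())
        {
            using (var gzip = new GZipStream(output, CompressionLevel.Fastest, leaveOpen: true))
            {
                gzip.Write(payload, 0, payload.Length);
            }
            return output.ToArray();
        }
    }
}
```

Comparing `GZipBytes(data).Length` with `DeflateBytes(data).Length` for a typical message shows what the framing costs. The GZip format (RFC 1952) specifies a header of at least 10 bytes, starting with the magic bytes 0x1F 0x8B, and an 8-byte footer holding a CRC-32 and the uncompressed size, so the difference only matters for very small payloads.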

How well do they compress?

To measure how well they compressed the data I tried a few different scenarios:

- Text payload vs Binary payload: Text payload obviously compressed a lot more, close to 55% compression vs 20% compression for a binary payload.

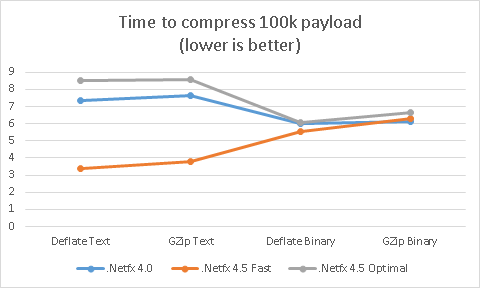

- Framework version and level: In 4.5 a CompressionLevel parameter was added to the DeflateStream and GZipStream constructors that lets you choose how aggressive the compression should be. So I tried version 4.0, version 4.5 with Fastest and version 4.5 with Optimal.

Here is the data:

Some conclusions:

- GZip takes longer and compresses about the same as Deflate. As mentioned before, this is not a surprise since it uses the Deflate algorithm.

- 4.5 is much better than 4.0. 4.0 is bad even with the text payload.

- CPU was about the same for all.

- .Netfx 4.5 with Compression Level Optimal is pretty slow for text payload. For binary all of them are about the same.
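If you want to run a similar comparison on your own payloads, a sketch along these lines may help. It is not the harness used for the numbers above; `LevelComparison`, `CompressedSize` and `TimeCompression` are hypothetical names, and a real benchmark would also need warm-up runs and representative messages.

```csharp
using System;
using System.Diagnostics;
using System.IO;
using System.IO.Compression;

// Hypothetical measurement helper, assuming .Net 4.5 or later.
public static class LevelComparison
{
    // Returns the size in bytes of the GZip output for the given level.
    public static long CompressedSize(byte[] payload, CompressionLevel level)
    {
        using (var output = new MemoryStream())
        {
            using (var gzip = new GZipStream(output, level, leaveOpen: true))
            {
                gzip.Write(payload, 0, payload.Length);
            }
            return output.Length;
        }
    }

    // Returns the total time spent compressing the payload 'iterations' times.
    public static TimeSpan TimeCompression(byte[] payload, CompressionLevel level, int iterations)
    {
        var watch = Stopwatch.StartNew();
        for (int i = 0; i < iterations; i++)
        {
            CompressedSize(payload, level);
        }
        watch.Stop();
        return watch.Elapsed;
    }
}
```

Calling both methods with `CompressionLevel.Fastest` and `CompressionLevel.Optimal` on the same text and binary payloads gives the size and time figures to compare.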

Implementation

My first attempt for compressing and decompressing was something like this:

    internal static MemoryStream CompressStream(Stream original)
    {
        var compressedStream = new MemoryStream();
        original.Position = 0;
        using (var compressor = new GZipStream(compressedStream, CompressionLevel.Fastest, leaveOpen: true))
        {
            original.CopyTo(compressor);
        }
        compressedStream.Position = 0;
        return compressedStream;
    }

    internal static MemoryStream Decompress(MemoryStream inputStream)
    {
        if (inputStream == null)
        {
            return null;
        }
        inputStream.Position = 0;
        // Decompress
        var decompressed = new MemoryStream();
        using (var decompressor = new GZipStream(inputStream, CompressionMode.Decompress))
        {
            decompressor.CopyTo(decompressed);
        }
        decompressed.Position = 0;
        return decompressed;
    }

After taking a profile with PerfView we saw very high CPU in GC due to Compression.DeflateStream. I was expecting high CPU but not a lot of allocation. Since PerfView also lets you take an allocation profile, the problem was easy to find: 93% of all the bytes were being allocated by this method:

    Name
    Type System.Byte[]
    + CLR <<clr!JIT_NewArr1>>
     + mscorlib.ni!Stream.InternalCopyTo
     |+ Microsoft.ApplicationServer.Messaging.Broker!CompressionHelper.Decompress

If we look at the recently released .Net code for the Stream class we can see the implementation of Stream.InternalCopyTo (which is called by Stream.CopyTo).

    private void InternalCopyTo(Stream destination, int bufferSize)
    {
        int num;
        byte[] buffer = new byte[bufferSize];
        while ((num = this.Read(buffer, 0, buffer.Length)) != 0)
        {
            destination.Write(buffer, 0, num);
        }
    }

As you can see, a new buffer is allocated for every stream we copy. When you call Stream.CopyTo without specifying a size, that buffer is 81,920 bytes (80K), chosen to stay just under the 85,000-byte threshold so that it won't be allocated in the Large Object Heap. This might be OK for a normal scenario, but in our case there was no way we could do it for each message. Allocating that much memory puts pressure on the GC, which then needs to collect much more frequently.

Once we had identified this, the solution was straightforward: use our own custom method to copy the data from one stream to the other.

We replaced Stream.CopyTo with a method that did buffer pooling, and that dropped the GC load dramatically. So if you are using compression in a server where GC is something you need to take care of, I recommend you use a method like this instead of Stream.CopyTo:

    internal static void CopyStreamWithoutAllocating(Stream origin, Stream destination, int bufferSize, InternalBufferManager bufferManager)
    {
        byte[] streamCopyBuffer = bufferManager.TakeBuffer(bufferSize);
        try
        {
            int read;
            while ((read = origin.Read(streamCopyBuffer, 0, bufferSize)) != 0)
            {
                destination.Write(streamCopyBuffer, 0, read);
            }
        }
        finally
        {
            // Return the buffer even if Read or Write throws, so the pool does not leak buffers.
            bufferManager.ReturnBuffer(streamCopyBuffer);
        }
    }

After doing this our allocation went back to normal. Note that there are many buffer pool implementations in C# available on the web.
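If you don't have a buffer manager at hand, a very small pool can be sketched as follows. This is an illustration only, not the InternalBufferManager used above: `SimpleBufferPool` and `PooledCopy` are hypothetical names, all buffers share a single fixed size, and the pool is unbounded, so a production version would probably want to cap how many buffers it keeps.

```csharp
using System;
using System.Collections.Concurrent;
using System.IO;

// Hypothetical minimal pool of fixed-size buffers (thread-safe via ConcurrentBag).
public sealed class SimpleBufferPool
{
    private readonly ConcurrentBag<byte[]> buffers = new ConcurrentBag<byte[]>();
    private readonly int bufferSize;

    public SimpleBufferPool(int bufferSize)
    {
        if (bufferSize <= 0) throw new ArgumentOutOfRangeException("bufferSize");
        this.bufferSize = bufferSize;
    }

    // Reuses a pooled buffer when one is available, otherwise allocates a new one.
    public byte[] TakeBuffer()
    {
        byte[] buffer;
        return buffers.TryTake(out buffer) ? buffer : new byte[bufferSize];
    }

    // Only buffers of the expected size go back into the pool.
    public void ReturnBuffer(byte[] buffer)
    {
        if (buffer != null && buffer.Length == bufferSize)
        {
            buffers.Add(buffer);
        }
    }
}

public static class PooledCopy
{
    // Copies origin to destination using a pooled buffer instead of a fresh allocation.
    public static void CopyStream(Stream origin, Stream destination, SimpleBufferPool pool)
    {
        byte[] buffer = pool.TakeBuffer();
        try
        {
            int read;
            while ((read = origin.Read(buffer, 0, buffer.Length)) != 0)
            {
                destination.Write(buffer, 0, read);
            }
        }
        finally
        {
            pool.ReturnBuffer(buffer);
        }
    }
}
```

A single pool instance would be shared across all messages, and `PooledCopy.CopyStream(original, compressor, pool)` would take the place of `original.CopyTo(compressor)` in the compression code. On newer versions of .Net, `ArrayPool<byte>.Shared` from System.Buffers offers a ready-made alternative.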