Building True Multi-Subnet Windows Server Failover Clusters

If you know clustering at all, you may have heard of “multi-subnet clusters”, a capability that Windows Server Failover Clustering has had since Windows Server 2008 and that SQL Server has supported since SQL Server 2012. Conceptually it’s the same thing as a normal cluster, which is designed to withstand an instance failure, except that a multi-subnet cluster should let us withstand whole network failures too, in theory at least. In reality a multi-subnet cluster needs some more thought before it is truly multi-subnet, and this article is about how to get there.

For this article I’ll use SharePoint as the “client” of SQL Server, as this kind of setup is commonly used for SharePoint farms. SQL Server is version 2014, which has some excellent new automatic recovery features for when AlwaysOn clusters lose connectivity. It’s just beautiful to see in fact: as soon as network connectivity is restored the cluster is automatically restored, and DB syncing resumes even if there’s been a failover during the outage. It certainly made writing this article much easier, I can tell you.

But I digress; the point of this article is to show more abstractly how Windows Server Failover Clustering (WSFC) can handle network outages when set up properly, WSFC being the foundation for any type of clustering, from server roles to SQL Server. We’re using SQL Server in this example but it’s the same for any clustered role on WSFC.

The True Multi-subnet Cluster

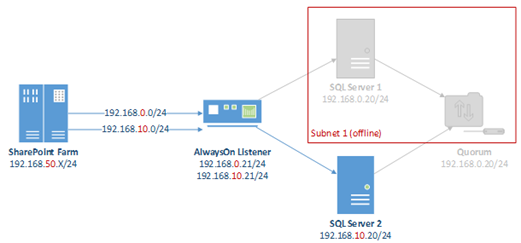

Here’s a cluster example that technically qualifies but isn’t very good, for reasons we’ll see in a minute:

Notice how each SQL Server is in its own subnet, which alone qualifies this as a multi-subnet cluster. There is, however, a big difference between a multi-subnet cluster and a multi-subnet cluster that can withstand network failures correctly. As we’ll see, this one will die horribly if and when the wrong network goes down, which rather defeats the purpose of having a multi-subnet cluster. Here it is:

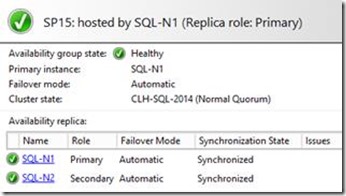

A happy cluster, at least until network “1” goes down, because we didn’t put the quorum resource on the right subnet (storage1 is in the same subnet as server 1). SQL Server too reports everything is hunky-dory:

SQL Server 1 is the primary at the moment. Now let’s cause a network outage and see what happens.

Test Outage – Network 1 Offline!

So in our above example, let’s say that network 192.168.0.0/24 goes offline, taking with it SQL Server 1 and the quorum. Let’s say the router connecting that network to the others dies, effectively isolating the network. From the perspective of SharePoint in this example, this is what will happen:

The important question is: what happens to the SQL client, SharePoint, if one of our subnets goes offline? To answer that, let’s see what happens to SQL:

SQL Server 1 says:

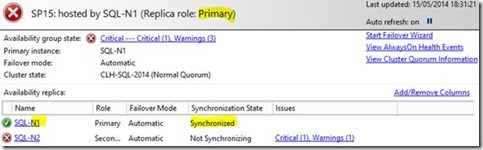

Looking at the AlwaysOn dashboard for server 1 we see this:

Server 1 is quite happy to continue being the primary, which is very bad news indeed as that’s the server that’s “offline” to everyone else. Really we want it to realise it’s no longer needed.

SQL Server 2 says:

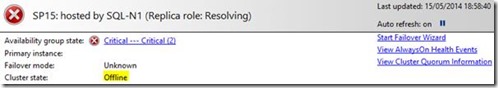

Looking at server 2’s AlwaysOn status we see:

Server 2 is struggling too and is trying to figure out why it can’t connect to server 1. Considering it’s the only server still visible to SharePoint, that’s a bad thing; really it should have taken over as the primary, active node.

What Happened?

If this were a real outage we’d be doomed, and SharePoint at this point would be serving up only a torrent of fatal errors. SQL Server 1 still thinks it’s the active node because it still has the quorum, even though it can’t connect to server 2. Server 2, unable to connect to either server 1 or the quorum, assumes it is the faulty server and so doesn’t fail over.

Databases are offline; our cluster has failed us and it’s our bad cluster design that’s at fault.
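The vote count behind this outcome can be sketched in a few lines of Python. This is a deliberately simplified static-majority model, not WSFC’s actual algorithm (it ignores dynamic quorum, for instance), and the names `VOTERS`, `votes_on_each_side` and the `net1`/`net2` labels are made up purely for illustration.

```python
# Simplified model of the flawed layout: the witness shares a subnet with server 1.
# Assumption: every voter carries exactly one vote and a side needs a strict majority.
VOTERS = {"server1": "net1", "witness": "net1", "server2": "net2"}


def votes_on_each_side(voters, failed_subnet):
    """Split the voters into those cut off with the failed subnet and the rest."""
    isolated = sorted(v for v, net in voters.items() if net == failed_subnet)
    remaining = sorted(v for v, net in voters.items() if net != failed_subnet)
    return isolated, remaining


isolated, remaining = votes_on_each_side(VOTERS, "net1")
majority = len(VOTERS) // 2 + 1  # 2 of the 3 votes

print("isolated side:", isolated, "has quorum:", len(isolated) >= majority)
print("client-facing side:", remaining, "has quorum:", len(remaining) >= majority)
```

In this model the isolated side (server 1 plus the witness) keeps two of the three votes, while server 2, the only node SharePoint can still reach, is left with one.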

How Should the Cluster Be Set Up?

When any given SQL Server instance loses connectivity with its partner(s) because its network is down, the server needs to fail correctly, which it won’t do if it has the quorum. While a server has “the quorum”, or while it can see something else in the cluster, it will assume that it’s the other guy that’s gone offline. That is kind of the point of having a quorum: to agree which server is the most suitable to respond to requests. Clusters are fundamentally just a way of having groups of computers vote on who’s best placed to run things, and when a machine disappears the remaining machines need to be able to figure out whose fault it is. A quorum in this two-node cluster helps that vote happen accurately.
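To see why a two-node cluster wants that extra vote, here is the majority arithmetic as a small Python sketch. It assumes plain majority voting with one vote per voter, which is a simplification of what WSFC really does; `votes_needed` and `tolerated_losses` are illustrative names, not part of any WSFC API.

```python
def votes_needed(total_votes):
    """Smallest number of votes that is strictly more than half."""
    return total_votes // 2 + 1


def tolerated_losses(total_votes):
    """How many voters can disappear before the rest lose their majority."""
    return total_votes - votes_needed(total_votes)


for label, total in (("two nodes, no witness", 2), ("two nodes plus witness", 3)):
    print(label, "-> need", votes_needed(total), "of", total,
          "votes; can lose", tolerated_losses(total))
```

With only two votes, losing either voter leaves the survivor without a majority; the third vote is what lets one side carry on.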

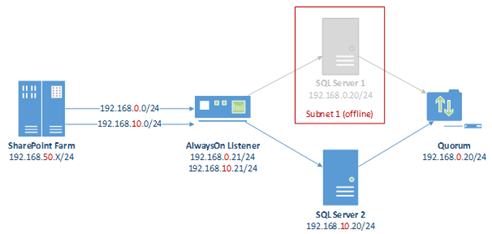

So it’s simple: put the quorum in a 3rd network so each node can detect a network failure correctly.
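As a rough sanity check of that layout, the Python sketch below walks through every possible single-subnet outage and reports which SQL node, if any, both keeps a majority and stays reachable. It rests on some loud assumptions: one vote per voter, static majority voting, clients living outside the failed subnet, and hypothetical labels `net1` to `net3` standing in for the real subnets. `reachable_owner` is an invented helper, not a WSFC function.

```python
# Assumptions: one vote per voter, static majority, clients sit outside the failed subnet.
NODES = {"server1", "server2"}
LAYOUT = {"server1": "net1", "server2": "net2", "witness": "net3"}


def reachable_owner(layout, failed_subnet):
    """Return the SQL nodes that keep quorum AND stay reachable when one subnet is isolated."""
    remaining = {v for v, net in layout.items() if net != failed_subnet}
    majority = len(layout) // 2 + 1
    if len(remaining) < majority:
        return set()  # the isolated side holds the majority, so clients are stranded
    return remaining & NODES


for subnet in sorted(set(LAYOUT.values())):
    print(subnet, "down ->", sorted(reachable_owner(LAYOUT, subnet)))
```

Feeding the same function the earlier layout, with the witness in `net1`, returns an empty set for a `net1` outage, which matches the failure we saw above.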

Now let’s break it again – let’s kill the router onto server 1’s network to see if we survive any better this time.

Network 1 Down Again!

This time, breaking network 1 has a more limited impact:

As we can see, network 1 now contains only the one server. Server 2 can still reach the quorum, so it holds the majority of votes and the correct server takes over as the active node…

SQL Server 1 Says

Our previously active SQL Server node (server 1) should’ve given up, which this confirms it has:

This basically says “I’m pretty sure I’ve gone offline so I’m basically just trying to figure out where everyone else has gone”, which is what we want.

SQL Server 2 Says

Our previously passive node should now take over as it has the quorum, rightly so.

This confirms it has. Automatic failover is only possible with two standalone instances; otherwise you have to initiate the failover manually, but here it’s done it for us.

Wrap-up

So there you have multi-subnet clustering properly explained. If it’s not done right then your client application just won’t survive the failover correctly if the wrong network goes offline. Set it up correctly and you’ve just made your SQL Server cluster that bit more resilient.

Cheers,

// Sam Betts

Comments

Anonymous

October 23, 2014

The quorum IP in the last picture is incorrect and should be .20.

Anonymous

October 23, 2014

Ah, so it is - well spotted!

Anonymous

March 15, 2017

So in the Proper Setup Scenario, what subnet would the Cluster Name Object reside on? I have a scenario that I'm working on where Server1 and Server2 are on different subnets at different sites that cannot share layer 2 subnets. My problem is if I put the cluster name on the same subnet as Server1 and Server1 goes down, it tries to bring up the cluster on Server2, and the IP address of the cluster name object is not on the same subnet as Server2, so the entire cluster shows Offline.