SharePoint Search and performance

Introduction

During a CritSit, I came across a very interesting problem that was related to the Search Service. Before I arrived onsite, this was the scope to solve the issue:

We will consider this case solved and ready to be closed once we provide the information on how the numbers of items crawled will affect your search web part.

Ok, so if you have some experience using search in your custom code, you are aware of the fact that the search is actually pretty well performing no matter the amount of items in the index right? I mean, it’s the thing I evangelize at customers when they want to aggregate data because it’s the way to get data from everywhere (cross sitecollection/webapplication) in your environment in a very fast way, security trimmed and all!

But….. there are some things you should think about when dealing with LARGE amount of data. When dealing with 10.000+ items as result sets, it becomes (even) more important to create efficient queries and try to avoiding using “Order By” on non optimized fields (just consider the Order by to be EVIL ;)

Testing SharePoint Search

Let’s take a look at the performance of the following query against different number of items in the index by using the following test scenario :

- An announcement List that holds an x amount of announcements, where x will be 1000, 10.000, 100.000;

- Order by Rank query :

- Select Title, Rank, Size, Description, Write, Path, contentclass

FROM Scope() where contentclass = 'STS_ListItem_Announcements'

Order by 'Rank'

- Select Title, Rank, Size, Description, Write, Path, contentclass

- Order by Write query :

- Select Title, Rank, Size, Description, Write, Path, contentclass

- FROM Scope() where contentclass = 'STS_ListItem_Announcements'

- Order by 'Write'

- No order by query :

- Select Title, Rank, Size, Description, Write, Path, contentclass

- FROM Scope() where contentclass = 'STS_ListItem_Announcements'

- Order by Rank query :

First, I’ve added 1000 items to the list and then performed each query 10 times in a row.

| Search Query | 1000 Order by Rank | 1000 (Order by "Write") | 1000 (No Order by) |

| 321 | 470 | 338 | |

| 320 | 438 | 304 | |

| 449 | 421 | 331 | |

| 326 | 363 | 288 | |

| 326 | 392 | 298 | |

| 372 | 389 | 361 | |

| 325 | 417 | 291 | |

| 339 | 410 | 310 | |

| 361 | 370 | 334 | |

| 354 | 393 | 338 | |

| Average duration (in ms) | 349.3 | 406.3 | 319.3 |

Then I added 9000 items more, which results in 10.000 items in one list. After the crawl and then by rerunning the same query, the following outcome was the result:

| Search Query | 10000 Order by Rank | 10000 Order by "Write) | 10000 No Order by |

| 243 | 1705 | 266 | |

| 261 | 1529 | 380 | |

| 247 | 1411 | 248 | |

| 270 | 1418 | 263 | |

| 242 | 1507 | 252 | |

| 303 | 1705 | 282 | |

| 256 | 1693 | 255 | |

| 261 | 1697 | 285 | |

| 236 | 1699 | 242 | |

| 277 | 1625 | 301 | |

| Average duration | 259.6 | 1598.9 | 277.4 |

Then when adding an additional 90.000 items to have 100.000 items in the list, we get the following results

| Search Query | 100000 Order by Rank | 100000 Order by "Write) | 100000 No Order by |

| 317 | 3666 | 156 | |

| 314 | 3654 | 189 | |

| 309 | 3948 | 205 | |

| 294 | 3689 | 197 | |

| 300 | 3735 | 196 | |

| 289 | 3971 | 175 | |

| 341 | 3736 | 172 | |

| 329 | 3608 | 163 | |

| 356 | 3748 | 169 | |

| 359 | 3701 | 164 | |

| Average duration | 320.8 | 3745.6 | 178.6 |

As you can see in the tables and the graphs, the search results coming back from the “Order by Rank” and “No Order by” stay relatively the same. However, there is quite a big difference in the performance when we are using another column for the ‘Order by’. The reason for this is, even though we set a RowLimit, this RowLimit is only important for the amount of results being returned. In order to give back a good search result, the entire index has to be queried and those results are ‘ordered by’ internally using the specified column. Because this column is not very optimized (it’s like doing a string compare on every row returned) it’s takes a lot of time for the results to be returned.

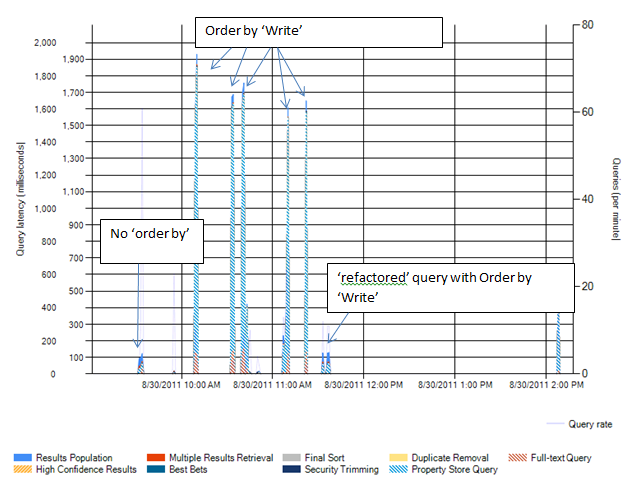

To prove my point, here is the graph of the Backend Query Latency, where it shows that most of the time is spent in the Property Store

So, what can we do about it? There are two basic things:

- Refactor the Query. Most of the time, if we don’t really care about the relevance of the result, we want to order the results on DateTime like ‘last modified’. Now, this means that we don’t actually need every record from the index that was like uploaded three years ago right? (it’s like the same as going to page 503 on the search results page of your favorite online search engine :). So we are going to narrow down the results that will be returned by appending this to the query “AND Write > '2011-1-1' AND Write < ‘2011-2-1’ “ will narrow down the results to only get the items from 1st of January 2011 and 1st of February 2011 (or ‘last month’). Likewise we can do same for something like ‘last week’ by using this “AND Write > '2011-1-1' AND Write < ‘2011-1-7’” (depending on the culture settings, the notation of the datetime is like this : Year-Month-Day')

In our test scenario, I used the following query “SELECT Title, Rank, Write, Path, contentclass FROM Scope() where contentclass = 'STS_ListItem_Announcements' AND write = '2011-8-29 12:29:00' ORDER BY write” . This query gave me back 1000 items with an average of 180ms(!!!), quite a big performance gain right? ;) (The reason for the specific time is because I used ProcessBatchdata to add my announcement items, meaning that using only the date will still return 100.000 items) - Do the ordering/sorting in memory, a second option is to use the Rank for getting the results back based on their relevancy and then do ordering/sorting in memory in code like you would when sorting/ordering a custom collection or DataTable. But please note that doing this might affect the data integrity since the results were not ordered on that column/field but on the Rank column.

- Have dedicated disks (SSD) for every Property Store Database, if you didn’t already knew, the search databases are the databases that actually require the most disk i/o throughput so it maybe worth looking into how good the disks on SQL Server are doing. A good read on the whole SAN discussion can be found at Ryan Campbell’s blogpost https://blogs.technet.com/b/rycampbe/archive/2011/08/23/virtualization-the-san-and-why-one-big-raid-5-array-is-wrong.aspx

Conclusion

So, to conclude, this is how provided the customer with the facts on how the number of items being crawled can impact the performance of SharePoint Search if you use another column/field for doing the Order By on. The recommendation was to refactor the query I just mentioned and also to talk to the SAN team since they were claiming performance that wasn’t met when we did the testing.

Comments

Anonymous

September 13, 2011

Really great post most, very interesting stuff you have drawn out there. I have shared it with the team. Thanks! DanielAnonymous

September 13, 2011

Robin, Really interesting post mate @Dan thanks for sharing, worth trying the search performance with differnt scenarios.. Thanks!!! SuyogAnonymous

September 13, 2011

Great Research, Thanks for providing us your effort It's really a great help