Parallel Programming with OpenMP

OpenMP is a parallel programming model (a set of compiler directives and library routines) for shared-memory multiprocessors. It grew out of work pioneered by SGI and has since become the de facto standard for parallelizing Fortran and C/C++ applications. Take a look at the official OpenMP website for more information about the standard. In this post I will take a more practical approach and show you how to use OpenMP in Microsoft Visual C++.

OpenMP uses the fork-join model of parallel execution. Every OpenMP program begins as a single thread, called the master thread, which executes sequentially until it encounters the first parallel region construct. There the master thread forks a team of threads that execute the region together; at the end of the region the team joins, and only the master thread continues. Most OpenMP constructs are compiler directives with the following structure:

#pragma omp construct [clause [clause] …]
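To make the fork-join model concrete, here is a minimal sketch (not part of the original post) that asks how many threads are running before, inside and after one parallel region. The directive #pragma omp parallel num_threads(4) follows the construct-plus-clause form above. The request for 4 threads, the RegionReport struct and the fork_join_demo function are illustrative choices, and the #ifdef _OPENMP stub is an assumed fallback so the sketch still compiles when OpenMP is switched off.

```cpp
#include <cassert>

#ifdef _OPENMP
#include <omp.h>
#else
// Assumed serial fallback (hypothetical) so the sketch builds without /openmp.
inline int omp_get_num_threads() { return 1; }
#endif

// Hypothetical result type: number of active threads at each stage.
struct RegionReport {
    int before;  // before the parallel region: only the master thread
    int inside;  // inside the region: the whole team
    int after;   // after the join: only the master thread again
};

RegionReport fork_join_demo() {
    RegionReport r{};
    r.before = omp_get_num_threads();

    // Fork. Construct: parallel. Clause: num_threads(4) requests a team of 4.
    #pragma omp parallel num_threads(4)
    {
        // Exactly one thread of the team records the team size.
        #pragma omp single
        r.inside = omp_get_num_threads();
    }   // Join: implicit barrier, then only the master thread continues.

    r.after = omp_get_num_threads();
    assert(r.before == 1 && r.after == 1);  // serial parts run on one thread
    return r;
}
```

Outside the region omp_get_num_threads() returns 1; inside it returns the team size, which is at most the 4 threads requested.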

In C/C++, the simplest OpenMP program includes the omp.h header and marks parallel code with #pragma omp directives. See the OpenMP documentation in the MSDN Library for the complete list of constructs that MS VC++ supports, and compile your program with the /openmp option.
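A quick way to confirm that the /openmp switch actually took effect is the _OPENMP macro, which the compiler defines only when OpenMP is enabled (GCC and Clang use -fopenmp instead of /openmp). Without the switch the #pragma omp lines are ignored and the code runs on a single thread. The sketch below is only an illustration; openmp_version and report_openmp are hypothetical helper names.

```cpp
#include <cassert>
#include <cstdio>

// Returns the value of _OPENMP if the compiler was run with OpenMP enabled,
// or 0 if the #pragma omp lines are being ignored.
long openmp_version() {
#ifdef _OPENMP
    return _OPENMP;  // a yyyymm date; 200203 corresponds to OpenMP 2.0
#else
    return 0;
#endif
}

// Hypothetical helper: tell the user whether OpenMP is active in this build.
void report_openmp() {
    long v = openmp_version();
    if (v == 0)
        std::printf("OpenMP disabled: recompile with /openmp\n");
    else
        std::printf("OpenMP enabled, _OPENMP = %ld\n", v);
}
```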

```cpp
#include <omp.h>
#include <iostream>

using namespace std;

int main () {
    int nthreads, tid;

    /* Fork a team of threads, each with its own copy of tid */
    #pragma omp parallel private(tid)
    {
        /* Obtain and print thread id */
        tid = omp_get_thread_num();
        /* Output from different threads may interleave, since each << is a separate call */
        cout << "Hello World from thread = " << tid << endl;

        /* Only the master thread does this */
        if (tid == 0)
        {
            nthreads = omp_get_num_threads();
            cout << "Number of threads = " << nthreads << endl;
        }
    } /* All threads join the master thread and terminate */

    return 0;
}
```

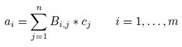
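In the Hello World program, tid is private, so each thread holds its own id, while nthreads is shared. The following sketch (an illustration, not from the original post; collect_thread_ids is a hypothetical name) stores each thread's id in a shared vector instead of printing it, which also avoids the interleaved console output that concurrent cout calls can produce.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

#ifdef _OPENMP
#include <omp.h>
#else
// Assumed serial fallbacks (hypothetical) so the sketch builds without /openmp.
inline int omp_get_thread_num() { return 0; }
inline int omp_get_num_threads() { return 1; }
#endif

// Each thread writes its own (private) id into the slot of a shared vector
// indexed by that id, so no two threads ever write the same element.
std::vector<int> collect_thread_ids() {
    std::vector<int> ids;   // shared by the whole team
    int tid;                // private: one copy per thread
    #pragma omp parallel private(tid) shared(ids)
    {
        tid = omp_get_thread_num();

        // One thread sizes the vector for the whole team; the implicit
        // barrier at the end of single makes it ready for everyone.
        #pragma omp single
        ids.assign(static_cast<std::size_t>(omp_get_num_threads()), -1);

        assert(tid >= 0 && tid < static_cast<int>(ids.size()));
        ids[tid] = tid;
    }
    return ids;
}
```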

Remember to include omp.h, because it declares all of the OpenMP function prototypes and types. Parallelizing an application with OpenMP is often “not hard”: modifications to the original source are usually needed in just a few places, and you rarely need to rewrite significant portions of the code. In general, the effort of parallelizing a program with OpenMP goes mainly into identifying the parallelism, not into reprogramming the code to implement it. Let me show you how to use OpenMP to parallelize a very basic problem: multiplying an m x n matrix B by a vector c of length n, storing the result in a vector a of length m. Here is the formula:

$$a_i = \sum_{j=1}^{n} B_{ij}\, c_j, \qquad i = 1, \ldots, m$$

The sequential code looks like this:

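```cpp
/* Computes a = B*c. B is stored row by row, so B(i,j) is b[i*n+j]; indices start at 0 */
void multiplyer(int m, int n, double *a, double *b, double *c)
{
    int i, j;

    for (i=0; i<m; i++)
    {
        a[i] = 0.0;
        for (j=0; j<n; j++) a[i] += b[i*n+j]*c[j];
    }
}
```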
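As a worked example of the formula and the sequential code, take m = 2, n = 3, B = [1 2 3; 4 5 6] and c = (1, 0, 2). Then a_1 = 1*1 + 2*0 + 3*2 = 7 and a_2 = 4*1 + 5*0 + 6*2 = 16. The sketch below runs the same computation; the worked_example wrapper and the std::vector storage are assumptions for illustration. Note that the C code uses 0-based indices, so a_1 is stored in a[0].

```cpp
#include <cassert>
#include <vector>

// The sequential multiplyer from above: a = B*c with B stored row by row.
void multiplyer(int m, int n, double *a, double *b, double *c)
{
    int i, j;
    for (i = 0; i < m; i++)
    {
        a[i] = 0.0;
        for (j = 0; j < n; j++) a[i] += b[i*n+j]*c[j];
    }
}

// Hypothetical worked example: B = [1 2 3; 4 5 6], c = (1, 0, 2).
std::vector<double> worked_example()
{
    std::vector<double> b = {1, 2, 3,
                             4, 5, 6};   // row-major 2 x 3 matrix
    std::vector<double> c = {1, 0, 2};
    std::vector<double> a(2);
    assert(b.size() == a.size() * c.size());  // B must be m x n
    multiplyer(2, 3, a.data(), b.data(), c.data());
    return a;
}
```

The returned vector holds 7 and 16, matching the hand calculation.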

Let's review the code before parallelizing it. Each element a[i] is the dot product of row i of B with c, so no two dot products compute the same element of the result vector, and the order in which the elements a[i], for i = 1, ..., m, are calculated does not affect the correctness of the answer. In other words, this problem can be parallelized over the index i.
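The independence argument can be checked directly. In this sketch (row_dot and order_is_irrelevant are hypothetical helpers, not part of the post), a = Bc is computed once with i running upward and once with i running downward. Since each a[i] depends only on row i of B and on c, both passes produce the same vector, which is exactly what makes it safe to hand different values of i to different threads.

```cpp
#include <cassert>
#include <vector>

// One dot product: row i of the row-major m x n matrix B times the vector c.
double row_dot(int n, int i, const double *b, const double *c)
{
    double s = 0.0;
    for (int j = 0; j < n; j++) s += b[i*n + j] * c[j];
    return s;
}

// Computes a = B*c in two different row orders and reports whether the
// results agree. They must, because no a[i] depends on any other a[k].
bool order_is_irrelevant(int m, int n, const std::vector<double> &b,
                         const std::vector<double> &c)
{
    assert(static_cast<int>(b.size()) == m * n);
    assert(static_cast<int>(c.size()) == n);
    std::vector<double> up(m), down(m);
    for (int i = 0; i < m; i++)      up[i]   = row_dot(n, i, b.data(), c.data());
    for (int i = m - 1; i >= 0; i--) down[i] = row_dot(n, i, b.data(), c.data());
    return up == down;
}
```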

Data in an OpenMP program is either shared by the threads in a team, in which case all member threads can access the same shared variable, or private, in which case each thread has its own copy of the data object, and hence the variable may have different values in different threads. In our case, we want every thread to access the same variables m, n, a, b and c, but each thread must have its own copies of i and j; otherwise the threads would overwrite each other's inner loop counter j. A single #pragma directive is sufficient to parallelize multiplyer.
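To see the difference between private and shared data in isolation, here is a small sketch (count_iterations is a hypothetical example, and #pragma omp atomic is used here only to protect the shared update). Each thread counts its own loop iterations in a private variable, then adds that count to a shared total. Different threads may end up with different values of mine, but total always equals m.

```cpp
#include <cassert>

// 'mine' is private: every thread has its own copy.
// 'total' is shared: all threads update the same variable, so the update is
// protected with #pragma omp atomic to avoid a data race.
int count_iterations(int m)
{
    assert(m >= 0);
    int total = 0;   // shared by the whole team
    int mine;        // each thread gets its own (uninitialized) copy
    #pragma omp parallel private(mine) shared(total, m)
    {
        mine = 0;    // private copies start uninitialized, so set them first

        // The iterations are divided among the threads of the team.
        #pragma omp for
        for (int i = 0; i < m; i++) mine++;

        // Add this thread's count to the shared total, one thread at a time.
        #pragma omp atomic
        total += mine;
    }
    return total;
}
```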

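```cpp
void multiplyer(int m, int n, double *a, double *b, double *c)
{
    int i, j;

    /* Split the iterations over i among the threads; m, n, a, b and c are shared,
       while every thread gets private copies of i and j */
    #pragma omp parallel for default(none) shared(m,n,a,b,c) private(i,j)
    for (i=0; i<m; i++)
    {
        a[i] = 0.0;
        for (j=0; j<n; j++) a[i] += b[i*n+j]*c[j];
    }
}
```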
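To check that the parallel version computes the same result as the sequential one, and to get a rough idea of the speedup, both can be run on the same input and timed with omp_get_wtime(), the OpenMP wall-clock timer. This is a sketch under assumptions: the function names, the matrix size and the integer-valued test data are illustrative, and the std::chrono stub only stands in for omp_get_wtime() when OpenMP is disabled.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

#ifdef _OPENMP
#include <omp.h>
#else
#include <chrono>
// Hypothetical serial fallback for the OpenMP wall-clock timer.
inline double omp_get_wtime() {
    using namespace std::chrono;
    return duration<double>(steady_clock::now().time_since_epoch()).count();
}
#endif

// Sequential version, as in the post.
void multiplyer_seq(int m, int n, double *a, double *b, double *c) {
    for (int i = 0; i < m; i++) {
        a[i] = 0.0;
        for (int j = 0; j < n; j++) a[i] += b[i*n+j]*c[j];
    }
}

// Parallel version, with the same pragma as in the post.
void multiplyer_par(int m, int n, double *a, double *b, double *c) {
    int i, j;
    #pragma omp parallel for default(none) shared(m,n,a,b,c) private(i,j)
    for (i = 0; i < m; i++) {
        a[i] = 0.0;
        for (j = 0; j < n; j++) a[i] += b[i*n+j]*c[j];
    }
}

// Hypothetical check: run both versions on the same m x n input, store their
// run times in seconds, and report whether the results are identical.
bool compare_versions(int m, int n, double *seconds_seq, double *seconds_par) {
    assert(m > 0 && n > 0);
    std::vector<double> b(static_cast<std::size_t>(m) * n), c(n), a1(m), a2(m);
    // Small integer values keep every sum exact, so the results must match.
    for (std::size_t k = 0; k < b.size(); k++) b[k] = static_cast<double>(k % 7);
    for (int j = 0; j < n; j++) c[j] = static_cast<double>(j % 3);

    double t0 = omp_get_wtime();
    multiplyer_seq(m, n, a1.data(), b.data(), c.data());
    double t1 = omp_get_wtime();
    multiplyer_par(m, n, a2.data(), b.data(), c.data());
    double t2 = omp_get_wtime();

    *seconds_seq = t1 - t0;
    *seconds_par = t2 - t1;
    return a1 == a2;
}
```

Any speedup depends on the machine and the matrix size; for small matrices the cost of forking the team can outweigh the gain.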

You can continue your OpenMP exploration by reading this tutorial. If you prefer books, I recommend the following titles from MIT Press. I will post more about parallel computing later.

Cheers – RAM