The Iceberg Effect

In cybersecurity, especially in the Digital Forensics and Incident Response (DFIR) space, the "Iceberg Effect" plays a detrimental role in the execution phase of response and recovery. It often leaves analysis incomplete, which translates directly into insufficient response and recovery plans and, worse, a very high probability of failed attempts to evict the actor from the environment.

But what exactly is "the Iceberg Effect" and what can we do about it?

As cyber warriors with various tools deployed and implemented, we have tons of data at our fingertips. Most of the time it is too much data, since most bosses want to "log everything" and auditors often simply ask "do you log [x]". To get the checkbox checked, the response is either "yes" or "no, but we will start logging it!". Whether anyone ever looks at that data, triggers on it to start specific workflows or automation, analyzes it, or even knows it exists once the audit is passed becomes a secondary if not tertiary question. In the rare cases when we do have data that is actionable and that insights can be drawn from, well, this becomes "the tip of the iceberg". This is typically where analysis stops! For example, those of us who are highly trained in network defense and have developed a culture around it start and stop with network defense tools. Sure, we might analyze an endpoint, but that typically means doing some quick forensics on the box and then turning it into ashes. Our network tools point to a device where known malicious or anomalous activity came from, and we conduct additional analysis only on that box.

Now back to the Iceberg and how the heck that relates…

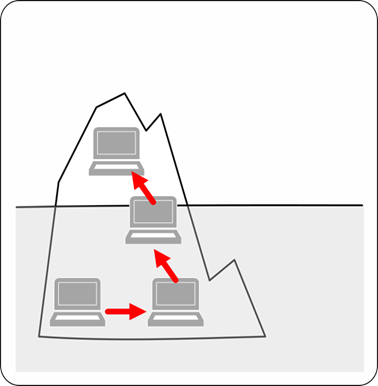

As many of us know, roughly 10% of an iceberg sits above the water and 90% below it. As cyber defenders, when we do see an iceberg (our trigger, the moment we spring into action), we see the 10% but fail to see what is under the water. That hidden 90% could provide additional context, sometimes very important context such as root cause or other indicators we could pivot on to see which of our other assets are potentially compromised.

This isn't our fault. Our tools are disparate and aren't integrated with each other. SIEMs try to fill this void by enabling workflows through the collection and analysis of the small sets of data they collect, but they don't make up for the absence of the right sensors. Most if not all of the tools in the environment are geared toward keeping the bad guy out, not detecting the bad guy who is already in our network!

Understanding the steps that take place after an adversary establishes a foothold in the environment is important for learning how to create a strong detect strategy. At the highest level, here is the problem space, including the post-exploit phase of the actor's actions:

Again, unfortunately, our sensors are geared toward the protect phase, which is purely about preventing the initial beachhead. The common belief is that network sensors and even host sensors provide a level of defense in depth. Unfortunately, these sensors detect the same things, just at different places in the environment. Repetitive sensing at various stages is certainly not a bad strategy, but it is still insufficient. A true detect strategy must give us visibility into adversarial activities across the whole campaign, including after a successful infiltration. Tools like Advanced Threat Analytics (https://aka.ms/ata) help turn Active Directory and the identity plane into one of the best detectors of this post-exploit activity. Working backwards to build the broader picture, fusing this data with tools like Operations Management Suite (https://oms.microsoft.com) and Windows Defender Advanced Threat Protection (https://aka.ms/wdatp) helps tell the full story. The full story gives the computer network defender (CND) the most information, allowing them to discover the most indicators of compromise (IOCs) and fully see the actor's persistence in their environment. Depending purely on MD5 hashes and filenames should no longer be considered a strategy.

So how does this happen? Unfortunately, many environments have cultures built around specific tools. Teams are trained to operate in a very specific way, looking at a very specific data set. This is the iceberg effect. It is rare to find a team that can take data from multiple sources, fuse it together at the right pivot points, and build insights from it. Teams must discover what is under the water to build the full picture. It is important to understand why the device that the network sensor flagged as the source of malicious packets might not be the only device impacted by an actor's campaign in the environment!
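To make "fusing data at the right pivot points" a bit more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the three event lists are hand-written stand-ins for a network sensor, an identity source, and an endpoint sensor, and the field names (`host`, `account`, `indicator`), hostnames, and values are hypothetical rather than the schema of any real product. The idea is simply to link hosts to the accounts and indicators seen on them, then walk those links outward from the one host the network tool flagged.

```python
from collections import defaultdict, deque

# Hypothetical events standing in for three separate tools.
# All field names and values are made up for this sketch.
NETWORK_ALERTS = [
    {"host": "ws-01", "indicator": "203.0.113.7"},
]
IDENTITY_EVENTS = [
    {"host": "ws-01", "account": "svc-backup"},
    {"host": "srv-db", "account": "svc-backup"},
    {"host": "ws-02", "account": "alice"},
]
ENDPOINT_EVENTS = [
    {"host": "srv-db", "indicator": "evil.dll"},
    {"host": "srv-file", "indicator": "evil.dll"},
]


def build_graph(*sources):
    """Link each host to every pivot value (account, indicator) seen on it."""
    graph = defaultdict(set)
    for events in sources:
        for event in events:
            host_node = ("host", event["host"])
            for key, value in event.items():
                if key == "host":
                    continue
                pivot_node = (key, value)
                graph[host_node].add(pivot_node)
                graph[pivot_node].add(host_node)
    return graph


def hosts_in_scope(graph, start_host):
    """Breadth-first walk from the alerted host across shared pivot values."""
    start = ("host", start_host)
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return sorted(name for kind, name in seen if kind == "host")


# Tip of the iceberg: the network sensor alone points at one box.
tip = hosts_in_scope(build_graph(NETWORK_ALERTS), "ws-01")

# Under the water: the same walk with identity and endpoint data fused in.
full = hosts_in_scope(
    build_graph(NETWORK_ALERTS, IDENTITY_EVENTS, ENDPOINT_EVENTS), "ws-01"
)

print(tip)   # ['ws-01']
print(full)  # ['srv-db', 'srv-file', 'ws-01']
```

In this toy data the network alert alone scopes the incident to `ws-01`, while pivoting on the shared account and then on the shared file pulls in two more servers. Real data would need far more care, since a widely used account or a common file would drag in hosts that are not compromised, so treat the output as a list of leads to investigate rather than a verdict.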

This blog will dive into the specifics introduced in this post, from methods of persistence that transcend malicious software to understanding the phases of an actor's activity, specifically after the initial foothold has been established in the environment.

Cheers,

Andrew