Office Graph API and Cortana - Chapter 3: Integration with Cortana

French version / Version française

Preface

Welcome to the third and last chapter of this series dedicated to integrating the Office Graph API into a .NET Windows Phone 8.1 application with Cortana. In the previous chapters, we saw how to query the Office Graph in C# and XML to find items in a user's network, connecting securely to Azure Active Directory through the ADAL library. We were able to retrieve items from the graph and display them so that the user can browse through them.

You can download the source code as the attached ZIP file, available at the end of each chapter.

This article is published for TechDays 2015, which will take place from 10 to 12 February 2015 at the Palais des Congrès de Paris. This year I will have the chance to co-present the session dedicated to the Office Graph with Alexandre Cipriani and Stéphane Palluet. Come to our session to see this example and many more.

For this chapter, I want to thank Steve Peschka. Much of the code used here is inspired by his "CloudTopia" example, which was presented at numerous events. I strongly recommend reading his blog post.

Steve is an incredible source of information; thanks a lot.

Chapter 1: Introduction to the Office Graph

Chapter 2: How to make queries by code to the Office Graph?

Chapter 3: Integration with Cortana (this article)

About the Cortana API

In this last section, we focus on how to drive our searches on the graph through voice commands. For a few weeks now in France, Windows Phone 8.1 users have been able to control their phone by voice, thanks to Cortana. Cortana is a true voice recognition interface that lets you speak to your phone to drive its applications.

In this example, we will use the Cortana SDK to build commands that query our business graph in natural language, as shown in the following diagram:

When you develop an application for Cortana, you can use two types of components:

- Speech recognition (SpeechRecognition namespace): listens to the user and recognizes the words and phrases they speak.

- Speech synthesis (SpeechSynthesis namespace): speaks with Cortana's voice so that, for example, our application can respond to user commands.

In addition to integrating these two components, we will see how to teach Cortana to launch our application automatically and pass it the command to run at the same time.

About voice commands

To query the Office Graph by voice, we could, for example, ask the application to display documents along the axes of the user's social graph. These axes could be the people in their circle, their manager, the items that are popular around them, the documents they have read, and so on.

In this example, we rely on spoken instructions to work out which axis the user wants to see. We can query the graph with the commands detailed in the table below. It is important to understand that each of these commands has one or more keywords that are detected in a sentence to trigger the command:

| Command samples | Office Graph query |
| --- | --- |
| "Popular documents" | Equivalent to the Delve home page |
| "My work" | Items recently modified by the user in their various collaborative spaces |
| "Documents I have read" | Items recently viewed by the user |
| "Documents shared with me" | Items recently shared with the user |
| "Around me" | Items recently shared around the user (close network) |
| "Documents from my manager" | Items shared by the user's manager |
| "Documents from my team" | Items recently shared by people on the user's team |
| "Documents from 'Pierre Dupont'" | Items recently modified by Pierre Dupont. For this query, any term after "from" can be used to search for a person's documents. The search works well for first names. For family names, the result depends on Cortana's ability to understand and spell a proper name. |
| "Documents about 'Office'" | Items recently created or modified that relate to a theme or keyword. The keyword "about" triggers a search on the words spoken after it. You can search on a theme, a recently used term, the name of a product or service, etc. |

Now let's see how to implement these commands with Cortana and the Windows Phone voice APIs.

Cortana main classes

To use Cortana, we must include two namespaces corresponding to speech recognition and synthesis libraries:

using Windows.Media.SpeechRecognition;
using Windows.Media.SpeechSynthesis;

How to teach Cortana to speak words and phrases?

We'll start with the SpeechSynthesis part, which lets Cortana speak and answer our voice commands.

To do this, we first initialize an object of type SpeechSynthesizer. During this initialization, we can specify the voice and the language used by the speech engine. In our example, we choose the female voice and the English language, with the following code:

SpeechSynthesizer Synthesizer = new SpeechSynthesizer();
foreach (var voice in SpeechSynthesizer.AllVoices)
{
    if (voice.Gender == VoiceGender.Female && voice.Language.ToLowerInvariant().Equals("en-us"))
    {
        // Use the first matching voice; if none matches, the default voice is kept.
        Synthesizer.Voice = voice;
        break;
    }
}

With the synthesizer object, we can make Cortana say phrases in English. There are two ways to do so:

- Phrases as plain strings, without any formatting, through the SynthesizeTextToStreamAsync method;

- Phrases in SSML (an XML format), through the SynthesizeSsmlToStreamAsync method. The advantage of this format is that you can, for example, control the intonation of the voice on a particular word.

Consider the following example, in which Cortana says "Sorry, I did not understand" when we fail to understand the command spoken by the user.

In SSML, we can represent the sentence as follows to accentuate the intonation of the word "sorry":

<speak version='1.0' xmlns='http://www.w3.org/2001/10/synthesis' xml:lang='en-US'>
  <prosody pitch='+35%' rate='-10%'> Sorry</prosody>
  <prosody pitch='-15%'> I did not understand.</prosody>
</speak>
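When the spoken text is built at run time (for example, to repeat a person's name), concatenating SSML by hand risks producing invalid XML. A minimal sketch, assuming a hypothetical helper named SsmlBuilder that is not part of the original project, could generate the same markup with System.Xml.Linq so that special characters are escaped automatically:

```csharp
using System;
using System.Xml.Linq;

public static class SsmlBuilder
{
    // SSML namespace defined by the W3C Speech Synthesis specification.
    private static readonly XNamespace Ssml = "http://www.w3.org/2001/10/synthesis";

    // Hypothetical helper: wraps two text fragments in <prosody> elements,
    // using the same pitch and rate values as the hand-written sample.
    public static string BuildApology(string stressed, string rest)
    {
        var speak = new XElement(Ssml + "speak",
            new XAttribute("version", "1.0"),
            new XAttribute(XNamespace.Xml + "lang", "en-US"),
            new XElement(Ssml + "prosody",
                new XAttribute("pitch", "+35%"),
                new XAttribute("rate", "-10%"),
                stressed),
            new XElement(Ssml + "prosody",
                new XAttribute("pitch", "-15%"),
                rest));

        // Disable formatting so that no extra whitespace is added between elements.
        return speak.ToString(SaveOptions.DisableFormatting);
    }
}
```

Calling SsmlBuilder.BuildApology("Sorry", " I did not understand.") returns a string that can be passed to SynthesizeSsmlToStreamAsync.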

To have Cortana speak an SSML string, write a method such as the following, which takes the SSML-formatted text as its argument:

public async void StartSpeakingSsml(string ssmlToSpeak)
{
    // Generate the audio stream from the SSML text.
    SpeechSynthesisStream stream = await this.Synthesizer.SynthesizeSsmlToStreamAsync(ssmlToSpeak);
    // Send the stream to the media object.
    var mediaElement = new MediaElement();
    mediaElement.SetSource(stream, stream.ContentType);
    mediaElement.Play();
}

Recognizing the voice command

Now that we have seen how to make Cortana speak, let's look at how to make Cortana listen and how to interpret what it hears in code.

The first step is to initialize the SpeechRecognizer object, which we will use to start listening for the command. With this object, we can also define a handler that is executed when the listening operation completes.

The listening status is returned through the AsyncStatus argument, which indicates the result (Completed or Error). The recognized text is obtained through the GetResults method, which returns an object of type SpeechRecognitionResult.

In the example below, we initialize the SpeechRecognizer, then define the code that runs when a voice command is received. If the status is Completed, we retrieve the text and check whether it contains the keywords "my work". If so, we call the method that queries the Office Graph for the current user's work (LoadMyWork). Otherwise, we call a method that makes Cortana say that the command was not understood.

private async void InitializeRecognizer()
{
    this.Recognizer = new SpeechRecognizer();
    SpeechRecognitionTopicConstraint topicConstraint
        = new SpeechRecognitionTopicConstraint(SpeechRecognitionScenario.Dictation, "Work");
    this.Recognizer.Constraints.Add(topicConstraint);
    await this.Recognizer.CompileConstraintsAsync(); // Required

    recoCompletedAction = new AsyncOperationCompletedHandler<SpeechRecognitionResult>((operation, asyncStatus) =>
    {
        // Update the UI on the dispatcher thread. Do not block with Wait():
        // the handler can be invoked on the UI thread, where waiting would deadlock.
        var ignored = Dispatcher.RunAsync(Windows.UI.Core.CoreDispatcherPriority.Normal, () =>
        {
            this.CurrentRecognizerOperation = null;
            this.SearchTextBox.Text = "Listening your command";
            switch (asyncStatus)
            {
                case AsyncStatus.Completed:
                    SpeechRecognitionResult result = operation.GetResults();
                    if (!String.IsNullOrEmpty(result.Text))
                    {
                        // Compare in lower case so that the keyword match ignores casing.
                        string res = result.Text.ToLowerInvariant();
                        if (res.Contains("my work"))
                            LoadMyWork();
                        else
                            speechHelper.WrongCommand();
                    }
                    break;
                case AsyncStatus.Error:
                    speechHelper.WrongCommand();
                    break;
                default:
                    break;
            }
        });
    });
}
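The handler above only checks for "my work". The other commands from the table could be detected in the same way. The following is a minimal sketch under stated assumptions: the GraphCommand enum, the ParsedCommand class and the CommandParser helper are hypothetical names introduced for illustration, and the keywords are taken from the sample commands listed earlier.

```csharp
using System;

public enum GraphCommand
{
    Unknown, Popular, MyWork, Read, SharedWithMe, AroundMe, Manager, Team, FromPerson, AboutTopic
}

public sealed class ParsedCommand
{
    public ParsedCommand(GraphCommand command, string argument)
    {
        Command = command;
        Argument = argument;
    }

    public GraphCommand Command { get; private set; }

    // Person name or topic (lower-cased), or null for commands without an argument.
    public string Argument { get; private set; }
}

public static class CommandParser
{
    public static ParsedCommand Parse(string recognizedText)
    {
        if (string.IsNullOrWhiteSpace(recognizedText))
            return new ParsedCommand(GraphCommand.Unknown, null);

        string text = recognizedText.Trim().ToLowerInvariant();

        // Fixed keywords are tested first so that "from my manager"
        // is not mistaken for a person named "my manager".
        if (text.Contains("popular")) return new ParsedCommand(GraphCommand.Popular, null);
        if (text.Contains("my work")) return new ParsedCommand(GraphCommand.MyWork, null);
        if (text.Contains("have read")) return new ParsedCommand(GraphCommand.Read, null);
        if (text.Contains("shared with me")) return new ParsedCommand(GraphCommand.SharedWithMe, null);
        if (text.Contains("around me")) return new ParsedCommand(GraphCommand.AroundMe, null);
        if (text.Contains("my manager")) return new ParsedCommand(GraphCommand.Manager, null);
        if (text.Contains("my team")) return new ParsedCommand(GraphCommand.Team, null);

        // Free-form commands: everything after "from" or "about" is the argument.
        string person = TextAfter(text, "from ");
        if (person != null) return new ParsedCommand(GraphCommand.FromPerson, person);

        string topic = TextAfter(text, "about ");
        if (topic != null) return new ParsedCommand(GraphCommand.AboutTopic, topic);

        return new ParsedCommand(GraphCommand.Unknown, null);
    }

    // Returns the trimmed text that follows the keyword, or null if there is none.
    private static string TextAfter(string text, string keyword)
    {
        int index = text.IndexOf(keyword, StringComparison.Ordinal);
        if (index < 0) return null;
        string rest = text.Substring(index + keyword.Length).Trim();
        return rest.Length > 0 ? rest : null;
    }
}
```

In the completion handler, a switch on CommandParser.Parse(result.Text).Command could then replace the single "my work" test. The order of the checks is a design choice: a sentence such as "documents about popular music" would be classified as Popular here, so a real application may prefer stricter matching.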

We will now look at how to start listening with Cortana in the application. At the click of a button (the microphone in our case), we start Cortana's active listening. To do this, we use the RecognizeAsync method and specify the handler that is called when the listening operation completes:

private void StartListening()
{
    try
    {
        beepStart.Play();
        this.SearchTextBox.Text = "Listening...";
        // Start listening to the user and set up the completion handler for the result.
        // This runs when you press the button to start listening.
        this.CurrentRecognizerOperation = this.Recognizer.RecognizeAsync();
        this.CurrentRecognizerOperation.Completed = recoCompletedAction;
    }
    catch (Exception)
    {
        beepStop.Play();
        recoCompletedAction.Invoke(null, AsyncStatus.Error);
    }
}

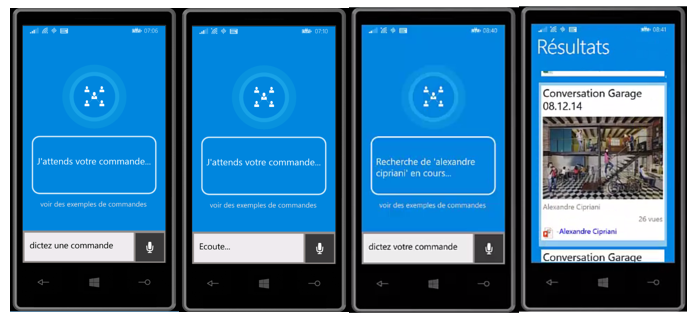

Let's now see how the application works:

| Screenshot | Description |
| --- | --- |
| #1 | The user arrives on the interface of the application. They can click on the microphone to start listening for the voice command. |
| #2 | The text in the bottom text box changes to "Listening...". |
| #3 | If the voice command has been understood, Cortana gives a voice confirmation and the query is displayed in the central text box. |
| #4 | The Office Graph query returns the results, and we display them to the user through the interface built in the previous chapter. |

We are now able to issue voice commands through Cortana, understand the commands spoken, and query the Office Graph based on the criteria selected by the user.

Now for the best part: launching a search on the Office Graph without even starting the My Office Graph application manually!

How to use Cortana to launch our voice commands directly?

This is one of the most impressive features of Cortana: its ability to learn new commands through the applications installed on the phone.

When an application uses this feature, the user can send voice commands to it directly from Cortana's main interface. Cortana opens the application and "sends" it the identified voice command. Cortana knows that a command is intended for a given application thanks to a system of prefixes.

In our example, we define that when a sentence starts with "Office Graph", Cortana knows that the command is intended for the Office Graph and starts the My Office Graph application. It also analyzes the text after the words "Office Graph" to detect a built-in command and run it within the application.

We could define the following commands to start queries: "Office Graph my work", "Office Graph, search the shared items", etc.

To do this, we need to create a voice command definition file, an XML file that is included in the application. This file contains the application's prefix as well as the different commands. Each command defines its sample text and the words to listen for, whether optional (in square brackets) or mandatory:

<?xml version="1.0" encoding="utf-8"?>
<!-- Be sure to use the new v1.1 namespace to utilize the new PhraseTopic feature -->
<VoiceCommands xmlns="http://schemas.microsoft.com/voicecommands/1.1">
  <CommandSet xml:lang="en-us" Name="officeGraphCommandSet">
    <!-- The CommandPrefix provides an alternative to your full app name for invocation -->
    <CommandPrefix> Office Graph </CommandPrefix>
    <!-- The CommandSet Example appears in the global help alongside your app name -->
    <Example> load my work </Example>
    <Command Name="popular">
      <Example> popular documents </Example>
      <ListenFor>[Display] [Find] [Search] popular [elements] [items] [documents]</ListenFor>
      <Feedback>Search popular items in progress...</Feedback>
      <Navigate />
    </Command>
    ...
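To make the bracket notation of ListenFor concrete, here is a small illustrative sketch. It is not how Cortana's recognizer is implemented; it only mimics the rule that words in square brackets are optional and the other words are mandatory. The ListenForPattern class is a hypothetical helper, and it assumes single-word bracket groups and phrases without punctuation.

```csharp
using System;
using System.Text;
using System.Text.RegularExpressions;

public static class ListenForPattern
{
    // Returns true when the phrase satisfies the ListenFor pattern:
    // [word] tokens may be omitted, plain tokens must be present, in order.
    public static bool Matches(string listenFor, string phrase)
    {
        char[] space = { ' ' };
        var regex = new StringBuilder("^");

        foreach (string token in listenFor.Split(space, StringSplitOptions.RemoveEmptyEntries))
        {
            bool optional = token.Length > 2 && token[0] == '[' && token[token.Length - 1] == ']';
            string word = Regex.Escape(optional ? token.Substring(1, token.Length - 2) : token);

            // Each word is followed by exactly one space in the normalized phrase.
            regex.Append(optional ? "(?:" + word + "\\s)?" : word + "\\s");
        }
        regex.Append("$");

        // Collapse repeated spaces and add a trailing space so every word ends with one.
        string normalized = string.Join(" ", phrase.Split(space, StringSplitOptions.RemoveEmptyEntries)) + " ";
        return Regex.IsMatch(normalized, regex.ToString(), RegexOptions.IgnoreCase);
    }
}
```

With the pattern of the "popular" command, both "popular documents" and "search popular items" match, whereas "find documents" does not because the mandatory word "popular" is missing.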

To load the voice commands into Cortana, we can run the method below when the application initializes. This method installs the commands contained in the VoiceCommandDefinition_8.1.xml file of the app package:

/// <summary>
/// Install the voice commands
/// </summary>
private async void InstallVoiceCommands()
{
    const string wp81vcdPath = "ms-appx:///VoiceCommandDefinition_8.1.xml";
    Uri vcdUri = new Uri(wp81vcdPath);
    StorageFile file = await StorageFile.GetFileFromApplicationUriAsync(vcdUri);
    await VoiceCommandManager.InstallCommandSetsFromStorageFileAsync(file);
}

Once the commands have been installed, the application is visible on the "See more samples" screen inside the Cortana interface. To check this, we simply open Cortana (with the phone's search button, for example), scroll all the way down to see the installed applications that use Cortana, and tap our app "My Office Graph"; the list of available sample commands is then displayed:

Now that the commands are installed, Cortana is able to recognize our command when the user speaks, summarize the command it understood, and then launch the application. The following screenshots show the process for the user:

When Cortana launches our application, we are notified in code through the OnActivated event of our App class. Cortana sends us arguments of kind "VoiceCommand", from which we can get the name of the detected command and, where applicable, the page that must be opened for this command:

protected override void OnActivated(IActivatedEventArgs args)
{
    // When a Voice Command activates the app, this method is going to
    // be called and OnLaunched is not. Because of that we need similar
    // code to the code we have in OnLaunched.
    // For VoiceCommand activations, the activation Kind is ActivationKind.VoiceCommand.
    if (args.Kind == ActivationKind.VoiceCommand)
    {
        // Since we know this is the kind, a cast will work fine.
        VoiceCommandActivatedEventArgs vcArgs = (VoiceCommandActivatedEventArgs)args;
        // The name of the recognized command is the first element of the rule path
        // (First() requires "using System.Linq;").
        string voiceCommandName = vcArgs.Result.RulePath.First();
        // The NavigationTarget retrieved here is the value of the Target attribute in the
        // Voice Command Definition xml Navigate node.
        string target = vcArgs.Result.SemanticInterpretation.Properties["NavigationTarget"][0];
        …
    }
}
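Once the command name is known, the application still has to decide which Office Graph search to run. One possible approach, sketched here with hypothetical names, is a lookup table from command names to queries. Only the "popular" name appears in the voice command file excerpt; the other keys and the VoiceCommandRouter class are assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;

public static class VoiceCommandRouter
{
    // Keys are Command Name values from the voice command file.
    // "popular" comes from the article; "myWork" and "sharedWithMe" are hypothetical.
    private static readonly Dictionary<string, string> QueryByCommand =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)
        {
            { "popular", "Popular documents" },
            { "myWork", "My work" },
            { "sharedWithMe", "Documents shared with me" },
        };

    // Returns false when the command name is null or not registered.
    public static bool TryGetQuery(string voiceCommandName, out string query)
    {
        query = null;
        return voiceCommandName != null
            && QueryByCommand.TryGetValue(voiceCommandName, out query);
    }
}
```

In OnActivated, the application could call VoiceCommandRouter.TryGetQuery(voiceCommandName, out query) and fall back to the "command not understood" message when it returns false.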

We can now browse our business social graph in a few seconds by voice. We can keep up with the news of our network in the organization and not miss any document, presentation, Excel workbook, or even video published around us!

We have seen that it is simple to query the business graph and integrate it into a third-party application. The Office Graph is powerful and offers a great way to add a social dimension to any enterprise application.

Using the same principles as those detailed in this article, we could display a wide range of information in an app or on an intranet site to help end users find or identify what they need in seconds.

Thank you for your interest in this article, and have fun with the Office Graph!