Cross-Process Resource Management - do we need it now?

At the heart of the native Concurrency Runtime (ConcRT) in Visual Studio 2010 is a user-mode cooperative scheduler that is responsible for executing tasks. While the Windows operating system schedules threads in a preemptive manner, the ConcRT scheduler schedules tasks cooperatively. That is, regardless of how many tasks are ready to be executed by the scheduler, only a fixed number are allowed to run at a time, and this number is usually equal to the number of CPUs or cores on the system. Until one of these tasks voluntarily relinquishes the underlying core, a different task is not allowed to execute. This reduces the overhead from constantly switching between tasks.
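To make the cooperative model concrete, here is a toy scheduler in portable C++. It is not ConcRT's implementation or API: `ToyCooperativeScheduler` is a hypothetical name. The sketch assumes a fixed pool of worker threads (in the real runtime, roughly one per core). Each worker runs a task to completion before taking the next one, and the scheduler records the peak number of tasks running at once.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical toy scheduler (not the ConcRT API): a fixed number of workers,
// each running one task to completion before taking the next one.
class ToyCooperativeScheduler {
public:
    explicit ToyCooperativeScheduler(std::size_t workers) : workers_(workers) {
        assert(workers > 0);
    }

    // Queue a task; it becomes "ready" but does not run yet.
    void schedule(std::function<void()> task) {
        std::lock_guard<std::mutex> lock(mutex_);
        ready_.push(std::move(task));
    }

    // Drain the ready queue with exactly workers_ threads. A worker moves on to
    // another task only when the current task returns (relinquishes the core).
    void run() {
        std::vector<std::thread> threads;
        for (std::size_t i = 0; i < workers_; ++i) {
            threads.emplace_back([this] { worker_loop(); });
        }
        for (auto& t : threads) t.join();
    }

    // Largest number of tasks observed running at the same time.
    int peak_running() const { return peak_.load(); }

private:
    void worker_loop() {
        for (;;) {
            std::function<void()> task;
            {
                std::lock_guard<std::mutex> lock(mutex_);
                if (ready_.empty()) return;  // nothing left to run
                task = std::move(ready_.front());
                ready_.pop();
            }
            // Record concurrency, then run the task to completion; the scheduler
            // itself never interrupts a running task.
            int now = ++running_;
            int seen = peak_.load();
            while (now > seen && !peak_.compare_exchange_weak(seen, now)) {
            }
            task();
            --running_;
        }
    }

    std::size_t workers_;
    std::mutex mutex_;
    std::queue<std::function<void()>> ready_;
    std::atomic<int> running_{0};
    std::atomic<int> peak_{0};
};
```

Suppose you schedule 100 tasks on a 4-worker instance and call `run()`. All of them execute, and `peak_running()` never exceeds 4, however many tasks are queued. A real cooperative scheduler also lets a task give up its core before it finishes, for example when it blocks on a cooperative synchronization primitive. This sketch only models run-to-completion.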

The runtime does, however, allow you to create more than one scheduler within the same process. If each of these schedulers runs as many tasks concurrently as there are cores on the system, the OS has to schedule these sets of tasks preemptively. So even though each scheduler tries to minimize overhead by keeping a fixed number of tasks running at a time, adding another scheduler to the mix can cost us those benefits. To alleviate this problem, the Concurrency Runtime’s Resource Manager steps in and reduces the number of cores that each scheduler can work with. For example, if two schedulers were executing tasks on a machine with 4 cores, each scheduler would get 2 cores. Over time, as workloads change, cores can be reassigned from one scheduler to the other.
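The post describes the Resource Manager's behavior only through the even 2/2 split. The following is therefore a simplified illustration, not the real policy. The hypothetical `allocate_cores` function hands out cores one at a time, round-robin, to any scheduler that still wants more. With equal demand it reproduces the 2/2 split. When one scheduler's demand drops, the freed core goes to the other, which loosely mimics reassignment as workloads change.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical round-robin allocator; not ConcRT's actual Resource Manager policy.
// requested[i] is the number of cores scheduler i would like to use.
// Returns the number of cores granted to each scheduler.
std::vector<unsigned> allocate_cores(unsigned total_cores,
                                     const std::vector<unsigned>& requested) {
    assert(total_cores > 0);
    std::vector<unsigned> granted(requested.size(), 0);
    unsigned remaining = total_cores;
    bool progress = true;
    // Each round gives at most one core to every scheduler that is still below
    // its request; stop when cores run out or nobody wants more.
    while (remaining > 0 && progress) {
        progress = false;
        for (std::size_t i = 0; i < requested.size() && remaining > 0; ++i) {
            if (granted[i] < requested[i]) {
                ++granted[i];
                --remaining;
                progress = true;
            }
        }
    }
    return granted;
}
```

`allocate_cores(4, {4, 4})` yields `{2, 2}`, and `allocate_cores(4, {1, 4})` yields `{1, 3}`.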

Now let’s extend this problem to multiple processes. Say a ConcRT process is running on a 4-core machine at 100% CPU utilization. What happens if two such processes run simultaneously? It turns out that for some applications, the time taken to complete two instances running at the same time is a little more than twice the time it takes a single instance to complete. The extra time comes from the overhead of context switching between the two processes. What if there were a global Resource Manager, similar to ConcRT’s in-process Resource Manager, that could control the number of cores each process is allowed to use? Let’s simulate this by restricting each process to a subset of the cores on the system: say the first process can use only cores 0 and 1, and the second only cores 2 and 3. Now each ConcRT process executes 2 tasks at a time instead of 4, and the number of context switches drops dramatically.
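The post does not say how the processes were restricted to their subsets of cores. One plausible approach, assumed here, is to give each process a processor affinity mask, which on Windows could be applied with `SetProcessAffinityMask`. The hypothetical helper below computes disjoint, contiguous masks so that `processes` instances split `cores` cores between them.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: affinity mask (bit c set = core c allowed) for process
// `index` out of `processes`, giving each process a contiguous block of cores.
std::uint64_t partition_mask(unsigned cores, unsigned processes, unsigned index) {
    assert(cores > 0 && cores <= 64);
    assert(processes > 0 && processes <= cores && index < processes);
    unsigned first = index * cores / processes;       // first core, inclusive
    unsigned last = (index + 1) * cores / processes;  // last core, exclusive
    std::uint64_t mask = 0;
    for (unsigned c = first; c < last; ++c) {
        mask |= std::uint64_t{1} << c;
    }
    return mask;
}
```

For the 4-core, 2-process case above, `partition_mask(4, 2, 0)` is `0x3` (cores 0 and 1) and `partition_mask(4, 2, 1)` is `0xC` (cores 2 and 3). When the core count does not divide evenly, some processes get one extra core.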

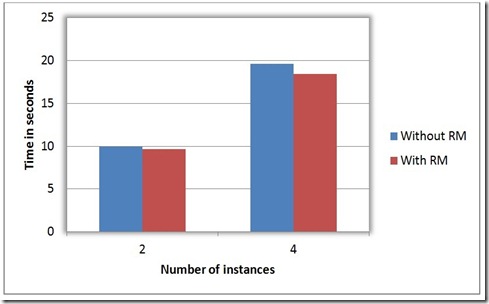

Here’s some data we measured while running multiple instances of an application that performs a bitonic sort using ConcRT.
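The post does not include the benchmark's source. For readers unfamiliar with the algorithm, here is a sequential sketch of the classic recursive bitonic sort, assuming the input length is a power of two. The two recursive `bitonic_sort_range` calls operate on disjoint halves of the array, and so do the two `bitonic_merge` calls. Those are the natural places where a ConcRT version could create tasks, for example with `concurrency::parallel_invoke`.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Turn the bitonic run a[lo, lo + n) into ascending (up) or descending order.
void bitonic_merge(std::vector<int>& a, std::size_t lo, std::size_t n, bool up) {
    if (n < 2) return;
    std::size_t half = n / 2;
    // Compare-and-swap each element with its partner half a run away.
    for (std::size_t i = lo; i < lo + half; ++i) {
        if ((a[i] > a[i + half]) == up) std::swap(a[i], a[i + half]);
    }
    bitonic_merge(a, lo, half, up);         // independent of the next call
    bitonic_merge(a, lo + half, half, up);
}

// Sort a[lo, lo + n): build a bitonic run (ascending half, descending half), then merge.
void bitonic_sort_range(std::vector<int>& a, std::size_t lo, std::size_t n, bool up) {
    if (n < 2) return;
    std::size_t half = n / 2;
    bitonic_sort_range(a, lo, half, true);          // independent of the next call
    bitonic_sort_range(a, lo + half, half, false);
    bitonic_merge(a, lo, n, up);
}

void bitonic_sort(std::vector<int>& a) {
    assert((a.size() & (a.size() - 1)) == 0);  // size must be a power of two (or 0)
    bitonic_sort_range(a, 0, a.size(), true);
}
```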

We measured the total time it took to run 2 instances of the application with and without our simulated Resource Manager, and did the same for 4 instances. The graph clearly shows that, for this particular application, separating the processes and allowing each to use a fraction of the cores is an improvement over letting them compete for all the cores. As a side note, cache-sensitive applications experience much more overhead from context switching than applications that are not cache-sensitive; for the latter, the overhead is negligible. A cache-sensitive application is one that, for example, repeatedly accesses the same memory, so that keeping that memory in the L1 cache improves its performance.
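To illustrate the distinction, here are two hypothetical kernels. The first makes many passes over a 16 KiB buffer, which is assumed to fit in a 32 KiB L1 data cache. Most of its loads hit L1 as long as nothing evicts the buffer, and a context switch to another process's thread can do exactly that. The second touches each element of a large buffer only once. It gets little reuse from the cache to begin with, so it loses little when a context switch occurs.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Cache-sensitive pattern: many passes over the same small working set.
// 4096 elements * 4 bytes = 16 KiB, assumed to fit in a 32 KiB L1 data cache.
std::uint64_t reuse_small_buffer(const std::vector<std::uint32_t>& buf, int passes) {
    assert(passes >= 0);
    std::uint64_t sum = 0;
    for (int p = 0; p < passes; ++p) {
        for (std::uint32_t v : buf) sum += v;  // same cache lines every pass
    }
    return sum;
}

// Cache-insensitive (streaming) pattern: each element of a large buffer is
// read once, so there is little cached state for a context switch to destroy.
std::uint64_t stream_once(const std::vector<std::uint32_t>& buf) {
    std::uint64_t sum = 0;
    for (std::uint32_t v : buf) sum += v;
    return sum;
}
```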

It is very likely that as multicore architectures become increasingly mainstream, applications written for future machines will be highly concurrent. Depending on the types of applications, letting all processes span all the existing cores on the system may not be the best core allocation strategy.

It is easy to partition cores and see benefits with well-defined workloads whose nature is known in advance, as we showed above. But it is not as easy to come up with a general core allocation strategy that will be 'successful' for all processes running on a Windows PC, where success means a noticeable improvement in overall quality of service. So we're asking you, the app writer: have you encountered scenarios in which multiple concurrent processes running simultaneously noticeably degrade performance or quality of service, and in which the problem could be alleviated if each process reduced its concurrency to accommodate the others? In other words, have you seen evidence that system-wide CPU resource management is needed to solve problems that exist today?

What we're really interested in are the effects observed in real-world scenarios. Are you working on a concurrent, CPU-bound app that is expected to run alongside other highly concurrent apps? Do you have data showing that system-wide CPU resource management would significantly improve your scenario?

Please send us your feedback and any data you might have.

Genevieve Fernandes, Parallel Computing Platform Team

Comments

- Anonymous

April 08, 2010

I think everyone who has tried to use the VS 2008 and VS 2010 build systems in multithreaded mode has faced the problem raised in this article. First, there is a compiler switch that tells the compiler to use a number of cores to build a single project. Second, there is a corresponding switch for MSBuild or VCBuild that tells it to build multiple projects at the same time. So when you specify both, you can easily end up with several compiler and linker processes competing for more cores than you have. Again, you may need to start limiting the number of threads used by both the compiler and the build system to reduce overhead. And you are not even the developer of the concurrent applications involved (cl and MSBuild), although, of course, the scenario will depend greatly on the solution being built. With the introduction of VS 2010 and the Concurrency Runtime, I believe we will see more and more highly concurrent apps in the near future. My opinion is that a system-wide resource manager will definitely be a win here. I think an appropriate solution would be a special global manager that manages only ConcRT apps, for example, leaving other (legacy) apps untouched. It might even provide an interface through which a managed app could give hints about its behavior.