Running a Web API using Docker and Kubernetes

Context

As companies continuously seek ways to become more Agile and to embrace DevOps culture and practices, new design principles have emerged that are more closely aligned with those aspirations. One such design principle that has gained popularity and adoption lately is Microservices. By decomposing applications into smaller independent components, companies are able to achieve the following benefits:

- Ability to scale specific components of the system. If “Feature1” is experiencing an unexpected rise in traffic, that component can be scaled without scaling the other components, which makes more efficient use of infrastructure

- Improved fault isolation and resiliency: the system can remain functional despite the failure of certain modules

- Ease of maintenance: teams can work on system components independently of one another, which enhances agility and accelerates value delivery

- Flexibility to use a different technology stack for each component if desired

- Container-based deployment, which optimizes components’ use of computing resources and streamlines the deployment process

I wrote a detailed blog on the topic of Microservices here

In this blog, I will focus on the last bullet. We will see how easy it is to get started with Containers and use them as the deployment mechanism for our Microservices. I will also cover the challenge that teams might face when dealing with a large number of Microservices and Containers, and how to overcome that challenge.

Creating a simple web API with Docker Support using Visual Studio

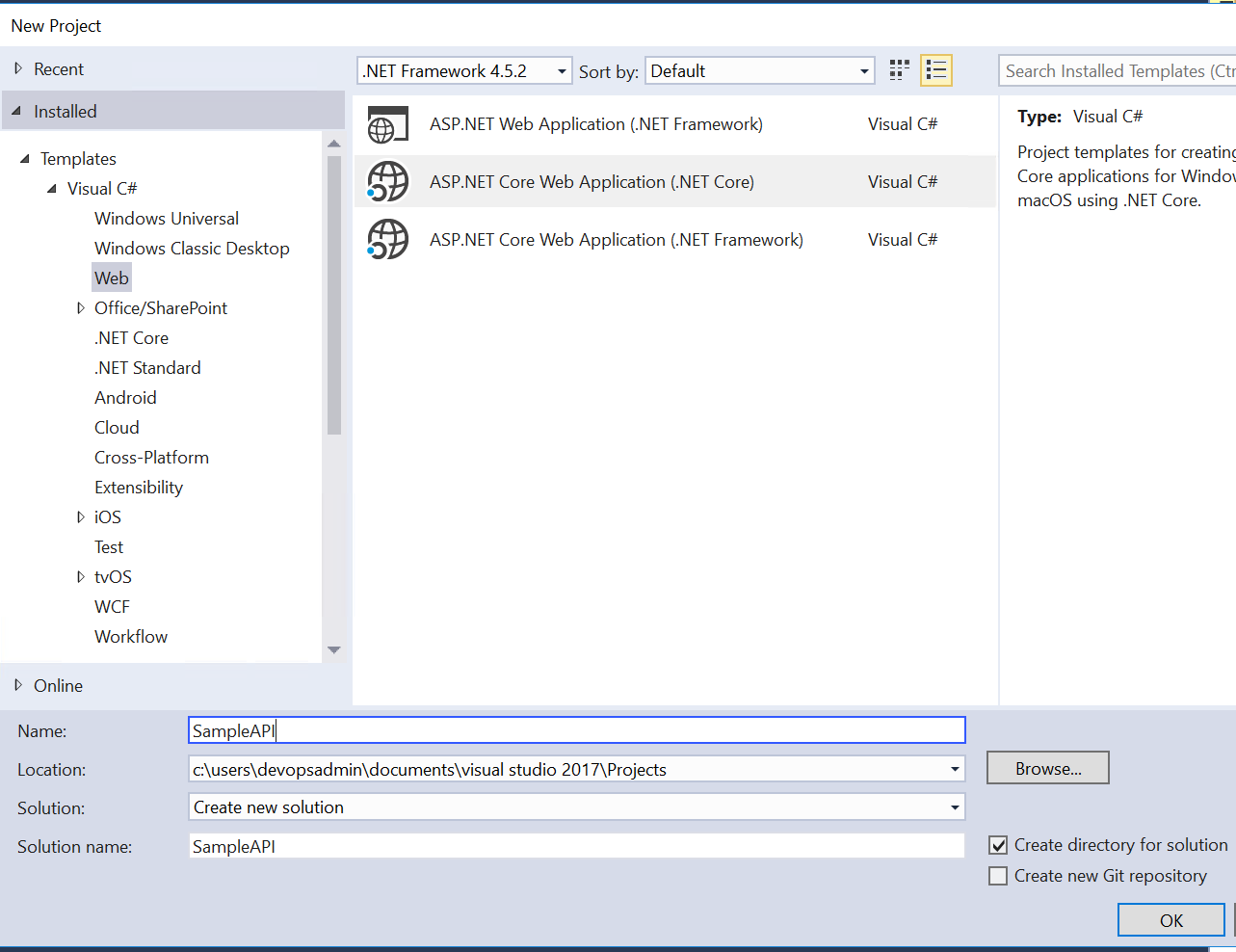

For this section, I am using Visual Studio 2017 Enterprise Edition to create the Web API.

- In Visual Studio, go to File => New => Project => Web => ASP.NET Core Web Application (.NET Core)

- Select Web API, make sure that Enable Docker Support is checked, and then click OK to generate the code for the Web API

Note that the code generated by Visual Studio has the files needed by Docker to compile and run the generated application

- Open the docker-compose.yml file and prefix the image name with your Docker repository name. For example, if your Docker repository name is xyz, then the entry in docker-compose.yml should be image: xyz/sampleapi. Here is how that file should look:

version: '3'

services:
  sampleapi:
    image: xyz/sampleapi
    build:
      context: ./SampleAPI
      dockerfile: Dockerfile

- Check the generated Web API code into a version control system. You can use the Git repository within Visual Studio Team Services for free here

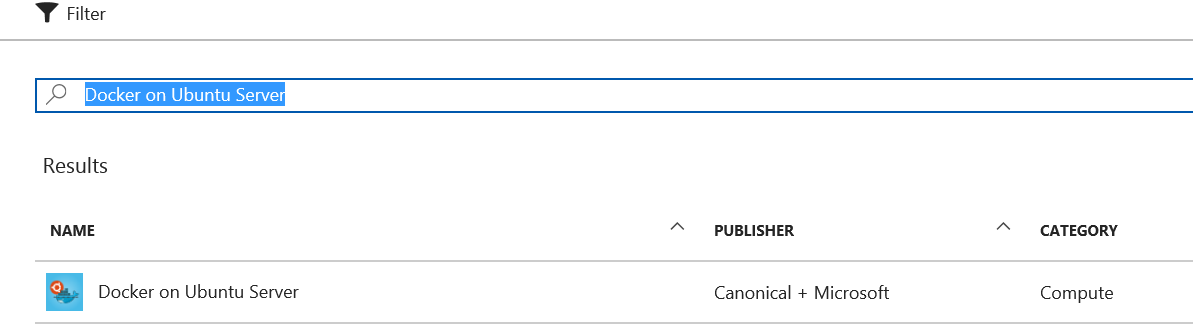

- Check out the code on an Ubuntu machine with Docker Engine installed. In Azure, you can provision an Ubuntu machine with Docker using “Docker on Ubuntu Server” as shown below

- Run the code by navigating to the directory where the repository was cloned and running the following command

docker-compose -f docker-compose.ci.build.yml up && docker-compose up

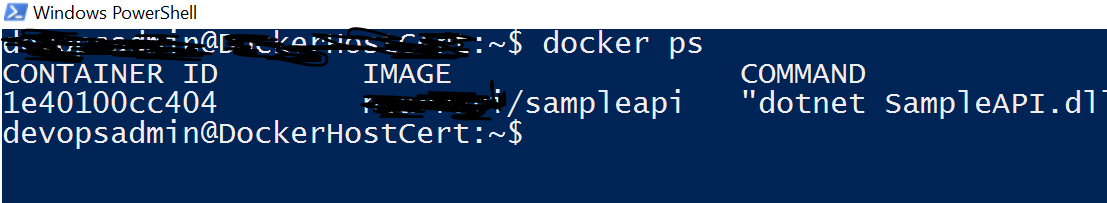

- Verify that the container is running by entering this command

docker ps

- This should return the container that was just created in the previous step. The output should look something similar to below

- Finally, push the Docker image you created (i.e. xyz/sampleapi) to the Docker image registry

docker push xyz/sampleapi:latest
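The verify and push steps above can be wrapped in a small script. The following is a minimal sketch, assuming a bash-compatible shell on the Ubuntu machine and that you have already authenticated with docker login. The function names running_containers_for and push_if_running are hypothetical helpers, not part of Docker or of the generated project.

```shell
# Hypothetical helpers; the names are illustrative only.

# Print the names of running containers that were started from the given image.
# `docker ps` is filtered by image ("ancestor") and formatted to show names only.
running_containers_for() {
  local image="$1"
  docker ps --filter "ancestor=${image}" --format '{{.Names}}'
}

# Push the image only if at least one container built from it is running.
push_if_running() {
  local image="$1"
  local names
  names="$(running_containers_for "$image")"
  if [ -z "$names" ]; then
    echo "no running container found for ${image}" >&2
    return 1
  fi
  echo "running: ${names}"
  docker push "${image}:latest"
}

# Example (replace xyz with your own repository name):
#   push_if_running xyz/sampleapi
```

Guarding the push this way avoids publishing an image that failed to start, at the cost of one extra docker ps call.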

As your application becomes more popular and users ask for more features, new microservices will need to be created. As the number of microservices increases, so does the complexity of deploying, monitoring, and scaling them and of managing the communication among them. Luckily, there are orchestration tools available that make this task more manageable.

Orchestrating Microservices

Managing a large number of Microservices can be a daunting task. Not only will you need to track their health, you will also need to ensure that they are scaled properly, deployed without interrupting users, and able to recover from failures. The following are orchestrators that have been created to meet this need:

- Service Fabric

- Docker Swarm

- DC/OS

- Kubernetes

Stacking up these tools against one another is out of scope for this blog. Instead, I will focus more on how we can deploy the Web API we created earlier into Kubernetes using Azure Container Service.

Creating Kubernetes in Azure Container Service (ACS)

For this section, I am using a Windows Server 2016 machine. You can use any platform to achieve this but you might need to make a few minor changes to the steps detailed below

- Install Azure CLI using this link

- Login to Azure using Azure CLI by entering the following command

az login

- Follow the steps to login to Azure using Azure CLI. Also if you have access to multiple subscriptions, make sure the right subscription is selected

az account set --subscription "your subscription ID"

- Create a resource group

az group create -l eastus -n webAPIK8s

- Create a Kubernetes cluster in ACS under the resource group you just created

az acs create -n myKubeCluster -d myKube -g webAPIK8s --generate-ssh-keys --orchestrator-type kubernetes --agent-count 1 --agent-vm-size Standard_D1

- Download the Kubernetes client by entering this command:

az acs kubernetes install-cli --install-location=C:\kubectl\kubectl.exe

Note: Ensure that the C:\kubectl folder exists before running this command; otherwise, the command will throw an error

- Get the Kubernetes cluster credentials

az acs kubernetes get-credentials --resource-group=webAPIK8s --name=myKubeCluster

- Tunnel into the Kubernetes cluster

C:\kubectl\kubectl.exe proxy

- Open a web browser and navigate to http://localhost:8001/ui (kubectl proxy serves plain HTTP on localhost)
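As an alternative to the dashboard, the command line can confirm that the downloaded credentials work and that the cluster is reachable. This is a minimal sketch, assuming a bash-compatible shell with kubectl on the PATH (rather than C:\kubectl\kubectl.exe). ready_node_count is a hypothetical helper name.

```shell
# Hypothetical helper; assumes kubectl is on the PATH and already has credentials.

# Count cluster nodes whose STATUS column reads exactly "Ready".
# Nodes reporting a compound status such as "Ready,SchedulingDisabled" are not counted.
ready_node_count() {
  kubectl get nodes --no-headers | awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Example:
#   echo "schedulable Ready nodes: $(ready_node_count)"
```

A count of zero usually means either the credentials were not picked up or the agent nodes are still provisioning.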

Deploying the Web API

To deploy to the Kubernetes cluster, a deployment descriptor is needed. Create a file called sampleapi.yml on the machine you used to connect to the Kubernetes cluster and fill it with the following:

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: sampleapi-deployment
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: sampleapi
    spec:
      containers:
      - name: sampleapi
        image: xyz/sampleapi:latest
        ports:
        - containerPort: 80

- Deploy the app by opening a command line, navigating to where the yml file is located and running

C:\kubectl\kubectl.exe apply -f sampleapi.yml

- To expose the API outside of the cluster, run the following command

C:\kubectl\kubectl.exe expose deployments sampleapi-deployment --port=80 --type=LoadBalancer

- Periodically check whether the service is exposed. Keep running this command until the service gets an External-IP

C:\kubectl\kubectl.exe get services

- Once the service is exposed, obtain its external IP (e.g. xxx.xxx.xxx.xxx) and check whether you can access the API by using either a REST client (e.g. Postman) or simply opening a web browser and entering the following address. The service was exposed on port 80, so use plain HTTP:

http://xxx.xxx.xxx.xxx/api/values
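Rather than rerunning get services by hand, the wait for an external IP can be scripted. The following is a minimal sketch, assuming a bash-compatible shell with kubectl on the PATH and a service named sampleapi-deployment (the default name that kubectl expose gives the service for that deployment). The function names, attempt count, and delay are hypothetical choices.

```shell
# Hypothetical helpers; assumes kubectl is on the PATH and already has credentials.

# Print the external IP of a LoadBalancer service, or nothing while it is pending.
service_external_ip() {
  local service="$1"
  kubectl get service "$service" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null
}

# Poll until the service has an external IP or the attempts run out.
# Arguments: service name, max attempts (default 30), delay in seconds (default 10).
wait_for_external_ip() {
  local service="$1"
  local attempts="${2:-30}"
  local delay="${3:-10}"
  local ip
  local i=0
  while [ "$i" -lt "$attempts" ]; do
    ip="$(service_external_ip "$service" || true)"
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "timed out waiting for ${service}" >&2
  return 1
}

# Example:
#   ip="$(wait_for_external_ip sampleapi-deployment)" && curl "http://${ip}/api/values"
```

The bounded loop means a misconfigured service fails with a clear message instead of polling forever.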

Conclusion

Working with a large number of Microservices can be challenging, and creating an orchestrator cluster of any type can sometimes be hard. With Azure Container Service (ACS), creating a cluster can be as easy as entering one command. Visual Studio can also be used to jump-start a project that has Docker support. I hope this blog was informative. My next blog will cover how CI/CD can be implemented with this scenario.

Please leave your feedback…