Integrating PolyBase with Cloudera using Active Directory Authentication

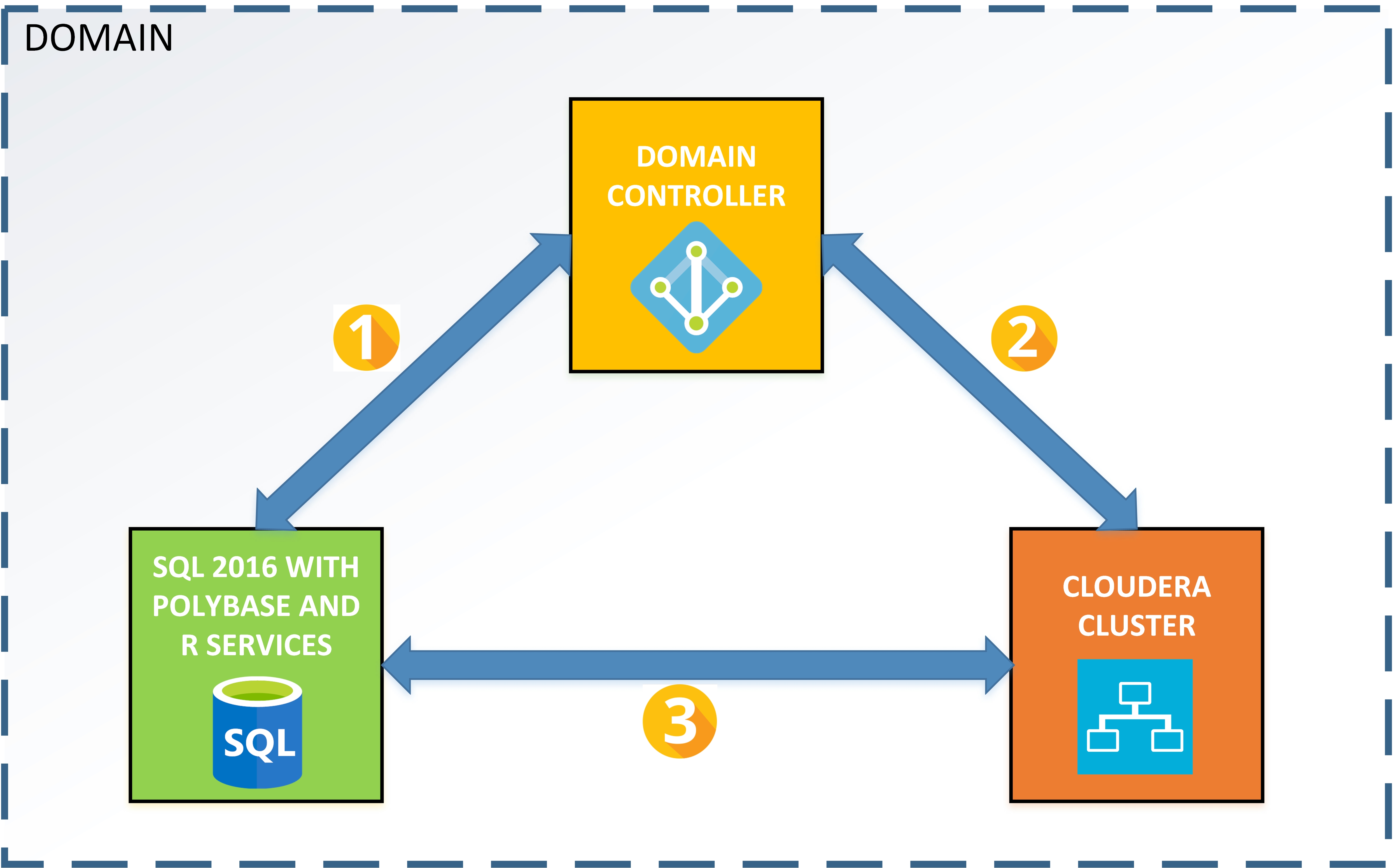

This article outlines the steps to use PolyBase in SQL 2016(including R-Services) with a Cloudera Cluster and setup authentication using Active Directory in both SQL 2016 and Cloudera.

Prerequisites

- Cloudera Cluster

- Active Directory with Domain Controller

- SQL Server 2016 with PolyBase and R-Services installed

NOTE: We have tested the configuration using the Cloudera Cluster 5.5 running on CentOS 6.6, SQL Server 2016 running on Windows Server 2012 R2 and Active Directory with Domain Controller running on Windows Server 2012 R2. Other Windows Server and CentOS operating systems might also work in this configuration.

All the prerequisites above must be in the same network and domain say (CORP.CONTOSO.COM). After the prerequisites are completed, we will follow the steps listed below in order:

- Connecting SQL to AD

- Connecting Cloudera to AD

- Connecting PolyBase to Cloudera

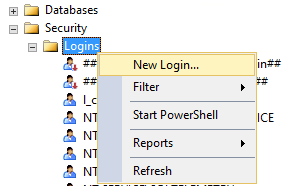

Connecting SQL 2016 with AD

Since SQL 2016 and DC are in the same domain CORP.CONTOSO.COM – you should be able to create a new login in SQL Server from an existing user in CORP.CONTOSO.COM

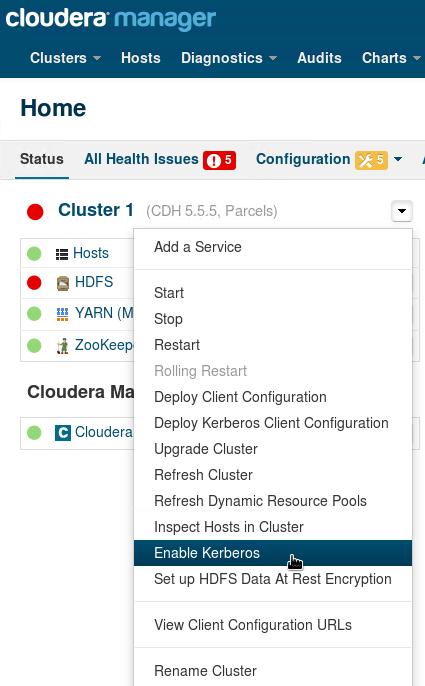

Connecting Cloudera with AD

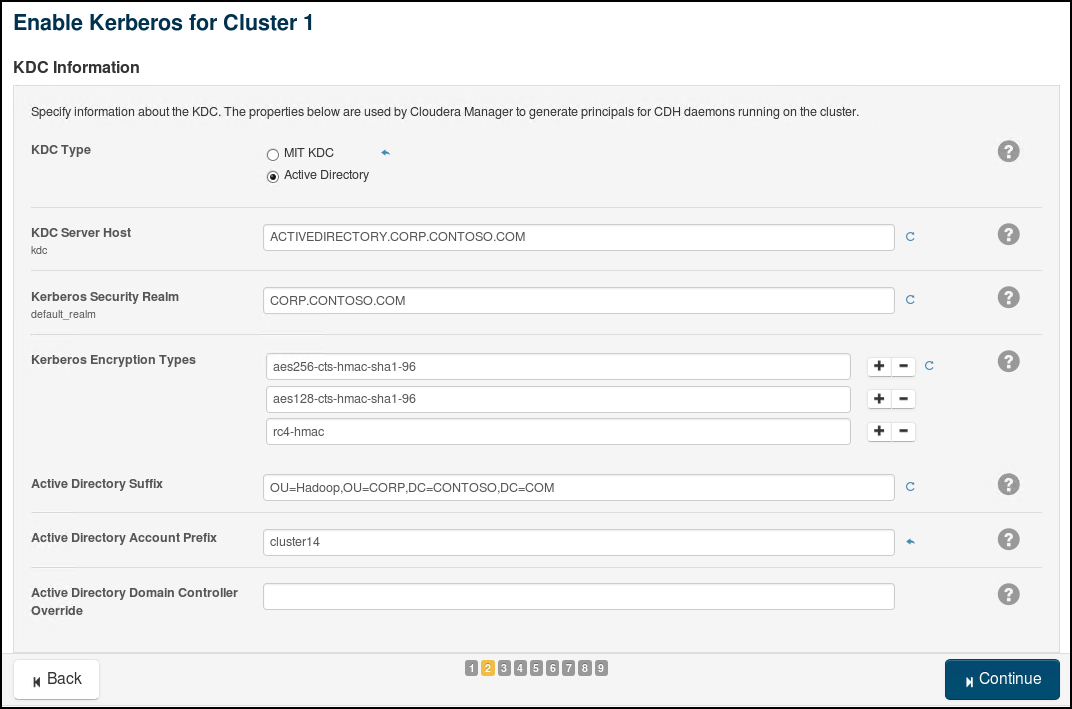

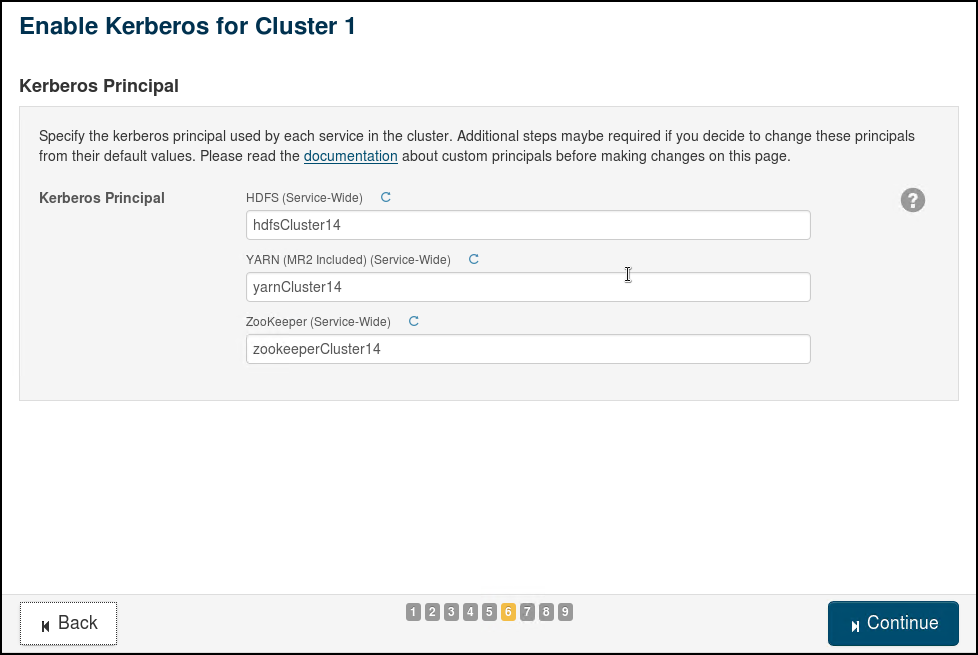

For all usernames and principals, we will use the suffixes like Cluster14 for name-scalability.

- Active Directory setup:

- Create a new Organizational Unit for Hadoop users in AD say (OU=Hadoop, OU=CORP, DC=CONTOSO, DC=COM).

- Create a hdfs superuser : hdfsCluster14@CORP.CONTOSO.COM

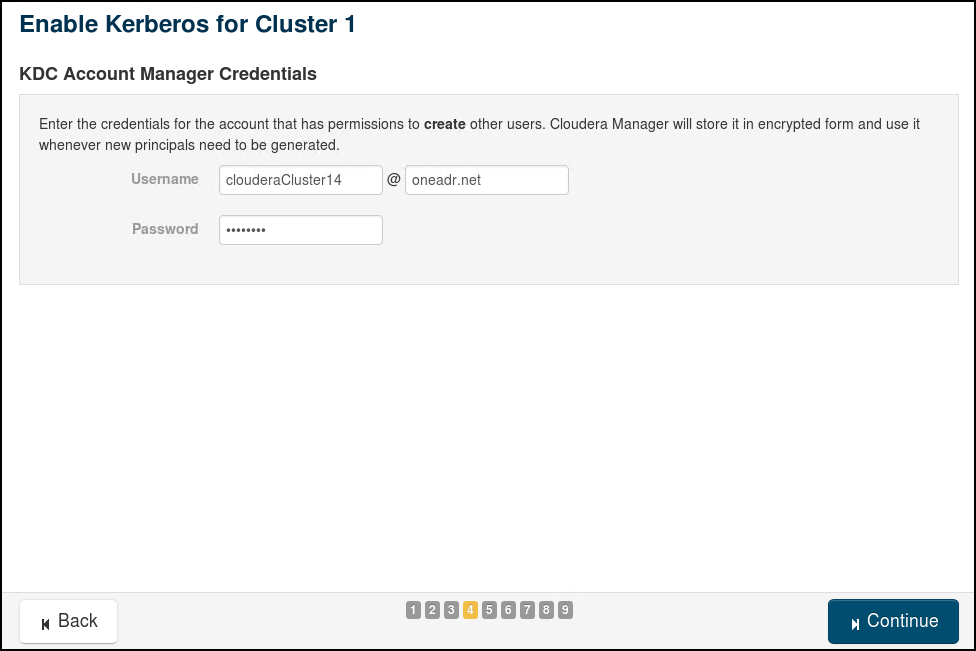

- Cloudera Manager requires an Account Manager user that has privileges to create other accounts in Active Directory. You can use the Active Directory Delegate Control wizard to grant this user permission to create other users by checking the option to “Create, delete and manage user accounts”. Create a user clouderaCluster14@CORP.CONTOSO.COM in OU=Hadoop, OU=CORP, DC=CONTOSO, DC=COM as an Account Manager.

- Install OpenLDAP utilities (openldap-clients on RHEL/Centos) on the host of Cloudera Manager server. Install Kerberos client (krb5-workstation on RHEL/Centos) on all hosts of the cluster. This step requires internet connection in Hadoop server. If there is no internet connection in the server, you can download the rpm and install.

sudo yum -y install openldap-clients krb5-workstation

sudo yum -y install krb5-workstation

- Apply the JCE Unlimited Strength Jurisdiction Policy Files. Download the Java Cryptography Extension (JCE) Unlimited Strength Jurisdiction Policy Files from Oracle. Be sure to download the correct policy file updates for your version of Java 7 or Java 8. Uncompress and extract the downloaded file. The download includes a Readme.txt and two .jar files with the same names as the existing policy files. Locate the two existing policy files: local_policy.jar, US_export_policy.jar. Look in JAVA_HOME/lib/security/ and replace the existing policy files with the unlimited strength policy files you extracted.

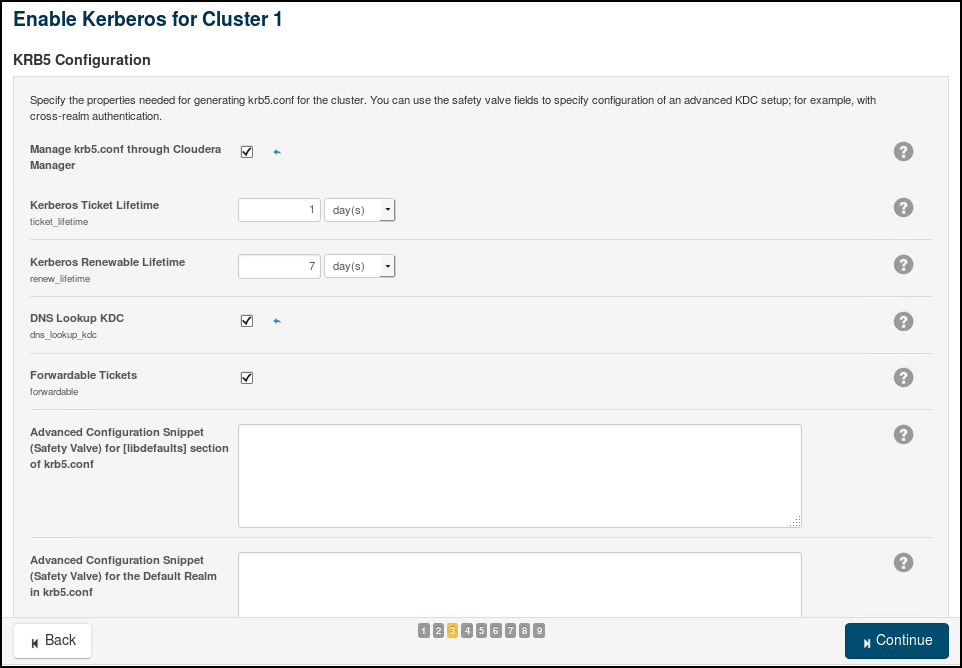

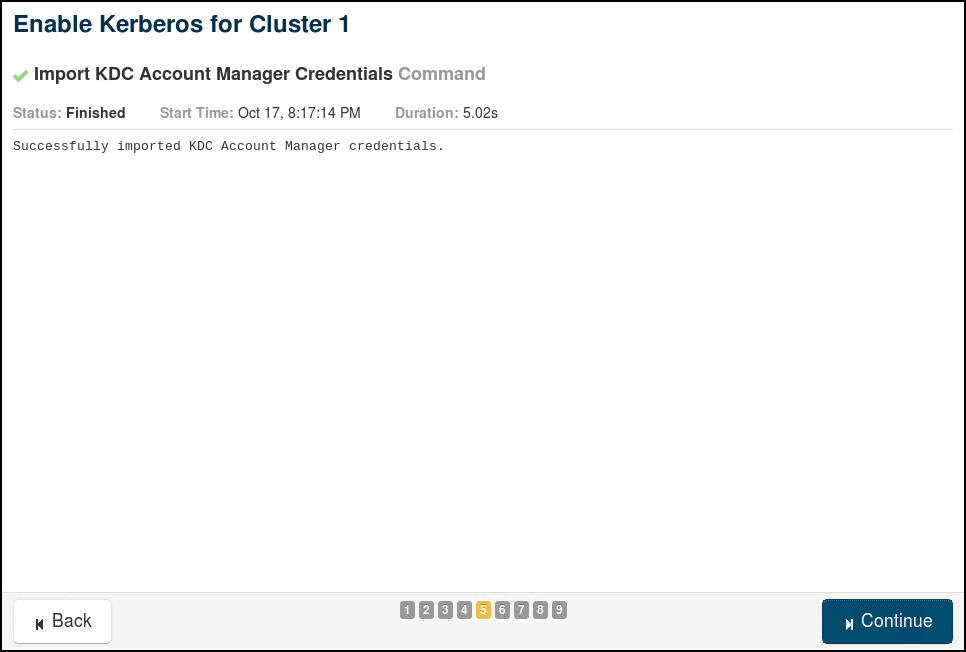

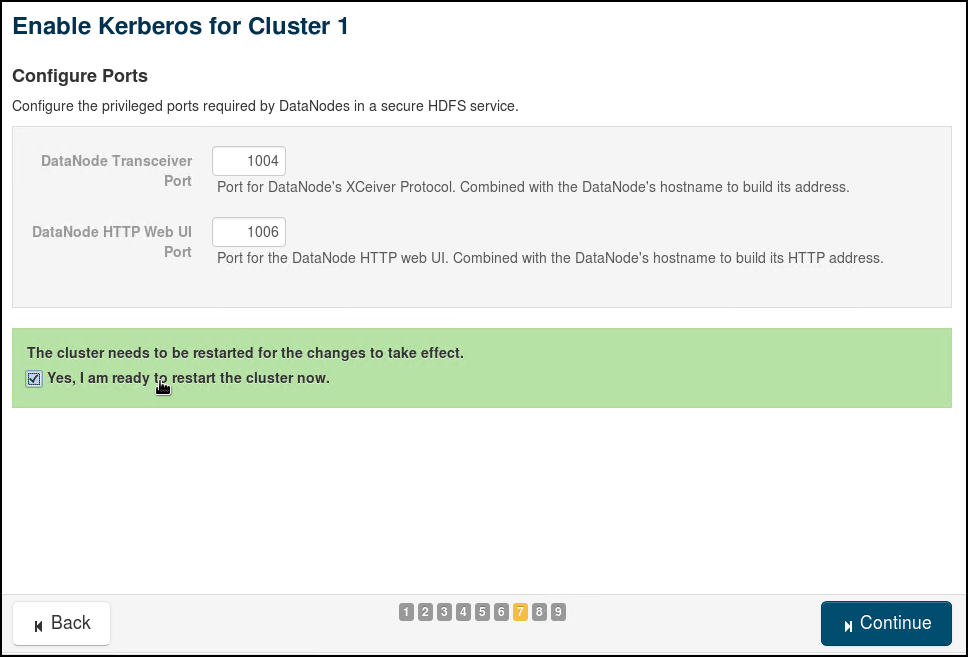

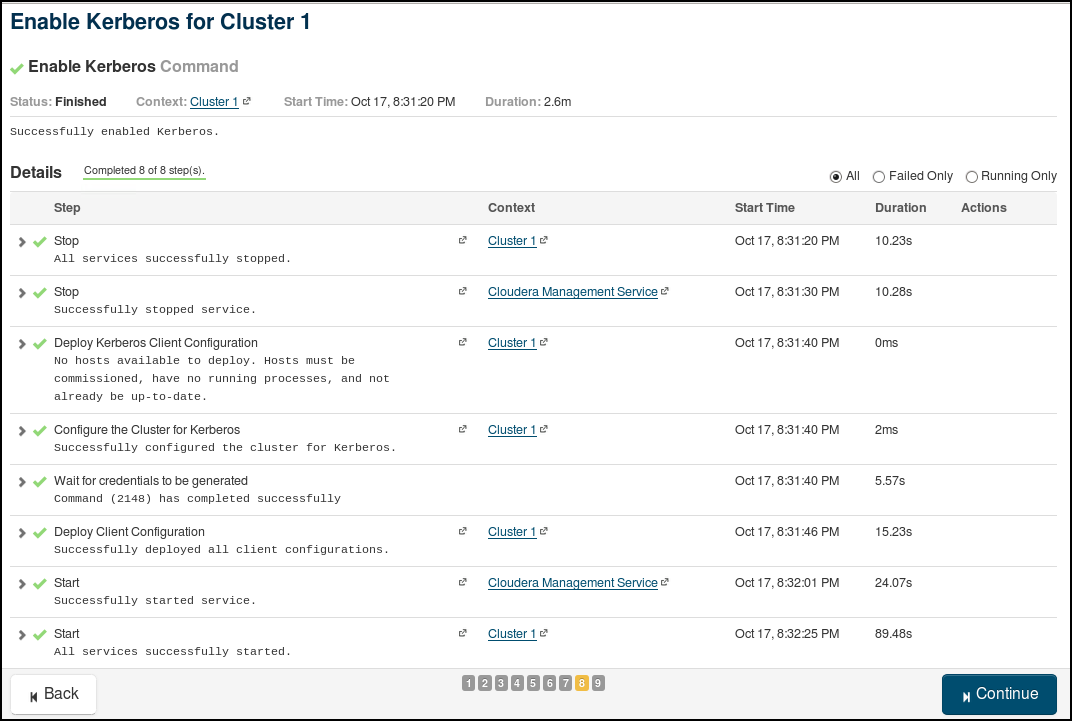

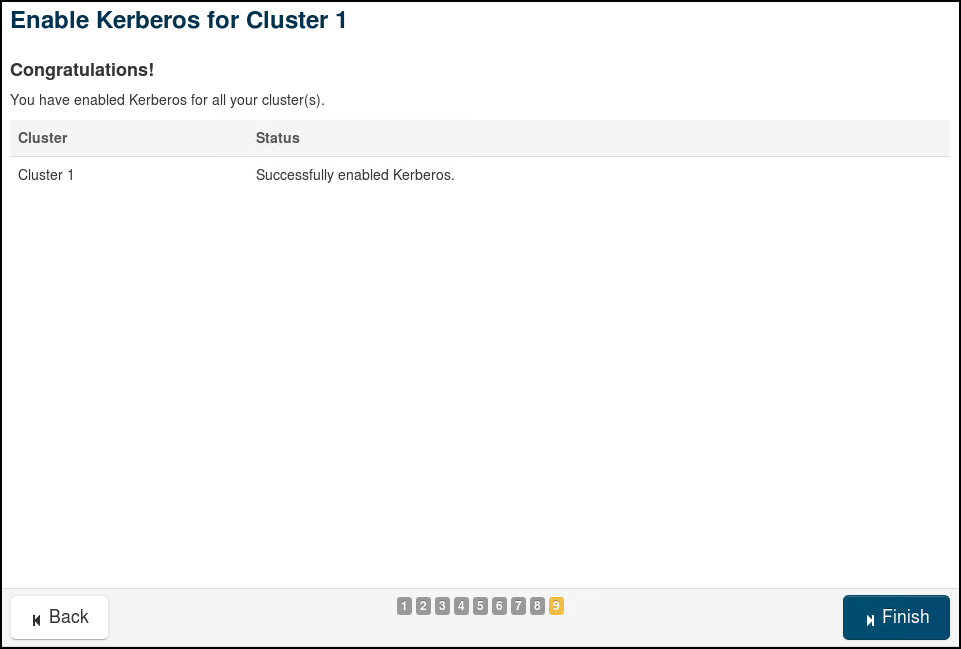

We will use the wizard in Cloudera Manager to enable Active Directory Authentication. The 9 steps involved in the "Enable Kerberos" Wizard are provided through the following screenshots (use relevant values for your own cluster and AD):

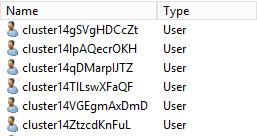

You can view the credentials generated by Cloudera in the Active Directory OU=Hadoop, OU=CORP, DC=CONTOSO, DC=COM ( we gave the prefix “cluster14” in step 2)

Once Kerberos is successfully enabled, let us use kinit to obtain a ticket in cache and then list the directories in HDFS

kinit hdfsCluster14@CORP.CONTOSO.COM

hadoop fs -ls /

If the above command is successful, then we have configured AD Authentication for Cloudera!

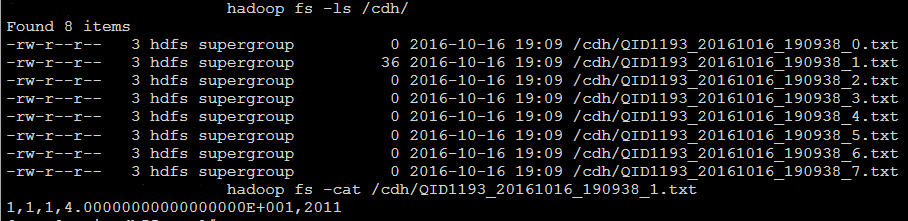

Create a folder in hdfs for PolyBase tables (Say cdh)

hadoop fs -mkdir /cdh

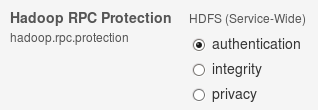

NOTE: Make sure the hadoop.rpc.protection setting in HDFS is set to Authentication:

Currently there is a known issue when setting this to “integrity” or “privacy” will result in failures to connect from PolyBase to HDFS. You will see error message like the following:

EXTERNAL TABLE access failed due to internal error: 'Java exception raised on call to HdfsBridge_IsDirExist: Error

[Failed on local exception: java.io.IOException: Couldn't setup connection] occurred while accessing external file.'

Connecting PolyBase to Cloudera

Run the following command to confirm that PolyBase has been successfully installed. If PolyBase is installed, returns 1; otherwise, 0

SELECT SERVERPROPERTY ('IsPolybaseInstalled') AS IsPolybaseInstalled;

Run sp_configure (Transact-SQL) ‘hadoop connectivity’ and set an appropriate value. To find the value, see PolyBase Connectivity Configuration (Transact-SQL).

sp_configure 'hadoop connectivity', 6;

sp_configure 'allow polybase export', 1;

reconfigure

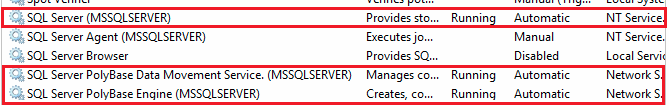

You must restart SQL Server using services.msc. Restarting SQL Server restarts these services:

- SQL Server PolyBase Data Movement Service

- SQL Server PolyBase Engine

In the following location, set the appropriate values in the configuration files from the Cloudera Cluster settings:

C:\Program Files\Microsoft SQL Server\MSSQL13.MSSQLSERVER\MSSQL\Binn\Polybase\Hadoop\conf

core-site.xml

<property>

<name>polybase.kerberos.realm</name>

<value>CORP.CONTOSO.COM</value>

</property>

<property>

<name>polybase.kerberos.kdchost</name>

<value>ACTIVEDIRECTORY.CORP.CONTOSO.COM</value>

</property>

<property>

<name>hadoop.security.authentication</name>

<value>KERBEROS</value>

</property>

hdfs-site.xml

<property>

<name>dfs.namenode.kerberos.principal</name>

<value>hdfsCluster14/_HOST@CORP.CONTOSO.COM</value>

</property>

mapred-site.xml

<property>

<name>mapreduce.jobhistory.principal</name>

<value>mapred/_HOST@CORP.CONTOSO.COM</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value><HOSTNAME and port of YARN JobHistory Server></value>

</property>

yarn-site.xml

<property>

<name>yarn.application.classpath</name>

<value>$HADOOP_CLIENT_CONF_DIR,$HADOOP_CONF_DIR,$HADOOP_COMMON_HOME/*,$HADOOP_COMMON_HOME/lib/*,

$HADOOP_HDFS_HOME/*,$HADOOP_HDFS_HOME/lib/*,$HADOOP_YARN_HOME/*,$HADOOP_YARN_HOME/lib/*</value>

</property>

<property>

<name>yarn.resourcemanager.principal</name>

<value>yarnCluster14/_HOST@CORP.CONTOSO.COM</value>

</property>

Now, we are ready to use PolyBase – let’s try creating an external table:

-- 1: Create a database scoped credential.

-- Create a master key on the database. This is required to encrypt the credential secret.

CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'Pas5w0rd_';

-- 2: Create a database scoped credential for Kerberos-secured Hadoop clusters.

-- IDENTITY: the Kerberos user name.

-- SECRET: the Kerberos password

CREATE DATABASE SCOPED CREDENTIAL myCredObject WITH IDENTITY = 'myHdfsUser', Secret = 'P455w0rd!#' ;

-- 3: Create an external data source.

-- LOCATION (Required) : Hadoop Name Node IP address and port.

-- RESOURCE MANAGER LOCATION (Optional): Hadoop Resource Manager location to enable pushdown computation.

-- CREDENTIAL (Optional): the database scoped credential, created above.

CREATE EXTERNAL DATA SOURCE clouderaCluster14 WITH (

TYPE = HADOOP,

LOCATION ='hdfs://CLUSTER14.CORP.CONTOSO.COM:8020',

RESOURCE_MANAGER_LOCATION = 'CLUSTER14.CORP.CONTOSO.COM:8032',

CREDENTIAL = myCredObject

);

-- 4: Create an external file format.

CREATE EXTERNAL FILE FORMAT CsvFileFormat WITH (

FORMAT_TYPE = DELIMITEDTEXT,

FORMAT_OPTIONS (FIELD_TERMINATOR =',', USE_TYPE_DEFAULT = TRUE))

-- 5: Create an external table pointing to data stored in Hadoop.

-- LOCATION: path to file or directory that contains the data (relative to HDFS root).

CREATE EXTERNAL TABLE [dbo].[CarSensor_Data] (

[SensorKey] int NOT NULL,

[CustomerKey] int NOT NULL,

[GeographyKey] int NULL,

[Speed] float NOT NULL,

[YearMeasured] int NOT NULL

)

WITH (LOCATION='/cdh/',

DATA_SOURCE = clouderaCluster14,

FILE_FORMAT = CsvFileFormat

);

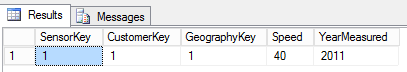

-- 6: Insert some data into external table and view the data

INSERT INTO [dbo].[CarSensor_Data] VALUES (1,1,1,40,2011)

SELECT * FROM [dbo].[CarSensor_Data]

The above data will be stored in CSV format in hdfs, you can browse the demo folder in hdfs to find the contents.

Now you can work with the table [dbo].[CarSensor_Data] as a normal table in SQL, but the data storage will be in HDFS.

You can also import existing data in HDFS as a SQL table to leverage awesome SQL features like Columnstore Indexes, R-services (In-Database)

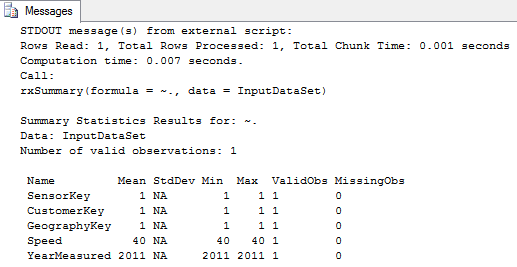

Here is a simple example of using rxSummary on the external table [dbo].[CarSensor_Data]

exec sp_execute_external_script

@language =N'R',

@script=N'print(rxSummary(~.,InputDataSet))',

@input_data_1 =N'select * from [dbo].[CarSensor_Data]'

REFERENCES

Enabling Kerberos Authentication Using the Wizard

Direct Active Directory Integration for Kerberos Authentication

Quickly Configure Kerberos for Your Apache Hadoop Cluster

Integrating Cloudera cluster with Active Directory

Get Started with PolyBase PolyBase Connectivity Configuration PolyBase Configuration PolyBase Setup Errors and Possible Solutions

Comments

- Anonymous

February 23, 2017

I see in the yarn-site.xml config file that needs to be created on the SQL server a reference to yarnCluster14 principal, but I do not see the creation of that principal in the Hadoop OU. I do see the creation of hdfsCluster14 and its use in hive-site.xml. I assume I simply need to create the yarnCluster14 principal just like the hdfsCluster14 principal??- Anonymous

February 23, 2017

Yes Justin, you just need to create yarnCluster14@CORP.CONTOSO.COM in Hadoop OU. In this article, I am just accessing data (read/write) from HDFS - hence hdfsCluster14 principal is enough. yarnCluster14 principal will be required when I perform pushdown computation in PolyBase.

- Anonymous

- Anonymous

May 24, 2017

Ravkumar, when will NOT having hadoop.rpc.protection=Authentication be possible? I am not seeing this fixed in SP1 CU3 as of end of May 2017. When will MS fix this so it can be set to Privacy?- Anonymous

May 24, 2017

Hi Serjio, Please post your request as feedback here : https://feedback.azure.com/forums/307516-sql-data-warehouse/category/171048-polybase- Anonymous

June 02, 2017

Thanks Ravkumar, done.https://feedback.azure.com/forums/307516/suggestions/19482808

- Anonymous

- Anonymous