Converting between Azure Tables and CSV

I published a NuGet package (CsvTools.Azure) that makes it easy to read and write CSVs to and from Azure blobs and tables. It builds on the CSV reader, which is also on NuGet (see CsvTools) and GitHub (https://github.com/MikeStall/DataTable).

Azure Tables are very powerful, but can be tricky to use. I wanted something that:

- Handled basic scenarios, such as uploading a CSV file to an Azure table with strongly typed columns, and downloading an Azure table as a CSV that I could then open in Excel.

- Was easy to use and could accomplish most operations in a single line.

- Could still be type-safe.

- Had intelligent defaults. If you didn’t specify a partition key, it would infer one. If the defaults weren’t great, you could go back and improve them.

The CsvTools.Azure NuGet package adds extension methods for DataTable, which is defined in the core CsvTools package. These extension methods save a DataTable to an Azure blob or table, and read a DataTable back from an Azure blob or table.

Examples with Azure Blobs

Reading and writing blobs is easy, since blobs resemble the file system. Here’s an example that writes a DataTable to blob storage and reads it back.

var dt = DataTable.New.Read(@"c:\temp\test.csv");

// Write and Read from blobs

dt.SaveToAzureBlob(Account(), "testcontainer", "test.csv");

var dataFromBlob = DataTable.New.ReadAzureBlob(Account(), "testcontainer", "test.csv"); // read it back

These code snippets assume a sample CSV at c:\temp\test.csv:

name, species, score

Kermit, Frog , 10

Ms. Piggy, Pig , 50

Fozzy, Bear , 23

Examples with Azure Tables

The scenarios I find interesting with CSV and Azure Tables are:

- Ingress: Uploading a CSV as an Azure table. I successfully uploaded a 3 million row CSV into Azure using this package. While CSVs don’t support indexing, once the data is in Azure you can use the standard table query operators (such as lookup by row and partition key).

- Egress: Downloading an Azure table to a CSV. I find this useful for pulling down a local copy of things like logs that are stored in Azure tables.

Azure Tables have some key differences from a CSV file:

| | Azure Tables | CSV |
| --- | --- | --- |
| Special columns and indexing | Every row in an Azure table has a partition key and a row key. These keys combine to form a unique index and have several other key properties documented on MSDN. | No unique keys for indexing, and no mandated columns. |
| Schema | Each row can have its own schema. | All rows have the same schema. A CSV is conceptually a 2D array of strings. |
| Typing | The “columns” in an Azure table are strongly typed. | CSVs are all strings. |
| Naming | Table and column names are restricted. See the naming rules on MSDN. | No naming restrictions on columns. |

Practically, this means when “uploading” a CSV to an Azure Table, we need to provide the types of the columns (or just default to everything being strings). When “downloading” an Azure Table to a CSV, we assume all rows in the table have the same schema.
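The naming restriction in the table above is easy to trip over when choosing a target table name. Here is a minimal sketch of a pre-flight check, assuming the documented Azure table-name rules (letters and digits only, first character a letter, 3 to 63 characters). `TableNameCheck` and `IsValidTableName` are hypothetical helpers for illustration and are not part of CsvTools.Azure.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical helper, not part of CsvTools.Azure.
static class TableNameCheck
{
    // Assumed rule set: alphanumeric only, must start with a letter,
    // total length between 3 and 63 characters.
    // \z anchors at the true end of input (unlike $, which tolerates a trailing newline).
    static readonly Regex ValidName = new Regex(@"^[A-Za-z][A-Za-z0-9]{2,62}\z");

    public static bool IsValidTableName(string name)
    {
        return name != null && ValidName.IsMatch(name);
    }
}
```

With this check, "animals2" passes, while "2animals" (leading digit), "my-table" (punctuation), and "ab" (too short) are rejected.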

Uploading a CSV to an Azure Table

Here’s an example of uploading a DataTable as an Azure table:

// will fabricate partition and row keys, all types are strings

dt.SaveToAzureTable(Account(), "animals");

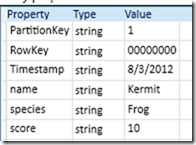

This is the resulting Azure table, as viewed in Azure Storage Explorer. The single line supplied only (a) the incoming DataTable and (b) the name of the Azure table to create, so the library picked intelligent defaults for the partition and row keys and typed every column as a string.

We can pass in a Type[] to provide stronger typing for the columns. In this case, we’re saving the “score” column as an int.

// provide stronger typing

var columnTypes = new Type[] { typeof(string), typeof(string), typeof(int) };

dt.SaveToAzureTable(Account(), "animals2", columnTypes);
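To make the role of the Type[] concrete: every CSV cell starts out as a string, so typed upload conceptually means parsing each cell into the type declared for its column. The sketch below illustrates that idea with the standard `Convert.ChangeType` call. `CellConverter` and `ConvertRow` are hypothetical names, and this is not necessarily how CsvTools.Azure performs the conversion.

```csharp
using System;
using System.Globalization;

// Hypothetical helper illustrating per-column typing; not the library's code.
static class CellConverter
{
    // Parse each string cell into the CLR type declared for its column.
    public static object[] ConvertRow(string[] cells, Type[] columnTypes)
    {
        if (cells.Length != columnTypes.Length)
            throw new ArgumentException("Expected exactly one type per column.");

        var typed = new object[cells.Length];
        for (int i = 0; i < cells.Length; i++)
        {
            // Trim padding such as " 10" or "Frog " before converting.
            typed[i] = Convert.ChangeType(
                cells[i].Trim(), columnTypes[i], CultureInfo.InvariantCulture);
        }
        return typed;
    }
}
```

A cell that cannot be parsed as its declared type (for example "ten" for an int column) makes `Convert.ChangeType` throw a `FormatException`, which is the kind of failure typed upload has to surface.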

How are the partition and row keys determined when uploading?

Every entity in an Azure table needs a partition key and a row key.

- If the CSV does not have columns named PartitionKey or RowKey, then the library will fabricate values. The partition key will be a constant (i.e., everything gets put on the same partition), and the RowKey is just a row counter.

- If the CSV has a column for PartitionKey or RowKey, then those will be used.

- One of the overloads to SaveToAzureTable takes a function that can compute a partition and row key per row.

Here’s an example of the third case, where a user-provided function computes the partition and row key on the fly for each row.

dt.SaveToAzureTable(Account(), "animals3", columnTypes,

(index, row) => new ParitionRowKey { PartitionKey = "x", RowKey = row["name"] });
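For comparison with the user-supplied function, the fabricated defaults from the first case can be sketched as follows. The constant partition key "1" and the eight-digit zero-padded counter are assumptions inferred from the sample output shown later in this post (`1,00000000`). `DefaultKeys` is a hypothetical name, not the library's type.

```csharp
using System;
using System.Globalization;

// Hypothetical illustration of fabricated keys; values inferred from the sample output.
static class DefaultKeys
{
    // Every row lands in the same partition.
    public const string PartitionKey = "1";

    // Row key is the row counter, zero-padded to eight digits.
    public static string RowKeyFor(int rowIndex)
    {
        return rowIndex.ToString("D8", CultureInfo.InvariantCulture);
    }
}
```

The zero padding matters because row keys are strings and compare lexicographically: "00000002" sorts before "00000010", whereas the unpadded "2" would sort after "10".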

Downloading an Azure Table as a CSV

Here we can download an Azure table to a CSV in a single line.

var dataFromTable = DataTable.New.ReadAzureTableLazy(Account(), "animals2");

The convention in the CsvTools packages is that methods ending in “Lazy” are streaming, so this can handle larger-than-memory tables.
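As an illustration of what "streaming" means here, a C# iterator can hand back one row at a time instead of materializing the whole table. This is a minimal sketch over a `TextReader`, not the CsvTools implementation. `LazyCsv.ReadRows` is a hypothetical name, and the naive comma split does not handle quoted fields.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical sketch of lazy row enumeration; not the CsvTools implementation.
static class LazyCsv
{
    // Yields one row at a time. Nothing is read until the caller enumerates,
    // and only the current line is held in memory.
    public static IEnumerable<string[]> ReadRows(TextReader reader)
    {
        string line;
        while ((line = reader.ReadLine()) != null)
        {
            if (line.Length == 0) continue; // skip blank lines

            // Naive split: assumes no quoted fields containing commas.
            string[] cells = line.Split(',');
            for (int i = 0; i < cells.Length; i++)
            {
                cells[i] = cells[i].Trim();
            }
            yield return cells;
        }
    }
}
```

Because the method body only runs during enumeration, a `foreach` over the result can stop early without reading the rest of the input.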

We can then write it to the console (or any stream), or do anything else with the table. For example, this dumps the table to the console:

dataFromTable.SaveToStream(Console.Out); // print to console

And it prints:

PartitionKey,RowKey,Timestamp,name,species,score

1,00000000,2012-08-03T21:04:08.1Z,Kermit,Frog,10

1,00000001,2012-08-03T21:04:08.1Z,Ms. Piggy,Pig,50

1,00000002,2012-08-03T21:04:08.103Z,Fozzy,Bear,23

Notice that the partition key, row key, and timestamp are included as columns in the CSV.

Of course, once we have a DataTable instance, it doesn’t matter that it came from an Azure Table. We can use any of the normal facilities in CsvTools to operate on the table. For example, we could use the strong binding to convert each row to a class and then operate on that:

// Read back from table as strong typing

var dataFromTable = DataTable.New.ReadAzureTableLazy(Account(), "animals2");

IEnumerable<Animal> animals = dataFromTable.RowsAs<Animal>();

foreach (var row in animals)

{

Console.WriteLine("{0},{1},{2}%", row.name, row.species, row.score / 100.0);

}

// Class doesn't need to derive from TableContext

class Animal

{

public string name { get ;set; }

public string species { get ; set; }

public int score { get ;set; }

}
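One way strong binding of this kind can work is to match column names against the target class's public properties and convert each cell to the property's type. The sketch below shows that idea with reflection. It is an assumption about the general technique, not a description of how `RowsAs<T>` is implemented, and `RowBinder.Bind` is a hypothetical name. The `Animal` class is repeated only so the block stands alone.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Reflection;

// Hypothetical reflection-based binder; not the CsvTools implementation.
static class RowBinder
{
    // Copies each column whose name matches a writable public property,
    // converting the string cell to the property's declared type.
    public static T Bind<T>(IDictionary<string, string> row) where T : new()
    {
        var item = new T();
        foreach (PropertyInfo prop in typeof(T).GetProperties())
        {
            string cell;
            if (prop.CanWrite && row.TryGetValue(prop.Name, out cell))
            {
                object value = Convert.ChangeType(
                    cell.Trim(), prop.PropertyType, CultureInfo.InvariantCulture);
                prop.SetValue(item, value, null);
            }
        }
        return item;
    }
}

// Same shape as the Animal class in the post.
class Animal
{
    public string name { get; set; }
    public string species { get; set; }
    public int score { get; set; }
}
```

Columns with no matching property (such as PartitionKey or Timestamp) are simply ignored in this sketch, and properties with no matching column keep their default values.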

Full sample

Here’s the full sample.

This is a C# 4.0 console application (Client Profile) with a NuGet package reference to CsvTools.Azure. It uses a dummy CSV file at c:\temp\test.csv (see above).

When you add the NuGet reference to CsvTools.Azure, NuGet’s dependency management automatically brings down references to CsvTools (the core CSV reader that implements DataTable) and even the Azure storage libraries. I love NuGet.

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using DataAccess;

using Microsoft.WindowsAzure;

class Program

{

static CloudStorageAccount Account()

{

return CloudStorageAccount.DevelopmentStorageAccount;

}

static void Main(string[] args)

{

var dt = DataTable.New.Read(@"c:\temp\test.csv");

// Write and Read from blobs

dt.SaveToAzureBlob(Account(), "testcontainer", "test.csv");

var dataFromBlob = DataTable.New.ReadAzureBlob(Account(), "testcontainer", "test.csv"); // read it back

dataFromBlob.SaveToStream(Console.Out); // print to console

// Write and read from Tables

// will fabricate partition and row keys, all types are strings

dt.SaveToAzureTable(Account(), "animals");

// provide stronger typing

var columnTypes = new Type[] { typeof(string), typeof(string), typeof(int) };

dt.SaveToAzureTable(Account(), "animals2", columnTypes);

{

Console.WriteLine("get an Azure table and print to console:");

var dataFromTable = DataTable.New.ReadAzureTableLazy(Account(), "animals2");

dataFromTable.SaveToStream(Console.Out); // print to console

Console.WriteLine();

}

{

Console.WriteLine("Demonstrate strong typing");

// Read back from table as strong typing

var dataFromTable = DataTable.New.ReadAzureTableLazy(Account(), "animals2");

IEnumerable<Animal> animals = dataFromTable.RowsAs<Animal>();

foreach (var row in animals)

{

Console.WriteLine("{0},{1},{2}%", row.name, row.species, row.score / 100.0);

}

}

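// Write using a computed partition and row key (same call as shown earlier)

dt.SaveToAzureTable(Account(), "animals3", columnTypes,

(index, row) => new ParitionRowKey { PartitionKey = "x", RowKey = row["name"] });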

}

// Class doesn't need to derive from TableContext

class Animal

{

public string name { get ;set; }

public string species { get ; set; }

public int score { get ;set; }

}

}

Comments

- Anonymous

May 21, 2018

Hi Mike, Where are you providing the Azure Storage Account name and its access key? How are we authenticating the storage account? Thanks, Girish