@home with Windows Azure: Behind the Scenes

As over a thousand of you know (because you signed up for our Webcast series during May and June), my colleague Brian Hitney and I have been working on a Windows Azure project – known as @home With Windows Azure – to demonstrate a number of features of the platform to you, learn a bit ourselves, and contribute to a medical research project to boot. During the series, it quickly became clear (…like after the first session) that the two hours was barely enough time to scratch the surface, and while we hope the series was a useful exercise in introducing you to Windows Azure and allowing you to deploy perhaps your first application to the cloud, we wanted (and had intended) to dive much deeper.

So enter not one but two blog series. This introductory post appears on both of our blogs, but from here on out we’re going to divide and conquer, each of us focusing on one of the two primary aspects of the project. I’ll cover the application you might deploy (and did if you attended the series), and Brian will cover the distributed.cloudapp.net application, which also resides in Azure and serves as the ‘mothership’ for @home with Windows Azure. Source code for both is available, so you’ll be able to crack open the solutions and follow along – and perhaps even add to or improve our design.

You are responsible for monitoring your own Azure account utilization. This project, in particular, can amass significant costs for CPU utilization. We recommend your self-study be limited to using the Azure development fabric on your local machine, unless you have a limited-time trial account or other consumption plan that will cover the costs of execution.

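So let’s get started. In this initial post, we’ll cover a few items: the project history, an overview of Stanford’s Folding@home (FAH) project, the high-level architecture of @home with Windows Azure, and the prerequisites to follow along.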

Project history

Brian and I have both been intrigued by Windows Azure and cloud computing in general, but we realized it’s a fairly disruptive technology and can often seem unapproachable for many of you who are focused on your own (typically on-premises) application development projects and just trying to catch up on the latest developments in WPF, Silverlight, Entity Framework, WCF, and a host of other technologies that flow from the fire hose at Redmond. Walking through the steps to deploy “Hello World Cloud” to Windows Azure was an obvious choice (and in fact we did that during our webcast), but we wanted an example that was a bit more interesting in terms of domain and that put the power of the cloud to genuine, rather than gratuitous, use.

Originally, we’d considered just doing a blog series, but then our colleague John McClelland had a great idea – run a webcast series (over and over… and over again x9) so we could reach a crop of 100 new Azure-curious viewers each week. With the serendipitous availability of ‘unlimited’ use, two-week Windows Azure trial accounts for the webcast series, we knew we could do something impactful that wouldn’t break anyone’s individual pocketbook – something along the lines of a distributed computing project, such as SETI.

SETI may be the most well-known of the efforts, but there are numerous others, and we settled on one (Folding@home, sponsored by Stanford University) based on its mission, longevity, and low barrier to entry (read: no login required and minimal software download). Once we decided on the project, it was just a matter of building up something in Windows Azure that would not only harness the computing power of Microsoft’s data centers but also showcase a number of the core concepts of Windows Azure and indeed cloud computing in general. We weren’t quite sure what to expect in terms of interest in the webcast series, but via the efforts of our amazing marketing team (thank you, Jana Underwood and Susan Wisowaty), we ‘sold out’ each of the webcasts, including the last two at which we were able to double the registrants - and then some!

For those of you who attended, we thank you. For those who didn’t, each of our presentations was recorded and is available for viewing. As we mentioned at the start of this blog post, the two hours we’d allotted seemed like a lot of time during the planning stages, but in practice we rarely got the chance to look at code or explain some of the application constructs in our implementation. Many of you, too, commented that you’d like to have seen us go deeper, and that’s, of course, where we’re headed with this post and others that will be forthcoming in our blogs.

Overview of Stanford’s Folding@Home (FAH) project

Stanford’s Folding@home (FAH) project was launched by the Pande lab at the Departments of Chemistry and Structural Biology at Stanford University on October 1, 2000, with a goal “to understand protein folding, protein aggregation, and related diseases,” diseases that include Alzheimer’s, cystic fibrosis, BSE (Mad Cow disease) and several cancers. The project is funded by both the National Institutes of Health and the National Science Foundation, and has enjoyed significant corporate sponsorship as well over the last decade. To date, over 5 million CPUs have contributed to the project (310,000 CPUs are currently active), and the effort has spawned over 70 academic research papers and a number of awards.

The project’s Executive Summary answers perhaps the three most frequently asked questions (a more extensive FAQ is also available):

What are proteins and why do they "fold"? Proteins are biology's workhorses -- its "nanomachines." Before proteins can carry out their biochemical function, they remarkably assemble themselves, or "fold." The process of protein folding, while critical and fundamental to virtually all of biology, remains a mystery. Moreover, perhaps not surprisingly, when proteins do not fold correctly (i.e. "misfold"), there can be serious effects, including many well known diseases, such as Alzheimer's, Mad Cow (BSE), CJD, ALS, and Parkinson's disease.

What does Folding@Home do? Folding@Home is a distributed computing project which studies protein folding, misfolding, aggregation, and related diseases. We use novel computational methods and large scale distributed computing, to simulate timescales thousands to millions of times longer than previously achieved. This has allowed us to simulate folding for the first time, and to now direct our approach to examine folding related disease.

How can you help? You can help our project by downloading and running our client software. Our algorithms are designed such that for every computer that joins the project, we get a commensurate increase in simulation speed.

FAH client applications are available for the Macintosh, PC, and Linux, and GPU and SMP clients are also available. In fact, Sony has developed a FAH client for its PlayStation 3 consoles (it’s included with system version 1.6 and later, and downloadable otherwise) to leverage its Cell microprocessor to provide performance at a 20 GigaFLOP scale.

As you’ll note in the architecture overview below, the @home with Windows Azure project specifically leverages the FAH Windows console client.

@home with Windows Azure high-level architecture

The @home with Windows Azure project comprises two distinct Azure applications, the distributed.cloudapp.net site (on the right in the diagram below) and the application you deploy to your own account via the source code we’ve provided (shown on the left). We’ll call this the Azure@home application from here on out.

distributed.cloudapp.net has three main purposes:

- Serve as the ‘go-to’ site for this effort with download instructions, webcast recordings, and links to other Azure resources.

- Log and reflect the progress made by each of the individual contributors to the project (including the cool Silverlight map depicted below)

- Contribute itself to the effort by spawning off Folding@home work units.

Brian was at the helm for the design and implementation of distributed.cloudapp.net and will delve into its implementation via his blog.

The other Azure service in play is the one you can download from distributed.cloudapp.net (either in VS2008 or VS2010 format) – the one we’re referring to as Azure@home. This cloud application contains a web front end and a worker role implementation that wraps the console client downloaded from the Folding@home site. When you deploy this application, you will be setting up a small web site including a default page (image to the left below) with a Bing Maps UI and a few text fields to kick off the folding activity. Worker roles deployed with the Azure service are responsible for spawning the Folding@home console client application - within a VM in Azure - and reporting the progress to both your local account’s Azure storage and the distributed.cloudapp.net application (via a WCF service call).

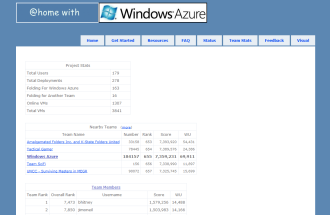

Via your own service’s website you can keep tabs on the contribution your deployment is making to the Folding@home effort (image to right above), and via distributed.cloudapp.net you can view the overall impact of the @home with Windows Azure project – as I’m writing this the project is ranked 583 out of over 184,000 teams; that’s in roughly the top 0.3% after a little over two months, not bad!
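To make the worker role's job a little more concrete, here is a minimal sketch of the "spawn a console client and report on it" pattern described above. It is written in Python purely for illustration and is not the Azure@home code itself. The `run_client` function, the `report` callback, and the stand-in command are all hypothetical; in the real application the reporting step would write to Azure storage and call the distributed.cloudapp.net WCF service.

```python
import subprocess
import sys
import time
from typing import Callable, List


def run_client(command: List[str],
               report: Callable[[str], None],
               poll_seconds: float = 0.1) -> int:
    """Launch a console client as a child process and report status until it exits.

    `report` is a hypothetical callback standing in for whatever records
    progress (for example, a storage write or a service call).
    """
    process = subprocess.Popen(command)
    report("started")
    # Poll the child process; keep reporting while it is still running.
    while process.poll() is None:
        report("running")
        time.sleep(poll_seconds)
    report(f"exited with code {process.returncode}")
    return process.returncode


if __name__ == "__main__":
    # Stand-in for the real console client: a child process that sleeps briefly.
    stand_in = [sys.executable, "-c", "import time; time.sleep(0.3)"]
    run_client(stand_in, print)
```

Passing the reporting step in as a callback keeps the process-management loop separate from where the progress ends up, which is one plausible way to serve two destinations (local storage and a remote service) from the same loop.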

I’ll be exploring the design and implementation of the Azure@home piece via upcoming posts on my blog.

Prerequisites to follow along

Everything you need to know about getting started with @home with Windows Azure is available at the distributed.cloudapp.net site, but here’s a summary:

- Operating System (one of the following)

  - Windows 7

  - Windows Server 2008 R2

  - Windows Server 2008

  - Windows Vista

- Visual Studio development environment (one of the following)

  - Visual Studio 2008 SP1 (standard or above), or

  - Visual Web Developer 2008 Express Edition with SP1, or

  - Visual Studio 2010 Professional, Premium or Ultimate (trial download), or

  - Visual Web Developer 2010 Express

- Windows Azure Tools for Visual Studio (which includes the SDK), which has the following prerequisites:

  - IIS 7 with WCF Http Activation enabled

  - SQL Server 2005 Express Edition (or higher) – you can install SQL Server Express with Visual Studio or download it separately.

- Azure@home source code

- Folding@home console client for Windows XP/2003/Vista (from Stanford’s site)

In addition to the core pieces listed above, feel free to view one of the webcast recordings or Brian’s screencast to learn how to deploy the application. We won’t be focusing so much on the deployment in the upcoming blog series, but more on the underlying implementation of the constituent Azure services.

Lastly, we want to reiterate that the Azure@home application requires a minimum of two Azure roles. That’s tallied as two CPU hours for every hour of running time in terms of Azure consumption, and therefore results in a default charge of $0.24/hour; add to that a much smaller amount of Azure storage charges, and you’ll find that if it’s left running 24x7, your monthly charge will be around $172! There are various Azure platform offers available, including an introductory special; however, the introductory special includes only 25 compute hours per month (equating to roughly 12 hours of running the smallest version of Azure@home possible).

Most of the units of work assigned by the Folding@home project require at least 24 hours of computation time to complete, so it’s unlikely you can make a substantial contribution to the Stanford effort without leveraging idle CPUs within a paid account or having free access to Azure via a limited-time trial account. You can, of course, utilize the development fabric on your local machine to run and analyze the application, and theoretically run the Folding@home client application locally to contribute to the project on a smaller scale.
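If you'd like to check the consumption arithmetic for your own situation, the sketch below reproduces the figures quoted above. It assumes a rate of $0.12 per role per hour (derived from the $0.24/hour quoted for two roles) and a 30-day month, and it ignores storage charges; the function names are ours, for illustration only.

```python
HOURLY_RATE_PER_ROLE = 0.12  # USD; assumption derived from $0.24/hour for two roles
MIN_ROLES = 2                # Azure@home needs at least two roles


def monthly_compute_cost(roles: int = MIN_ROLES,
                         rate: float = HOURLY_RATE_PER_ROLE,
                         days: int = 30) -> float:
    """Compute-only cost of running around the clock for `days` days."""
    return roles * rate * 24 * days


def wall_clock_hours(included_compute_hours: float,
                     roles: int = MIN_ROLES) -> float:
    """Hours of actual running time that a compute-hour allowance buys."""
    return included_compute_hours / roles


if __name__ == "__main__":
    print(f"Monthly compute cost: ${monthly_compute_cost():.2f}")
    print(f"Running time from 25 compute hours: {wall_clock_hours(25)} hours")
```

Adjust `roles` upward if you scale out the worker role; the cost grows linearly with each additional instance.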

That’s it for now. I’ll be following up with the next post within a few days or so; until then keep your head in the clouds, and your eye on your code.