Increasing the ROI of our Automation

A while back I wrote a post on automation. I want to resurface the topic now, as it has been on my mind a lot lately while we develop dev10. What is the “right” approach to automation? How do we make it pay off?

Automation is hugely important for us. It enables us to ensure our software works on a myriad of combinations of operating system (XP, Vista, Win7, Server 2003, Server 2008), processor architecture (x86, ia64), and language (English, German, Japanese, Chinese, etc.).

At the end of the day, return on investment is what it is all about. Is it faster to develop and maintain an automated test case, or simply to run the test manually? If, over the lifetime of a test case, you spend more time fixing it than it would have taken to run that test manually every time the automated version ran, the test has not paid off. This is basic and obvious, but it often gets overlooked when considering automation.
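
To make the break-even rule concrete, here is a minimal Python sketch of the comparison. The cost fields, function names, and the hours used in the demo are hypothetical illustrations, not figures from our projects; following the question above, the sketch counts both development and maintenance time against the manual alternative.

```python
from dataclasses import dataclass


@dataclass
class AutomationCosts:
    """Hypothetical lifetime cost figures for one test case, in hours."""
    manual_run: float  # time to run the test once by hand
    develop: float     # one-time cost to write the automated version
    maintain: float    # total time spent fixing it over its lifetime
    runs: int          # number of times the automated test was run


def manual_cost(c: AutomationCosts) -> float:
    """What the same number of runs would have cost if done manually."""
    return c.manual_run * c.runs


def automation_cost(c: AutomationCosts) -> float:
    """What owning the automated test actually cost."""
    return c.develop + c.maintain


def has_paid_off(c: AutomationCosts) -> bool:
    """The test pays off only if it cost less than running it by hand."""
    return automation_cost(c) < manual_cost(c)


def break_even_runs(c: AutomationCosts) -> float:
    """Runs needed before automation wins, treating maintenance as a fixed total."""
    return automation_cost(c) / c.manual_run


if __name__ == "__main__":
    # Made-up numbers: an expensive-to-fix UI test vs. a cheap API test.
    ui_test = AutomationCosts(manual_run=0.5, develop=8, maintain=20, runs=40)
    api_test = AutomationCosts(manual_run=0.5, develop=4, maintain=1, runs=40)
    for name, costs in [("UI", ui_test), ("API", api_test)]:
        print(name, has_paid_off(costs), break_even_runs(costs))
```

With these made-up numbers, the UI test costs 28 hours to own against 20 hours of manual runs, so it has not paid off, while the API test breaks even after 10 runs.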

Going back to the VS 2005 release, when we first introduced load testing, we made a few huge mistakes in our approach to automation, mistakes I see others repeating over and over:

- My test team wrote automation exclusively using UI automation.

- My test team owned developing and maintaining the automation.

- Dev “unit tests” were not considered “automation”.

- Devs and testers used different test automation frameworks.

With dev10, we have reversed each of these, and are benefitting greatly from it.

One thing I really want to emphasize is the cost of maintaining our UI automation. I have not looked at the exact number, but in the course of developing dev10 nearly every single UI automation test case has broken. By contrast, I estimate that fewer than 10% of our tests at the API layer have broken. To add insult to injury, the UI tests are hugely expensive to fix. API tests that break nearly always produce compile errors, which are easy to fix. UI tests do not fail until you run them, and then you have to grovel through the test log to figure out which UI controls changed to cause the failure, make a fix, and rerun the test (perhaps only to find another failure), all of which is extremely time consuming.

Increasing our ROI: A Cost-Based Approach to Automation

For dev10, we have developed four methodologies for automation, and we consider each one when deciding how to automate testing of a particular piece of functionality. We give preference to the lowest-cost approach: if a cheaper approach can do the job, we use it rather than automating everything through the UI. In fact, the UI is the least favored approach, and we use it only when none of the other approaches will give us the coverage we need. The approaches, in order of preference, are:

Unit tests that run in the test process. “Unit test” is really a misnomer for most of these tests, as they typically cover a broader area than a pure unit test does, so I prefer to call them API tests. Their defining characteristic is that they are hosted by the test process.

For example, in our load test product we have tests that run requests through our web test engine. Another example is our TCM server product: nearly all of the automation for TCM server has been developed using this approach.

Command line tests. With this approach we have “unit tests” that spawn our command line. The test code then waits for the command to complete, and then uses APIs to validate the command did the right thing. We use this approach for both web and load test features and for our TCM server.

For example, for load tests we have tests that run a load test from the command line, then access the load test database to ensure the results were as expected. This gives us substantial coverage of our web and load test feature set.

API tests that run in the process under test. mstest has a feature that enables processes other than vstesthost.exe to host the test code. An example of this is ASP.NET unit tests, which run in the ASP.NET process. We take advantage of this extensibility point to add our client processes as host processes.

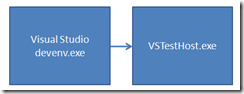

For example, we run our new TCM test client, Camano (aka Microsoft Test and Lab Manager), as a test host. This is a bit of a mind bender, but we actually run VS as a test host too! So devenv starts vstesthost, which starts a second copy of devenv. Once VS is loaded, our test code runs with access to our architectural layer “just beneath the glass”, which lets us run web and load tests in the IDE (e.g. open a project, open and edit load tests in the project, run them, and validate the UI through code).

UI Automation. With this approach, our test code instantiates the client under test and drives it through the mouse and keyboard (we drive VS through MSAA, and our TCM client through UIA).

The cost of UI automation is at least an order of magnitude greater than that of the other three approaches. Isn’t it ironic, then, that we are introducing a new UI automation test type in dev10? If UI tests are so bad, why are we bringing them to market? While I maintain that they are very expensive, UI tests are also absolutely necessary. There are some things we simply cannot test without them (like dialogs, or some integrated, cross-process end-to-end scenarios). But when I go back and look at the UI tests we developed for web and load tests in VS 2005, the vast majority of them could have been implemented using a cheaper alternative.
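
The preference order above amounts to a simple selection rule: walk the approaches from cheapest to most expensive and stop at the first one that can reach everything the test needs. This minimal Python sketch encodes that rule; the layer names in `REACH`, and which approach can exercise which layer, are hypothetical stand-ins for a real product's architecture.

```python
from enum import IntEnum


class Approach(IntEnum):
    """The four approaches, in order of preference (1 = cheapest)."""
    API_IN_TEST_PROCESS = 1
    COMMAND_LINE = 2
    API_IN_PROCESS_UNDER_TEST = 3
    UI_AUTOMATION = 4


# Hypothetical: the product layers each approach is able to exercise.
REACH = {
    Approach.API_IN_TEST_PROCESS: {"engine"},
    Approach.COMMAND_LINE: {"engine", "command_line", "results_store"},
    Approach.API_IN_PROCESS_UNDER_TEST: {"engine", "client_object_model"},
    Approach.UI_AUTOMATION: {"engine", "client_object_model", "dialogs"},
}


def choose_approach(needs: set[str]) -> Approach:
    """Return the cheapest approach whose reach covers every layer the test needs."""
    for approach in sorted(Approach):
        if needs <= REACH[approach]:
            return approach
    raise ValueError(f"no single approach covers {sorted(needs)}")


if __name__ == "__main__":
    print(choose_approach({"engine"}).name)
    print(choose_approach({"engine", "results_store"}).name)
    print(choose_approach({"client_object_model", "dialogs"}).name)
```

In this toy model a dialog can only be reached through UI automation, so only a test that needs one falls through to the most expensive approach.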

Engineering for Automation: Developing Testable Software

Developing these other automation approaches did not come for free. We deliberately architected our software to enable each of these approaches, and spent time developing test frameworks and reference patterns for each one. It has really paid off in a big way: our TCM tests maintain an extremely high pass rate (98% plus), while our “legacy” UI tests from the 2005 release are in bad shape.
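
As an illustration of the kind of reference pattern mentioned above, here is a minimal Python sketch of the command-line approach: spawn a command, wait for it to finish, then validate its side effects through a programmatic interface rather than the UI. The helper name, the JSON results file standing in for a results store such as the load test database, and the stand-in command in the demo are all assumptions for illustration.

```python
import json
import subprocess
import sys
import tempfile
from pathlib import Path


def run_command_test(args, results_path, validate, timeout=600):
    """Run a command-line test: spawn, wait, then validate recorded results.

    args: the command to spawn (hypothetical; in practice, the product's runner).
    results_path: where the command is assumed to record its results as JSON.
    validate: callable that inspects the parsed results and raises on failure.
    """
    # subprocess.run blocks until the command exits (or the timeout expires).
    completed = subprocess.run(args, capture_output=True, text=True, timeout=timeout)
    if completed.returncode != 0:
        raise AssertionError(
            f"command failed ({completed.returncode}): {completed.stderr.strip()}"
        )
    # Check what the command did through data, not through the UI.
    validate(json.loads(Path(results_path).read_text()))


if __name__ == "__main__":
    # Demo: a stand-in "command line" that records a fake load test result.
    script = (
        "import json, sys, pathlib; "
        "pathlib.Path(sys.argv[1]).write_text(json.dumps({'requests': 100, 'failed': 0}))"
    )

    def no_failed_requests(results):
        assert results["failed"] == 0, results

    with tempfile.TemporaryDirectory() as tmp:
        out = Path(tmp) / "results.json"
        run_command_test([sys.executable, "-c", script, str(out)], out, no_failed_requests)
        print("command-line test passed")
```

Failing on a non-zero exit code before reading any results keeps a crashed run from being mistaken for a validation problem.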

Whose job is it anyway?

At the end of the day, it is the test team’s job to help us understand whether or not we are ready to ship. Automation plays a huge role in that, since we can run our automation suites unattended and generate test results relatively quickly and concurrently on many different configurations. The test team is responsible for ensuring we have the right set of automation in place to help us understand where we stand.

Getting Testers and Developers on the Same Page

However, it is not solely the test team’s job to develop and maintain the automation. The test team develops the test plan, which includes the scenarios and functionality we want to automate, but both the dev and test teams develop and maintain the automation. If a developer is writing “unit tests” for a new API they are developing, they work off the test plan to develop one of the specified test cases. Testers do not duplicate this effort by developing “their own” automation to cover the same case. A key tenet is that testers and developers use the same frameworks and methodologies for developing automation, so testers can run tests developed by devs, and vice versa. Going back to the mistakes we made in VS 2005, this simple methodology, along with choosing the most cost-effective way to automate each test case, is the key to fixing each of them.

Similarly, it is the entire team’s job to maintain the automated tests. As I said earlier, maintenance is the huge, hidden cost of automation. Devs must fix any compile errors in tests introduced by their changes, and must also ensure that all P1 tests pass before checking in. Testers and devs share the load of keeping all P2 and P3 tests at a high pass rate: we run these once a week and split the work of driving them to passing.
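
The check-in rule and the weekly P2/P3 run can be sketched as two small checks. This minimal Python sketch assumes test results arrive as records carrying a priority; the record type and function names are hypothetical, and treating an empty run as a 100% pass rate is an arbitrary choice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunResult:
    """Hypothetical record for one test's outcome in a run."""
    name: str
    priority: int  # 1, 2, or 3, matching P1/P2/P3
    passed: bool


def checkin_allowed(results: list[RunResult]) -> bool:
    """Check-in gate: every P1 test must pass."""
    return all(r.passed for r in results if r.priority == 1)


def pass_rate(results: list[RunResult], priorities=(2, 3)) -> float:
    """Pass rate for the weekly P2/P3 run; 1.0 if no such tests ran."""
    selected = [r for r in results if r.priority in priorities]
    if not selected:
        return 1.0
    return sum(r.passed for r in selected) / len(selected)
```

A single failing P1 test blocks the check-in, while P2 and P3 failures only lower the weekly pass rate the whole team works to keep high.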

I hope you can learn from our mistakes too, and use our experience to improve your approach to automation.

Ed.

Comments

Anonymous

June 18, 2009

Ed has published some interesting articles on automated Testing. http://blogs.msdn.com/edglas/archive/2008/08/15/so-you-want-to-automate-your-test-cases.aspx

Anonymous

November 06, 2009

One technique for reducing the cost of maintaining automated test cases is to implement frameworks, one of them being the Keyword Driven Framework. The question is: do you think it would be possible to implement one in VSTS 2010? Has MSFT considered this?