The Basics of Securing Applications: Part 5 – Incident Response Management Using Team Foundation Server

![Visual Studio One on One](https://msdntnarchive.z22.web.core.windows.net/media/MSDNBlogsFS/prod.evol.blogs.msdn.com/CommunityServer.Blogs.Components.WeblogFiles/00/00/01/43/73/metablogapi/6663.Visual-Studio-One-on-One_thumb1_thum2_thumb_53B74614.jpg)

In this post, we’re continuing our One on One with Visual Studio conversation from May 26 with Canadian Developer Security MVP Steve Syfuhs, The Basics of Securing Applications. If you’ve just joined us, the conversation builds on the previous posts, so check those out (links below) and then join us back here. If you’ve already read the previous posts, welcome!

Part 1: Development Security Basics

Part 2: Vulnerabilities

Part 3: Secure Design and Analysis in Visual Studio 2010 and Other Tools

Part 4: Secure Architecture

Part 5: Incident Response Management Using Team Foundation Server (This Post)

In this session of our conversation, The Basics of Securing Applications, Steve talks about the differences between protocols and procedures when it comes to security and how to be ready in the event of a security incident.

Steve, back to you.

There are only a few certainties in life: death, taxes, and one of your applications getting attacked. Throughout its lifetime, an application will undergo a barrage of attacks – especially if it's public facing. If you followed the SDL, tested properly, coded securely, and managed well, you will have gotten most of the bugs out.

Most.

There will always be bugs in production code, and there will very likely always be a security bug in production code. Further, if there is a security bug in production code, an attacker will probably find it. Perhaps the best metric for security is along the lines of mean-time-to-failure. Or rather, mean-time-to-breach. All safes for storing valuables are rated by how long they can withstand certain types of attacks – not whether they can, but how long they can. There is no single thing we can do to prevent an attack, and we cannot prevent all attacks. It's just not in the cards. So it stands to reason that we should prepare for something bad happening. The final stage of the SDL requires that an Incident Response Plan be created. This is the procedure to follow in the event of a vulnerability being found.

In security parlance, there are protocols and procedures. The majority of the SDL is all protocol. A protocol is the usual way to do things. It's the list of steps you follow to accomplish a task that is associated with a normal working condition, e.g. fuzzing a file parser during development. You follow a set of steps to fuzz something, and you really don't deviate from those steps. A procedure is when something is different. A procedure is reactive. How you respond to a security breach is a procedure. It's a set of steps, but it's not a normal condition.

An Incident Response Plan (IRP - the procedure) serves a few functions:

- It has the list of people to contact in the event of the emergency

- It is the actual list of steps to follow when bad things happen

- It includes references to other procedures for code written by other teams

This may be one of the more painful parts of the SDL, because it's mostly process over anything else. Luckily there is a wonderful product by Microsoft that helps: Team Foundation Server. For those of you who just cringed, bear with me.

Microsoft released the MSF-Agile plus Security Development Lifecycle Process Template for VS 2010 (it also takes second place in the longest product name contest) to make the entire SDL process easier for developers. There is the SDL Process Template for 2008 as well.

It's useful for each stage of the SDL, but we want to take a look at how it can help with managing the IRP. First though, let's define the IRP.

Emergency Contacts (Incident Response Team)

The contacts usually need to be available 24 hours a day, seven days a week. These people have a range of functions depending on the severity of the breach:

- Developer – Someone to comprehend and/or triage the problem

- Tester – Someone to test and verify any changes

- Manager – Someone to approve changes that need to be made

- Marketing/PR – Someone to make a public announcement (if necessary)

Each plan is different for each application and for each organization, so there may be ancillary people involved as well (perhaps an end user to verify data). Each person isn't necessarily required at each stage of the response, but they still need to be available in the event that something changes.
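One way to keep the contact list honest is to check it mechanically. The following is a minimal sketch in Python, assuming the four roles listed above are the required ones; the `Contact` class, the names, and the phone numbers are hypothetical placeholders, not part of any real plan or tool.

```python
from dataclasses import dataclass

# Roles named in the contact list above.
REQUIRED_ROLES = {"Developer", "Tester", "Manager", "Marketing/PR"}


@dataclass(frozen=True)
class Contact:
    name: str   # hypothetical person
    role: str   # one of REQUIRED_ROLES, or an ancillary role
    phone: str  # placeholder number


def missing_roles(roster):
    """Return the required roles that nobody on the roster covers."""
    covered = {contact.role for contact in roster}
    return REQUIRED_ROLES - covered


roster = [
    Contact("Alice", "Developer", "555-0100"),
    Contact("Bob", "Tester", "555-0101"),
    Contact("Carol", "Manager", "555-0102"),
]

print(sorted(missing_roles(roster)))  # ['Marketing/PR']
```

A check like this could run whenever the roster changes, so a gap in coverage is noticed before an incident rather than during one.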

The Incident Response Plan

Over the years I've written a few Incident Response Plans (never mind that I was asked to do it after an attack most times – you WILL go out and create one after reading this, right?). Each plan was unique in its own way, but there were commonalities as well.

Each plan should provide the steps to answer a few questions about the vulnerability:

- How was the vulnerability disclosed? Did someone attack, or did someone let you know about it?

- Was the vulnerability found in something you host, or an application that your customers host?

- Is it an ongoing attack?

- What was breached?

- How do you notify your customers about the vulnerability?

- When do you notify them about the vulnerability?

And each plan should provide the steps to answer a few questions about the fix:

- If it's an ongoing attack, how do you stop it?

- How do you test the fix?

- How do you deploy the fix?

- How do you notify the public about the fix?

Some of these questions may not be answerable immediately – you may need to wait until a post-mortem to answer them.
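The two question lists above can double as a running checklist during an incident. Here is a rough sketch, assuming answers are recorded as plain text keyed by question; the wording of the questions is condensed from the lists above, and the sample answers are invented for illustration.

```python
VULNERABILITY_QUESTIONS = [
    "How was the vulnerability disclosed?",
    "Was it found in something you host, or something your customers host?",
    "Is it an ongoing attack?",
    "What was breached?",
    "How do you notify your customers?",
    "When do you notify your customers?",
]

FIX_QUESTIONS = [
    "If it's an ongoing attack, how do you stop it?",
    "How do you test the fix?",
    "How do you deploy the fix?",
    "How do you notify the public about the fix?",
]


def unanswered(answers):
    """List the plan's questions that have no answer recorded yet."""
    all_questions = VULNERABILITY_QUESTIONS + FIX_QUESTIONS
    return [q for q in all_questions if not answers.get(q, "").strip()]


# Invented sample data: one question answered, one explicitly left blank.
answers = {
    "Is it an ongoing attack?": "No, the attacker's access was revoked.",
    "What was breached?": "",  # not known yet
}

for question in unanswered(answers):
    print("Still open:", question)
```

Anything still open when the fix ships is a natural agenda item for the post-mortem.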

For example, this is a high-level IRP:

- The Attack – It's already happened

- Evaluate the state of the systems or products to determine the extent of the vulnerability

  - What was breached?

  - What is the vulnerability?

- Define the first step to mitigate the threat

  - How do you stop the threat?

  - Design the bug fix

- Isolate the vulnerabilities if possible

  - Disconnect targeted machine from network

  - Complete forensic backup of system

  - Turn off the targeted machine if hosted

- Initiate the mitigation plan

  - Develop the bug fix

  - Test the bug fix

- Alert the necessary people

  - Get Marketing/PR to inform clients of breach (don't forget to tell them about the fix too!)

  - If necessary, inform the proper legal/governmental bodies

- Deploy any fixes

  - Rebuild any affected systems

  - Deploy patch(es)

  - Reconnect to network

- Follow up with legal/governmental bodies if prosecution of attacker is necessary

  - Analyze forensic backups of systems

- Do a post-mortem of the attack/vulnerability

  - What went wrong?

  - Why did it go wrong?

  - What went right?

  - Why did it go right?

  - How can this class of attack be mitigated in the future?

  - Are there any other products/systems that would be affected by the same class?

Some of these procedures can be done in parallel, hence the need for people to be on call.
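To see which steps can overlap, it can help to write the plan down as a dependency graph. The sketch below uses Python's standard `graphlib` module (Python 3.9+) to group steps into "waves" that can run at the same time. The step names are shortened from the example plan, and the dependencies between them are my own assumption for illustration; your plan will have its own.

```python
from graphlib import TopologicalSorter

# Each step maps to the steps that must finish before it can start.
# These dependencies are an assumption made for illustration.
DEPENDS_ON = {
    "Evaluate": set(),
    "Define first mitigation step": {"Evaluate"},
    "Isolate": {"Evaluate"},
    "Initiate mitigation plan": {"Define first mitigation step", "Isolate"},
    "Alert necessary people": {"Evaluate"},
    "Deploy fixes": {"Initiate mitigation plan"},
    "Follow up with legal": {"Alert necessary people", "Isolate"},
    "Post-mortem": {"Deploy fixes", "Follow up with legal"},
}


def parallel_waves(depends_on):
    """Group steps into waves; steps in the same wave can run in parallel."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything unblocked right now
        waves.append(ready)
        sorter.done(*ready)                 # mark the whole wave finished
    return waves


for number, wave in enumerate(parallel_waves(DEPENDS_ON), start=1):
    print(number, wave)
```

With these assumed dependencies, isolating the system, defining the first mitigation step, and alerting people all land in the same wave, which is exactly why more than one person needs to be reachable.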

Team Foundation Server

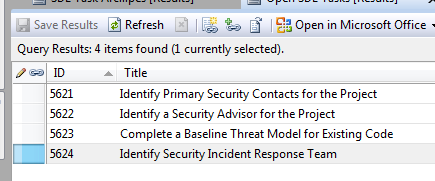

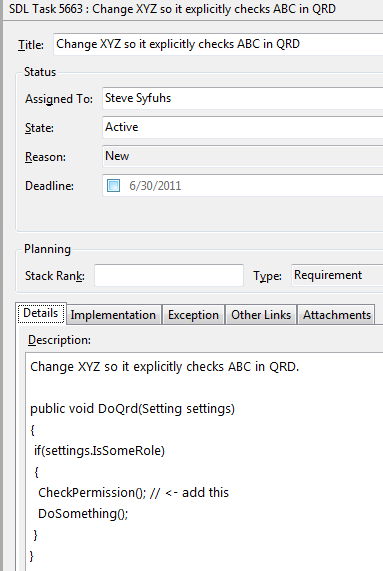

So now that we have a basic plan created, we should make it easy to implement. The SDL Process Template (mentioned above) creates a set of task lists and bug types within TFS projects that are used to define things like security bugs, SDL-specific tasks, exit criteria, etc.

While these can (and should) be used throughout the lifetime of the project, they can also be used to map out the procedures in the IRP. In fact, a new project creates an entry in Open SDL Tasks to create an Incident Response Team:

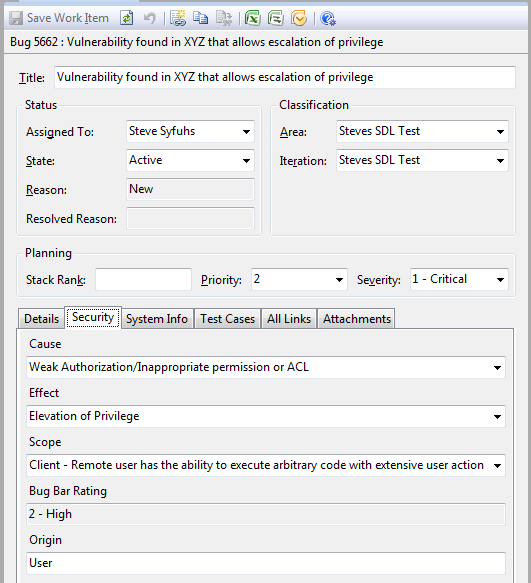

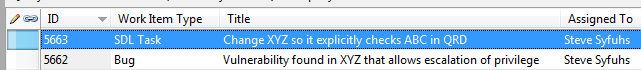

A bug works well to manage incident responses.

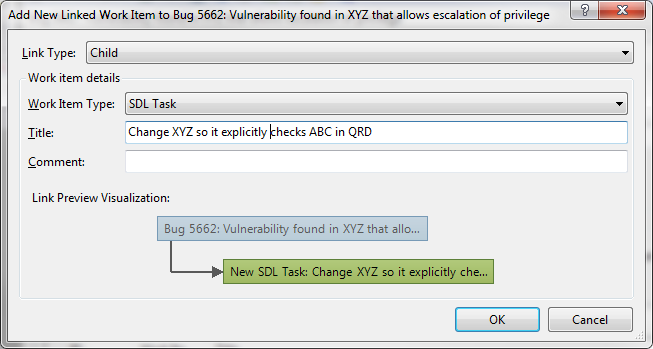

Once a bug is created we can link a new task with the bug.

And then we can assign a user to the task:

Each bug and task is now visible in the Security Exit Criteria query:

Once all the items in the Exit Criteria have been met, you can release the patch.
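The release rule itself is simple enough to sketch in a few lines. This is not the TFS API; it is a hypothetical in-memory model in Python, with made-up `SecurityBug` and `Task` classes and an assumed pair of states ("Active" and "Closed"), meant only to show the logic of gating a patch on its linked work items.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    title: str
    assigned_to: str
    state: str = "Active"  # hypothetical states: "Active" or "Closed"


@dataclass
class SecurityBug:
    title: str
    state: str = "Active"
    tasks: list = field(default_factory=list)  # tasks linked to this bug


def blocking_items(bugs):
    """Return titles of bugs and linked tasks that are not yet closed."""
    blockers = []
    for bug in bugs:
        if bug.state != "Closed":
            blockers.append(bug.title)
        blockers.extend(t.title for t in bug.tasks if t.state != "Closed")
    return blockers


def can_release_patch(bugs):
    """The patch may ship only when nothing is left open."""
    return not blocking_items(bugs)


# Invented example incident with one finished and one outstanding task.
bug = SecurityBug(
    "Incident: injection in search page",
    tasks=[
        Task("Develop the bug fix", "Alice", state="Closed"),
        Task("Test the bug fix", "Bob"),
    ],
)
print(can_release_patch([bug]))  # False
print(blocking_items([bug]))
```

In TFS the query does this bookkeeping for you; the point of the sketch is that the patch waits on every linked item, not just the bug.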

Conclusion

Security is a funny thing. A lot of times you don't think about it until it's too late. Other times you follow the SDL completely, and you still get attacked.

In this conversation (which spanned a few weeks) we looked at writing secure software from a pretty high level. We touched on common vulnerabilities and their mitigations, tools you can use to test for vulnerabilities, some thoughts to apply to architecting the application securely, and finally we looked at how to respond to problems after release. By no means will this conversation automatically make you write secure code, but hopefully you’ve received guidance around understanding what goes into writing secure code. It's a lot of work, and sometimes it's hard work.

Finally, there is an idea I like to put into the first section of every Incident Response Plan I've written, and I think it applies to writing software securely in general:

Something bad just happened. This is not the time to panic, nor the time to place blame. Your goal is to make sure the affected system or application is secured and in working order, and your customers are protected.

Something bad may not have happened yet, and it may not in the future, but it's important to plan accordingly because your goal should be to protect the application, the system, and most importantly, the customer.

About Steve Syfuhs

Steve Syfuhs is a bit of a Renaissance Kid when it comes to technology. Part developer, part IT Pro, part Consultant working for ObjectSharp. Steve spends most of his time in the security stack with special interests in Identity and Federation. He recently received a Microsoft MVP award in Developer Security. You can find his ramblings about security at www.steveonsecurity.com.