Dynamics AX Application Test Strategy

Dynamics AX provides a fully featured testing framework that follows the same pattern as any xUnit test harness. The harness lets developers author unit, component, and scenario-level tests. Additionally, because the development environment is integrated with Visual Studio, developers get productivity features such as better debugging, diagnostics, and code analysis in the IDE.
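For readers unfamiliar with the xUnit pattern, the following is a minimal sketch of its shape: a test class, a per-test fixture setup, and test methods that exercise one behavior and assert on the result. It uses Python's `unittest` module purely as a stand-in for illustration; it is not the Dynamics AX framework or X++, and `SalesLine`, `SalesLineTest`, and `run_suite` are hypothetical names invented for this example.

```python
import unittest


class SalesLine:
    """Hypothetical class under test; not part of Dynamics AX."""

    def __init__(self, quantity, unit_price):
        self.quantity = quantity
        self.unit_price = unit_price

    def line_amount(self):
        return self.quantity * self.unit_price


class SalesLineTest(unittest.TestCase):
    def setUp(self):
        # Fixture setup: the harness calls this before every test method,
        # so each test starts from a fresh, known state.
        self.line = SalesLine(quantity=3, unit_price=10)

    def test_line_amount_multiplies_quantity_by_price(self):
        # Exercise one behavior and verify it with an assertion.
        self.assertEqual(30, self.line.line_amount())

    def test_zero_quantity_gives_zero_amount(self):
        self.line.quantity = 0
        self.assertEqual(0, self.line.line_amount())


def run_suite():
    """Run the test class programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SalesLineTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_suite()` discovers the `test_` methods, runs each one against its own fixture, and reports passes and failures, which is the same discover, set up, execute, and report cycle that an xUnit-style harness provides.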

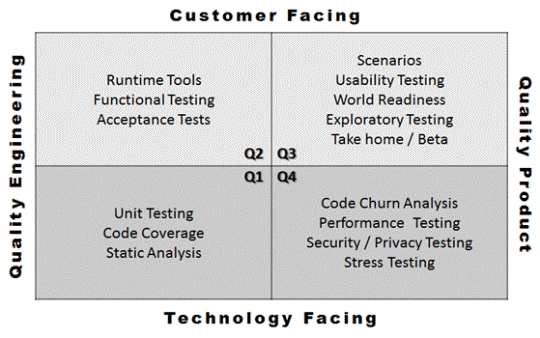

A version of the testing quadrants by Alan Page describes the scope and activities of testing quite well. It is adapted from Brian Marick’s “Agile Quadrants”. [1] [2]

Task recorder-based record and playback tests fall under Q4. These tests are typically scenario-based and customer-facing. Unit and component tests fall under Q1. For Q4, the performance SDK offers load and performance testing capabilities.

Dynamics AX Test Strategy

A good test strategy answers three basic questions [4]:

- Why bother? Testing and test automation take effort and time. To ensure the investment is well spent, testing activities should be driven by specific risks.

- Who cares? Include activities in your tests that serve somebody’s interest. For example, customers who care about a specific feature or scenario.

- How much? It is impossible to test all possible combinations and scenarios. There should be a clear definition of how much effort will be put into testing and test automation.

What is a good test case?

There are many articles about the quality of a test case. In a nutshell, a good test case is [3]:

- Relevant: Uniquely tests what is intended.

- Effective: Proves or disproves the software. Finds bugs! No false positives or negatives.

- Maintainable: Easy for someone else to take over, set up, run, and interpret the results.

- Efficient: Returns definitive results quickly with efficient use of resources.

- Manageable: Supports test deployment, configuration, organization, and test business metadata (owner, priority, feature association, etc.).

- Portable: Runs anywhere with appropriate adaptation to the current environment.

- Reliable: Software reliability plus repeatable results. The same input and environment give the same test results.

- Diagnosable: Low Mean Time to Root Cause (MTTRC). Starting with a good name, the test accurately distinguishes product, test, and infrastructure failures.
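A few of these qualities can be made concrete with a small sketch. The example below uses Python's `unittest` as a neutral illustration (it is not Dynamics AX code), and `is_overdue` and `InvoiceOverdueTest` are hypothetical names. It aims to show a relevant test (one behavior per method), a reliable test (the current date is passed in rather than read from the system clock), and a diagnosable test (a descriptive name plus a failure message).

```python
import unittest
from datetime import date


def is_overdue(due_date, today):
    """Hypothetical function under test: overdue means strictly after the due date."""
    return today > due_date


class InvoiceOverdueTest(unittest.TestCase):
    # Reliable: 'today' is a fixed value supplied by the test, so the result
    # does not depend on when or where the test runs.

    def test_invoice_is_not_overdue_on_its_due_date(self):
        # Relevant: checks exactly one boundary condition.
        due = date(2024, 1, 31)
        self.assertFalse(
            is_overdue(due, today=date(2024, 1, 31)),
            # Diagnosable: the message states the violated expectation.
            "an invoice must not be overdue on the due date itself",
        )

    def test_invoice_is_overdue_one_day_after_due_date(self):
        due = date(2024, 1, 31)
        self.assertTrue(
            is_overdue(due, today=date(2024, 2, 1)),
            "an invoice must be overdue the day after its due date",
        )
```

Passing the date in as a parameter is one common way to get repeatable results; other approaches, such as substituting a fake clock, serve the same goal.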

Resources

For some excellent advice on testing and test strategy, refer to the blog post “Achieving balance in testing software”.

References

[1] Agile Testing Directions; https://www.exampler.com/old-blog/2003/08/21/#agile-testing-project-1

[2] Riffing on the Quadrants; https://angryweasel.com/blog/riffing-on-the-quadrants/?utm_source=feedburner&utm_medium=feed&utm_campaign=Feed%3A+ToothOfTheWeasel+%28Tooth+of+the+Weasel%29

[3] Improving Test Quality; David Catlett, Mind Shift, 2011 Engineering Forum

[4] Kaner, C., Bach, J., & Pettichord, B. (2001). Lessons Learned in Software Testing (1st ed.). New York, NY, USA: John Wiley & Sons.