Hello @KingJava,

Thanks for the question and for using the MS Q&A platform.

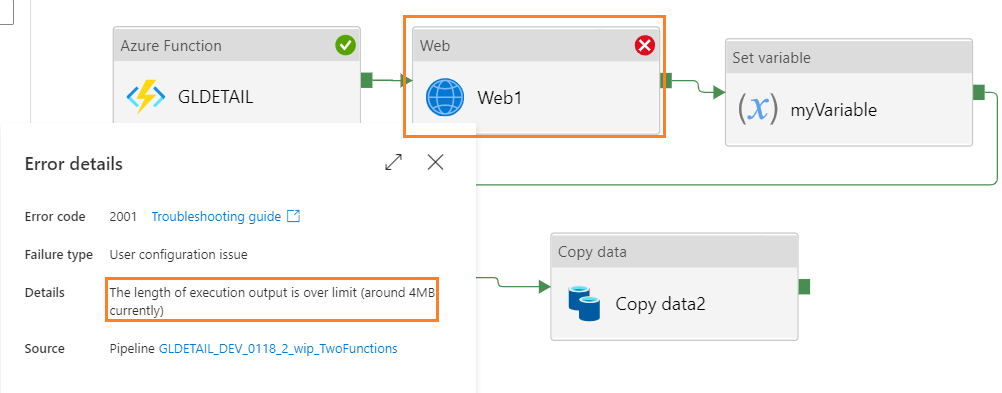

As we understand it, the ask here is whether there is a way to exceed the 4MB response limit for the Web activity in ADF. Please let me know if that's not accurate.

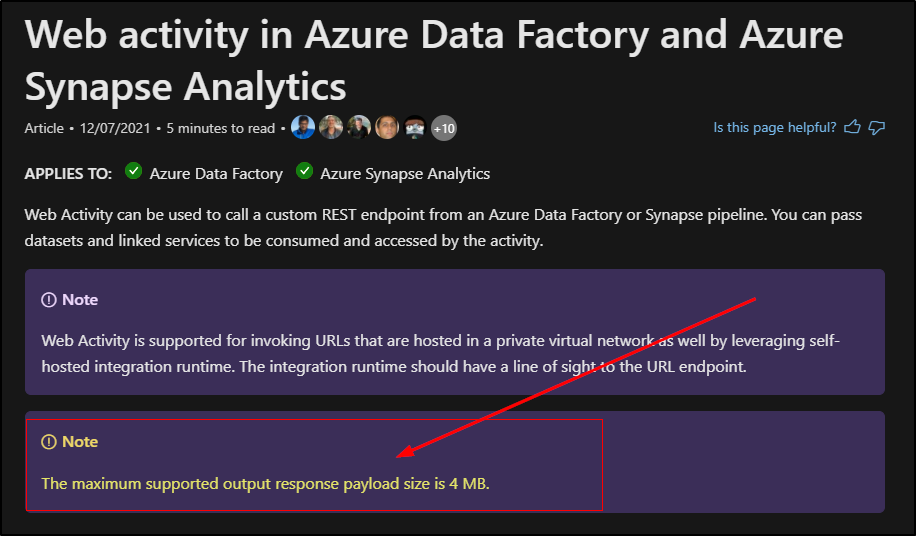

Unfortunately, this is a hard limit on the Web activity: the response size must be less than or equal to 4MB. This is called out in the public documentation.

Azure Resource Manager also has limits for API calls: you can make API calls only at a rate within the Azure Resource Manager API limits. Similarly, the maximum size of the API schema that can be used by a validation policy is 4 MB; if the schema exceeds this limit, validation policies return errors at runtime. For more info, please refer to this doc: API Management policies to validate requests and responses

In the past I had a conversation with the product team about this, and they confirmed that it is a hard limit and that there is no roadmap to increase this capacity limit in the near future.

As a workaround, you may try using a ForEach activity. You may need to use a paging query against your REST API/endpoint so that it returns a limited amount of data each time, and then query your data in a loop until the number of returned records is lower than a threshold value. Please refer to this source: Web activity throws overlimit error when calling rest api
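The paging loop described above can be sketched in Python as follows. This is a minimal, hypothetical illustration of the logic only, not ADF pipeline code: `fetch_page`, `page_size`, and the offset/limit paging scheme are assumptions (your REST API may use page numbers or continuation tokens instead), and the size check assumes "4MB" means 4 × 1024 × 1024 bytes of UTF-8 JSON. In a pipeline, each `fetch_page` call would correspond to one Web activity call whose response stays under the limit.

```python
import json
from typing import Any, Callable, Dict, List

# Assumption: "4MB" interpreted as 4 MiB of serialized UTF-8 JSON.
MAX_RESPONSE_BYTES = 4 * 1024 * 1024

# Hypothetical page fetcher: takes (offset, limit) and returns a list of records.
FetchPage = Callable[[int, int], List[Dict[str, Any]]]


def fetch_all(fetch_page: FetchPage, page_size: int = 1000) -> List[Dict[str, Any]]:
    """Request pages until one returns fewer than page_size records."""
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    results: List[Dict[str, Any]] = []
    offset = 0
    while True:
        page = fetch_page(offset, page_size)
        # Fail loudly if a single page would not fit in one response.
        page_bytes = len(json.dumps(page).encode("utf-8"))
        if page_bytes > MAX_RESPONSE_BYTES:
            raise ValueError(f"page at offset {offset} is {page_bytes} bytes; lower page_size")
        results.extend(page)
        # A short (or empty) page means there is no more data to read.
        if len(page) < page_size:
            break
        offset += len(page)
    return results


if __name__ == "__main__":
    data = [{"id": i} for i in range(2500)]

    def fake_fetch(offset: int, limit: int) -> List[Dict[str, Any]]:
        # Stand-in for a paged REST endpoint.
        return data[offset:offset + limit]

    rows = fetch_all(fake_fetch, page_size=1000)
    print(len(rows))  # 2500
```

With 2,500 records and a page size of 1,000, the loop makes three calls (1,000, 1,000, then 500 records) and stops on the short page. If the total is an exact multiple of the page size, one extra call returns an empty page and ends the loop.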

Here is existing feedback on the Web activity response size limitation submitted by a user. Please feel free to upvote and comment on it, as that helps increase the priority of the feature request. Feedback link: Azure Data Factory - Web Activity - maximize supported output response payload

Hope this info helps. Please let us know if you have any further queries.

- Please don't forget to click on Accept Answer or the upvote button whenever the information provided helps you. Original posters help the community find answers faster by identifying the correct answer. Here is how
- Want a reminder to come back and check responses? Here is how to subscribe to a notification

- If you are interested in joining the VM program and helping shape the future of Q&A: Here is how you can be part of Q&A Volunteer Moderators